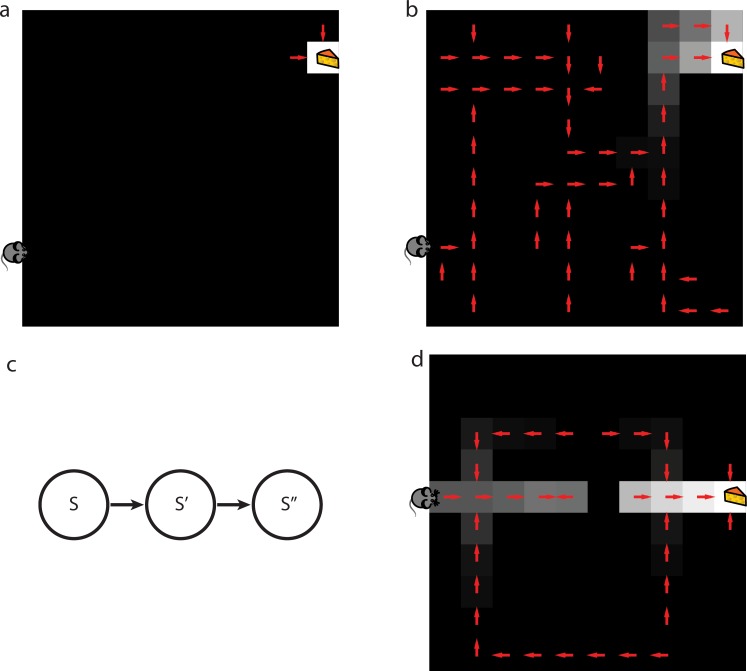

Fig 4. Behavior of SR-TD.

a) One step of model-based lookahead combined with TD learning applied to punctate representations cannot solve the latent learning task. Median value function (grayscale) and implied policy (arrows) are shown immediately after the agent learns about the reward in the latent learning task. b) SR-TD can solve the latent learning task. Median value function (grayscale) and implied policy (arrows) are shown immediately after the agent learns about the reward in the latent learning task. c) SR-TD can update predicted future state occupancies only following direct experience with states and their multi-step successors. For instance, if SR-TD were to learn that state s″ no longer follows state s′, it would not be able to infer that state s″ no longer follows state s. Whether animals make this sort of inference is tested in the detour task. d) SR-TD cannot solve detour problems. Median value function (grayscale) and implied policy (arrows) are shown after SR-TD encounters the barrier in the detour task. SR-TD fails to update its decision policy to reflect the new shortest path.
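The limitation described in panel c can be illustrated with a minimal sketch. It is not the simulation code behind the figure. It assumes a tabular successor matrix `M`, a textbook TD update on its rows, a fixed policy with deterministic transitions, and hypothetical values for the learning rate `alpha`, the discount `gamma`, and a four-state layout (`S`, `S1`, `S2`, `ALT`) chosen purely for illustration.

```python
import numpy as np

def sr_td_update(M, s, s_next, alpha=0.1, gamma=0.9):
    """One TD update of row s of the successor matrix M (in place).

    s_next is None when s is terminal. alpha and gamma are
    illustrative assumptions, not values from the source.
    """
    onehot = np.zeros(M.shape[1])
    onehot[s] = 1.0
    bootstrap = gamma * M[s_next] if s_next is not None else 0.0
    M[s] += alpha * (onehot + bootstrap - M[s])
    return M

# Hypothetical state indices: s, s', s'' and an alternative successor.
S, S1, S2, ALT = 0, 1, 2, 3
M = np.eye(4)

# Phase 1: the agent repeatedly experiences s -> s' -> s'' (s'' terminal).
for _ in range(500):
    sr_td_update(M, S, S1)
    sr_td_update(M, S1, S2)
    sr_td_update(M, S2, None)
before = M[S, S2]          # predicted discounted occupancy of s'' from s

# Phase 2: the agent is placed at s' and finds it now leads to ALT.
# State s is never revisited, so row S of M is never updated.
for _ in range(500):
    sr_td_update(M, S1, ALT)
    sr_td_update(M, ALT, None)
after_s1 = M[S1, S2]       # row for s' has been relearned
after_s = M[S, S2]         # row for s still predicts s''

# Values are the product of M and a reward vector (reward at s'' only).
R = np.array([0.0, 0.0, 1.0, 0.0])
V = M @ R
```

In this sketch the row for s′ adapts to the new transition, while the row for s, and hence its value `V[S]`, keeps predicting s″ until the agent experiences transitions starting from s again.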