Version Changes

Revised. Amendments from Version 1

Thanks to the reviewers for the thorough review and many thoughtful comments. I made two major revisions:

I added a few sentences to Results section 3.1 explaining why a lognormal distribution can be considered the best fit to the data. I also added a sentence on how differences in bursting and irregularity do not affect the spike rate distribution (Mochizuki et al., 2016).

Considerably more material on Hebbian learning is now included in the Introduction (last but one paragraph), with many new references, including Peterson and Berg’s 2016 paper.

I decided to leave the Methods section intact; the data analysis is tedious, and it disrupts the flow of an essentially simple argument. Therefore, I leave it in the Methods section available for those who want to dig deeper. I also made a few minor word changes in the Abstract and Introduction, and improved the colouring in Figure 10 for better visibility.

Abstract

In this paper, we present data for the lognormal distributions of spike rates, synaptic weights and intrinsic excitability (gain) for neurons in various brain areas, such as auditory or visual cortex, hippocampus, cerebellum, striatum, midbrain nuclei. We find a remarkable consistency of heavy-tailed, specifically lognormal, distributions for rates, weights and gains in all brain areas examined. The difference between strongly recurrent and feed-forward connectivity (cortex vs. striatum and cerebellum), neurotransmitter (GABA (striatum) or glutamate (cortex)) or the level of activation (low in cortex, high in Purkinje cells and midbrain nuclei) turns out to be irrelevant for this feature. Logarithmic scale distribution of weights and gains appears to be a general, functional property in all cases analyzed. We then created a generic neural model to investigate adaptive learning rules that create and maintain lognormal distributions. We conclusively demonstrate that not only weights, but also intrinsic gains, need to have strong Hebbian learning in order to produce and maintain the experimentally attested distributions. This provides a solution to the long-standing question about the type of plasticity exhibited by intrinsic excitability.

Keywords: neural coding, synaptic weights, Hebbian learning, intrinsic excitability, rate coding, spike frequency, neural circuits, neural networks, lognormal distributions.

1 Introduction

Individual neurons have very different, but mostly stable, mean spike rates under a variety of conditions 1, 2. To report on behavioral results, spike counts are often normalized with respect to the mean for each neuron. But this obscures an important question: Why do neurons within a tissue operate at radically different levels of output frequency? In order to answer this question our approach is twofold: (a) we try to document this phenomenon for different neural tissues and behavioral conditions. We also examine neural properties for their distribution, namely intrinsic gains and synaptic weights; (b) we build a very generic neural model to explore the conditions for generating and maintaining these distributions. First, we give examples for the distribution of mean spike rates for principal neurons under spontaneous conditions, as well as in response to stimuli. We furthermore document distributions for intrinsic excitability 3– 5 for cortical and striatal neurons, as well as synaptic weight distributions 6– 11.

With the current data, we show that the distribution of spike rates within any neural tissue follows a power-law distribution, i.e. a distribution with a ‘heavy tail’. There is also a small number of very low-frequency neurons, so that we have a lognormal distribution 2. This lognormal distribution is present in spontaneous spike rates as well as under behavioral stimulation. For each neuron, the deviation from the mean rate attributable to a stimulus is small (CV = 0.3–1, standard deviation = 1–4 spikes/s), when compared to the variability in mean spike rate over the whole population (5-7-fold), cf. Table 1 and Table 3.

Table 1. Statistics of spike rate distributions in different tissues.

| Tissue | Mean μ | Median μ* | Variance σ² | Width σ* | Mode e^(μ−σ²) | n |
|---|---|---|---|---|---|---|
| IT cortex 39 | 1.5 | 4.50 | 0.71 | 2.32 | 2.2 | 100 |
| A1 cortex 2 | 1.6 | 4.95 | 0.47 | 1.98 | 3.1 | 145 |
| A1 cortex 40 | 3.3 | 27.11 | 0.69 | 2.29 | 13.6 | 263 |
| Purkinje in vitro 42 | 3.46 | 31.82 | 0.14 | 1.46 | 27.6 | 106 |
| Purkinje in vitro 41 | 3.44 | 31.19 | 0.198 | 1.56 | 30.0 | 34 |
| Purkinje in vivo 43 | 3.5 | 33.12 | 0.47 | 1.98 | 20.7 | 319 |
| Inferior colliculus 45 | 3.31 | 27.39 | 0.90 | 2.58 | 11.13 | 30 |

This work refers back to data initially reported in 1. At the time, we only had data on spike rates of cortical neurons available, plus independent evidence on intrinsic properties of striatal neurons. The observation on cortical data was taken up by 2, 12, and led to a number of papers 13, 14 focusing on the power-law distribution of spike rates as a cortical phenomenon, seeking explanations in the recurrent excitatory connectivity of cortical tissue 2, 13, 15, 16. However, we find the same spike rate distributions for midbrain nuclei, medium striatal neurons and cerebellar Purkinje cells, which do not have this kind of connectivity. It has even been found in the spinal motor networks of turtles 17. We then extended the data search for intrinsic excitability and found that lognormal distributions are ubiquitous there as well, at least in cortical as well as in striatal tissues. Finally, lognormal distributions have also been found for synaptic weights 6– 11, 18. The explanation for this universal phenomenon must lie elsewhere.

For this purpose we constructed a generic model for neuronal populations with adaptable weights and gains. We initialized both weights and gains with uniform, Gaussian or lognormal distributions. We then employed either Hebbian or homeostatic adaptation rules on both. Under a variety of conditions we could show that lognormal distributions develop from any initial distribution only with Hebbian (positive) adaptivity. Additional homeostatic adaptation stabilized learning but erased the lognormal distributions if it was stronger than Hebbian adaptation. We could even show that the widths of the distributions from the model match with the experimental data for rates, weights, and gains ( Table 1 and Table 3) that we have available. Lognormal distributions can only be maintained by positive, Hebbian-type learning rules 15, while homeostatic plasticity alone destroys lognormal distributions 19. There are a number of different learning rules and variants which all follow the ’Hebbian’ principle: strong activation leads to strengthening, weak activation leads to weakening 20. STDP rules are a variant of Hebbian learning for spiking neurons, which emphasize temporal sequence, but have the same positive learning effect 21– 23. It has been noticed that positive learning rules lead to run-away activation and unstable network behavior, and that they need to be counteracted by homeostatic processes 24, 25. We present a generalized model of synaptic learning which consists of both Hebbian (positive) and homeostatic (negative) adaptation rules 24, and show that positive (Hebbian) learning is necessary to establish a lognormal synaptic weight distribution.

For intrinsic learning it has often been assumed that it may implement purely homeostatic adaptation 26– 30, but see also 31. Experimental results are often inconsistent 4, 32– 38. We will present results for a lognormal gain distribution in a number of tissues. It will be shown by simulation that the same principle holds: only a Hebbian, positive learning rule is capable of maintaining lognormal distributions, while homeostatic adaptation serves to establish stability. This finally answers the question that experimental researchers have investigated for some time: Is intrinsic plasticity mostly homeostatic, i.e. adjusts values inversely to use, or is there Hebbian, positive learning involved: when a neuron fires, does its gain increase? The answer is that the attested distribution of intrinsic gain can only derive from a Hebbian style adjustment rule, even though additional homeostatic adaptation is possible. Intrinsic plasticity is Hebbian.

2 Methods

In this section we first report on data collection for spike rates, intrinsic properties and synaptic weights. We then explain the simulation model we constructed to explore the generation and persistence of the attested distributions.

2.1 Experimental data

We analyze five data sets for spike rates from principal neurons under behavioral activation:

1. inferior temporal (IT) cortex from monkeys 39
2. primary auditory cortex (A1) from monkeys 40
3. primary auditory cortex (A1) of rats 2
4. cerebellar Purkinje cells 41–44 (cf. Figure 4)
5. midbrain principal cells from inferior colliculus (IC) from the guinea pig 45

In monkey IT, single unit activity was recorded over 200ms for passive viewing of 77 different natural stimuli for 100 neurons, each stimulus shown 10 times 39. This yielded 770 spike rate response data points per neuron. For these data, we show mean spike rate, standard deviation, max-min values, coefficient of variation (CV) and Fano factor (FF) ( Figure 1), (cf. 1). What is remarkable is that the dispersion for each neuron (variance related to mean, FF) is fairly constant, and not related to the rank of a neuron as high or low-frequency. In other words, neurons have roughly similar behavioral responses relative to their average spike rate. For this reason, many behavioral experiments have reported percentage of increase/decrease of spiking as the relevant parameter.
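The per-neuron dispersion measures named above can be illustrated with a minimal Python sketch. It assumes the usual definitions (CV = standard deviation / mean, Fano factor = variance / mean) and uses the population variance across trials; the function name `dispersion` and the example counts are hypothetical and are not taken from the original analysis.

```python
import math
import statistics


def dispersion(spike_counts):
    """Return (mean, sd, CV, Fano factor) for one neuron's spike counts.

    Assumption: population variance over trials; the paper does not state
    whether population or sample variance was used.
    """
    mean = statistics.fmean(spike_counts)
    var = float(statistics.pvariance(spike_counts))
    sd = math.sqrt(var)
    cv = sd / mean      # coefficient of variation
    fano = var / mean   # Fano factor
    return mean, sd, cv, fano


# Hypothetical spike counts for a single neuron over eight trials.
mean, sd, cv, fano = dispersion([2, 4, 4, 4, 5, 5, 7, 9])
```

Applied to every neuron and sorted by mean, such values would give curves of the kind summarized in Figure 1A and C.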

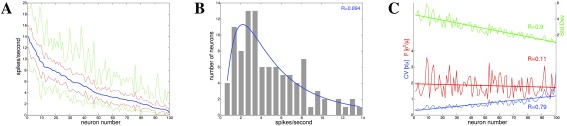

Figure 1. Spike rate data for neurons from inferotemporal cortex (IT) in monkeys 39.

100 neurons, passive viewing of 77 stimuli, 10 trials (770 data points per neuron), data collected over 200ms. The data are shown for each neuron, where neurons are sorted by mean spike count. A: Mean spike rates (blue), standard deviation (red), and minimum/maximum absolute values (green). B: Mean spike rates histogram shows a lognormal distribution ( σ*=2.32). C: Distributions of standard deviation (green) and CV (blue) have linear slopes, with small variation. The Fano factor (red), measuring the dispersion for each neuron, is nearly constant at about 2.

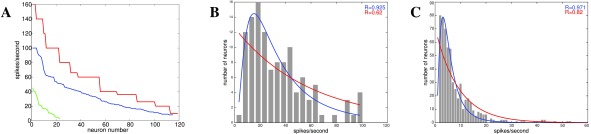

Additionally, we show data from primary auditory cortex (A1) from awake monkeys, which were recorded for spike responses to a 50ms, 100ms, or 200ms pure tone ( 40, Figure 2A and B) and data for spike rates from the primary auditory cortex of rats for four different conditions, which were recorded as cell-attached in vivo recordings ( 2, Figure 2C).

Figure 2. Responses to pure tones in primary auditory cortex from awake monkeys 40 and firing rates from primary auditory cortex in rats 2.

A: Distribution of spike rates to a 50ms tone ( n = 119, red), 100ms tone ( n = 115, blue), or 200ms tone ( n = 23, green) in primary auditory cortex in monkeys 40. B: Histogram for spike rate distribution for the 100ms tone response ( n = 115) fitted by an exponential (red) or lognormal (blue) distribution 40. C: Spontaneous spike rate distribution from primary auditory cortex in unanesthetized rats 2 fitted by an exponential (red) or lognormal (blue) distribution. Note that the spontaneous firing rates are much lower and more narrowly distributed than evoked spikes in response to stimuli at short time scales ( B), but that they still follow a lognormal distribution.

For midbrain nuclei neurons (IC), we re-analyzed spike rates in response to tones (for 200ms after stimulus onset) under variations of binaural correlation 45. The ranking of neurons by mean spike rate, together with standard deviation, min-max values, CV and FF, is shown in Figure 3A–C. CV and FF are similar to the cortical data. Data from GABAergic cerebellar Purkinje cells pose some difficulty for this analysis, since these cells fire regular single spikes at high frequencies and, in addition, calcium-based complex spikes 43. Complex spike rates, however, are low ( < 1 Hz). This can therefore be regarded as a form of multiplexing with two separate codes, so that single spike rates can be assessed separately in their distribution. Here we report data for single spikes from in vivo recordings in anesthetized rats 43 ( Figure 4A) and data from spontaneous spiking (in the absence of synaptic stimulation) under in vitro conditions ( 41, 42, Figure 4B and C).

Figure 3. Neuronal response to binaural stimulation for inferior colliculus of the guinea pig 45 ( n = 30), data collected over 100 ms, 200–500 trials.

The data are shown for each neuron, with neurons sorted by mean spike count. A: Mean spike rates (blue), standard deviation (red), and minimum/maximum absolute values (green). B: Mean spike rates histogram shows a lognormal distribution. C: Distributions of standard deviation (green), CV (blue) and FF (red). Again, the dispersion is fairly constant.

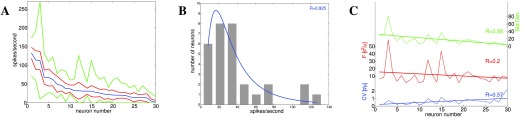

Figure 4. Spike rates for cerebellar Purkinje cells from rats 41– 44.

A: Data for single spikes for Purkinje cells recorded from anesthetized rats (spontaneous in vivo) 43 ( n = 346). B: Data for spike frequencies of isolated cell bodies of mouse Purkinje cells in vitro 41 ( n = 34). C: Spontaneous spike rates for Purkinje cells in slices ( n = 106) 42. D: Spike counts per neuron from 41 ( n = 34), together with variability data from 44 ( n = 2).

In order to show values for standard deviation and variance, data for two Purkinje cells from a behavioral experiment, 44, i.e. single spike rates during arm movements from monkeys, have been added to the ranking of spontaneously firing neurons by mean spike rate from 41 ( Figure 4D).

The logarithmic (heavy-tailed) distribution of spike rates is evident under all conditions.

The distribution of spike rates for neurons spiking in the absence of synaptic input shows that there are differences in the intrinsic excitability of neurons. To further explore this we looked at three additional datasets, which report the action potential firing of a cell due to injected current, such as by a constant pulse. This can be defined as the neuronal gain parameter (spike rate divided by current, [Hz/nA]).

1. medium striatal neurons in slices from rat dorsal striatum and nucleus accumbens shell (NAcb shell) 3, cf. 1, Figure 5
2. cortical neurons in cat area 17 in vivo 46, Figure 6A and B
3. striatal neurons from globus pallidus (GP) from awake rats 5, Figure 6C

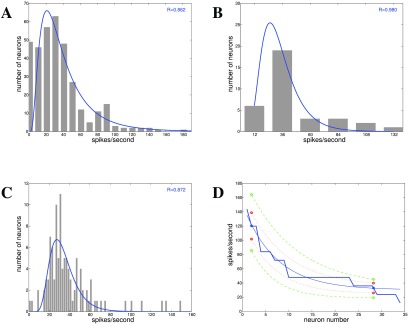

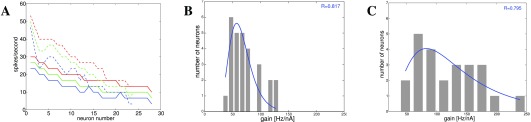

Figure 5. Spike rate and gain distributions in basal ganglia 3.

A: Spike rate in response to a 300ms constant current pulse at 180pA (blue), 200pA (green), and 220pA (red) for neurons in dorsal striatum ( n = 28, solid lines) and nucleus accumbens shell ( n = 24, dashed lines). B: Gain (Hz/nA) for neurons in dorsal striatum ( n = 28). C: Gain (Hz/nA) for neurons in NAcb shell ( n = 24).

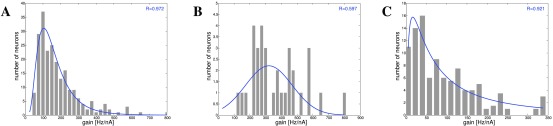

Figure 6. Gain [Hz/nA] for cortical and striatal neurons.

A: Gain for all types of cortical neurons in vivo ( n = 220) 46. B: Gain for fast spiking cortical neurons only ( n = 33) 46. C: Gain for neurons in globus pallidus (GP) in response to a +100pA current pulse ( n = 145) 5.

In 1, we already presented the data from striatum, which show that the spike response to a constant current follows a heavy-tailed distribution 3. Figure 5A shows the spike rate in response to current pulses of different magnitude in two different areas, nucleus accumbens (NAcb) shell and dorsal striatum. Figure 5B and C show the distribution of rheobase (current-to-threshold) for dorsal striatal and NAcb shell neurons. Distributions appear mostly lognormal, with the exception of the 200pA current pulse response and the data in Figure 5C, which appear normally distributed.

We extend this dataset by recordings from different types of cortical neurons in cat area 17 in vivo ( 46, Figure 6A and B) and from GP in awake rats ( 5, Figure 6C).

A lognormal distribution of intrinsic gain is clearly apparent, except for fast-spiking interneurons; with only n = 33 cells, however, this exception may reflect sampling error.

Synaptic weight distributions have been investigated starting with 10 in hippocampus by measuring EPSC magnitude 6, 47– 50 ( Figure 7). There is also a review paper available 51 to summarize the findings. Recently, the expression of AMPA receptor subunit GluA1, which is correlated with spine size, has also been measured 52, Figure 8. We used five datasets from cortex, hippocampus and cerebellum:

1. EPSPs for deep-layer pyramidal-pyramidal cell connections in rat visual cortex 6, 47
2. EPSP amplitudes for deep-layer excitatory neuron connections in somatosensory cortical slices of juvenile rats 49
3. EPSP amplitudes for CA1 to CA3 connections in guinea pig hippocampal slices 10
4. EPSCs for granule cells to Purkinje cells in adult rat cerebellar slices 50
5. labeled GluA1 AMPA receptor subunit in mouse somatosensory barrel cortex 52

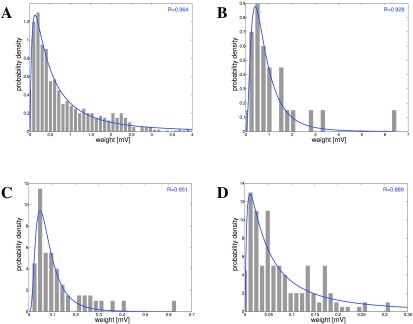

Figure 7. Strengths of EPSPs in cortex, hippocampus and cerebellum 6, 10, 47, 49, 50.

A: Cortex: Deep-layer (L5) pyramidal-pyramidal cell connections 6, 47. B: Cortex: Deep-layer (L5) pyramidal-pyramidal cell connections 49. C: Hippocampus: CA1 to CA3 connections 10. D: Cerebellum: Granule cells to Purkinje cells 50.

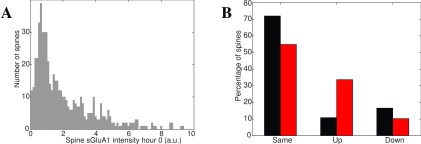

Figure 8. AMPA subunit distribution as a marker of synaptic weight.

A: Expression of labeled GluA1 AMPA receptor subunit in layer 2/3 mouse barrel cortex in vivo follows a lognormal distribution ( σ* = 2.59, µ* = 0.32, n = 560). B: GluA1 density for control (black) and after 1 hour whisker stimulation (red). Stimulation leads to an increase of GluA1 in ≈ 30% of neurons 52.

In 6, EPSP magnitude was measured for L5 pyramidal neurons in slices from rat visual cortex, averaged over 45–60 responses, and the peak amplitude was recorded ( Figure 7A). Similar data were used in 49 for slices from a single barrel column in rats ( Figure 7B). A 30-fold variation of coupling strength was noted. In 10, EPSPs between CA3 and CA1 in hippocampal slices were recorded by detecting somatic membrane potential changes in response to presynaptic neuron stimulation ( Figure 7C). There are also synaptic weight data on granule cell to Purkinje cell connections 11, 50, 51, which show a similar distribution, but these connections are an order of magnitude weaker than cortical connections ( Figure 7D). Finally, a different type of evidence was obtained in 52, namely labeling for a subunit of AMPA receptors in layer 2/3 mouse barrel cortex in vivo both before and after whisker stimulation. The AMPA intensity is distributed lognormally over the spines, corresponding to the observations on the strengths of EPSPs. It is noticeable that stimulation leads to an increase of, on average, 200% (two-fold) in about 30% of spines 52. Yet, as we know, the overall distribution of synaptic strengths remains stable over time. For synaptic weights, just as for intrinsic gains and spike rates, lognormal distributions have been found for both EPSPs and AMPA receptor distribution in a highly consistent manner.

In many cases, the data were only available in the form of histograms. The parameters of the lognormal distribution were then obtained by fitting the data using a Nelder-Mead optimization method. A number of parameters were derived from these fits and reproduced in Table 1– Table 3, cf. 3.2.
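As an illustration of this fitting step, the following Python sketch fits the two lognormal parameters to histogram densities with a small hand-written Nelder-Mead simplex search. Several choices here are assumptions and not taken from the paper: the least-squares objective on bin densities, the starting point, the bin layout, and the synthetic histogram generated from known parameters. The function names are hypothetical. In practice a library routine such as SciPy's `minimize` with `method="Nelder-Mead"` could take the place of the hand-written search.

```python
import math


def lognormal_pdf(x, mu, sigma):
    """Lognormal density with log-mean mu and log-standard-deviation sigma."""
    z = (math.log(x) - mu) / sigma
    return math.exp(-0.5 * z * z) / (x * sigma * math.sqrt(2.0 * math.pi))


def nelder_mead(f, x0, step=0.5, iters=400):
    """Minimal Nelder-Mead search: reflection, expansion, contraction, shrink."""
    n = len(x0)
    simplex = [list(x0)] + [
        [x0[j] + (step if j == i else 0.0) for j in range(n)] for i in range(n)
    ]
    for _ in range(iters):
        simplex.sort(key=f)
        best, second_worst, worst = simplex[0], simplex[-2], simplex[-1]
        # Centroid of all points except the worst one.
        centroid = [sum(p[j] for p in simplex[:-1]) / n for j in range(n)]
        reflected = [c + (c - w) for c, w in zip(centroid, worst)]
        if f(reflected) < f(best):
            expanded = [c + 2.0 * (c - w) for c, w in zip(centroid, worst)]
            simplex[-1] = expanded if f(expanded) < f(reflected) else reflected
        elif f(reflected) < f(second_worst):
            simplex[-1] = reflected
        else:
            contracted = [c + 0.5 * (w - c) for c, w in zip(centroid, worst)]
            if f(contracted) < f(worst):
                simplex[-1] = contracted
            else:
                # Shrink every point halfway towards the best point.
                simplex = [best] + [
                    [b + 0.5 * (p - b) for b, p in zip(best, pt)]
                    for pt in simplex[1:]
                ]
    return min(simplex, key=f)


def fit_lognormal(centers, densities, start=(1.0, 1.0)):
    """Least-squares fit of (mu, sigma) to histogram densities at bin centers."""
    def sse(params):
        mu, sigma = params
        if sigma <= 0.0:
            return float("inf")  # keep the search inside the valid region
        return sum(
            (lognormal_pdf(x, mu, sigma) - d) ** 2
            for x, d in zip(centers, densities)
        )
    mu, sigma = nelder_mead(sse, list(start))
    return mu, sigma


# Synthetic histogram with known parameters (illustrative values only).
centers = [0.5 + k for k in range(60)]
densities = [lognormal_pdf(x, 1.6, 0.69) for x in centers]
mu_fit, sigma_fit = fit_lognormal(centers, densities)
```

From the fitted values, the median e^µ and the width e^σ follow directly.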

Table 2. Statistics of intrinsic excitability (gain) in different tissues.

| Tissue | Peak [Hz/nA] | Mean µ [Hz/nA] | Median µ* | Variance σ² | Width σ* | Mode e^(µ−σ²) | n |
|---|---|---|---|---|---|---|---|
| Dorsal striatum 3 | 48 | 4.24 | 69.41 | 0.36 | 1.82 | 48.3 | 28 |
| NAcb shell 3 | 65 | 4.67 | 106.70 | 0.49 | 2.01 | 65.4 | 24 |
| GP in vivo 5 | 6.6 | 3.4 | 29.96 | 1.54 | 3.46 | 6.4 | 146 |
| GP model 5 | 37 | 4.0 | 54.60 | 0.40 | 1.88 | 36.6 | 10000 |
| Cortical 46 | 105 | 4.96 | 142.59 | 0.31 | 1.75 | 104.6 | 220 |

Table 3. Statistics of synaptic weight distributions in different tissues.

| Tissue | Mean µ | Median µ* | Variance σ² | Width σ* | Mode e^(µ−σ²) | n |
|---|---|---|---|---|---|---|
| Cortex L23 7 | -0.99 | 0.37 | 0.76 | 2.39 | 0.17 | 48 |
| Cortex L23 8 | 0.25 | 1.28 | 1.41 | 3.28 | 0.31 | 35 |
| Cortex L23 9 | -0.94 | 0.39 | 1.54 | 3.46 | 0.08 | 61 |
| Cortex L5 47 | -0.56 | 0.57 | 1.47 | 3.36 | 0.13 | 1004 |
| Cortex L5 49 | -0.31 | 0.73 | 0.58 | 2.14 | 0.41 | 26 |
| Hippocampus 10 | -2.61 | 0.07 | 0.43 | 1.93 | 0.05 | 71 |
| Cerebellum GC-PC 11 | -2.70 | 0.07 | 1.82 | 3.85 | 0.01 | 104 |
| Cortex in vivo 52 | -1.14 | 0.32 | 0.90 | 2.59 | 0.13 | 560 |

2.2 Simulation model

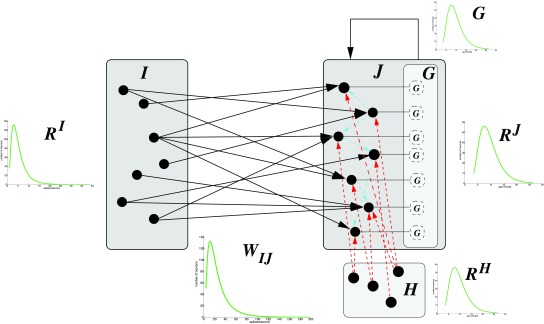

Given are two neuron populations I, J, each with n = 1000 neurons, and variable random connectivity C between I and J. C determines the connection density between I and J. The input population I always has excitatory output onto J. Inhibitory input to J is modeled by a population H with n = 200. The output neuron population J may also have recurrent excitatory connectivity. Figure 9 shows the architecture for the generic neural network used. The model (GNN) was programmed in Matlab, and is available in a public repository on GitHub ( https://github.com/gscheler/GNN, DOI: https://doi.org/10.5281/zenodo.829949).

Figure 9. Generic neural network model with neuron populations I, J (excitatory, blue arrows) and H (inhibitory, red arrows).

Lognormal distributions occur for gain G, rates R I, R J, R H, and weight distributions W IJ. J may have recurrent connectivity.

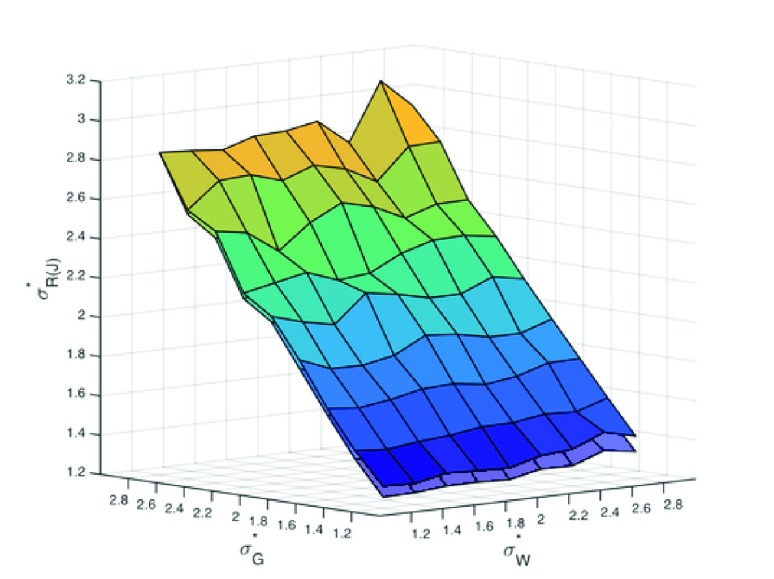

Figure 10. The width of the rate distribution for J depends heavily on the gain but not on the weight distribution.

There is a slight effect of connectivity (upper sheet C=5%, lower sheet C=10%).

The input population I is modeled according to 2 for pyramidal cortical neurons with a spike rate distribution of µ* = 4.95 and σ* = 1.98 ( Table 1). The goal is to generate a spike rate distribution R J for J, given a gain distribution G for the target neurons, and the weight distribution W IJ such that R J is similar to R I.

For each neuron j in J the spike rate r j is calculated by applying its gain g j to the weighted sum of its connected excitatory inputs and its inhibition. C j is the set of neurons from I that have excitatory connections to neuron j.

where the rate r i is taken from the distribution R I, the inhibitory input from R H, w ij from W IJ, and g j from G. g j is modeled as a factor for a linear gain function. It is possible to use a sigmoidal gain function instead, but this makes no difference for the conclusions from the model (Section 3.3). The output R J may be used as input to I with a matrix W I,J for tests of the adaptation rules.
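The verbal description of the rate computation can be read as r_j = g_j · (Σ_{i ∈ C_j} w_ij · r_i − inhibition_j). The Python sketch below implements this reading for a single target neuron. It is an interpretation of the text, not a transcription of the published equation or of the Matlab code: the function name `output_rate`, the dictionary layout for the connection set C_j, the scalar inhibition term and the clipping of negative drive at zero are all assumptions made for illustration.

```python
def output_rate(gain, weights, input_rates, inhibition):
    """Rate of one target neuron j under a linear gain function.

    gain        -- g_j, multiplicative gain of neuron j
    weights     -- dict {i: w_ij} over the connected inputs i in C_j
    input_rates -- sequence of rates r_i for the input population I
    inhibition  -- total inhibitory input to neuron j (assumed scalar)
    """
    drive = sum(w * input_rates[i] for i, w in weights.items()) - inhibition
    # Assumption: rates cannot be negative, so negative drive is clipped.
    return gain * max(drive, 0.0)


# Hypothetical example: neuron j receives input from neurons 0 and 1 only.
r_j = output_rate(
    gain=2.0,
    weights={0: 1.0, 1: 0.5},
    input_rates=[2.0, 4.0, 10.0],
    inhibition=1.0,
)
```

In this example the excitatory drive is 1.0·2.0 + 0.5·4.0 = 4.0; subtracting the inhibition of 1.0 and applying the gain of 2.0 gives a rate of 6.0.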

For the adaptation of weights W IJ and gains G, we use Hebbian or homeostatic rules, as described in Section 3.3. The system described in this way is sufficient for all the calculations on the shape of distributions used in this paper.

3 Results

3.1 Universality of lognormal distributions

We have documented the distribution of spike rates, gains, and weights for different types of neurons ( Figure 1– Figure 8). The distribution in all cases follows a lognormal shape. In some cases, we had data on the variability of spike rates and analyzed them for dispersion (CV, FF) under behavioral stimulation. While the fold-change from low spiking neurons to high spiking neurons is high, 5- to 7-fold, the variability for each neuron is comparatively low. It also seems to be adequately described by a percentage change over the whole population. This means that a low spiking neuron never reaches the same rate as a high spiking neuron, even when fully activated.

The similarities across neural systems are striking. For instance, in a midbrain nucleus (inferior colliculus), which is essentially an ’output’ site for auditory and somatosensory cortex, spike rates are high overall 45; nonetheless, the distribution of mean spike rate and the variability are comparable to cortical data ( Figure 3). Hippocampus, cerebellum and cortex vary in degree of bursting and spike irregularity 53, but the rate distribution is constant. The distribution of mean spike rate is also essentially the same under spontaneous and under behavioral conditions.

Lognormal distributions were obtained by fit to the histograms obtained from data (goodness of linear fit, mean ≈ 0.92; see Figure 1– Figure 8). The lognormal distribution is a very simple statistical distribution 54, almost as simple and as universal in the description of natural processes as a Gaussian distribution (with which it is essentially identical for small σ*). Even though the datasets were occasionally fairly small, and more data could be added to obtain greater precision, the conclusion seems warranted that the underlying natural process is as simple and general as the multiplication of independent variables 55, rather than assuming more complex processes which may lead to other exponential-family distributions.

Lognormal rate distributions appear to be an essential property of neural tissues that occur in areas with very different neuron types and connectivity, and different absolute spike frequencies. They are present during spontaneous activity, and under activation of a network, in vivo as well as in vitro. They have a counterpart in a lognormal distribution of intrinsic excitability, and lognormal synaptic connectivity. This type of distribution seems to be an essential component of the functional structure of a mature network, which is not altered by learning, plasticity, or processing of information.

3.2 Data analysis for distributions

A lognormal distribution is characterized by parameters µ* and σ*. µ* = e^µ is the median, a scale parameter, which determines the height of the distribution. σ* = e^σ is the multiplicative standard deviation, a shape parameter which determines the width of the distribution. For distributions with small σ* (approximately σ* < 1.2 or σ < 0.182) a lognormal distribution is essentially identical to a normal distribution. (The coefficient of variation CV ~ σ* − 1, so that for CV < 0.18, a lognormal equals normal distribution.) We collected data on spike rate, gain and synaptic weight distribution for a number of tissues in different experimental conditions ( Table 1– Table 3). For the height of the spike rate distribution, there are known differences, e.g. with lower values for cortex ( µ* ≈ 4.5) and higher values for Purkinje cells ( µ* ≈ 30) and midbrain nuclei (cf. Table 1). In other words, spike rates differ between brain areas such as cortex and cerebellum by a factor of 10.
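The relations between the log-scale parameters and the quantities tabulated in Table 1– Table 3 can be made concrete with a short Python sketch. It uses the definitions given above (median µ* = e^µ, width σ* = e^σ) together with the mode e^(µ−σ²) from the table headers; the function name `lognormal_summary` is hypothetical, and the example values are those of the A1 cortex 2 row of Table 1.

```python
import math


def lognormal_summary(mu, sigma2):
    """Return (median, width, mode) from log-mean mu and log-variance sigma2."""
    median = math.exp(mu)                # mu* = e^mu
    width = math.exp(math.sqrt(sigma2))  # sigma* = e^sigma
    mode = math.exp(mu - sigma2)         # peak of the density
    return median, width, mode


# Values from Table 1, row "A1 cortex 2": mu = 1.6, sigma^2 = 0.47.
median, width, mode = lognormal_summary(1.6, 0.47)
# median is about 4.95, width about 1.98, mode about 3.1, as tabulated.
```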

In contrast to that, the width of spike rate distributions is more similar, with an average at σ* ≈ 2.2, with one outlier. The gain has a smaller σ*, i.e. a more normal, less heavy-tailed distribution than the spike rate. Minus the outlier (3.46), the mean for σ* is only 1.86, considerably lower than the width of the spike rate distribution ( Table 2). For weight distributions ( Table 3), the width σ* is consistently larger, with an average of almost 3 (2.91). The synaptic strength ( µ*) varies over at least one order of magnitude between cortex and cerebellum.

It turns out that σ* values are noticeably different for rates, gains and weights: lowest for gains ( σ* ≈ 1.8), higher for rates ( σ* ≈ 2.2) and highest for weights ( σ* ≈ 3). The data that we have are not precise enough to draw quantitative conclusions, but no large distinctions are apparent between the tissues ( Table 1 – Table 3). We use a generic neural network to recreate lognormal distributions by adaptation rules and we will also show that distribution widths are structural properties which follow from general network properties.

3.3 Generating lognormal distributions with generic neural networks

Since not only mean spike rates but also both components, intrinsic excitability and synaptic weights, have lognormal distribution, this raises the question of how the functional system that we observe is generated. It is obvious, if the data are accurate, that these are basic parameters of any simulation and need to be reproduced in any model to make it biologically realistic.

We set up a generic neural network model (cf. Section 2.2) to explore the mechanisms of generating and maintaining rate, weight and gain distributions. The model consists of a source neuron group I, a target group J, a population of inhibitory neurons H, which are connected with J, and potentially recurrent excitation in the target group J. The spike rate distribution R I acts through a weight distribution W onto a gain distribution G, where inhibition H is subtracted, and a spike rate output distribution R J is produced ( Figure 9).

In the simplest case, we look at two sets of neurons, the source and the target. The source sends excitatory connections to the target, and exhibits variable weights at outgoing synapses. The input that a target neuron receives is fed through a linear filter G to produce an output rate R J according to Eq (1). The distribution for R J depends on G and W as well as on R I. The system is sufficient for calculations on the shape of distributions, as well as the effects of Hebbian and homeostatic plasticity.

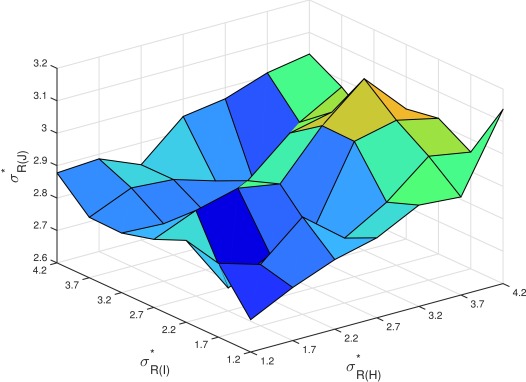

We have explored the dependencies between gain, weight and rate distributions in simulations. First, we found that the width of the output spike rate distribution R J depends heavily on the gain distribution, but only slightly on the input weight distribution ( Figure 10). It does depend on the overall connectivity C, being wider for lower connectivity, but not very much ( Figure 10). Secondly, the width of the output distribution R J does not depend on R I or R H either ( Figure 11). The most important factor for a spike rate distribution remains the gain.

Figure 11. The width of the rate distribution for J does not depend on R I or R H.
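One way to see why the gain dominates the width of the output rate distribution is that each target neuron sums over many weighted inputs, which averages out the weight variability, whereas the gain multiplies the whole sum. The Python sketch below illustrates this under simplifying assumptions that are not taken from the published model: inhibition and recurrence are omitted, weights and gains are drawn as lognormal with log-mean 0, and the population sizes, seed and function names are hypothetical. The input rate parameters (log-mean 1.6, log-sd 0.69) approximate the input distribution with µ* = 4.95 and σ* = 1.98.

```python
import math
import random
import statistics


def output_width(sigma_gain, sigma_weight, n_in=1000, n_out=300,
                 connectivity=0.05, seed=1):
    """Return sigma* (multiplicative sd) of the simulated output rates."""
    rng = random.Random(seed)
    rates_in = [rng.lognormvariate(1.6, 0.69) for _ in range(n_in)]
    k = int(connectivity * n_in)  # number of inputs per target neuron
    rates_out = []
    for _ in range(n_out):
        inputs = rng.sample(range(n_in), k)
        # Weighted sum over the connected inputs (no inhibition here).
        drive = sum(rng.lognormvariate(0.0, sigma_weight) * rates_in[i]
                    for i in inputs)
        # A lognormally distributed gain multiplies the summed drive.
        rates_out.append(rng.lognormvariate(0.0, sigma_gain) * drive)
    log_rates = [math.log(r) for r in rates_out]
    return math.exp(statistics.pstdev(log_rates))


base = output_width(sigma_gain=0.3, sigma_weight=0.3)
wide_gain = output_width(sigma_gain=0.9, sigma_weight=0.3)
wide_weight = output_width(sigma_gain=0.3, sigma_weight=0.9)
```

In this toy setting, widening the gain distribution widens the output distribution far more than the same widening applied to the weights, in line with the qualitative finding reported for Figure 10.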

3.4 Adaptation

We may now ask, where do lognormal spike rate distributions come from? How is the system set up, i.e. what rules of adaptation generate lognormal distributions in weights and gains?

In the case of cortical networks, there are excitatory recurrent interactions that constitute a significant part of total input. In the case of cerebellar or striatal neurons, there are no recurrent excitatory interactions, only inhibitory interneurons and excitatory input. The generation of lognormal distributions must therefore be independent of recurrent excitation. It requires a system where continuous input shapes the weights and gains of a target network J. We start with the system that we described before, with random assignment of weights and gains. We employ adaptivity for weights, and also for gains, by positive Hebbian learning, or by negative homeostatic learning. The output of I is fed into J, and W and G are adaptive. Additionally, J may have excitatory recurrent connectivity, and learning takes place within the network J.

From any given initial spike rate distribution (Gaussian, uniform, lognormal) for I, we calculate W assuming a positive learning (Hebbian) adjustment rule, which is dependent on input and output frequencies. Each individual weight w ij is updated by

We use parameters λ and µ, such that the generated spike rate output R J is compatible in strength with the input rate R I.

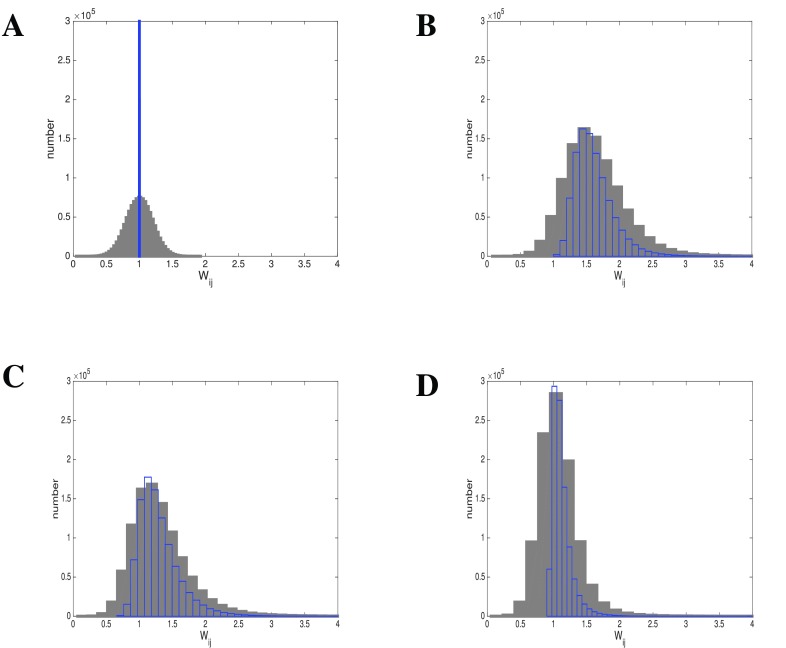

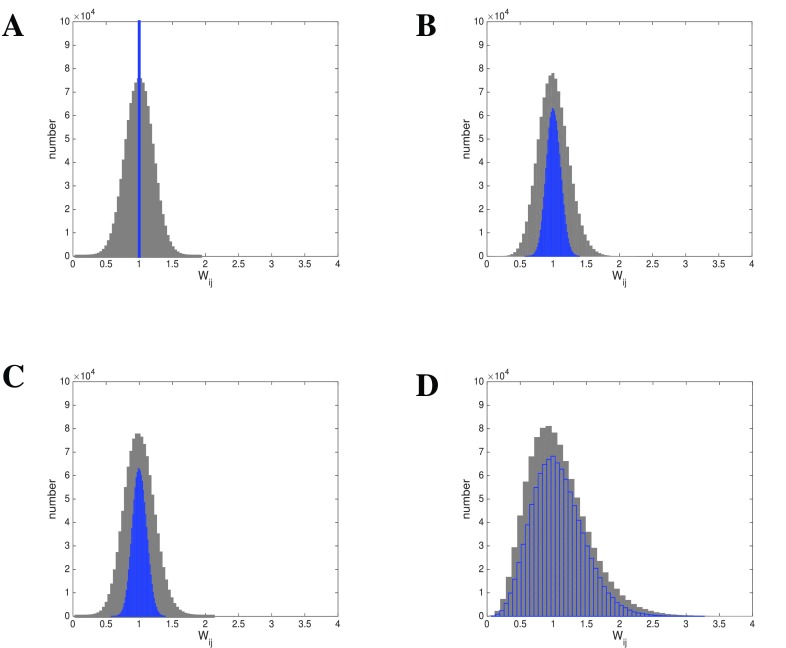

Using Hebbian learning, we generate a weight distribution W that is lognormally distributed, independent of the initial configuration or the distribution of the gains in the system ( Figure 12). The lognormal distribution also develops independently of the rate distribution of the inputs, it only develops faster with lognormal rather than normally distributed spike rate input (not shown). It makes no difference whether we use a recurrent system J, or a non-recurrent population J with input from a population I with a spike rate distribution, as long as we use a Hebbian weight adaptation rule. For the shape of the distribution, it also does not matter whether we route the output of J back to I, or whether we use local or no recurrence. To show the effect of the adaptation rule, we also used homeostatic synaptic plasticity to adjust the weights. This means that the weight is adjusted inversely to the spike rate of input and output neurons.

Figure 12. Hebbian learning results in lognormal weight distribution independent of gain distribution.

Given is a lognormal input rate. A: Initial weight configurations: Gaussian (grey) or uniform (blue). B: After Hebbian learning using Gaussian gain distribution (grey, blue as before). C: After Hebbian learning using uniform gain distribution (grey, blue as before). D: After Hebbian learning using lognormal gain distribution (grey, blue as before).

With homeostatic weight adaptation, it is very clear that with any input or initial configuration and any gain distribution, only a normal distribution of weights results (Figure 13). Again, a lognormal input spike rate slows the process of adaptation, but the end result is the same, a normal distribution.

Figure 13. Homeostatic learning results in Gaussian weight distributions, independent of gain distribution.

Given is a lognormal input rate. A: Initial configuration: Gaussian (grey) or uniform (blue). B: Homeostatic weight learning using Gaussian gain distribution (grey, blue as before). C: Homeostatic weight learning using uniform gain distribution (grey, blue as before). D: Homeostatic weight learning using lognormal gain distribution (grey, blue as before).

Since gain distributions are also lognormal, we may ask in the same way how they develop and are maintained by plasticity rules. We adapt the linear gain G by either Hebbian or homeostatic learning. Each gain can be adjusted by a Hebbian rule

or a homeostatic rule

with parameters λ and µ.

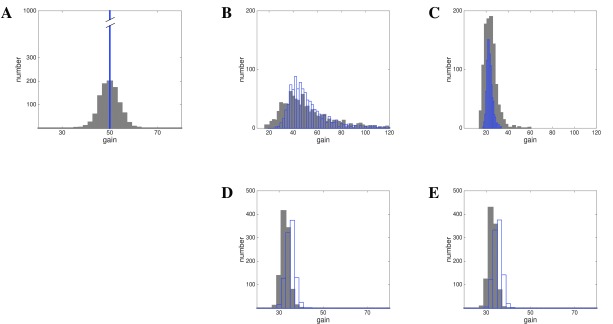

We start with uniform or normally distributed G in an environment where W is lognormal, normal or uniform, and R_I is normal or lognormal. If we adapt only G, then for any initial configuration, any distribution of R_I (including the lognormal distribution), and a lognormal or normal weight distribution, we arrive at a normal distribution for G with homeostatic learning and a lognormal distribution with Hebbian learning (Figure 14).

Figure 14. Hebbian or homeostatic gain learning determines lognormal or Gaussian outcome.

Given is a lognormal input rate. A: Initial configuration: Gaussian (grey) or uniform (blue). B and C: Hebbian learning using lognormal or Gaussian weights; the resulting gain distribution is lognormal. D and E: Homeostatic learning using lognormal or Gaussian weights; the resulting gain distribution is Gaussian.

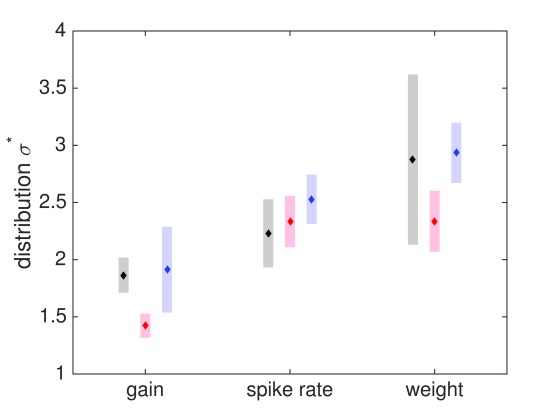

Lognormal distributions develop from Hebbian plasticity, and homeostatic plasticity generates only normal distributions. The explanation lies in the nature of random statistical events, which generate normal distributions when the underlying mechanisms are sums of many small events, but lognormal distributions when the underlying mechanisms are multiplicative 54. We also wanted to understand the observed widths of the distributions. We hypothesized that the differences for σ* between W, R and G result from the network structure. Accordingly, we started a simulation with initial uniform values for G and W and Hebbian update rules using the same learning rate λ for both (Figure 15). We found that gain, rate and weight distributions match the experimental values, and that this is true for any tested constellation. We also found that Hebbian learning alone quickly escalates values, which develop exponentially, and that additional rounds of homeostatic adaptation are required to stabilize the system. Homeostatic learning pushes the system back towards a normal distribution.
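The statistical argument (sums of many small events give normal distributions, products give lognormal ones) can be checked numerically. The following sketch is independent of the network model: each unit receives the same sequence of small random events once additively and once multiplicatively. The event size (uniform within ±10%), the population size, and the helper names `skewness` and `simulate_events` are assumptions chosen for illustration.

```python
import math
import random


def skewness(values):
    """Sample skewness; close to 0 for a symmetric (e.g. normal) distribution."""
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    return sum(((v - mean) / sd) ** 3 for v in values) / n


def simulate_events(n_units=2000, n_events=200, seed=0):
    """Accumulate the same random events additively and multiplicatively."""
    rng = random.Random(seed)
    sums, products = [], []
    for _ in range(n_units):
        total, product = 0.0, 1.0
        for _ in range(n_events):
            event = rng.uniform(-0.1, 0.1)
            total += event            # additive accumulation
            product *= 1.0 + event    # multiplicative accumulation
        sums.append(total)
        products.append(product)
    return sums, products


if __name__ == "__main__":
    sums, products = simulate_events()
    print("skewness of sums:         ", round(skewness(sums), 2))
    print("skewness of products:     ", round(skewness(products), 2))
    print("skewness of log(products):", round(skewness([math.log(p) for p in products]), 2))
```

The sums and the logarithms of the products should both be close to symmetric, while the raw products are strongly right-skewed, as expected for a lognormal distribution.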

Figure 15. Experimental and generated distribution widths for spike rates, gains and weights.

Grey, experimental measurements (see Tables); red, generated with 100% Hebbian learning; blue, generated with 80% Hebbian and 20% homeostatic learning combined. The basic distinction in distribution width for gains, rates and weights is reproduced with Hebbian learning; additional homeostatic learning matches experimental values best.

Our data, in the most general way, allowing for various conditions and architectures, show that Hebbian learning is required both for intrinsic gain and for weights in order to generate the attested lognormal distributions. This is an interesting result, because it shows that we need prominent Hebbian intrinsic learning to explain the gain distributions that we find experimentally. Intrinsic learning is not just homeostatic adaptation; it follows the same rules as synaptic weight learning.

4 Discussion

4.1 Universality of lognormal distributions

Spike rates of neurons seem to be universally distributed according to a lognormal distribution, with many neurons at low spike rates, and a small number at successively higher spike rates (heavy tail) 1. The same distributions are found for synaptic weights 6, and intrinsic properties associated with excitability (gain) 1. The neurons that we reported on are of very different types, and they are embedded in different kinds of connectivity. Medium spiny neurons and Purkinje cells are GABAergic (inhibitory), while cortical and IC neurons are glutamatergic (excitatory), but this is not reflected in a distinct spike rate distribution. They also fire with very different average spike rates. IC neurons operate at very high frequencies, and Purkinje neurons at much higher frequencies than cortical or striatal projection neurons. But they all have the same spike rate distribution. It has been suggested 2 that lognormal spike distributions are a feature of cortical tissue and arise from strong excitatory recurrent connectivity, but this is neither substantiated experimentally nor necessary theoretically. While cortical pyramidal neurons exist in a heavily excitatory recurrent environment, medium spiny neurons, cerebellar Purkinje cells and IC neurons act mostly in a feed-forward way, i.e. they do not have significant recurrent excitatory (glutamatergic) connectivity.

Beyond spike rate distributions, we also gathered data on weight and gain distributions. Again, lognormal distributions are ubiquitous. We find synaptic weight distributions for cortex 6 and cerebellum that are lognormal, with characteristic distribution widths. For intrinsic properties, striatal projection neurons and cortical neurons 46 show responses to constant current and current-to-threshold (gain) distributions, which again appear lognormally distributed, with smaller widths than spike rate distributions.

Our models show that lognormal distributions arise even in a purely input-output environment, and that they are a result of Hebbian learning of weights and gains, quite independent of the overall magnitude of the spike rates.

4.2 Generating lognormal distributions

Mean spike rates, as well as intrinsic excitability and synaptic weights, have lognormal distributions.

It has often been assumed that variability in intrinsic excitability is a source of noise in neural computation 56, even though others have argued that intrinsic variability contributes to neural coding 57, 58 and that intrinsic plasticity follows certain rules 19. An excellent overview of the experimentally attested forms of intrinsic plasticity is contained in 35, cf. 59–61. Many other detailed observations are contained in 36–38, 62.

Recently, Mahon and Charpier 4 have shown that intrinsic excitability is stable in individual neurons under control conditions, while stimulation protocols (e.g. in barrel cortex of anesthetized rats) change intrinsic excitability by at least 50–100%. However, the conclusions drawn from the experimental research are often contradictory. Intrinsic plasticity is sometimes assumed to act in a negative, homeostatic way, i.e. opposite to synaptic plasticity 4, but sometimes in a ’Hebbian’, positive way, i.e. cooperative with synaptic plasticity 38, 63. There is evidence for (short-term) negative or homeostatic plasticity, which has been previously investigated 4.

Our work has now shown that any kind of neural system with linear gains requires positive, Hebbian intrinsic plasticity to produce and maintain a lognormal distribution of gains. We could also show that the observed widths of the distributions, i.e. the differences for σ* between W, R and G, naturally result from the network structure and are built into the system simply by Hebbian adaptation.

Lognormal distributions may arise as stable properties of the system during early development (the set-up of the system), i.e. before actual pattern storage or event memory develops, and they are maintained during processing by a Hebbian type of positive adaptation events. Homeostatic plasticity consists in downregulating gains or weights with increases in firing rates. Purely homeostatic learning results in normal distributions, and erases existing lognormal distributions. By combining homeostatic and Hebbian adaptation we can achieve and maintain stable lognormal distributions.
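The interplay between escalation and stabilization can be illustrated with a toy process for a population of independent values (gains or weights). This is a sketch under stated assumptions and not the simulation used for Figure 15: the multiplicative Hebbian step, the homeostatic pull towards a fixed `target`, the interpretation of "80% Hebbian and 20% homeostatic" as a per-round probability, and the function name `run_plasticity` are all hypothetical choices. The sketch only demonstrates that Hebbian steps alone escalate while the mixture stays bounded; it makes no claim about the exact shape of the resulting distribution.

```python
import random
import statistics


def run_plasticity(n_units=1000, n_rounds=300, p_hebbian=0.8,
                   lam=0.05, mu=0.5, target=1.0, seed=0):
    """Apply Hebbian or homeostatic steps, chosen at random, to each value."""
    rng = random.Random(seed)
    # Uniform initial values, as in the simulations described in the text.
    values = [rng.uniform(0.5, 1.5) for _ in range(n_units)]
    for _ in range(n_rounds):
        for k in range(n_units):
            if rng.random() < p_hebbian:
                # Hebbian step (assumed form): multiplicative growth driven by
                # a normalized co-activity with mean 1.
                activity = rng.uniform(0.0, 2.0)
                values[k] *= 1.0 + lam * activity
            else:
                # Homeostatic step (assumed form): pull back towards a target.
                values[k] += mu * (target - values[k])
    return values


if __name__ == "__main__":
    hebbian_only = run_plasticity(p_hebbian=1.0)
    mixed = run_plasticity(p_hebbian=0.8)
    print("median, 100% Hebbian:", statistics.median(hebbian_only))
    print("median, 80% Hebbian + 20% homeostatic:", statistics.median(mixed))
```

With these parameters the purely Hebbian population grows by many orders of magnitude, whereas the mixed population remains within the same order of magnitude as the target.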

4.3 Why logarithmic coding schemes

A lognormal distribution means that values are normally distributed on a logarithmic scale. From an engineering perspective, basic Hebbian plasticity for synapses and intrinsic properties is sufficient to generate stable logarithmic distributions. If there is random variation of multiplicative events, as in Hebbian plasticity, a lognormal distribution will be the result 54.
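Because a lognormal variable is normal on a logarithmic scale, its location and width are conveniently summarized by multiplicative parameters. The sketch below assumes that σ* denotes the multiplicative standard deviation in the sense of Limpert et al. 54, i.e. σ* = exp(sd of ln x), with the geometric mean µ* = exp(mean of ln x) as the matching location parameter. The function names and the synthetic sample are hypothetical and only serve to show the calculation.

```python
import math
import random


def multiplicative_summary(samples):
    """Return (mu_star, sigma_star) computed from the logarithms of the samples."""
    logs = [math.log(x) for x in samples]
    n = len(logs)
    mean_log = sum(logs) / n
    sd_log = math.sqrt(sum((v - mean_log) ** 2 for v in logs) / (n - 1))
    return math.exp(mean_log), math.exp(sd_log)


def fraction_within(samples, mu_star, sigma_star):
    """Fraction of samples inside the interval [mu*/sigma*, mu* x sigma*]."""
    lo, hi = mu_star / sigma_star, mu_star * sigma_star
    return sum(lo <= x <= hi for x in samples) / len(samples)


if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic "spike rates": ln(rate) is normal with mean 1.0 and sd 0.8.
    rates = [rng.lognormvariate(1.0, 0.8) for _ in range(20000)]
    mu_star, sigma_star = multiplicative_summary(rates)
    print("mu* =", round(mu_star, 2), " sigma* =", round(sigma_star, 2))
    print("fraction within one sigma*:", round(fraction_within(rates, mu_star, sigma_star), 3))
```

For lognormal data, roughly 68% of the values fall between µ*/σ* and µ*·σ*, which mirrors the one-standard-deviation interval of a normal distribution on the logarithmic scale.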

This is related to principles of sensory coding, where logarithmic scale signal processing enhances perception of weak signals, while also being able to respond to large signals, effectively increasing the perceptual range compared to linear coding 14. In an interconnected network, logarithmic coding may turn into a property for the access of representations. Feature clusters or event traces could be accessed by targeted connections to the top-level neurons, which then activate lower-level neurons in their immediate vicinity. By accessing high-frequency neurons preferentially, a whole feature area can be reached, and local diffusion will provide any additional computation. Similarly, the results of a local computation can be efficiently distributed by high-frequency neurons to other areas. Fast point-to-point communication using only high-frequency neurons may be sufficient for fast responses in many cases. Scale-free networks in general support synchronization, which is also a useful feature for rapid information transfer and access 64.

Recently, publications 65, 66 have shown that there is indeed a difference between high-frequency and low-frequency neurons in their connectivity: high-frequency neurons have short delays, strong connections, and directed targets, while low-frequency neurons have long delays, weak connections and diffuse targets.

The lognormal distribution of spike rates has significant implications for neural coding. Logarithmic spike rates are coupled with linear variance for responses to behavioral stimulation. In other words, the greatest part of the coding results already from the frequency rank of the neuron itself, such that high frequency neurons have the largest impact. A fixed mean rate for each neuron allows stable expectation values for network computations.

Logarithmic, hierarchical coding does not need to be sparse. The low frequency neurons may matter the most in terms of input response. With lognormal synaptic weight distributions, if strong synapses are kept stable, they may transmit an input neuron’s mean firing rate to targets and in this way provide stability to the system. All other synapses could be arbitrary. This would allow for continued pattern learning to be implemented by the bulk of low weight synapses, while the framework of neuronal interactions, e.g., the ensemble structure, could be unchanged. Such a division of labor between strong synapses and weaker ones could have many advantages in a complex, modular network.

Experimental data have often shown that sampling of neuronal responses from a large population (10^5 or more neurons), which become activated at 30% or more, yields accuracy for a stimulus already for small samples (100–200 neurons or 1–2%) (e.g., 67). We may suggest that this happens when we sample from a highly modular structure, and we have been able to replicate the effect with lognormal networks 68.

5 Conclusions

In our earlier work 1, we found that intrinsic excitability, manifested by spike response to current injection and rheobase in vitro for dorsal striatal and nucleus accumbens neurons, seems to have the same distribution as the firing rate in cortex under in vivo conditions. At approximately the same time, Song et al. 6 had observed a heavy-tailed distribution of synaptic weights in cortical tissue.

In this article, we have done three things: (a) collected data to show that rate, weight and gain distributions in different brain areas all follow a heavy-tailed, specifically a lognormal, distribution; (b) created a generic neural network model to show that these distributions arise from Hebbian learning, and specifically that intrinsic plasticity must be Hebbian as well; and (c) shown that the widths of the distributions, as experimentally attested, arise naturally from the network structure and the role of its components, in a very robust way. We have also discussed what the lognormal distribution means for neural coding: a division of labor between fast transmission by high-frequency neurons and low-level computation by low-frequency neurons in a modular structure, and possibly a division of labor between stable components (strong synapses, high-frequency neurons) and more variable components (weak synapses, low-frequency neurons).

6 Software and data availability

The GNN simulation software was programmed in Matlab, and is available on GitHub: https://github.com/gscheler/GNN/tree/v0.1

Archived source code as at time of publication: https://doi.org/10.5281/zenodo.82994949

OSS approved license: Apache 2.0.

All the data required for re-analysis of the study have been referenced throughout the manuscript.

Funding Statement

The author(s) declared that no grants were involved in supporting this work.

[version 2; referees: 2 approved]

References

- 1. Scheler G, Schumann J: Diversity and stability in neuronal output rates. In Soc Neurosci Meeting. 2006. 10.13140/RG.2.1.1862.8967

- 2. Hromádka T, DeWeese MR, Zador AM: Sparse representation of sounds in the unanesthetized auditory cortex. PLoS Biol. 2008;6(1):e16. 10.1371/journal.pbio.0060016

- 3. Hopf FW, Moran MM, Mohamedi ML, et al.: Inhibition of the slow calcium-dependent potassium channel in the lateral dorsal striatum enhances action potential firing in slice and enhances performance in a habit memory task. In Soc Neurosci Meeting. 2005.

- 4. Mahon S, Charpier S: Bidirectional plasticity of intrinsic excitability controls sensory inputs efficiency in layer 5 barrel cortex neurons in vivo. J Neurosci. 2012;32(33):11377–89. 10.1523/JNEUROSCI.0415-12.2012

- 5. Günay C, Edgerton JR, Jaeger D: Channel density distributions explain spiking variability in the globus pallidus: a combined physiology and computer simulation database approach. J Neurosci. 2008;28(30):7476–91. 10.1523/JNEUROSCI.4198-07.2008

- 6. Song S, Sjöström PJ, Reigl M, et al.: Highly nonrandom features of synaptic connectivity in local cortical circuits. PLoS Biol. 2005;3(3):e68. 10.1371/journal.pbio.0030068

- 7. Mason A, Nicoll A, Stratford K: Synaptic transmission between individual pyramidal neurons of the rat visual cortex in vitro. J Neurosci. 1991;11(1):72–84.

- 8. Feldmeyer D, Lübke J, Sakmann B: Efficacy and connectivity of intracolumnar pairs of layer 2/3 pyramidal cells in the barrel cortex of juvenile rats. J Physiol. 2006;575(Pt 2):583–602. 10.1113/jphysiol.2006.105106

- 9. Holmgren C, Harkany T, Svennenfors B, et al.: Pyramidal cell communication within local networks in layer 2/3 of rat neocortex. J Physiol. 2003;551(Pt 1):139–53. 10.1113/jphysiol.2003.044784

- 10. Sayer RJ, Friedlander MJ, Redman SJ: The time course and amplitude of EPSPs evoked at synapses between pairs of CA3/CA1 neurons in the hippocampal slice. J Neurosci. 1990;10(3):826–836.

- 11. Brunel N, Hakim V, Isope P, et al.: Optimal information storage and the distribution of synaptic weights: perceptron versus Purkinje cell. Neuron. 2004;43(5):745–57. 10.1016/j.neuron.2004.08.023

- 12. Shafi M, Zhou Y, Quintana J, et al.: Variability in neuronal activity in primate cortex during working memory tasks. Neuroscience. 2007;146(3):1082–108. 10.1016/j.neuroscience.2006.12.072

- 13. Roxin A, Brunel N, Hansel D, et al.: On the distribution of firing rates in networks of cortical neurons. J Neurosci. 2011;31(45):16217–26. 10.1523/JNEUROSCI.1677-11.2011

- 14. Buzsáki G, Mizuseki K: The log-dynamic brain: how skewed distributions affect network operations. Nat Rev Neurosci. 2014;15(4):264–278. 10.1038/nrn3687

- 15. Koulakov AA, Hromádka T, Zador AM: Correlated connectivity and the distribution of firing rates in the neocortex. J Neurosci. 2009;29(12):3685–94. 10.1523/JNEUROSCI.4500-08.2009

- 16. Wohrer A, Humphries MD, Machens CK: Population-wide distributions of neural activity during perceptual decision-making. Progr Neurobiol. 2013;103:156–93. 10.1016/j.pneurobio.2012.09.004

- 17. Petersen PC, Berg RW: Lognormal firing rate distribution reveals prominent fluctuation-driven regime in spinal motor networks. eLife. 2016;5: pii: e18805. 10.7554/eLife.18805

- 18. Ikegaya Y, Sasaki T, Ishikawa D, et al.: Interpyramid spike transmission stabilizes the sparseness of recurrent network activity. Cereb Cortex. 2013;23(2):293–304. 10.1093/cercor/bhs006

- 19. Scheler G: Learning intrinsic excitability in medium spiny neurons [version 2; referees: 2 approved]. F1000Res. 2014;2:88. 10.12688/f1000research.2-88.v2

- 20. Gilson M, Savin C, Zenke F: Editorial: Emergent Neural Computation from the Interaction of Different Forms of Plasticity. Front Comput Neurosci. 2015;9:145. 10.3389/fncom.2015.00145

- 21. Song S, Miller KD, Abbott LF: Competitive Hebbian learning through spike-timing-dependent synaptic plasticity. Nat Neurosci. 2000;3(9):919–26. 10.1038/78829

- 22. Gerstner W, Kistler WM: Mathematical formulations of Hebbian learning. Biol Cybern. 2002;87(5–6):404–415. 10.1007/s00422-002-0353-y

- 23. Caporale N, Dan Y: Spike timing-dependent plasticity: a Hebbian learning rule. Annu Rev Neurosci. 2008;31(1):25–46. 10.1146/annurev.neuro.31.060407.125639

- 24. Zenke F, Gerstner W: Hebbian plasticity requires compensatory processes on multiple timescales. Philos Trans R Soc Lond B Biol Sci. 2017;372(1715): pii: 20160259. 10.1098/rstb.2016.0259

- 25. Tetzlaff C, Kolodziejski C, Markelic I, et al.: Time scales of memory, learning, and plasticity. Biol Cybern. 2012;106(11–12):715–726. 10.1007/s00422-012-0529-z

- 26. Desai NS: Homeostatic plasticity in the CNS: synaptic and intrinsic forms. J Physiol Paris. 2003;97(4–6):391–402. 10.1016/j.jphysparis.2004.01.005

- 27. Naudé J, Cessac B, Berry H, et al.: Effects of cellular homeostatic intrinsic plasticity on dynamical and computational properties of biological recurrent neural networks. J Neurosci. 2013;33(38):15032–15043. 10.1523/JNEUROSCI.0870-13.2013

- 28. Cannon J, Miller P: Stable Control of Firing Rate Mean and Variance by Dual Homeostatic Mechanisms. J Math Neurosci. 2017;7(1):1. 10.1186/s13408-017-0043-7

- 29. Kazantsev V, Gordleeva S, Stasenko S, et al.: A homeostatic model of neuronal firing governed by feedback signals from the extracellular matrix. PLoS One. 2012;7(7):e41646. 10.1371/journal.pone.0041646

- 30. Cudmore RH, Desai NS: Intrinsic plasticity. Scholarpedia. 2008;3(2):1363.

- 31. Tully PJ, Hennig MH, Lansner A: Synaptic and nonsynaptic plasticity approximating probabilistic inference. Front Synaptic Neurosci. 2014;6:8. 10.3389/fnsyn.2014.00008

- 32. Sehgal M, Song C, Ehlers VL, et al.: Learning to learn - intrinsic plasticity as a metaplasticity mechanism for memory formation. Neurobiol Learn Mem. 2013;105:186–199. 10.1016/j.nlm.2013.07.008

- 33. Whitaker LR, Warren BL, Venniro M, et al.: Bidirectional Modulation of Intrinsic Excitability in Rat Prelimbic Cortex Neuronal Ensembles and Non-Ensembles after Operant Learning. J Neurosci. 2017;37(36):8845–8856. 10.1523/JNEUROSCI.3761-16.2017

- 34. Greenhill SD, Ranson A, Fox K: Hebbian and Homeostatic Plasticity Mechanisms in Regular Spiking and Intrinsic Bursting Cells of Cortical Layer 5. Neuron. 2015;88(3):539–552. 10.1016/j.neuron.2015.09.025

- 35. Paz JT, Mahon S, Tiret P, et al.: Multiple forms of activity-dependent intrinsic plasticity in layer V cortical neurones in vivo. J Physiol. 2009;587(Pt 13):3189–3205. 10.1113/jphysiol.2009.169334

- 36. Campanac E, Gasselin C, Baude A, et al.: Enhanced intrinsic excitability in basket cells maintains excitatory-inhibitory balance in hippocampal circuits. Neuron. 2013;77(4):712–722. 10.1016/j.neuron.2012.12.020

- 37. Campanac E, Daoudal G, Ankri N, et al.: Downregulation of dendritic I h in CA1 pyramidal neurons after LTP. J Neurosci. 2008;28(34):8635–8643. 10.1523/JNEUROSCI.1411-08.2008

- 38. Frick A, Magee J, Johnston D: LTP is accompanied by an enhanced local excitability of pyramidal neuron dendrites. Nat Neurosci. 2004;7(2):126–135. 10.1038/nn1178

- 39. Hung CP, Kreiman G, Poggio T, et al.: Fast readout of object identity from macaque inferior temporal cortex. Science. 2005;310(5749):863–6. 10.1126/science.1117593

- 40. Wang X, Lu T, Snider RK, et al.: Sustained firing in auditory cortex evoked by preferred stimuli. Nature. 2005;435(7040):341–346. 10.1038/nature03565

- 41. Raman IM, Bean BP: Ionic currents underlying spontaneous action potentials in isolated cerebellar Purkinje neurons. J Neurosci. 1999;19(5):1663–74.

- 42. Häusser M, Clark BA: Tonic synaptic inhibition modulates neuronal output pattern and spatiotemporal synaptic integration. Neuron. 1997;19(3):665–678. 10.1016/S0896-6273(00)80379-7

- 43. de Solages C, Szapiro G, Brunel N, et al.: High-frequency organization and synchrony of activity in the Purkinje cell layer of the cerebellum. Neuron. 2008;58(5):775–88. 10.1016/j.neuron.2008.05.008

- 44. Roitman AV, Pasalar S, Johnson MT, et al.: Position, direction of movement, and speed tuning of cerebellar Purkinje cells during circular manual tracking in monkey. J Neurosci. 2005;25(40):9244–9257. 10.1523/JNEUROSCI.1886-05.2005

- 45. Zohar O, Shackleton TM, Palmer AR, et al.: The effect of correlated neuronal firing and neuronal heterogeneity on population coding accuracy in guinea pig inferior colliculus. PLoS One. 2013;8(12):e81660. 10.1371/journal.pone.0081660

- 46. Nowak LG, Azouz R, Sanchez-Vives MV, et al.: Electrophysiological classes of cat primary visual cortical neurons in vivo as revealed by quantitative analyses. J Neurophysiol. 2003;89(3):1541–66. 10.1152/jn.00580.2002

- 47. Sjöström PJ, Turrigiano GG, Nelson SB: Rate, timing, and cooperativity jointly determine cortical synaptic plasticity. Neuron. 2001;32(6):1149–1164. 10.1016/S0896-6273(01)00542-6

- 48. van Rossum MC, Bi GQ, Turrigiano GG: Stable Hebbian Learning from Spike Timing-Dependent Plasticity. J Neurosci. 2000;20(23):8812–8821.

- 49. Frick A, Feldmeyer D, Helmstaedter M, et al.: Monosynaptic connections between pairs of L5A pyramidal neurons in columns of juvenile rat somatosensory cortex. Cereb Cortex. 2008;18(2):397–406. 10.1093/cercor/bhm074

- 50. Isope P, Barbour B: Properties of unitary granule cell→Purkinje cell synapses in adult rat cerebellar slices. J Neurosci. 2002;22(22):9668–9678. 10.3410/f.1010825.167958

- 51. Barbour B, Brunel N, Hakim V, et al.: What can we learn from synaptic weight distributions? Trends Neurosci. 2007;30(12):622–9. 10.1016/j.tins.2007.09.005

- 52. Zhang Y, Cudmore RH, Lin DT, et al.: Visualization of NMDA receptor-dependent AMPA receptor synaptic plasticity in vivo. Nat Neurosci. 2015;18(3):402–7. 10.1038/nn.3936

- 53. Mochizuki Y, Onaga T, Shimazaki H, et al.: Similarity in Neuronal Firing Regimes across Mammalian Species. J Neurosci. 2016;36(21):5736–5747. 10.1523/JNEUROSCI.0230-16.2016

- 54. Limpert E, Stahel W, Abbt M: Log-normal distributions across the sciences: Keys and clues. Bioscience. 2001;51(5):341–352. 10.1641/0006-3568(2001)051[0341:LNDATS]2.0.CO;2

- 55. Crow EL, Shimizu K, editors: Lognormal Distributions: Theory and Applications. Dekker, 1988.

- 56. Rudolph M, Destexhe A: The discharge variability of neocortical neurons during high-conductance states. Neuroscience. 2003;119(3):855–873. 10.1016/S0306-4522(03)00164-7

- 57. Scheler G: Regulation of neuromodulator receptor efficacy--implications for whole-neuron and synaptic plasticity. Prog Neurobiol. 2004;72(6):399–415. 10.1016/j.pneurobio.2004.03.008

- 58. Stemmler M, Koch C: How voltage-dependent conductances can adapt to maximize the information encoded by neuronal firing rate. Nat Neurosci. 1999;2(6):521–527. 10.1038/9173

- 59. Debanne D: Plasticity of neuronal excitability in vivo. J Physiol. 2009;587(Pt 13):3057–3058. 10.1113/jphysiol.2009.175448

- 60. Campanac E, Debanne D: Plasticity of neuronal excitability: Hebbian rules beyond the synapse. Arch Ital Biol. 2007;145(3–4):277–287.

- 61. Daoudal G, Debanne D: Long-term plasticity of intrinsic excitability: learning rules and mechanisms. Learn Mem. 2003;10(6):456–465. 10.1101/lm.64103

- 62. Carvalho TP, Buonomano DV: Differential effects of excitatory and inhibitory plasticity on synaptically driven neuronal input-output functions. Neuron. 2009;61(5):774–785. 10.1016/j.neuron.2009.01.013

- 63. Mahon S, Deniau JM, Charpier S: Various synaptic activities and firing patterns in cortico-striatal and striatal neurons in vivo. J Physiol Paris. 2003;97(4–6):557–566. 10.1016/j.jphysparis.2004.01.013

- 64. Scheler G: Network topology influences synchronization and intrinsic read-out. arXiv:q-bio/0507037. 2005.

- 65. Omura Y, Carvalho MM, Inokuchi K, et al.: A Lognormal Recurrent Network Model for Burst Generation during Hippocampal Sharp Waves. J Neurosci. 2015;35(43):14585–14601. 10.1523/JNEUROSCI.4944-14.2015

- 66. Nigam S, Shimono M, Ito S, et al.: Rich-Club Organization in Effective Connectivity among Cortical Neurons. J Neurosci. 2016;36(3):670–684. 10.1523/JNEUROSCI.2177-15.2016

- 67. O’Connor DH, Peron SP, Huber D, et al.: Neural activity in barrel cortex underlying Vibrissa-based object localization in mice. Neuron. 2010;67(6):1048–61. 10.1016/j.neuron.2010.08.026

- 68. Scheler G: Extreme pattern compression in log-normal networks [version 1; not peer reviewed]. F1000Res. (poster), 2016;5:2177. 10.7490/f1000research.1113011.1