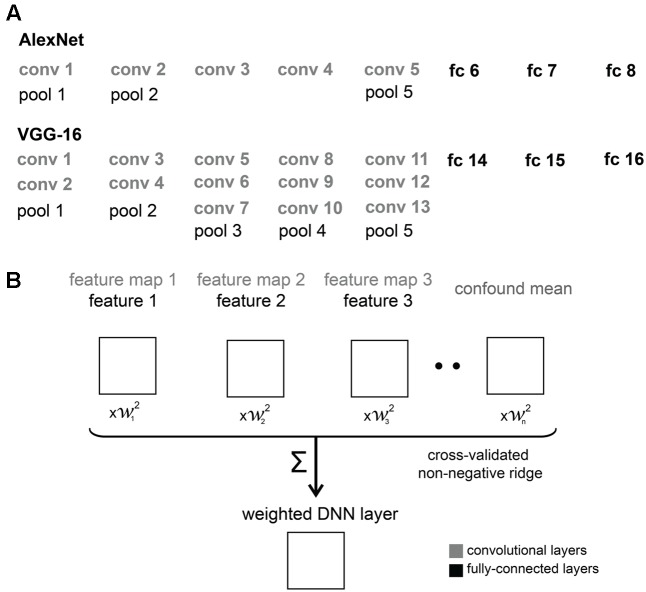

FIGURE 2.

DNN architectures and feature weighting. (A) Comparison of AlexNet and VGG-16 architectures. We used convolutional (conv) and fully connected (fc) layers from AlexNet and VGG-16 in our analyses. (B) Schematic overview of feature weighting. The schematic shows a set of example RDMs characterizing the stimulus information represented by the DNNs. For convolutional layers, we created one RDM per feature map from that map's activations. For fully connected layers, we created one RDM per individual feature, i.e., per model unit. Within each DNN layer, we used regularized (non-negative ridge) linear regression to estimate the RDM weights that best predict the similarity-judgment RDM. The regression model for each layer also includes a confound-mean predictor (intercept). The weights were estimated with cross-validation to prevent overfitting to a particular set of images.
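The feature-weighting step in panel (B) can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' code. The function names (`feature_rdms`, `pair_indices`, `fit_nonneg_ridge`, `cross_validated_fit`) and the penalty `lam` are hypothetical. The caption does not state the dissimilarity measure, so squared Euclidean distance is assumed (it also works for a single fc unit, which has only one value per image). The non-negative ridge problem is solved here by cyclic coordinate descent, a swapped-in solver chosen to keep the sketch dependency-free. The intercept (confound-mean predictor) is assumed to be unpenalized and unconstrained. A single train/test image split stands in for the unspecified cross-validation scheme.

```python
import numpy as np


def feature_rdms(layer_acts):
    """Return one RDM per feature, stacked as an (n_images, n_images, n_features) array.

    layer_acts has shape (n_images, n_features, ...): for a conv layer the trailing
    axes are the spatial positions of each feature map; for an fc layer there are
    none, so each feature (model unit) contributes one value per image.
    Assumption: dissimilarity = squared Euclidean distance.
    """
    n, k = layer_acts.shape[:2]
    flat = layer_acts.reshape(n, k, -1)                   # (n, k, m)
    diff = flat[:, None, :, :] - flat[None, :, :, :]      # (n, n, k, m)
    return np.sum(diff ** 2, axis=-1)                      # (n, n, k)


def pair_indices(images):
    """Index arrays for all unordered image pairs (i < j) within `images`."""
    images = np.asarray(images)
    i, j = np.triu_indices(len(images), k=1)
    return images[i], images[j]


def fit_nonneg_ridge(X, y, lam, n_sweeps=500):
    """Minimize ||y - b - X w||^2 + lam * ||w||^2 subject to w >= 0 (requires lam > 0).

    The intercept b is neither penalized nor constrained: for any w its optimum is
    mean(y) - mean(X) @ w, so centering X and y removes it, and it is recovered
    after the weights are fitted by cyclic coordinate descent.
    """
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    w = np.zeros(X.shape[1])
    denom = np.sum(Xc ** 2, axis=0) + lam
    resid = yc.copy()                                      # equals yc - Xc @ w
    for _ in range(n_sweeps):
        for j in range(X.shape[1]):
            resid += Xc[:, j] * w[j]                       # remove feature j's contribution
            w[j] = max(0.0, Xc[:, j] @ resid / denom[j])   # 1-D ridge update, clipped at 0
            resid -= Xc[:, j] * w[j]                       # add back the updated contribution
    b = y_mean - x_mean @ w
    return w, b


def cross_validated_fit(rdms, judgments, train_imgs, test_imgs, lam):
    """Fit RDM weights on training-image pairs; score on pairs of held-out images."""
    ti, tj = pair_indices(train_imgs)
    w, b = fit_nonneg_ridge(rdms[ti, tj], judgments[ti, tj], lam)
    si, sj = pair_indices(test_imgs)
    pred = b + rdms[si, sj] @ w                            # weighted-RDM prediction
    r = np.corrcoef(pred, judgments[si, sj])[0, 1]         # held-out fit (Pearson r)
    return w, b, r
```

Because only pairs whose two images both fall in the held-out set are scored, the fitted weights never see the test images. An equivalent exact solver would be `scipy.optimize.nnls` applied to the centered design matrix stacked on `sqrt(lam) * I`, with zeros appended to the target; coordinate descent was used here only to avoid the SciPy dependency.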