Summary

The use of instrumental variables for estimating the effect of an exposure on an outcome is popular in econometrics, and increasingly so in epidemiology. This increasing popularity may be attributed to the natural occurrence of instrumental variables in observational studies that incorporate elements of randomization, either by design or by nature (e.g., random inheritance of genes). Instrumental variables estimation of exposure effects is well established for continuous outcomes and to some extent for binary outcomes. It is, however, largely lacking for time-to-event outcomes because of complications due to censoring and survivorship bias. In this paper, we make a novel proposal under a class of structural cumulative survival models which parameterize time-varying effects of a point exposure directly on the scale of the survival function; these models are essentially equivalent to a semi-parametric variant of the instrumental variables additive hazards model. We propose a class of recursive instrumental variable estimators for these exposure effects, and derive their large sample properties along with inferential tools. We examine the performance of the proposed method in simulation studies and illustrate it in a Mendelian randomization study to evaluate the effect of diabetes on mortality using data from the Health and Retirement Study. We further use the proposed method to investigate the potential benefit of breast cancer screening on subsequent breast cancer mortality based on the HIP-study.

Keywords: Causal effect, Confounding, Current treatment interaction, G-estimation, Instrumental variable, Mendelian randomization

1. Introduction

A key concern in most analyses of observational studies is whether sufficient and appropriate adjustment has been made for confounding of the association between the exposure of interest and outcome. This concern can be mitigated to some extent when data are available on an instrumental variable. This is a variable which is (a) associated with the exposure, (b) has no direct effect on the outcome other than through the exposure, and (c) whose association with the outcome is not confounded by unmeasured variables (see e.g. Hernán and Robins, 2006). Condition (a) is empirically verifiable, but conditions (b) and (c) are not. However, condition (c) can sometimes be justified in observational studies that incorporate elements of randomization, either by design or by nature (Didelez and Sheehan, 2007). The plausibility of condition (b) can sometimes be argued on the basis of design elements (e.g. blinding) or a priori contextual knowledge.

Instrumental variables have a long tradition in econometrics (e.g., Angrist and Krueger, 2001). They are increasingly popular in epidemiology due to a revival of Mendelian randomization studies (Katan, 1986; Davey-Smith and Ebrahim, 2003). Such studies focus on modifiable exposures known to be affected by certain genetic variants. They then adopt the notion that an association between these genetic variants and the outcome of interest (e.g., all-cause mortality) can only be explained by an effect of the exposure on the outcome. This reasoning presupposes that the genetic variants studied satisfy the aforementioned instrumental variable conditions (Didelez and Sheehan, 2007). That is, they should have no effect on the outcome (e.g., all-cause mortality) other than by modifying the exposure, which can sometimes be justified based on a biological understanding of the functional genetic mechanism. Moreover, their association with the outcome should be unconfounded, which is sometimes realistic because of Mendelian randomization: the fact that genes are transferred randomly from parents to their offspring.

Instrumental variables estimation of exposure effects is well established for continuous outcomes that obey linear models. Two-stage least squares (2SLS) estimation proceeds via two ordinary least squares regressions: regressing the exposure variable on the instrument in the first stage, and next regressing the outcome variable on the predicted exposure value in the second stage. This approach presumes that the additive exposure effect is the same at all levels of the unmeasured confounders (Hernán and Robins, 2006), which is rarely plausible in the analysis of event times. The IV-analysis of event times is further complicated by censoring and by the fact that the instrumental variables assumptions, even when valid for the initial study population, are typically violated within the risk sets composed of subjects who survive up to a given time. Progress is often made via heuristic adaptations of 2SLS estimation, whereby the second stage regression is substituted by a Cox regression (see Tchetgen Tchetgen et al. (2015), and Rassen et al. (2008) and Cai et al. (2011) for related approaches for dichotomous outcomes), but these have no formal justification outside the limited context of rare events (Tchetgen Tchetgen et al., 2015).
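The two regressions that make up 2SLS for a continuous outcome can be sketched as follows. This is a minimal illustration only: the function name `two_stage_least_squares` and the toy data-generating values are assumptions made for the example and do not come from the paper.

```python
import numpy as np

def two_stage_least_squares(g, x, y):
    """2SLS slope with one instrument g, one exposure x and outcome y (1-D arrays)."""
    # Stage 1: ordinary least squares of the exposure on the instrument.
    Z = np.column_stack([np.ones(len(g)), g])
    gamma, *_ = np.linalg.lstsq(Z, x, rcond=None)
    x_hat = Z @ gamma
    # Stage 2: ordinary least squares of the outcome on the predicted exposure.
    W = np.column_stack([np.ones(len(x_hat)), x_hat])
    beta, *_ = np.linalg.lstsq(W, y, rcond=None)
    return beta[1]

# Toy data (hypothetical values): u confounds the x-y association.
rng = np.random.default_rng(0)
n = 20000
g = rng.binomial(1, 0.5, n).astype(float)
u = rng.normal(size=n)                       # unmeasured confounder
x = 0.5 * g + u + rng.normal(size=n)
y = 1.0 * x - 2.0 * u + rng.normal(size=n)   # true exposure effect is 1.0

beta_2sls = two_stage_least_squares(g, x, y)
beta_ols = np.polyfit(x, y, 1)[0]            # naive regression, confounded by u
```

In this toy setting the naive slope is pulled far from the true value by the confounder, whereas the 2SLS slope is not; the example says nothing about event-time outcomes, for which the text explains that this simple recipe lacks justification.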

To the best of our knowledge, the first formal IV-approach for the analysis of event times was described in Robins and Tsiatis (1991), who parameterised the exposure effect under a structural accelerated failure time model and developed G-estimation methods for it. Their development is very general, and, in particular, can handle continuous exposures. However, recurring problems in applications have been the difficulty in finding solutions to the estimating equations and obtaining estimators with good precision. This is related to the use of an artificial censoring procedure, where some subjects with observed event times are censored in the analysis in order to maintain unbiased estimating equations. This procedure may lead to an enormous information loss. Moreover, it leads to non-smooth estimating equations (Joffe et al., 2012), so that even simple models are difficult to fit. Loeys, Goetghebeur and Vandebosch (2005) proposed an alternative approach based on structural proportional hazards models. Their development does not require the use of recensoring, but is more parametric than that of Robins and Tsiatis (1991) as it requires modeling the exposure distribution. It is moreover limited to settings with a binary instrument and constant exposure at one level of the instrument, which is characteristic of placebo-controlled randomized experiments without contamination. Cuzick et al. (2007) relax this limitation by adopting a principal stratification approach but, like other such approaches (see e.g. Abadie, 2003; Nie, Cheng and Small, 2011), restrict their development to binary exposure and instrumental variables. More recently, Tchetgen Tchetgen et al. 
(2015) independently demonstrated the validity of two-stage estimation approaches in additive hazard models for event times when the exposure obeys a particular location shift model (see Li, Fine and Brookhart (2015) for a related approach under a more restrictive model; other related approaches are discussed in Tchetgen Tchetgen et al., 2015). In this article, we avoid restrictions on the exposure distribution and develop IV-estimators under a semiparametric structural cumulative survival model that is closely related to, but less restrictive than, the additive hazard model in Tchetgen Tchetgen et al. (2015) and Li, Fine and Brookhart (2015). The proposed approach is general in that it can handle arbitrary exposures and instrumental variables, and can accommodate adjustment for baseline covariates. It neither requires modelling the exposure distribution nor the association between covariates and outcome, and it naturally deals with administrative censoring and certain forms of dependent censoring. Picciotto et al. (2012) studied the different problem of adjusting for time-varying confounding when estimating the effect of a time-varying exposure on a survival outcome. While we also make use of the structural cumulative failure time model, we do this for handling the different problem of estimating the effect of an exposure on a survival outcome in the presence of unobserved confounding using an instrumental variable. Because of this and the fact that we make use of semi-parametric continuous-time models, in contrast to Picciotto et al. (2012) who focus on parametric discrete-time models, the recursive estimators that we propose cannot be immediately compared with those in Picciotto et al. (2012). A further strength of our paper is that it develops asymptotic inference for the proposed recursive estimators, which is currently lacking for G-estimators in structural cumulative failure time models. 
The semiparametric estimator that we propose requires only a correct model for the conditional mean of the instrumental variable, given covariates, for consistency. Besides deriving its large sample properties, we also develop inferential tools that allow us, for instance, to investigate whether the exposure effect changes over time. We examine the performance of the proposed method in simulation studies and two empirical studies.

2. Model specification and estimation

2.1 Basics

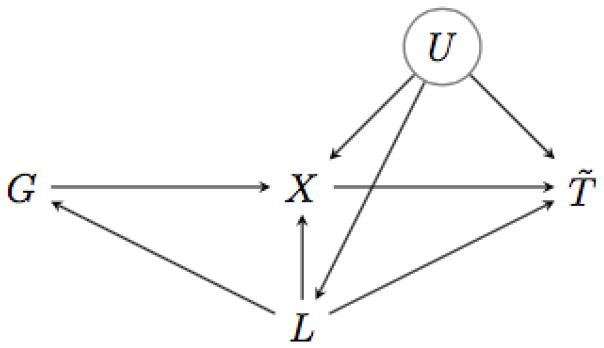

Our goal is to estimate the effect of an arbitrary exposure X on an event time T̃ under the assumption that G is an instrumental variable, conditional on a covariate set L. A data-generating mechanism that satisfies this assumption is depicted in the causal diagram (Pearl, 2009) of Figure 1. Here, the instrumental variables assumptions are guaranteed by the absence of a direct effect of G on T̃, and by the absence of effects of the unmeasured confounder U on G, and of G on U.

Figure 1.

Causal Directed Acyclic Graph. G is the instrument, X the exposure variable and T̃ the time-to-event outcome. The potential unmeasured confounders are denoted by U, and the observed confounders of the G-T̃ association by L.

To provide insight, we will start by considering uncensored survival data under the following semi-parametric variant of the additive hazards model (Aalen, 1980):

$$E\{d\tilde N(t) \mid \mathcal{F}_{t-}, G, X, L, U\} = \{d\Omega(t, L, U) + dB_X(t)\,X\}\,\tilde R(t), \tag{1}$$

where Ñ(t) = I(T̃ ≤ t) denotes the counting process, $\mathcal{F}_t$ the history spanned by Ñ(t), R̃(t) = I(t ≤ T̃) is the at risk indicator, Ω(t, L, U) is an unknown, non-negative function of time, L and U, and BX(t) is an unknown scalar at each time t > 0. Note that the right-hand side of this model does not involve G because of the instrumental variables assumptions, which imply that T̃ and G are conditionally independent, given X, L and U. Note furthermore that we explicitly choose to leave Ω(t, L, U) unspecified because U is unmeasured, thus making assumptions about the hazard’s dependence on U rather delicate.

Under model (1),

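$$\frac{P(\tilde T > t \mid X = x, G, L, U)}{P(\tilde T > t \mid X = 0, G, L, U)} = \exp\{-B_X(t)\,x\}, \tag{2}$$

which captures the exposure effect of interest by virtue of conditioning on the unmeasured confounder U. By the collapsibility of the relative risk (or the related collapsibility of the hazard difference (Martinussen and Vansteelandt, 2013)), this is also equal to the directly standardized relative survival risk:

$$\frac{E\{P(\tilde T > t \mid X = x, G, L, U) \mid G, L\}}{E\{P(\tilde T > t \mid X = 0, G, L, U) \mid G, L\}},$$

where the averaging is over the conditional distribution of U given (G, L). Letting T̃x, for each fixed x, denote the potential outcome that would have been observed if the exposure were set to x by some intervention, this can also be written as

$$\frac{P(\tilde T_x > t \mid G, L)}{P(\tilde T_0 > t \mid G, L)} = \exp\{-B_X(t)\,x\}. \tag{3}$$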

This can be seen because, by virtue of U being sufficient to adjust for confounding of the effect of X on T̃, we have that T̃x is conditionally independent of X, given U, G, L.

That the effect exp {−BX(t)x} can also be defined without making reference to the unmeasured confounder U (that is, without conditioning on U) is important. Indeed, the lack of data on U as well as the lack of a precise understanding of the variables contained inside U, would otherwise make interpretation difficult (Vansteelandt et al., 2011).

Model (1) is closely related to the structural cumulative survival model:

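$$\frac{P(\tilde T > t \mid X = x, G, L)}{P(\tilde T_0 > t \mid X = x, G, L)} = \exp\{-B_X(t)\,x\}. \tag{4}$$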

This model is slightly less restrictive than model (1). It makes no assumptions as to how the unmeasured confounders are associated with the event time. It moreover models the effect of setting the exposure to zero, within exposure subgroups rather than the entire population. By evaluating effects within exposure subgroups, the parameter BX(t) in model (4) thus encodes a type of treatment effect in the treated. Under the additional assumption that there is no current treatment interaction (Hernán and Robins, 2006), a population-averaged interpretation can be made. In particular, suppose that within levels of G and L, the effect of exposure level x versus 0 on the survival function is the same for subjects with observed exposure X = x as for subjects with a different exposure level in the following sense

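$$\frac{P(\tilde T_x > t \mid X = x, G, L)}{P(\tilde T_0 > t \mid X = x, G, L)} = \frac{P(\tilde T_x > t \mid G, L)}{P(\tilde T_0 > t \mid G, L)}. \tag{5}$$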

Then model (4) along with the assumption of no current treatment interaction implies (3), so that BX(t) captures a population-averaged effect (as is also the case under model (1)).

Under the instrumental variables assumptions that X and G are associated, conditional on L, and that T̃0 is conditionally independent of G, given L, the estimators of BX(t) that we will propose in the next section will be consistent estimators of BX(t) in both models (1) and (4). Condition (5) is not required for the estimation methods that we develop later on; it is only needed to provide the population-level interpretation given in (3).

2.2 Estimation

We will allow for the event time T̃ to be subject to right-censoring. In that case, we only observe whether or not T̃ exceeds a random censoring time C, i.e. we observe δ = I(T̃ ≤ C), along with the first time either failure or censoring occurs, i.e. we also observe T = min(T̃, C). It is assumed that T̃ and C are independent given L, G, X, U, and that P(C > t|X, G, L, U) = P(C > t|L, U). The censoring distribution may thus depend on unobserved characteristics, but we do not have to model such dependencies; in fact, no modelling of the censoring distribution is needed under this condition. In the Web Appendix we give an alternative condition on the censoring that leads to the same estimating equation; see display (6). Let (Ti, δi, Li, Gi, Xi), i = 1, …, n, denote n independent identically distributed replicates under the structural cumulative failure time model (1) together with the instrumental variables assumptions and the independent censoring assumption. The counting processes Ni(t) = I(Ti ≤ t, δi = 1), i = 1, …, n, are observed in the time interval [0, τ], where τ is some finite time point. Further, we define the at risk indicator Ri(t) = I(t ≤ Ti), i = 1, …, n.

The crux of our estimation method for BX(t), t > 0 is that once the exposure effect has been eliminated from the event time, it only retains a dependence on L and U. It thus becomes conditionally independent of the instrumental variable, given L. In particular, using arguments similar to those of Martinussen et al. (2011), we eliminate the exposure effect from the increments dN(t) by calculating dN(t) − dBX(t)XR̃(t), as suggested by (1), and we will eliminate the exposure effect from the at risk indicators R(t) by calculating R(t) exp {BX(t)X}, as suggested by (2). It follows that

$$E\big[\{G - E(G \mid L)\}\, e^{B_X(t)X} R(t)\,\{dN(t) - dB_X(t)\,X\}\big] = 0 \tag{6}$$

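for each t > 0. To formally show this we note that

$$dN(t) = R(t)\{d\Omega(t, L, U) + dB_X(t)\,X\} + dM(t),$$

where M(t) is the counting process martingale with respect to the filtration $\mathcal{F}_t$ generated by the counting process and L, G, X, U. Thus

$$E\big[\{G - E(G \mid L)\}\, e^{B_X(t)X} R(t)\,\{dN(t) - dB_X(t)\,X\}\big] = E\big[\{G - E(G \mid L)\}\, e^{B_X(t)X} R(t)\,d\Omega(t, L, U)\big] = E\big[\{G - E(G \mid L)\}\, P(C \ge t \mid L, U)\, e^{-\Omega(t, L, U)}\,d\Omega(t, L, U)\big] = 0;$$

here, the last equality follows because G ⫫ U|L. In (6), the G−E(G|L) can be replaced by H(G, L)−E{H(G, L)|L} for a general H, but for simplicity we keep (6) as it is.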

The unbiasedness of equation (6) suggests a way of estimating the increments dBX(t) by solving equation (6) for each t with population expectations replaced by sample analogs. This delivers the recursive estimator B̂X(t) defined by

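$$\hat B_X(t, \hat\theta) = \int_0^t \frac{\sum_i G_i^c\, e^{\hat B_X(s-,\hat\theta)X_i}\,dN_i(s)}{\sum_i G_i^c\, R_i(s)\, e^{\hat B_X(s-,\hat\theta)X_i}\,X_i}, \tag{7}$$

where $G_i^c = G_i - E(G_i \mid L_i; \hat\theta)$, with E(Gi|Li; θ) a parametric model for E(Gi|Li) and θ̂ a consistent estimator of θ (e.g., a maximum likelihood estimator).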
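A forward recursion of this kind can be sketched in a few lines. The sketch assumes that each increment of the estimator at an observed event time t is the ratio of Σi Gᶜi exp{B̂X(t−)Xi} dNi(t) to Σi Gᶜi Ri(t) exp{B̂X(t−)Xi} Xi, with Gᶜi = Gi − E(Gi|Li; θ̂), which is what solving the sample version of (6) for dBX(t) gives. The function name, the no-ties assumption and the stopping rule for a vanishing denominator are choices made for this illustration, not part of the paper.

```python
import numpy as np

def recursive_iv_estimator(time, delta, x, g_centred, tol=1e-12):
    """Forward recursion for B_hat_X(t); returns (event times, B_hat at those times).

    time      : observed times T_i = min(event time, censoring time)
    delta     : event indicators (1 = event observed)
    x         : exposure values X_i
    g_centred : G_i minus its fitted conditional mean given L_i
    Assumes no tied event times.
    """
    order = np.argsort(time)
    time, delta, x, gc = time[order], delta[order], x[order], g_centred[order]
    event_times, b_path = [], []
    b = 0.0                                    # B_hat_X(0) = 0
    for j in np.flatnonzero(delta == 1):
        at_risk = time >= time[j]              # R_i(t) = I(t <= T_i)
        w = gc * np.exp(b * x)                 # G_i^c * exp{B_hat_X(t-) X_i}
        denom = np.sum(w[at_risk] * x[at_risk])
        if abs(denom) < tol:                   # increment undefined: stop here
            break
        b += w[j] / denom                      # jump of B_hat_X at this event time
        event_times.append(time[j])
        b_path.append(b)
    return np.array(event_times), np.array(b_path)

# Tiny hand-checkable example (hypothetical numbers).
t_obs = np.array([1.0, 2.0, 3.0, 4.0])
d_obs = np.array([1, 1, 1, 1])
x_obs = np.array([1.0, 0.0, 1.0, 0.0])
gc_obs = np.array([0.5, -0.5, 0.5, -0.5])
ev_times, b_hat = recursive_iv_estimator(t_obs, d_obs, x_obs, gc_obs)
```

The loop is quadratic in the sample size, which keeps the code close to the formula; a production implementation would update the risk-set sums incrementally and would need a rule for ties.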

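The estimator (7) is given by a counting process integral, thus only changing values at observed death times. Because of its recursive structure, we calculate it forward in time, starting from B̂X(0) = 0. In the special case where the exposure X is binary, it can be calculated analytically as shown below. With $A(t) = e^{B_X(t)}$, so that $e^{B_X(t)X} = 1 - X + XA(t)$ for binary X, the equation (6) (with population expectations substituted by sample averages and G − E(G|L) by its estimate $G_i^c = G_i - E(G_i \mid L_i; \hat\theta)$) leads to

$$dA(t) = \frac{\sum_i G_i^c (1 - X_i)\,dN_i(t) + A(t)\sum_i G_i^c X_i\,dN_i(t)}{\sum_i G_i^c X_i R_i(t)}.$$

When replacing A(t) with A(t−) on the right side of this expression and integrating, we get the Volterra equation (see Andersen et al. (1993), p. 91)

$$A(t) = 1 + V_0(t) + \int_0^t A(s-)\,dV_1(s),$$

where

$$V_0(t) = \int_0^t \frac{\sum_i G_i^c (1 - X_i)\,dN_i(s)}{\sum_i G_i^c X_i R_i(s)}, \qquad V_1(t) = \int_0^t \frac{\sum_i G_i^c X_i\,dN_i(s)}{\sum_i G_i^c X_i R_i(s)},$$

and the solution is given by

$$\hat A(t) = \prod_{s \le t}\{1 + dV_1(s)\} + \int_0^t \prod_{s < u \le t}\{1 + dV_1(u)\}\,dV_0(s).$$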

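If we assume that $B_X(t) = \int_0^t \beta_X(s)\,ds$, and further that βX(t) = βX, that is assuming a time-constant effect, an estimator of βX may be obtained as

$$\hat\beta_X = \int_0^\tau w(t)\,d\hat B_X(t), \tag{8}$$

with $w(t) = \tilde w(t) / \int_0^\tau \tilde w(s)\,ds$, w̃(t) = R•(t) = Σi Ri(t).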
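A time-constant effect estimate of this type can be computed directly from the jumps of B̂X(t). The sketch below assumes that (8) is the weighted integral ∫ w(t) dB̂X(t) over [0, τ], with weights proportional to the number at risk R•(t) and normalised to integrate to one; the normalisation and the function name are assumptions made for this illustration.

```python
import numpy as np

def constant_effect_estimate(time, event_times, b_path, tau):
    """Weighted average slope of B_hat_X over [0, tau], weights proportional to R.(t).

    time        : observed times T_i of all subjects
    event_times : times at which B_hat_X jumps (increasing)
    b_path      : values of B_hat_X at those times
    """
    # Integral of R.(s) over [0, tau] equals the total follow-up time up to tau.
    total_time_at_risk = np.sum(np.minimum(time, tau))
    increments = np.diff(np.concatenate([[0.0], b_path]))   # jumps dB_hat_X(t)
    keep = event_times <= tau
    # R.(t) at each jump time: number of subjects with T_i >= t.
    n_at_risk = np.array([np.sum(time >= t) for t in event_times[keep]])
    return np.sum(n_at_risk * increments[keep]) / total_time_at_risk

# Hand-checkable example (hypothetical numbers).
beta_hat = constant_effect_estimate(
    time=np.array([1.0, 2.0, 3.0, 4.0]),
    event_times=np.array([1.0, 2.0, 3.0]),
    b_path=np.array([0.5, 0.2, 1.2]),
    tau=3.5,
)
```

Weighting by the number at risk gives most influence to early time points, where B̂X(t) is estimated from the largest risk sets.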

3. Large sample results

The following proposition, whose proof is given in the Appendix, shows that B̂X(t) is a uniformly consistent estimator of BX(t), and moreover gives its asymptotic distribution.

Proposition 1

Under model (4) with the assumption that G is an instrumental variable, conditional on L, and given the technical conditions listed in the Appendix, the IV estimator B̂X(t) is a uniformly consistent estimator of BX(t). Furthermore, $W_n(t) = n^{1/2}\{\hat B_X(t, \hat\theta) - B_X(t)\}$ converges in distribution to a zero-mean Gaussian process with variance Σ(t). A uniformly consistent estimator Σ̂(t) of Σ(t) is given below.

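Let $\epsilon_i(t)$, i = 1, …, n be the iid zero-mean processes given by expression (13) in the Appendix. From the proof in the Appendix, it then follows that Wn(t) is asymptotically equivalent to $n^{-1/2}\sum_{i=1}^n \epsilon_i(t)$. The variance Σ(t) of the limit distribution can thus be consistently estimated by

$$\hat\Sigma(t) = n^{-1}\sum_{i=1}^n \hat\epsilon_i(t)^2,$$

where $\hat\epsilon_i(t)$ is obtained from $\epsilon_i(t)$ by replacing unknown quantities with their empirical counterparts. These results can be used to construct a pointwise confidence band. The asymptotic behavior of the estimator (8) is easily obtained since

$$n^{1/2}(\hat\beta_X - \beta_X) = \int_0^\tau w(t)\,dW_n(t),$$

with w(t) the weight function in (8).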

To study temporal changes, it is more useful to consider a uniform confidence band. This and tests of the hypothesis of a linear (cumulative) causal effect

$$H_0: B_X(t) = \beta_X t$$

can easily be derived based on the above iid representation as also outlined in Martinussen (2010). The above hypothesis can be tested using the following test statistic

$$\sup_{t \le \tau} \big| n^{1/2}\{\hat B_X(t) - \hat\beta_X t\} \big| \tag{9}$$

and since, under the null, $n^{1/2}\{\hat B_X(t) - \hat\beta_X t\} = n^{1/2}\{\hat B_X(t) - B_X(t) - (\hat\beta_X - \beta_X)t\}$, it is easy to get the iid-representation of the test process. This development is based on the following resampling approach of Lin et al. (1993). Let $Q_1, \ldots, Q_n$ be independent standard normal variates. Then, given the data,

$$\hat W(t) = n^{-1/2}\sum_{i=1}^n \hat\epsilon_i(t)\,Q_i,$$

with $\hat\epsilon_i(t)$ the estimated iid processes of the representation above, also converges in distribution to a zero-mean Gaussian process with variance Σ(t). The limit distribution can thus be evaluated by generating a large number, M, of replicates Ŵm(t), m = 1, …, M. The causal null hypothesis that BX(t) = 0 for all t can thus for example be tested by investigating how extreme the test statistic $\sup_{t \le \tau} |n^{1/2}\hat B_X(t)|$ is in the distribution of $\sup_{t \le \tau} |\hat W_m(t)|$, m = 1, …, M.
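The resampling scheme for the supremum test of the causal null hypothesis can be sketched as follows. The sketch takes the estimated iid processes as given: `eps_hat` is a hypothetical n × K array holding them on a grid of K time points (their form, expression (13) of the Appendix, is not reproduced here), and `b_hat` holds B̂X(t) on the same grid. Each replicate multiplies the estimated processes by fresh standard normal variates and sums them, scaled by n^(−1/2).

```python
import numpy as np

def pointwise_variance(eps_hat):
    """Estimate Sigma(t) on the grid as the average squared estimated iid process."""
    return np.mean(eps_hat ** 2, axis=0)

def sup_test_pvalue(b_hat, eps_hat, n_resamples=1000, seed=0):
    """Resampling p-value for H0: B_X(t) = 0 for all t on the grid.

    b_hat   : (K,) estimate of B_X on a time grid
    eps_hat : (n, K) estimated iid processes on the same grid (assumed given)
    """
    n = eps_hat.shape[0]
    rng = np.random.default_rng(seed)
    observed = np.max(np.abs(np.sqrt(n) * b_hat))      # sup_t |n^{1/2} B_hat_X(t)|
    q = rng.standard_normal((n_resamples, n))          # standard normal multipliers
    w_resampled = q @ eps_hat / np.sqrt(n)             # (M, K) replicates of W_hat
    sup_resampled = np.max(np.abs(w_resampled), axis=1)
    return np.mean(sup_resampled >= observed)

# Illustration with arbitrary (hypothetical) inputs.
eps_demo = np.random.default_rng(1).normal(size=(50, 10))
p_null = sup_test_pvalue(np.zeros(10), eps_demo)       # statistic is 0
p_far = sup_test_pvalue(np.full(10, 1e6), eps_demo)    # statistic is enormous
```

The same replicates can be reused for a uniform confidence band by taking a quantile of the resampled suprema after standardising by the pointwise standard errors.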

4. Numerical results

4.1 Simulation study

To investigate the properties of our proposed methods with practical sample sizes, we conducted a simulation study. We generated data according to the data-generating mechanism of Figure 1 with the following specific models, where we leave out the covariate L for simplicity. We considered two different settings where the exposure variable was continuous and binary, respectively. In the first setting, we took G to be binary with P(G = 1) = 0.5, and generated X and U, given G, from a normal distribution with E(X|G = g) = 0.5 + γGg, E(U|G = g) = 1.5 and with variance-covariance matrix such that Var(X|G) = Var(U|G) = 0.25 and Cov(X, U|G) = −1/6. The parameter γG determines the size of the correlation between the exposure and the instrumental variable; specifically, we looked at correlations ρ equal to 0.3 and 0.5. We generated T̃ according to the hazard model E{dÑ(t)|T ≥ t, X, G, U} = β0(t) + βX(t)X + βU(t)U, with β0(t) = 0.25, βX(t) = 0.1 and βU(t) = 0.15. Twenty percent were potentially censored according to a uniform distribution on (0, 3.5), and the rest were censored at t = 3.5, corresponding to the study being closed at this time point, leading to a cumulative censoring rate of around 20%. Under this model, as seen in Section 2.2, (4) holds with $B_X(t) = \int_0^t \beta_X(s)\,ds$. Under this model it further holds that E{dÑ(t)|T ≥ t, X, G} = β̃0(t) + β̃G(t)G + β̃X(t)X, with β̃X(t) = βX(t) − 2βU(t)/3 = 0, so the naive Aalen estimator (using X and G as covariates) is biased. In this specific setting θ = E(G), and we therefore calculated the estimator given in (7) with θ̂ = Ḡ, along with the estimator β̂X given in (8), where we took τ = 3. For this scenario, we considered sample sizes 1600 and 3200 when ρ = 0.3, and sample sizes 800 and 1600 when ρ = 0.5.

Simulation results concerning B̂X(t), based on 2000 runs for each configuration, are given in Table 1, where (average) biases are reported at time points t = 1, 2, 3 for B̂X(t) along with the coverage probability of 95% pointwise confidence intervals, CP(B̂X(t)). Results concerning β̂X are given in the first half of Web Table 1.
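The data-generating mechanism of the first (continuous exposure) setting can be sketched as below. Two details are assumptions of this sketch rather than statements from the paper: the instrument strength is set to γG = ρ/√(1 − ρ²), which is the value that yields Corr(X, G) = ρ under the stated variances, and the hazard is clipped at a tiny positive value to guard against the (very unlikely) event of a negative additive hazard. The function name is likewise hypothetical.

```python
import numpy as np

def simulate_continuous_setting(n, rho, seed=0):
    """Simulate (T, delta, X, G) for the continuous-exposure setting."""
    rng = np.random.default_rng(seed)
    gamma_g = rho / np.sqrt(1.0 - rho ** 2)    # assumption: gives Corr(X, G) = rho
    g = rng.binomial(1, 0.5, n).astype(float)
    # (X, U) given G: bivariate normal, variances 0.25, covariance -1/6.
    cov = np.array([[0.25, -1.0 / 6.0], [-1.0 / 6.0, 0.25]])
    noise = rng.multivariate_normal([0.0, 0.0], cov, size=n)
    x = 0.5 + gamma_g * g + noise[:, 0]
    u = 1.5 + noise[:, 1]
    # Additive hazard, constant in time: 0.25 + 0.1 X + 0.15 U.
    hazard = np.clip(0.25 + 0.1 * x + 0.15 * u, 1e-8, None)   # assumption: clip guard
    t_event = rng.exponential(1.0 / hazard)
    # 20% potentially censored uniformly on (0, 3.5); the rest censored at 3.5.
    c = np.where(rng.random(n) < 0.2, rng.uniform(0.0, 3.5, n), 3.5)
    time = np.minimum(t_event, c)
    delta = (t_event <= c).astype(int)
    return time, delta, x, g

time_sim, delta_sim, x_sim, g_sim = simulate_continuous_setting(20000, rho=0.3)
g_centred_sim = g_sim - g_sim.mean()           # theta_hat = sample mean of G
```

Because the hazard does not depend on time in this setting, event times are exponential given (X, U); a time-varying βX(t) would require inverting the cumulative hazard piecewise instead.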

Table 1.

Continuous exposure case. Time-constant exposure effect. Bias of B̂X(t), empirical standard error, sd(B̂X(t)), average estimated standard error, see(B̂X(t)), and coverage probability of 95% pointwise confidence intervals, CP(B̂X(t)), based on the instrumental variables estimator, as a function of sample size n and at different strengths ρ (correlation) of the instrumental variable. Bias of B̃X(t) is the bias of the naive Aalen estimator.

| | n | ρ = 0.3, t = 1 | ρ = 0.3, t = 2 | ρ = 0.3, t = 3 | n | ρ = 0.5, t = 1 | ρ = 0.5, t = 2 | ρ = 0.5, t = 3 |
|---|---|---|---|---|---|---|---|---|
| Bias B̂X(t) | 1600 | −0.003 | −0.001 | −0.007 | 800 | −0.002 | −0.004 | −0.015 |
| sd (B̂X(t)) | | 0.139 | 0.242 | 0.404 | | 0.109 | 0.187 | 0.303 |
| see (B̂X(t)) | | 0.139 | 0.245 | 0.439 | | 0.107 | 0.187 | 0.314 |
| 95% CP(B̂X(t)) | | 95.4 | 96.5 | 98.1 | | 95.2 | 96.1 | 97.5 |
| Bias B̃X(t) | | −0.101 | −0.201 | −0.300 | | −0.099 | −0.197 | −0.297 |
| Bias B̂X(t) | 3200 | −0.003 | −0.005 | −0.014 | 1600 | 0.004 | 0.004 | −0.002 |
| sd (B̂X(t)) | | 0.094 | 0.170 | 0.267 | | 0.075 | 0.131 | 0.209 |
| see (B̂X(t)) | | 0.096 | 0.166 | 0.262 | | 0.075 | 0.130 | 0.206 |
| 95% CP(B̂X(t)) | | 95.6 | 95.1 | 96.2 | | 95.0 | 95.5 | 95.7 |
| Bias B̃X(t) | | −0.099 | −0.200 | −0.296 | | −0.099 | −0.200 | −0.301 |

In all scenarios considered the naive Aalen estimator is, as expected, biased; see Table 1. Table 1 also shows that the proposed estimator B̂X(t) is essentially unbiased. In the case with sample size 1600 and correlation equal to 0.3, the estimated standard error at time point t = 3 is a bit too large, resulting in a coverage probability that is too high. However, the estimated standard error approaches the empirical standard deviation as the sample size goes up, and overall the 95% confidence intervals have close to nominal coverage. We also calculated the size of the sup-test (9) that investigates whether the constant exposure effects model is acceptable. For the four considered scenarios of (n, ρ): (1600, 0.3), (3200, 0.3), (800, 0.5), (1600, 0.5), it was 0.03, 0.04, 0.03 and 0.05, respectively. Hence, when sample size and correlation go up, the test has the correct size. The results concerning the constant effect estimator, β̂X, are reported in the first half of Web Table 1; the estimator is unbiased and its variability is well estimated, leading to satisfactory coverage probabilities, at least when the sample size goes up. When the exposure is continuous one may also calculate the 2SLS estimator β̌X of Tchetgen Tchetgen et al. (2015); see Web Table 1. This estimator is also unbiased, and it is slightly more efficient than the constant effects estimator given in this paper. This is not surprising, as the 2SLS estimator is targeted at this specific situation while the estimator β̂X is derived from an estimator that can handle much more general situations.

We also considered a setup where there was a time-varying exposure effect. Data were generated as described above except that βX(t) was now taken as βX(t) = 0.1I(t < 1.5) − 0.1I(1.5 ≤ t < 3). Inducing censoring as above resulted in a cumulative censoring rate of around 25%. Results from this study are given in Web Table 2, where we have dropped results for the naive Aalen estimator. From Web Table 2 we see again that the proposed estimator is unbiased and that the variability is well estimated, resulting in appropriate coverage. We also calculated the rejection rate of the sup-test (9). For the four considered scenarios of (n, ρ): (1600, 0.3), (3200, 0.3), (800, 0.5), (1600, 0.5), it was 0.07, 0.18, 0.13 and 0.31, respectively. We also ran the situation where n = 3200 and ρ = 0.5, and obtained a rejection rate of 0.61. We thus see, as expected, that the power of the test increases when correlation and sample size go up. We also calculated the constant effects estimators β̂X and β̌X; their mean in all four combinations of (n, ρ) was 0.04, showing that the constant effects estimators are not appropriate under this scenario.

We next considered settings where the exposure variable X was binary. In the first such setting we generated data as under the first scenario with βX(t) = 0.1, but instead of using the continuous version of X, call it now X̃, we used X = I(X̃ > 0.5).

We used the same censoring mechanism and also the same hazards model as under the first setting. For this scenario, we considered sample sizes 3200 and 6400 when ρ = 0.3, and sample sizes 1600 and 3200 when ρ = 0.5. Results, again based on 2000 runs for each configuration, are shown in Table 2. For the case (n = 3200, ρ = 0.3) the coverage probability is a bit too high at t = 3. In the other settings the estimator is unbiased and coverage is satisfactory. The results concerning the constant effect estimator, β̂X, are reported in the second half of Web Table 1; the estimator is unbiased and its variability is well estimated, leading to satisfactory coverage probabilities. We also see that the 2SLS estimator of Tchetgen Tchetgen et al. (2015) seems to be unbiased in this setting, although there is no theoretical underpinning of this. To look further into this, and to stress that 2SLS estimation relies on a correct specification of a model for the exposure X given the instrument G, we ran a final study as follows. The instrument G was taken to be normally distributed with mean 2 and variance 1.5². The unobserved U was taken to be 1.5Z² with Z generated as normal with mean 1 and variance 0.25². The exposure X was binary with P(X = 1|G, U) = expit{−1 + 0.2G + 0.5G² + U − E(U)}. In this way the correlation between X and G was approximately 0.56. We generated T̃ according to the hazard model E{dÑ(t)|T ≥ t, X, G, U} = 0.05 + 0.4X + 0.3U, and censored all at t = 2, resulting in approximately 25% censoring. We used sample sizes 1000 and 2000 with 1000 runs for each configuration. We calculated the 2SLS estimator in two ways using different first stage models; we denote the 2SLS estimator based on regressing X on G (despite X being binary) in the first stage by β̌1X and the 2SLS estimator based on a first stage logistic regression model using G as explanatory variable by β̌2X. We stress that the estimator suggested in this paper, β̂X, is not based on any modelling of X given G, in contrast to the 2SLS estimator. Results are given in Web Table 3, where it is seen that the estimator β̂X is unbiased while the two versions of the 2SLS estimator are both biased.

Table 2.

Binary exposure case. Bias of B̂X(t), empirical standard error, sd(B̂X(t)), average estimated standard error, see(B̂X(t)), and coverage probability of 95% pointwise confidence intervals, CP(B̂X(t)), based on the instrumental variables estimator, as a function of sample size n and at different strengths ρ (correlation) of the instrumental variable. Bias of B̃X(t) is the bias of the naive Aalen estimator.

| | n | ρ = 0.3, t = 1 | ρ = 0.3, t = 2 | ρ = 0.3, t = 3 | n | ρ = 0.5, t = 1 | ρ = 0.5, t = 2 | ρ = 0.5, t = 3 |
|---|---|---|---|---|---|---|---|---|
| Bias B̂X(t) | 3200 | 0.000 | 0.001 | −0.017 | 1600 | −0.000 | −0.005 | −0.022 |
| sd (B̂X(t)) | | 0.109 | 0.194 | 0.316 | | 0.102 | 0.183 | 0.306 |
| see (B̂X(t)) | | 0.109 | 0.194 | 0.331 | | 0.102 | 0.183 | 0.302 |
| 95% CP(B̂X(t)) | | 95.3 | 95.4 | 96.6 | | 95.7 | 95.6 | 96.1 |
| Bias B̃X(t) | | −0.082 | −0.164 | −0.248 | | −0.085 | −0.167 | −0.249 |
| Bias B̂X(t) | 6400 | −0.000 | −0.006 | −0.015 | 3200 | 0.001 | 0.001 | −0.005 |
| sd (B̂X(t)) | | 0.077 | 0.137 | 0.221 | | 0.071 | 0.128 | 0.202 |
| see (B̂X(t)) | | 0.077 | 0.135 | 0.216 | | 0.072 | 0.128 | 0.207 |
| 95% CP(B̂X(t)) | | 95.1 | 94.6 | 95.2 | | 95.1 | 95.2 | 95.9 |
| Bias B̃X(t) | | −0.082 | −0.167 | −0.250 | | −0.083 | −0.168 | −0.253 |

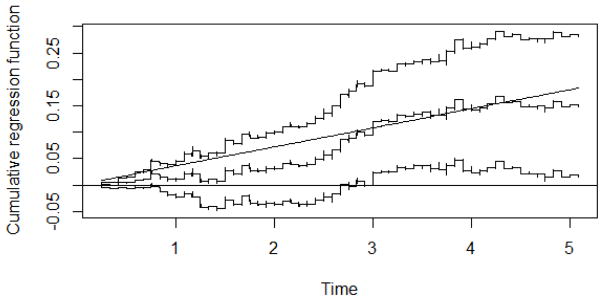

4.2 Application to the HRS on causal association between diabetes and mortality

We illustrate the proposed method using data from the Health and Retirement Study (HRS), a cohort initiated in 1992. The same data were used by Tchetgen Tchetgen et al. (2015) (TT) to investigate the causal association between diabetes and mortality. The HRS consists of persons aged 50 years or older and their spouses. There are genotype data for 12123 participants, but, like TT, we restrict our analyses to the 8446 non-Hispanic white persons with valid self-reported diabetes status at baseline. The average follow-up time was 4.10 years with a total of 644 deaths over 34055 person-years. We used an externally validated genetic risk score predictor of type 2 diabetes as IV. The risk score is based on 39 SNPs that were strongly associated with the diabetes status (Likelihood Ratio Test chi-square statistic equal to 176.75 with 39 degrees of freedom, P < 10⁻⁶). Like TT, we used as observed confounders (L) age, sex and the top 4 genomewide principal components to account for possible population stratification. The 2SLS control function approach used in TT is only valid if the instrument is binary, unless one makes a further linearity assumption concerning a conditional mean of the unobserved confounder(s); specifically, they assume that E{Ω(t, U)|G, X} is linear in G. This assumption is untestable. The method we propose in this paper is not restricted to binary instruments; it places no restrictions on the exposure or the instrument. The proposed approach furthermore allows the exposure effect to vary with time, unlike the proposal by TT. The analysis used here thus generalizes that of TT in several aspects. Figure 2 shows the estimated causal effect of diabetes status on mortality, B̂X(t), along with 95% pointwise confidence bands. The straight line corresponds to the constant effects estimator (8). From Figure 2 it seems reasonable to assume a time-constant exposure effect, which we can formally test using the statistic (9). It gives a p-value of 0.61, thus giving no evidence against the time-constant exposure effect model. The estimate of the time-constant exposure effect is β̂X = 0.036 with estimated standard error 0.0142, corresponding to the 95% confidence interval (0.008, 0.064). It suggests a causal effect of diabetes on all-cause mortality corresponding to an average of 3.6 additional deaths occurring for each year of follow-up in each 100 persons with diabetes alive at the start of the year, compared with each 100 diabetes-free persons alive at the start of the year, conditional on age and sex. This estimated effect is less than half of that obtained by TT, suggesting that the linearity assumption used in TT may not hold.
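The reported interval and the deaths-per-100 interpretation follow from simple arithmetic on the point estimate and its standard error, assuming the interval is the usual Wald interval with the normal quantile 1.96:

```python
# Point estimate and standard error as reported in the text.
beta_hat = 0.036
std_err = 0.0142

z = 1.96                                   # assumed normal quantile for a 95% Wald interval
lower = beta_hat - z * std_err
upper = beta_hat + z * std_err
extra_deaths_per_100_per_year = 100 * beta_hat

print(round(lower, 3), round(upper, 3))    # 0.008 0.064
```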

Figure 2.

HRS-study. Estimated causal effect of diabetes, B̂X(t), along with 95% pointwise confidence bands. The straight line corresponds to the constant effects estimator (8).

4.3 Application to the HIP trial on effectiveness of screening on breast cancer mortality

The Health Insurance Plan (HIP) of Greater New York was a randomized trial of breast cancer screening that began in 1963. The purpose was to see whether screening has any effect on breast cancer mortality. About 60000 women aged 40–60 were randomized into two approximately equally sized groups. Study women were offered screening examinations consisting of a clinical examination, usually by a surgeon, and a mammography. Three further annual examinations were offered in this group. Control women continued to receive their usual medical care. About 35% of the women who were offered screening refused to participate (non-compliers); see Web Table 4. There were large differences between the study women who participated and those who refused (Shapiro, 1977), and therefore the results from the “as treated” analysis may be doubtful due to unobserved confounding.

The same data were analysed by Joffe (2001) and, as he did, we focus on the first 10 years of follow-up. Since screening ended after three years, Joffe argued that focussing on the first 10 years of follow-up reduces attenuation of the effects of screening in the later periods, in which treatment was the same in both groups. We will later look into the possibility of a time-varying effect in a more formal way, as our estimator B̂X(t) captures this directly.

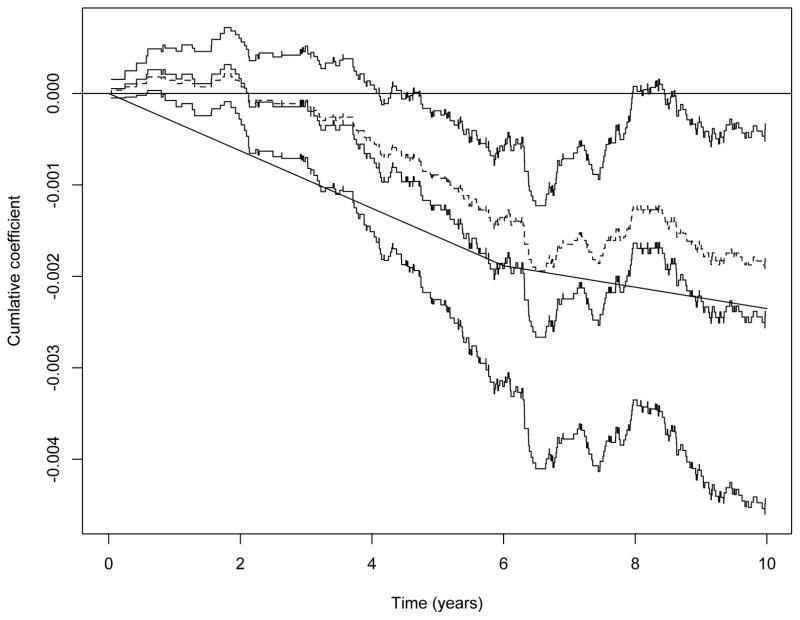

A Cox-regression intention-to-treat analysis showed that there is reduced mortality from breast cancer in the screening group (P = 0.01). Figure 3 shows the estimated cumulative regression coefficient from a corresponding Aalen additive hazards intention-to-treat analysis (broken curve), along with 95% confidence intervals, indicating a time-varying effect of the screening; there seems to be a beneficial effect in the first 6 years or so, and no effect thereafter. The supremum test shows evidence of an overall effect of screening (P = 0.005).

Figure 3.

HIP-study. Estimated causal effect of screening, B̂X(t), along with 95% pointwise confidence bands (solid curves) and the intention-to-treat estimate (broken curve). A two-parameter piecewise constant estimator, see (11), is also shown.

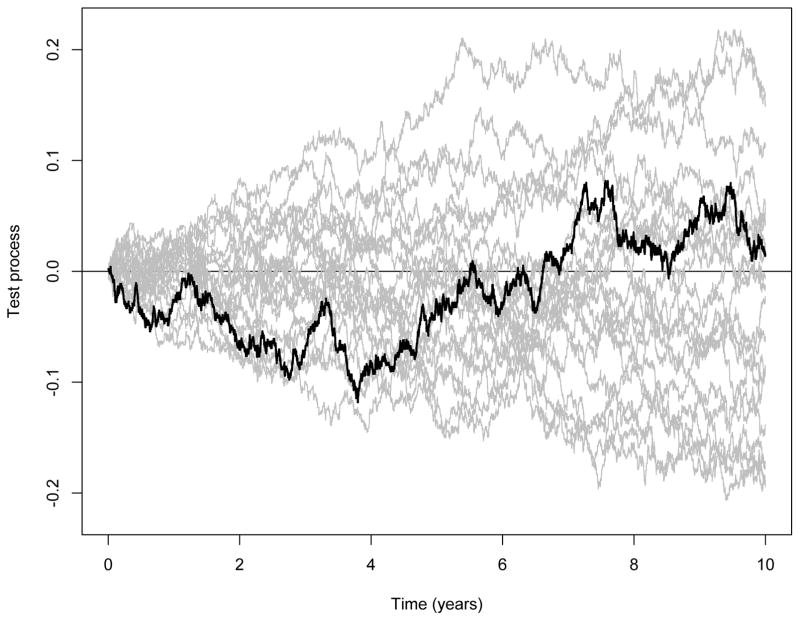

We will now apply our suggested method to estimate the causal effect of screening on breast cancer mortality, noting that there is an additional complication due to competing risks: in the first 10 years of follow-up there were 4221 deaths, but only 340 were deemed due to breast cancer. We will use the randomisation variable G as instrument and denote screening by X. The ith counting process in our estimator (7) is now the counting process that jumps at time t if the ith woman dies from breast cancer at that time. We show in a separate report, to be communicated elsewhere, that B̂X(t) contrasts the cumulative breast-cancer-specific death hazards among the treated between scenarios with versus without screening, under the assumption that the cause-specific hazard of death due to causes other than breast cancer for the screened women would have been the same at all times had they not been screened. To test this assumption one may use the test process

where N2i(t) is the ith counting process counting non-breast cancer death. Under the null of no causal effect of screening on the non-breast cancer death hazards, this process is a zero-mean process. One may further show that Hn(t) is asymptotically equivalent to a scaled sum of independent identically distributed zero-mean processes. Specifically,

considering here the case without covariates so that θ = E(Gi). In the previous display, ζ1(t) and ζ2(t) are the limits in probability of

respectively. This representation can be used to resample from the limit distribution of Hn(t) under the null. Further, a formal test based on, for instance, supt≤10 |Hn(t)| may be performed, and its significance can also be assessed by resampling from the limit distribution under the null. Figure 4 shows the test process Hn(t) along with 20 resampled processes from its limit distribution under the null; the observed test process does not appear to deviate from the resampled ones in any respect. The supremum test based on 1000 resamples results in a p-value of 0.63. Based on this, we proceed to calculate the estimator B̂X(t). This estimate, along with 95% pointwise confidence bands, is given in Figure 3, which also shows the intention-to-treat estimate (broken curve). The causal effect of the screening appears to be slightly more pronounced than what is seen from the intention-to-treat estimator, and again there seems to be a time-varying effect, with screening being beneficial in a period of approximately 6 years. The supremum test supt≤10 |B̂X(t)| is significant (P = 0.02).
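The resampling-based supremum test described above can be sketched generically as follows. This is a minimal illustration, not the authors' implementation: it assumes that the estimated iid contributions are available on a time grid as `eps[i][k]` (a hypothetical input), and that resampling is done by multiplying each subject's contribution by an independent standard normal variable, a common multiplier scheme that may differ in detail from the one outlined in Section 3.

```python
import math
import random


def sup_abs(path):
    """Supremum of |path| over the time grid."""
    return max(abs(v) for v in path)


def multiplier_sup_test(observed, eps, n_resamples=1000, seed=0):
    """Approximate p-value for sup_t |observed(t)| under the null.

    observed : test process evaluated on a time grid (list of floats)
    eps      : eps[i][k] is the (hypothetical) estimated iid contribution of
               subject i at grid time k, so that under the null the test
               process behaves like n^{-1/2} * sum_i eps[i][k]
    """
    rng = random.Random(seed)
    n, m = len(eps), len(observed)
    scale = 1.0 / math.sqrt(n)
    obs_sup = sup_abs(observed)
    exceed = 0
    for _ in range(n_resamples):
        # One standard normal multiplier per subject, shared across time points.
        g = [rng.gauss(0.0, 1.0) for _ in range(n)]
        resampled = [scale * sum(g[i] * eps[i][k] for i in range(n))
                     for k in range(m)]
        if sup_abs(resampled) >= obs_sup:
            exceed += 1
    return obs_sup, exceed / n_resamples


# Toy usage with simulated zero-mean contributions (not the HIP data).
toy_rng = random.Random(1)
n_subjects, n_times = 100, 10
toy_eps = [[toy_rng.gauss(0.0, 1.0) for _ in range(n_times)]
           for _ in range(n_subjects)]
toy_observed = [sum(toy_eps[i][k] for i in range(n_subjects)) / math.sqrt(n_subjects)
                for k in range(n_times)]
toy_sup, toy_p = multiplier_sup_test(toy_observed, toy_eps, n_resamples=200)
print(f"sup|H| = {toy_sup:.3f}, resampling p-value = {toy_p:.3f}")
```

The multiplier is shared across time points within a subject so that each resampled path keeps the dependence structure of the observed process over time.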

Figure 4.

HIP-study. Investigation of whether the cause specific hazard of death due to other causes than breast cancer for the screened patients would have been the same at all times had they not been screened. Test process Hn(t) along with 20 resampled processes from its limit distribution under the null.

Using the proposed approach, it is now possible to study the time-dynamics even further. For illustrative purposes, assume one hypothesised that an effect of screening would, if any, only last for a few years (as screening stopped after 3 years), and let us say it corresponds to roughly six years of follow up. We could then attempt the simpler model

$$dB_X(t) = \{\beta_0 I(t \le \xi) + \beta_1 I(t > \xi)\}\,dt \qquad (10)$$

with ξ = 6 years. The two parameters β0 and β1 are estimated by

with w̃(t) = R•(t) = ΣiRi(t). The estimate of BX(t) under this simplified model is then given by

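$$B_X(t; \hat\beta_0, \hat\beta_1) = \hat\beta_0 \min(t, \xi) + \hat\beta_1 (t - \xi)\, I(t > \xi) \qquad (11)$$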

The constant effects parameters are estimated as β̂0 = 0.00031 (SE 0.00011) and β̂1 = −0.00012 (SE 0.00020), indicating a significant effect of the screening only in the first 6 years. The estimator is also shown in Figure 3. To test whether the simplified model, that is, a constant effect of treatment with a change in the effect after 6 years, gives a reasonable description of the data, we consider the test process which, under the null, can be written as

Using the iid representation of Wn(t) we can resample from the limit distribution, under the null, of TST(t); 20 such randomly picked processes are shown in Web Figure 1 along with the observed test process TST(t). We may use the supremum test statistic TST = supt≤10 |TST(t)| to investigate whether the test process deviates from what is expected under the null. To see whether the observed TST is extreme we sampled 1000 draws from the limit distribution as outlined in Section 3; this gave a p-value of 0.56, suggesting that the constant effects model with a change in the effect after 6 years gives a reasonable fit to the data.
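To make the two-parameter model concrete, the sketch below evaluates a piecewise-linear cumulative effect with slope β0 up to ξ and slope β1 afterwards, using the reported estimates. The functional form is an assumption inferred from the description of the model (constant effect with a change after ξ = 6 years), time is assumed to be measured in years, and the Wald ratios are a simple illustration rather than the test used in the paper.

```python
def piecewise_cumulative_effect(t, beta0, beta1, xi):
    """Cumulative effect with slope beta0 on [0, xi] and slope beta1 after xi.

    Assumed form: B(t) = beta0 * min(t, xi) + beta1 * max(t - xi, 0).
    """
    return beta0 * min(t, xi) + beta1 * max(t - xi, 0.0)


# Estimates and standard errors reported in the text.
beta0_hat, se0 = 0.00031, 0.00011
beta1_hat, se1 = -0.00012, 0.00020
xi = 6.0  # change point (assumed to be in years)

# Cumulative effect at the change point and at the end of the 10-year window.
effect_at_6 = piecewise_cumulative_effect(6.0, beta0_hat, beta1_hat, xi)
effect_at_10 = piecewise_cumulative_effect(10.0, beta0_hat, beta1_hat, xi)

# Simple Wald ratios (estimate divided by standard error).
z0 = beta0_hat / se0
z1 = beta1_hat / se1

print(f"B(6)  = {effect_at_6:.5f}")
print(f"B(10) = {effect_at_10:.5f}")
print(f"z for beta0 = {z0:.2f}, z for beta1 = {z1:.2f}")
```

Only the first Wald ratio exceeds 1.96 in absolute value, in line with the statement that the effect is significant only in the first 6 years.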

However, it is also seen from Figure 3 and Web Figure 1 that the two-parameter constant effects model perhaps does not give a fully satisfactory fit in the first period of follow-up (two years or so). Indeed, if one instead uses the test statistic TST = supt≤6 |TST(t)|, then one gets a p-value of 0.06, giving some indication of an unsatisfactory fit in the initial phase of the follow-up period. One could consider extending the two-parameter constant effects model with an additional parameter allowing for a separate effect in the initial phase of two years or so. The cutpoints chosen here were used for illustrative purposes only; in practice they should be specified before performing the analysis.

5. Concluding remarks

In this article, we proposed an instrumental variables estimator for the effect of an arbitrary exposure on an event time. In comparison with other instrumental variables estimators for event times, our proposed approach has the advantage that it can handle arbitrary (e.g., continuous) exposures, without the need for modelling the exposure distribution, and that it naturally adjusts for censoring whenever censoring is independent of the event time, exposure and instrument, conditional on measured and unmeasured confounders. The independent censoring assumption is relatively weak as it allows for a dependence on unmeasured factors. This assumption can be relaxed via inverse probability of censoring weighting under a model for the dependence of censoring on the exposure and/or instrumental variable.

Under the usual instrumental variable assumptions, listed in Section 1, the IV-estimator (8) provides a consistent estimator of the causal exposure effect as opposed to the naive estimator when there is unmeasured confounding. However, in the case of a weak instrument, the IV-estimator may have a large variance. It is therefore of interest to develop semi-parametric efficient estimators (Tsiatis, 2006). Along the same lines, it is also of interest to consider estimators that are robust to some model deviations. For instance, consider the following two models

| (12) |

where h is a user defined function such as h(t, G, L) = G; and ψ(t) and θ are parameters indexing the two models. Consider then the estimating function

| (13) |

where

and d(t, L) is an arbitrary index function. One may then show that (13) has zero mean if either of the two models in (12) holds; the solution to an estimating equation based on estimating function (13) therefore yields a double robust estimator. This estimator has the further advantage of being invariant to linear transformations of the exposure. A detailed study of efficient and double robust estimators will be communicated in a separate report.

Our focus in this paper has been on additive hazards models, whose parameters translate directly into relative survival chances. While 2SLS approaches for Cox models enjoy greater popularity at this point, these have no justification (except when few subjects experience an event over the length of follow-up (Tchetgen Tchetgen et al., 2015)). Since it is in particular unclear what causal parameter 2SLS estimators under Cox models are targeting, one cannot use the results obtained from such analyses to evaluate the impact of exposure on the survival time distribution.

Supplementary Material

Acknowledgments

Torben Martinussen’s work is part of the Dynamical Systems Interdisciplinary Network, University of Copenhagen. Stijn Vansteelandt was supported by IAP research network grant nr. P07/05 from the Belgian government (Belgian Science Policy). Eric Tchetgen Tchetgen is supported by NIH grant R01AI104459.

Footnotes

The Web Appendix referenced in Sections 3 and 4 is available with this paper at the Biometrics website on Wiley Online Library. The Web Appendix also contains R code.

Contributor Information

Torben Martinussen, Department of Biostatistics, University of Copenhagen, Øster Farimagsgade 5B, 1014 Copenhagen K, Denmark.

Stijn Vansteelandt, Department of Applied Mathematics and Computer Sciences, Ghent University, Krijgslaan 281 S9, 9000 Ghent, Belgium.

Eric J. Tchetgen Tchetgen, Departments of Epidemiology and Biostatistics, Harvard School of Public Health, 677 Huntington Avenue, MA 02115 Boston, U.S.A

David M. Zucker, Department of Statistics, Hebrew University, Mount Scopus, 91905 Jerusalem, Israel

References

- Aalen OO. A model for non-parametric regression analysis of counting processes. Lecture Notes in Statistics. 1980;2:1–25.

- Abadie A. Semiparametric instrumental variable estimation of treatment response models. Journal of Econometrics. 2003;113:231–263.

- Andersen PK, Borgan O, Gill RD, Keiding N. Statistical Models Based on Counting Processes. Berlin: Springer-Verlag; 1993.

- Angrist J, Krueger A. Instrumental variables and the search for identification: From supply and demand to natural experiments. Journal of Economic Perspectives. 2001;15:69–85.

- Cai B, Small DS, Ten Have TR. Two-stage instrumental variable methods for estimating the causal odds ratio: analysis of bias. Statistics in Medicine. 2011;30:1809–1824. doi: 10.1002/sim.4241.

- Cuzick J, Sasieni P, Myles J, et al. Estimating the effect of treatment in a proportional hazards model in the presence of non-compliance and contamination. Journal of the Royal Statistical Society - Series B. 2007;69:565–588.

- Davey-Smith G, Ebrahim S. ‘Mendelian randomization’: can genetic epidemiology contribute to understanding environmental determinants of disease? International Journal of Epidemiology. 2003;32:1–22. doi: 10.1093/ije/dyg070.

- Didelez V, Sheehan N. Mendelian randomization as an instrumental variable approach to causal inference. Statistical Methods in Medical Research. 2007;16:309–330. doi: 10.1177/0962280206077743.

- Hernán MA, Robins JM. Instruments for causal inference: an epidemiologist’s dream? Epidemiology. 2006;17:360–372. doi: 10.1097/01.ede.0000222409.00878.37.

- Joffe MM. Administrative and artificial censoring in censored regression models. Statistics in Medicine. 2001;20:2287–2304. doi: 10.1002/sim.850.

- Joffe MM, Yang WP, Feldman H. G-Estimation and Artificial Censoring: Problems, Challenges, and Applications. Biometrics. 2012;68:275–286. doi: 10.1111/j.1541-0420.2011.01656.x.

- Katan MB. Apolipoprotein E isoforms, serum cholesterol, and cancer. Lancet. 1986:507–8. doi: 10.1016/s0140-6736(86)92972-7.

- Li J, Fine J, Brookhart A. Instrumental variable additive hazards models. Biometrics. 2014;71:122–130. doi: 10.1111/biom.12244.

- Lin DY, Wei LJ, Yang I, Ying Z. Semiparametric regression for the mean and rate functions of recurrent events. Journal of the Royal Statistical Society - Series B. 2000;62:711–730.

- Loeys T, Goetghebeur E, Vandebosch A. Causal proportional hazards models and time-constant exposure in randomized clinical trials. Lifetime Data Analysis. 2005;11:435–449. doi: 10.1007/s10985-005-5233-z.

- Martinussen T. Dynamic path analysis for event time data: large sample properties and inference. Lifetime Data Analysis. 2010;16:85–101. doi: 10.1007/s10985-009-9128-2.

- Martinussen T, Vansteelandt S, Gerster M, Hjelmborg JVB. Estimation of direct effects for survival data by using the Aalen additive hazards model. Journal of the Royal Statistical Society - Series B. 2011;73:773–788.

- Nie H, Cheng J, Small DS. Inference for the Effect of Treatment on Survival Probability in Randomized Trials with Noncompliance and Administrative Censoring. Biometrics. 2011;67:1397–1405. doi: 10.1111/j.1541-0420.2011.01575.x.

- Pearl J. Causality: Models, Reasoning, and Inference. Cambridge University Press; Cambridge: 2009.

- Picciotto S, Hernán MA, Page J, Young JG, Robins JM. Structural nested cumulative failure time models to estimate the effects of hypothetical interventions. Journal of the American Statistical Association. 2012;107:886–900. doi: 10.1080/01621459.2012.682532.

- Robins JM, Tsiatis A. Correcting for non-compliance in randomized trials using rank-preserving structural failure time models. Communications in Statistics. 1991;20:2609–2631.

- Robins JM, Rotnitzky A. Estimation of treatment effects in randomised trials with non-compliance and a dichotomous outcome using structural mean models. Biometrika. 2004;91:763–783.

- Shapiro S. Evidence of screening for breast cancer from a randomised trial. Cancer. 1977;39:2772–2782. doi: 10.1002/1097-0142(197706)39:6<2772::aid-cncr2820390665>3.0.co;2-k.

- Tchetgen Tchetgen EJ, Walter S, Vansteelandt S, Martinussen T, Glymour M. Instrumental variable estimation in a survival context. Epidemiology. 2015;26:402–410. doi: 10.1097/EDE.0000000000000262.

- Tsiatis AA. Semiparametric Theory and Missing Data. Springer Verlag; 2006.

- Vansteelandt S, Bowden J, Babanezhad M, Goetghebeur E. On instrumental variable estimation of the causal odds ratio. Statistical Science. 2011;26:403–422.
