Abstract

Even in the aggregate, genomic data can reveal sensitive information about individuals. We present a new model-based measure, PrivMAF, that provides provable privacy guarantees for aggregate data (namely minor allele frequencies) obtained from genomic studies. Unlike many previous measures that have been designed to measure the total privacy lost by all participants in a study, PrivMAF gives an individual privacy measure for each participant in the study, not just an average measure. These individual measures can then be combined to measure the worst case privacy loss in the study. Our measure also allows us to quantify the privacy gains achieved by perturbing the data, either by adding noise or binning. Our findings demonstrate that both perturbation approaches offer significant privacy gains. Moreover, we see that these privacy gains can be achieved while minimizing perturbation (and thus maximizing the utility) relative to stricter notions of privacy, such as differential privacy. We test PrivMAF using genotype data from the Wellcome Trust Case Control Consortium, providing a more nuanced understanding of the privacy risks involved in an actual genome-wide association study. Interestingly, our analysis demonstrates that the privacy implications of releasing MAFs from a study can differ greatly from individual to individual. An implementation of our method is available at http://privmaf.csail.mit.edu.

I. Introduction

Recent research has shown that sharing aggregate genomic data, such as p-values, regression coefficients, and minor allele frequencies (MAFs), may compromise participant privacy in genomic studies [1], [2], [3], [4], [5]. In particular, Homer et al. showed that, given an individual’s genotype and the MAFs of the study participants, an interested party can determine with high confidence whether the individual participated in the study (recall that the MAF is the frequency with which the least common allele occurs at a particular location in the genome). Following the initial realization that aggregate data can be used to reveal information about study participants, subsequent work has led to even more powerful methods for determining whether an individual participated in a study based on MAFs [5], [6], [7], [8], [9]. These methods work by comparing an individual’s genotype to the MAF in a study and to the MAF in the background population. If their genotype is more similar to the MAF in the study, then it is likely that the individual was in the study. This raises a fundamental question: how do researchers know when it is safe to release aggregate genomic data?

To help answer this question we introduce a new model-based measure, PrivMAF, that provides provable privacy guarantees for MAF data obtained from genomic studies. Unlike many previous privacy measures, PrivMAF gives an individual privacy measure for each study participant, not just an average measure. These individual measures can then be combined to measure the worst case privacy loss in the study. Our measure also allows us to quantify the privacy gains achieved by perturbing the data, either by adding noise or binning.

Previous work

Several methods have been proposed to help determine when MAFs are safe to release. The simplest method, one suggested for regression coefficients [10], is to choose a fixed number and release the MAFs for at most that many single nucleotide polymorphisms (SNPs, i.e. locations in the genome with multiple alleles). Sankararaman et al. [7] suggested calculating the sensitivity and specificity of the likelihood ratio test to help decide if the MAFs for a given dataset are safe to release. More recently, Craig et al. [11] advocated a similar approach, using the Positive Predictive Value (PPV) rather than sensitivity and specificity. These measures provide a powerful set of tools to help determine the amount of privacy lost after releasing a given dataset. One limitation of these approaches, however, is that they ignore the fact that a given piece of aggregate data might reveal different amounts of information about different individual study participants, and instead look at an average measure of privacy over all participants. For the unlucky few who lose a lot of privacy in a given study, a privacy guarantee for the average participant is not very comforting. This observation was hinted at by Im et al. [5], who noted that individuals who have extremely large or small values for a particular phenotype can be more readily re-identified using regression coefficients from GWAS than those with average phenotypes. The only sure way to avoid potentially harmful repercussions is to produce provable privacy guarantees for all participants when releasing sensitive research data.

Some researchers have recently suggested k-anonymity [12], [3], [13] or differential privacy [14], [15] based approaches, which allow release of a transformed version of the aggregate data in such a way that privacy is preserved. The idea behind these methods is that perturbing the data decreases the amount of private information released. Though such approaches do give improved privacy guarantees, they limit the usefulness of the results, as the data has often been perturbed beyond its usefulness; thus, there is a need to develop methods that perturb the data as little as possible in order to maximize its utility.

Identifying individuals whose genomic information has been included in an aggregate result can have real-world repercussions. Consider, for example, studies of the genetics of drug abuse [16]. If the MAFs of the cases (i.e. people who had abused drugs) were released, then knowing someone contributed genetic material would be enough to tell that they had abused drugs. Along the same lines, there have been numerous genome-wide association studies (GWAS) of susceptibility to STDs, including HIV [17]. Since many patients would want to keep their HIV status secret, these studies need to use care in deciding what kind of information they give away. Such privacy concerns have led the NIH and the Wellcome Trust, among others, to move genomic data from public databases to access-controlled repositories [18], [19], [20]. Such restrictions are clearly not optimal, since ready access to biomedical databases has been shown to enable a wide range of secondary research [21], [22].

Many types of biomedical research data may compromise individuals’ privacy, not just MAFs [2], [23], [10], [24], [25], [26], [27]. For instance, even if we limit ourselves to genomic data there are several broad categories of privacy challenges that depend on the particular data available, e.g. determining from an individual’s genotype and aggregated data whether they participated in a GWAS [4], from an individual’s genotype whether they are in a gene-expression database [5], or, alternatively, determining an individual’s identity from just genotype and public demographic information [24].

Our Contribution

We introduce a privacy statistic, PrivMAF, which provides provable privacy guarantees for all individuals in a given study when releasing MAFs for unperturbed or minimally perturbed (but still useful) data. The guarantee we give is straightforward: given only the MAFs and some knowledge about the background population, PrivMAF measures the probability of a particular individual being in the study. This guarantee implies that, if d is any individual and PrivMAF(d, MAF) is the score of our statistic, then, under reasonable assumptions, knowledge of the minor allele frequencies implies that d participated in the study with probability at most PrivMAF(d, MAF). Intuitively, this measure bounds how confident an adversary can be in concluding that a given individual is in our study cohort based on the available information.

Moreover, the PrivMAF framework can measure privacy gains achieved by perturbing MAF data. Even though it is preferable to release unperturbed MAFs, there may be situations in which releasing perturbed statistics is the only option that ensures the required level of privacy, such as when the number of SNPs whose data we want to release is very large. With this scenario in mind, PrivMAF can be modified to measure the amount of privacy lost when releasing perturbed MAFs. In particular, the statistic we obtain allows us to measure the privacy gained by adding noise to the MAFs (common in differential privacy) or by binning (truncating) them. To our knowledge, PrivMAF is the first method for measuring the amount of privacy gained by binning MAFs. In addition, our method shows that much less noise than differential privacy requires is needed to achieve reasonable privacy guarantees, at the cost of adding realistic assumptions about what information potential adversaries have access to, thus providing more useful data.

In addition to developing PrivMAF, we apply our statistic to genotype data from the Wellcome Trust Case Control Consortium’s (WTCCC) British Birth Cohort. This allows us to demonstrate our method on both perturbed and unperturbed data. Moreover, we use PrivMAF to show that, as claimed above, different individuals in a study can experience very different levels of privacy loss after the release of MAFs.

II. METHODS

A. The Underlying Model

Our method assumes a model implicitly described by Craig et al. [11], with respect to how data were generated and what knowledge is publicly available.

PrivMAF assumes a large background population. Like previous works, we assume this population is at Hardy-Weinberg (H-W) equilibrium. We choose a subset (B) of this larger population, consisting of all individuals who might reasonably be believed to have participated in the study. Finally, the smallest set, denoted D, consists of all individuals who actually participated in the study. As an example, consider performing a GWAS study at a hospital in Britain. The underlying population might be all people of British ancestry; B, the set of all patients at the hospital; and D, all study participants.

As a technical aside, it should be noted that– breaking with standard conventions– we allow repetitions in D and B. Moreover, we assume that the elements in D and B are ordered.

In our model B is chosen uniformly at random from the underlying population, and D is chosen uniformly at random from B (we briefly address this assumption in the Appendix). An individual’s genotype, $d = (d_1, \ldots, d_m)$, can be viewed as a vector in $\{0, 1, 2\}^m$, where m is the number of SNPs we are considering releasing. Let $p_j$ be the minor allele frequency of SNP j in the underlying population. We assume that each of the SNPs is chosen independently. By definition of H-W equilibrium, for any d ∈ B, the probability that $d_j = i$ for i ∈ {0, 1, 2} is $\binom{2}{i} p_j^{i} (1 - p_j)^{2 - i}$.
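The genotype model above can be illustrated with a short Python sketch. It assumes Hardy-Weinberg equilibrium and independent SNPs, exactly as in the text; the function names are hypothetical and are not taken from the released PrivMAF implementation.

```python
from math import comb

def hw_genotype_probs(p):
    """Return [Pr(g=0), Pr(g=1), Pr(g=2)] for minor allele frequency p
    under Hardy-Weinberg equilibrium."""
    return [comb(2, i) * p**i * (1 - p) ** (2 - i) for i in range(3)]

def genotype_prob(d, p):
    """Probability of a genotype vector d in {0,1,2}^m given per-SNP
    background MAFs p, assuming the SNPs are independent."""
    prob = 1.0
    for d_j, p_j in zip(d, p):
        prob *= hw_genotype_probs(p_j)[d_j]
    return prob
```

For realistic m the product underflows quickly, so a practical version would work with log probabilities.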

Let $\mathrm{MAF}_j(D) = \frac{1}{2n} \sum_{d \in D} d_j$ be the minor allele frequency of SNP j in D, the frequency with which the least common allele occurs at SNP j. Then $\mathrm{MAF}(D) = (\mathrm{MAF}_1(D), \ldots, \mathrm{MAF}_m(D))$. We assume the parameters $\{p_j\}_j$, the size of B (denoted N), and the size of D (denoted n) are publicly known. We are trying to determine if releasing MAF(D) publicly will lead to a breach of privacy.
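As a small illustration of these definitions, the following Python sketch computes the allele counts and MAF(D) from a list of genotype vectors. It assumes genotypes are coded as the number of minor alleles (0, 1, or 2) at each SNP; the function names are hypothetical.

```python
def minor_allele_counts(genotypes):
    """Per-SNP minor allele counts (equal to 2n * MAF(D)) for a list of
    genotype vectors in {0,1,2}^m."""
    m = len(genotypes[0])
    return [sum(g[j] for g in genotypes) for j in range(m)]

def maf(genotypes):
    """MAF(D): each individual contributes two alleles per SNP."""
    n = len(genotypes)
    return [x / (2 * n) for x in minor_allele_counts(genotypes)]
```

For example, `maf([[0, 1, 2], [1, 1, 0]])` gives `[0.25, 0.5, 0.5]`.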

Note that our model does assume the SNPs are independent, even though this is not always the case due to linkage disequilibrium (LD). This independence assumption is made in most previous approaches. We can, however, extend PrivMAF to take into account LD by using a Markov Chain based model (see the Appendix). The original WTCCC paper [28] looked at the dependency between SNPs in their dataset and found that there are limited dependencies between close-by SNPs. In situations where LD is an issue one can often avoid such complications by picking one representative SNP for each locus in the genome.

B. Measuring Privacy of MAF

Consider an individual d ∈ B. We want to determine how likely it is that d ∈ D based on publicly released information. We assume that it is publicly known that d ∈ B. This is a realistic assumption, since it corresponds to an attacker believing that d may have participated in the study. This inspires us to use

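$$\Pr\left(d \in \tilde{D} \mid d \in \tilde{B},\ \mathrm{MAF}(\tilde{D}) = \mathrm{MAF}(D)\right) \qquad (1)$$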

as the measure of privacy for individual d, where D̃ and B̃ are drawn from the same distribution as D and B. Informally, D̃ and B̃ are random variables that represent our adversary‘s a priori knowledge about D and B.

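More precisely, we calculate an upper bound on Equation 1, denoted by PrivMAF(d, MAF(D)). In practice we use the approximation:

$$\mathrm{PrivMAF}(d, \mathrm{MAF}(D)) \approx \frac{1}{1 + \dfrac{(N - n)\, P_n(x(D))}{n\, P_{n-1}(x(D) - d)}},$$

where x(D) = 2n MAF(D) and

$$P_n(x) = \prod_{j=1}^{m} \binom{2n}{x_j} p_j^{x_j} (1 - p_j)^{2n - x_j}.$$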

It should be noted that, for reasonable parameters, this upper bound is almost tight. We can then let

$$\mathrm{PrivMAF}(D) = \max_{d \in D} \mathrm{PrivMAF}(d, \mathrm{MAF}(D)).$$

Informally, for all d ∈ D, PrivMAF(D) bounds the probability that d participated in our study given only publicly-available data and MAF(D). A sketch of the derivation is given in the Appendix.
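To make the computation concrete, here is a minimal Python sketch. It assumes the approximation has the form 1 / (1 + (N − n) P_n(x(D)) / (n P_{n−1}(x(D) − d))), with P_n(x) the product over SNPs of Binomial(2n, p_j) probabilities at x_j; that form is an assumption of this sketch and should be checked against the derivation on the project website. The function names are hypothetical, and the code works in log space to avoid underflow when m is large.

```python
from math import exp, inf, lgamma, log

def log_binom_pmf(x, trials, p):
    """log of the Binomial(trials, p) pmf at x; -inf outside the support."""
    if x < 0 or x > trials:
        return -inf
    return (lgamma(trials + 1) - lgamma(x + 1) - lgamma(trials - x + 1)
            + x * log(p) + (trials - x) * log(1 - p))

def log_P(x, n, p):
    """log P_n(x): probability that n H-W individuals yield allele counts x."""
    return sum(log_binom_pmf(x_j, 2 * n, p_j) for x_j, p_j in zip(x, p))

def privmaf_individual(d, x, n, N, p):
    """Assumed form: 1 / (1 + (N - n) P_n(x) / (n P_{n-1}(x - d)))."""
    x_minus_d = [x_j - d_j for x_j, d_j in zip(x, d)]
    log_den = log_P(x_minus_d, n - 1, p)
    if log_den == -inf:
        return 0.0  # d is incompatible with the released counts
    log_ratio = log(N - n) - log(n) + log_P(x, n, p) - log_den
    if log_ratio > 700:
        return 0.0  # exp would overflow; the score is effectively zero
    return 1.0 / (1.0 + exp(log_ratio))

def privmaf(D, N, p):
    """Worst-case score: the maximum individual score over the study D."""
    n = len(D)
    x = [sum(g[j] for g in D) for j in range(len(p))]
    return max(privmaf_individual(d, x, n, N, p) for d in D)
```

A useful sanity check under this assumed form: with no SNPs released the score reduces to n/N, the adversary's prior, and for fixed data the score decreases as N grows.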

This measure allows a user to choose some privacy parameter, α, and release the data if and only if PrivMAF(D) ≤ α. It is worth noting, however, that deciding whether or not to release the data gives away a little bit of information about D, which can weaken our privacy guarantee. While in practice this seems to be a minor issue, we develop a method to correct for it in the Appendix.

C. Measuring Privacy of Truncated Data

In order to deal with privacy concerns it is common to release perturbed versions of the data. This task can be achieved by adding noise (as in differential privacy), binning (truncating results), or using similar approaches. Here we show how PrivMAF can be extended to perturbed data.

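We first consider truncated data. Let $\mathrm{MAF}^{\mathrm{trunc}(k)}_j(D)$ be obtained by taking the minor allele frequency of the jth SNP and truncating it to k decimal digits. For example, if k = 1 then .111 would become .1, and if k = 2 it would become .11. We are interested in

$$\Pr\left(d \in \tilde{D} \mid d \in \tilde{B},\ \mathrm{MAF}^{\mathrm{trunc}(k)}(\tilde{D}) = \mathrm{MAF}^{\mathrm{trunc}(k)}(D)\right).$$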

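As above, we can calculate an upper bound, denoted by PrivMAFtrunc(k)(d, MAFtrunc(k)(D)). The approximation we use to calculate this is given in the Appendix. We then have

$$\mathrm{PrivMAF}^{\mathrm{trunc}(k)}(D) = \max_{d \in D} \mathrm{PrivMAF}^{\mathrm{trunc}(k)}\left(d, \mathrm{MAF}^{\mathrm{trunc}(k)}(D)\right).$$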

For each d ∈ D, this measure upper bounds the probability that individual d participated in our study given only publicly-available data and knowledge of MAFtrunc(k)(D).
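The truncation step itself can be sketched in Python as follows. The sketch uses integer arithmetic on the allele count x_j = 2n MAF_j(D) to avoid floating-point surprises; the function names are hypothetical, and the second helper only illustrates the intuition that a truncated MAF tells the adversary which bin of allele counts the true count lies in.

```python
def truncate_maf(x_j, n, k):
    """Truncate MAF_j = x_j / (2n) to k decimal digits using integer arithmetic."""
    scale = 10 ** k
    return (x_j * scale // (2 * n)) / scale

def counts_in_bin(t, n, k):
    """All allele counts x in 0..2n whose truncated MAF equals t."""
    return [x for x in range(2 * n + 1) if truncate_maf(x, n, k) == t]
```

With n = 500, a count of 111 corresponds to a MAF of .111, which truncates to .1 for k = 1 and to .11 for k = 2, matching the example in the text.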

D. Measuring Privacy of Adding Noise

Another way to achieve privacy guarantees on released data is by perturbing the data using random noise (this is a common way of achieving differential privacy). Though there are many approaches to generate this noise, most famously by drawing it from the Laplace distribution [14], we investigate one standard approach to adding noise that is used to achieve differential privacy when releasing integer values [29].

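Consider ε > 0. Let η be an integer-valued random variable such that P(η = i) is proportional to $e^{-\epsilon |i|}$. Let

$$\mathrm{MAF}^{\epsilon}_j(D) = \mathrm{MAF}_j(D) + \frac{\eta_j}{2n},$$

where $\eta_1, \ldots, \eta_m$ are independently and identically distributed (iid) copies of η. It is worth noting that each $\mathrm{MAF}^{\epsilon}_j(D)$ is 2ε-differentially private. Recall [14]: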

Definition 1

Let n be an integer, Ω and Σ sets, and X a random function that maps n element subsets of Ω (we call such subsets 'databases of size n') into Σ. We say that X is ε-differentially private if, for all databases D and D′ of size n that differ in exactly one element and all S ⊂ Σ, we have that

$$\Pr(X(D) \in S) \le e^{\epsilon} \Pr(X(D') \in S).$$

Using the same framework as above we can define PrivMAFε (d, MAFε (D)) and PrivMAFε (D) to measure the amount of privacy lost by releasing MAFε (D). As above the approximation we use to calculate this is given in the Appendix.
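The noise distribution described in this section can be sampled with a few lines of Python. The sketch below assumes the noise is added to the integer allele count, so that the released value is MAF_j(D) + η_j / (2n), and it draws η as the difference of two iid geometric variables, which has probability mass proportional to e^(−ε|i|). The function names are hypothetical, and the sketch does not clip the result to [0, 1].

```python
import math
import random

def two_sided_geometric_pmf(i, eps):
    """Pr(eta = i) for the distribution proportional to exp(-eps * |i|)."""
    alpha = math.exp(-eps)
    return (1 - alpha) / (1 + alpha) * alpha ** abs(i)

def sample_eta(eps, rng):
    """Draw eta as the difference of two iid geometric variables on {0, 1, ...}."""
    alpha = math.exp(-eps)

    def geom():
        u = 1.0 - rng.random()  # uniform on (0, 1]
        return int(math.log(u) / math.log(alpha))

    return geom() - geom()

def noisy_maf(x, n, eps, rng):
    """Assumed release: (x_j + eta_j) / (2n), with independent eta_j per SNP."""
    return [(x_j + sample_eta(eps, rng)) / (2 * n) for x_j in x]
```

Passing a seeded `random.Random` instance keeps experiments reproducible.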

E. Choosing the Size of the Background Population

One detail we did not go into above is the choice of N, where N is the number of people who could reasonably be assumed to have participated in the study. This parameter depends on the context, and giving a realistic estimate of it is critical. In most applications the background population from which the study is drawn is fairly obvious. That being said, one needs to be careful of any other information released publicly about participants: just listing a few facts about the participants can greatly reduce N, thus greatly weakening the privacy guarantees (the amount of privacy lost by an individual is roughly inversely proportional to N − n, so doubling N gives us an estimate that is about half of what it would be otherwise).

Note that N can be considered one of the main privacy parameters of our method. The smaller the N, the stronger the adversary we are protected against. Therefore we want to make N as large as possible, while at the same time ensuring the privacy we need. In our method, an adversary who has limited their pool of possible contenders to fewer than N individuals before we publish the MAF can be considered to have already achieved a privacy breach; thus it is a practitioner’s job to choose N small enough that such a breach is unlikely.

F. Simulated Data

In what follows, all simulated genotype data was created by choosing a study size, denoted n, and a number of SNPs, denoted m. For each SNP a random number, p, in the range .05 to .5 was chosen uniformly at random to be the MAF in the background population. Using these MAFs we then generated the genotypes of n individuals independently. Note that all computations were run on a machine with 48GB RAM, 3.47GHz XEON X5690 CPU liquid cooled and overclocked to 4.4GHz, using a single core.
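The simulation procedure can be sketched in Python as follows. The sketch assumes that each genotype is the sum of two independent Bernoulli(p) allele draws (that is, Hardy-Weinberg equilibrium, as in the Methods); the function name and the `seed` parameter are hypothetical.

```python
import random

def simulate_study(n, m, seed=0):
    """Simulate background MAFs and genotypes for n individuals and m SNPs."""
    rng = random.Random(seed)
    # Background MAF for each SNP, uniform on [0.05, 0.5].
    p = [rng.uniform(0.05, 0.5) for _ in range(m)]
    # Each genotype counts the minor alleles among two independent draws.
    genotypes = [
        [(rng.random() < p_j) + (rng.random() < p_j) for p_j in p]
        for _ in range(n)
    ]
    return p, genotypes
```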

III. Results

A. Privacy and MAF

As a case study we tested PrivMAF on data from the Wellcome Trust Case Control Consortium (WTCCC) 1958 British Birth Cohort [28]. This dataset consists of genotype data from 1500 British citizens born in 1958.

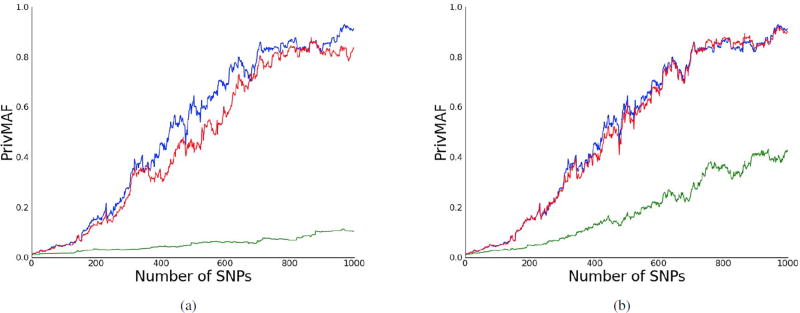

We first looked at the privacy guarantees given by PrivMAF for the WTCCC data for varying numbers of SNPs (blue curve, Fig. 1a), quantifying the relationship between number of SNPs released and privacy lost. The data were divided into two sets: one of size 1,000 used as the study participants, the other of size 500 which was used to estimate our model parameters (the $p_j$). We assumed that participants were drawn from a background population of 100,000 individuals (N = 100,000; see Methods for more details). Releasing the MAFs of a small number of SNPs results in very little loss of privacy. If we release 1,000 SNPs, however, we find that there exists a participant in our study who loses most of their privacy: based on only the MAF and public information we can conclude they participated in the study with 90% confidence.

Figure 1.

PrivMAF applied to the WTCCC dataset. In all plots we take n = 1,000 research subjects and a background population of size N = 100,000. (a) Our privacy measure PrivMAF increases with the number of SNPs. The blue line corresponds to releasing MAFs with no rounding, the green line to releasing MAFs rounded to one decimal digit, and the red line to releasing MAFs rounded to two decimal digits. Rounding to two digits appears to add very little to privacy, whereas rounding to one digit achieves much greater privacy gains. (b) The blue line corresponds to releasing MAF with no noise, the red line to releasing MAFε with ε = 0.5, and the green line to releasing MAFε with ε = 0.1. Adding noise corresponding to ε = 0.5 seems to add very little to privacy, whereas taking ε = 0.1 achieves much greater privacy gains.

In addition, we considered the behavior of PrivMAF as the size of the population from which our sample was drawn increases. From the formula for our statistic we see that PrivMAF approaches 0 as the background population size, N, increases, since there are more possibilities for who could be in the study, while it goes to 1 as N decreases towards n.

B. Privacy and Truncation

Next we tested PrivMAF on perturbed WTCCC MAF data, showing that both adding noise and binning result in large increases in privacy. First we considered perturbing our data by binning. We bin by truncating the unperturbed MAFs, first to one decimal digit (MAFtrunc(1), k = 1) and then to two decimal digits (MAFtrunc(2), k = 2). As depicted in Fig. 1a we see that truncating to two digits gives us very little in terms of privacy guarantees, while truncating to one digit gives substantial gains.

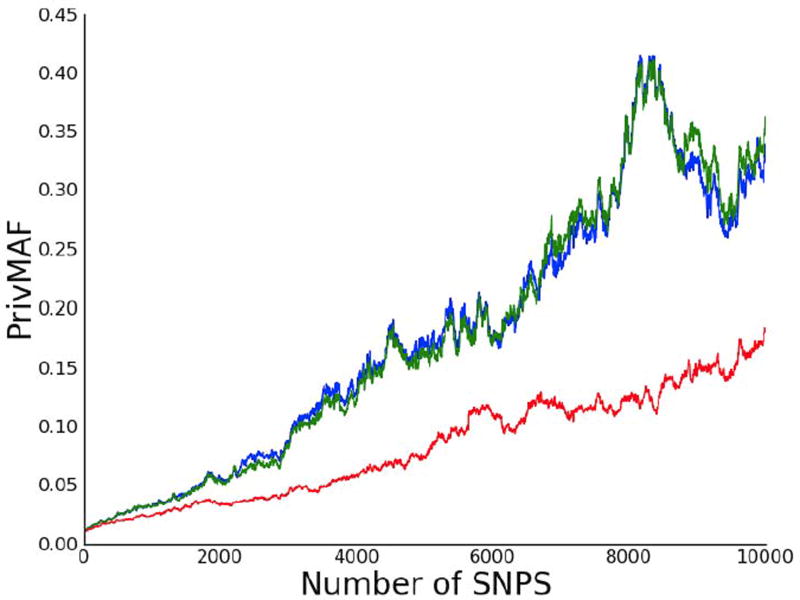

In practice, releasing the MAF truncated to one digit may render the data useless for most purposes. It seems reasonable to conjecture, however, that as the size of GWAS continues to increase, similar gains can be made with less sacrifice. As a demonstration of how population size affects the privacy gained by truncation, we generated simulated data for 10,000 study participants and 10,000 SNPs, choosing N to be one million. We then ran a similar experiment to the one performed on truncated WTCCC data, except with k = 2 and k = 3; we found the k = 2 case had similar privacy guarantees to those seen in the k = 1 case on the real data (Fig. 2). For example, we see that if we consider releasing all 10,000 SNPs then PrivMAF is near 0.35, while when k = 2 it is below 0.2 (almost a factor of two difference).

Figure 2.

Truncating simulated data to demonstrate scaling. We plot our privacy measure PrivMAF versus the number of SNPs for simulated data with n = 10,000 subjects and a background population of size N = 1,000,000. The green line corresponds to releasing MAFs with no rounding, the blue line to releasing MAFs rounded to three decimal digits, and the red line to releasing MAFs rounded to two decimal digits. Rounding to three digits seems to add very little to privacy, whereas rounding to two digits achieves much greater privacy gains.

C. Privacy and Adding Noise

We also applied our method to data perturbed by adding noise to each SNP’s MAF (Fig. 1b). We used ε = 0.1 and 0.5 as our noise perturbation parameters (see Methods). We see that when ε = 0.5, adding noise to our data resulted in very small privacy gains. When we change our privacy parameter to ε = 0.1, however, we see that the privacy gains are significant. For example, if we were to release 500 unperturbed SNPs then PrivMAF(D) would be over 0.4, while PrivMAFε(D) with ε = 0.1 is still under 0.2.

The noise mechanism we use here gives us 2mε-differential privacy (see Methods), where m is the number of SNPs released. For ε = .1, if m = 200 then the result is 40-differentially private, which is a nearly useless privacy guarantee in most cases. Our measure, however, shows that the privacy gains are quite large in practice. This result suggests that PrivMAF allows one to use less noise to get reasonable levels of privacy, at the cost of having to make some reasonable assumptions about what information is publicly available.

Note that, using expected L1 error as a measure of utility (unfortunately we are not aware of what alternative measures of utility might be most appropriate), we see that adding noise with parameter ε leads to an expected L1 error of $\frac{e^{-\epsilon}}{n (1 - e^{-2\epsilon})}$ per SNP. For ε = .5 this corresponds to an L1 error of just under .001, while for ε = .1 this corresponds to an L1 error of just under .005, both of which are relatively small.
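The quoted error values can be checked with a short Python calculation. The sketch assumes the released value for each SNP is MAF_j(D) + η / (2n) with P(η = i) proportional to e^(−ε|i|), so that the expected absolute error per SNP is E|η| / (2n) with E|η| = 2e^(−ε) / (1 − e^(−2ε)); the function name is hypothetical.

```python
import math

def expected_l1_error(eps, n):
    """E|eta| / (2n) for Pr(eta = i) proportional to exp(-eps * |i|)."""
    alpha = math.exp(-eps)
    expected_abs_eta = 2 * alpha / (1 - alpha ** 2)
    return expected_abs_eta / (2 * n)

for eps in (0.5, 0.1):
    print(eps, expected_l1_error(eps, n=1000))
```

With n = 1,000 this gives values just under .001 for ε = 0.5 and just under .005 for ε = 0.1, in line with the figures reported in the text.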

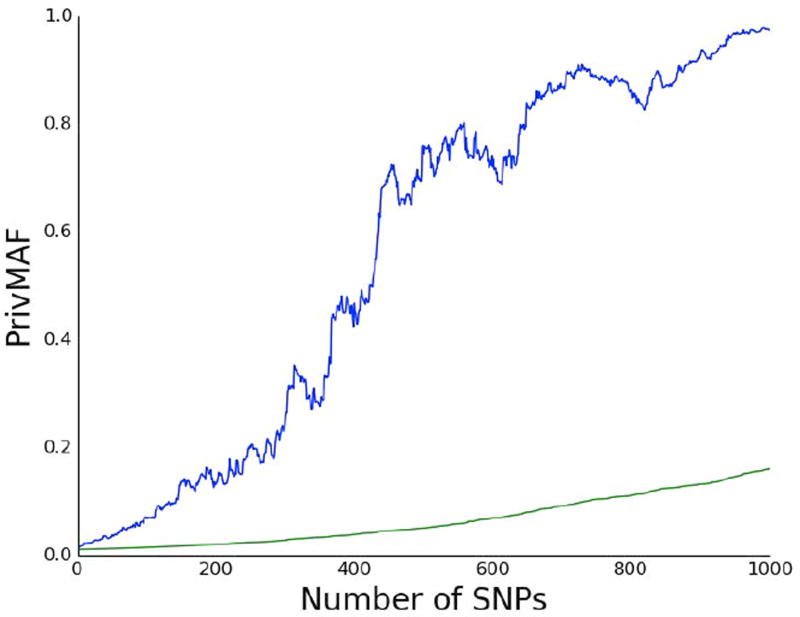

D. Worst Case Versus Average

As stated earlier, the motivation for PrivMAF is that previous methods do not measure privacy for each individual in a study but instead provide a more aggregate measure of privacy loss. This observation led us to wonder exactly how much the privacy risk differs between individuals in a given study. To investigate this question, we compared the maximum and mean score of PrivMAF(d,MAF(D)) in the WTCCC example for varying values of m, the number of released SNPs. The result is pictured in Fig. 3. The difference is stark: the person with the largest loss of privacy (pictured in blue) loses much more privacy than the average participant (pictured in green). By the time m = 1000 the participant with the largest privacy loss is almost five times as likely to be in the study as the average participant. This result clearly illustrates why worst case, and not just average, privacy should be considered.

Figure 3.

Worst Case Versus Average Case PrivMAF. Graph of the number of SNPs, denoted m, versus PrivMAF. The blue curve is the maximum value of PrivMAF(d,MAF(D)) taken over all d ∈ D for a set of n = 1,000 randomly chosen participants in the British Birth Cohort, while the green curve is the average value of PrivMAF(d,MAF(D)) in the same set. The maximum value of PrivMAF far exceeds the average. By the time m = 1000 it is almost five times larger.

IV. CONCLUSION

To facilitate genomic research, many scientists would prefer to release even more data from studies [21], [31]. Though tempting, this approach can sacrifice study participants’ privacy. As highlighted in the introduction, several different classes of methods have been previously employed to balance privacy with the utility of data. Methods such as sensitivity/PPV based methods are dataset specific, but only give average-case privacy guarantees. Because our method provides worst-case privacy guarantees for all individuals, we are able to ensure improved anonymity for individuals. Thus, PrivMAF can provide stronger privacy guarantees than sensitivity/PPV based methods. Moreover, since our method for deciding which SNPs to release takes into account the genotypes of individuals in our study, it allows us to release more data than any method based solely on MAFs with comparable privacy guarantees.

Our findings demonstrate that differential privacy may not always be the method of choice for preserving privacy of genomic data. Notably, perturbing the data appears to provide major gains in privacy, though these gains come at the cost of utility. That said, our results suggest that, when n is large, truncating minor allele frequencies may result in privacy guarantees without the loss of too much utility. Moreover, the method of binning we used here is very simple– it might be worth considering how other methods of binning may be able to achieve similar privacy guarantees while resulting in less perturbation on average. We further show that adding noise can result in improved privacy, even if the amount of noise we add does not provide reasonable levels of differential privacy.

Note that our method is based on a certain model of how the data is generated, a model that is similar to those used in previous approaches. It will not protect against an adversary that has access to insider information. This caveat, however, seems to be unavoidable if we do not want to turn to differential privacy or similar approaches that perturb the data to a greater extent to get privacy guarantees, thus greatly limiting data utility. It would be of interest to develop methods for generalizing this model-based approach to other types of aggregate genomic data (p-values, multiple different statistics, etc.), though it is not obvious how to do so.

These assumptions raise the question of model misspecification. For example, if we assume when calculating PrivMAF that the underlying population is of British ancestry then our model does not give privacy guarantees for an individual in the study not of British ancestry. In practice this should lead to overestimation of privacy risk to that individual, since (with high probability) the likelihood of that individual’s genome having been produced under a model derived from individuals of British ancestry is much lower than it would be under a correct probability model. As such we are likely to overestimate the probability of that individual being in our cohort. Despite this caveat, in order to ensure privacy, practitioners should check that the assumptions we make are reasonable in their study.

Having presented results on moderate-sized real datasets, we also tested the ability of PrivMAF to scale as genomic data sets grow. In particular, we ran our algorithm on larger artificial datasets (with 10,000 individuals and 1000 SNPs) and found that our PrivMAF implementation still runs in a short amount of time (19.14 seconds on our artificial dataset of size 10,000 described above, with a running time of O(mn), where n is the study size and m is the number of SNPs).

Though our work focuses on the technical aspects related to preserving privacy, a related and equally important aspect comes from the policy side. Methods similar to those presented here offer the biomedical community the tools it needs to ensure privacy; however, the community must determine appropriate privacy protections (ranging from the release of all MAF data to use of controlled access repositories) and in what contexts (e.g., do studies of certain populations, such as children, require extra protection?). It is our hope that our work helps inform this debate. Our tool could, for example, be used in combination with controlled access repositories to release the MAFs of a limited number of SNPs depending on what privacy protections are deemed reasonable.

Our work addresses the critical need to provide privacy guarantees to study participants and patients by introducing a quantitative measurement of privacy lost by release of aggregate data, and thus may encourage release of genomic data.

A Python implementation of our method and more detailed derivations of our results are available at http://privmaf.csail.mit.edu.

Acknowledgments

We thank Y.W. Yu, N. Daniels and R. Daniels for most helpful comments. Also thanks to the anonymous reviewers for helpful suggestions. This material is based upon work supported by the National Science Foundation Graduate Research Fellowship under Grant No. #1122374 and NIH Grant GM108348. This study makes use of data generated by the Wellcome Trust Case Control Consortium. A full list of the investigators who contributed to the generation of the data is available from www.wtccc.org.uk. Funding for the project was provided by the Wellcome Trust under award 076113.

Appendix

A. Derivation of PrivMAF

We give a quick sketch of the derivation for PrivMAF on unperturbed data, the derivation for the perturbed versions being similar. A more detailed derivation is available at http://privmaf.csail.mit.edu. Note that we use the notation D̃ = {z̃1, · · ·, z̃n}.

We begin with Pr(d ∈ D̃|d ∈ B̃, MAF(D̃) = MAF(D)). Let Pn and x(D) be as in Section II-B. By repeated use of Bayes’ law, the fact

and the fact that B̃ − D̃ = {b ∈ B̃|b ∉ D̃} and D̃ are independent random variables, we get that this equals

Using the fact that (1 − z)n ≥ 1 − nz when 0 ≤ z ≤ 1 (this follows from the inclusion exclusion principle) we get that this is

Note that for realistic choices of n, N, p and m we get that Pr(d ∈ B̃ − D̃) is approximately equal to (N − n) Pr(d = z̃1) and that Pr(d ∈ B̃ − D̃) ≪ 1, so 1 − Pr(d ∈ B̃ − D̃) ≈ 1. Plugging this in we get the measure

$$\mathrm{PrivMAF}(d, \mathrm{MAF}(D)) = \frac{1}{1 + \dfrac{(N - n)\, P_n(x(D))}{n\, P_{n-1}(x(D) - d)}},$$

which is what we use in practice.

B. Perturbed Statistics

When calculating PrivMAFε we use the approximation

where

Similarly, when considering truncated data, we use the approximation

where

and

Letting , we see that

and

This allows us to calculate , just as we wanted. The exact formulas and the corresponding derivations for PrivMAFtrunc(k) and PrivMAFε are available at http://privmaf.csail.mit.edu.

C. Changing the Assumptions

The above model makes a few assumptions (assumptions that are present in most previous work that we are aware of). In particular it assumes that there is no linkage disequilibrium (LD) (which is to say that the SNPs are independently sampled), that the genotypes of individuals are independent of one another (that there are no relatives, population stratification, etc. in the population), and that the background population is in Hardy-Weinberg Equilibrium (H-W Equilibrium). The assumption that genotypes of different individuals are independent from one another is difficult to remove, and we do not consider it here. We can, however, remove either the assumption of H-W Equilibrium or of SNPs being independent.

First consider the case of H-W Equilibrium. Let us consider the ith SNP, and let $p_i$ be the minor allele frequency. We also let $p_{0,i}$, $p_{1,i}$ and $p_{2,i}$ be the probability of us having zero, one, or two copies of the minor allele respectively. Assuming the population is in H-W equilibrium is the same as assuming that $p_{0,i} = (1 - p_i)^2$, $p_{1,i} = 2 p_i (1 - p_i)$, and $p_{2,i} = p_i^2$. Dropping this assumption, we see that all of the calculations above still hold, except we get that

where we use the convention that when c < 0. This allows us to remove the assumption of H-W Equilibrium. Unfortunately, there are two problems with this approach. The first is statistical: instead of estimating just one parameter per SNP ($p_i$), we have to estimate two ($p_{0,i}$ and $p_{1,i}$, since $p_{2,i}$ can be calculated from the other two). The other problem is that calculating $\Pr(x_i(D) = x_i)$ becomes much more computationally intensive, so much so that it is prohibitive for large data sets.
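To make the computational difference concrete, the following sketch compares the two settings. It assumes that x_i(D) can be represented by the total number of minor alleles at SNP i among the n study participants, and that participants are independent. Under H-W Equilibrium this count is Binomial(2n, p_i) and has a closed form; with general genotype probabilities it is computed here by repeated convolution, which costs on the order of n² operations per SNP. This is one possible way to do the calculation and is not necessarily the formula used by the authors; all function names are hypothetical.

```python
from math import comb
from typing import List, Sequence, Tuple


def hw_genotype_probs(p: float) -> Tuple[float, float, float]:
    """Genotype probabilities (0, 1, 2 minor alleles) under H-W Equilibrium."""
    return ((1 - p) ** 2, 2 * p * (1 - p), p ** 2)


def allele_count_dist(genotype_probs: Sequence[float], n: int) -> List[float]:
    """Distribution of the total minor-allele count among n independent
    individuals, each with the given (p0, p1, p2), built by adding one
    individual at a time (a convolution per individual)."""
    dist = [1.0]  # with zero individuals the count is 0 with probability 1
    for _ in range(n):
        new = [0.0] * (len(dist) + 2)
        for count, prob in enumerate(dist):
            for copies, g in enumerate(genotype_probs):
                new[count + copies] += prob * g
        dist = new
    return dist


def binomial_pmf(k: int, trials: int, p: float) -> float:
    """Closed-form Binomial(trials, p) probability of exactly k successes."""
    return comb(trials, k) * p ** k * (1 - p) ** (trials - k)
```

Calling `allele_count_dist(hw_genotype_probs(0.3), 5)` reproduces the Binomial(10, 0.3) probabilities, whereas genotype probabilities such as `(0.5, 0.2, 0.3)`, which violate H-W Equilibrium, give a different distribution that has no single-parameter closed form.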

To drop the assumption of no LD, we can model the genome as a Markov model (a hidden Markov model could be used instead, which allows for more complex relationships, but for simplicity's sake we discuss only Markov models, since the generalization to HMMs is straightforward). In such a model the state of a given SNP depends only on the state of the previous SNP. To specify such a model we need to specify the probability distribution of the first SNP and, for each subsequent SNP, its distribution conditional on the previous SNP. It is then straightforward to modify our framework to deal with this model. As above, however, this requires us to estimate many more parameters and is also much more time consuming; thus it is not likely to be useful in practice.
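As a rough illustration of the Markov model described above, the sketch below computes the probability of one individual's genotype sequence from an initial distribution and a list of per-SNP transition matrices. The data layout (`initial`, `transitions`) and the function names are assumptions made for this example and are not part of the PrivMAF implementation.

```python
from typing import Sequence

# A genotype is a sequence of minor-allele counts (0, 1 or 2), one per SNP.
Matrix = Sequence[Sequence[float]]


def markov_genotype_prob(
    genotype: Sequence[int],
    initial: Sequence[float],
    transitions: Sequence[Matrix],
) -> float:
    """Probability of a genotype under a first-order Markov chain.

    initial[a] is the probability that the first SNP has a minor alleles;
    transitions[i][a][b] is the probability that SNP i+1 has b minor
    alleles given that SNP i has a minor alleles.
    """
    prob = initial[genotype[0]]
    for i in range(1, len(genotype)):
        prob *= transitions[i - 1][genotype[i - 1]][genotype[i]]
    return prob


def markov_free_parameters(m: int) -> int:
    """Free parameters for m SNPs: 2 for the first SNP, plus 3 rows with
    2 free entries each for every later SNP (each row sums to one)."""
    return 2 + 6 * (m - 1)
```

Under this layout a model for m SNPs has 2 + 6(m − 1) free parameters, compared with m parameters when SNPs are independent and in H-W Equilibrium, which illustrates the estimation burden noted above.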

Finally, we assume that D is chosen uniformly at random from B. This can be thought of as assuming that the adversary has no knowledge about how the genomic makeup of D differs from that of B. If this is not the case, then different probabilistic models may have to be used. In particular, we would like to develop probabilistic models that take into account the release of other aggregate data (such as regression coefficients, p-values, etc.); such models will likely require us to abandon the assumption of individuals being chosen uniformly at random.

It is worth noting that choosing a smaller N could conceivably be used as a buffer to help protect against such misspecification. More specifically, the adversary has a prior belief Pr(d ∈ D | background knowledge). In our model we assume that this equals n/N (though there are some complications due to the possibility of duplicate genomes). This gives us a correspondence between the choice of N and the choice of Pr(d ∈ D | background knowledge), so choosing a smaller N could help overcome this issue. The exact way in which N determines our privacy measure can be seen in Fig S5 on our website at http://privmaf.csail.mit.edu.

D. Release Mechanism

Often one might like to use PrivMAF to decide if it is safe to release a set of MAFs from a study. This can be done by choosing α between 0 and 1 and releasing the MAFs if and only if PrivMAF(D) ≤ α. The decision to release or withhold the MAFs, however, itself gives away a little information. In practice this is unlikely to be an issue, but in theory it can lead to privacy breaches. This issue can be dealt with by releasing the MAFs if and only if PrivMAF(D) ≤ β(α), where β = β(α) is chosen so that:

where

We call this release mechanism the Allele Leakage Guarantee Test (ALGT). Unlike the naive release mechanism, ALGT gives us the following privacy guarantee:

Theorem 1

Choose β as above. Then, if PrivMAF(D) ≤ β, for any choice of d ∈ D we get that

is less than or equal to α.

Note that the choice of α determines the level of privacy achieved. Picking this level is left to the practitioner; perhaps an approach similar to that taken by Hsu et al. [30] is appropriate.

A more detailed proof of the privacy result above can be found at http://privmaf.csail.mit.edu.
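Both release rules in this section share the same shape: compute a worst-case score for the study and compare it with a threshold. The sketch below shows only that thresholding step. It assumes that PrivMAF(D) is the maximum of the per-individual scores, and it takes the threshold as an input (α for the naive rule, β(α) for ALGT); it does not attempt to compute β(α). The function names are hypothetical.

```python
from typing import Sequence


def worst_case_score(individual_scores: Sequence[float]) -> float:
    """Study-level score, assumed here to be the largest per-individual score."""
    if not individual_scores:
        raise ValueError("at least one individual score is required")
    return max(individual_scores)


def should_release(individual_scores: Sequence[float], threshold: float) -> bool:
    """Return True if and only if the worst-case score is at most the threshold.

    Pass alpha for the naive rule, or a separately computed beta(alpha)
    for the ALGT rule.
    """
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must lie between 0 and 1")
    return worst_case_score(individual_scores) <= threshold
```

With the made-up scores `[0.1, 0.3]` and a threshold of 0.5 the MAFs would be released; a single participant with a score of 0.7 is enough to block the release, which reflects the worst-case nature of the test.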

References

- 1. Homer N, Szelinger S, Redman M, Duggan D, Tembe W, Muehling J, Pearson J, Stephan D, Nelson S, Craig D. Resolving individuals contributing trace amounts of DNA to highly complex mixtures using high-density SNP genotyping microarrays. PLoS Genet. 2008;4(8). doi: 10.1371/journal.pgen.1000167.

- 2. Erlich Y, Narayanan A. Routes for breaching and protecting genetic privacy. Nature Reviews Genetics. 2014;15:409–421. doi: 10.1038/nrg3723.

- 3. Zhou X, Peng B, Li Y, Chen Y, Tang H, Wang X. To release or not to release: evaluating information leaks in aggregate human-genome data. ESORICS. 2011; pp. 607–627.

- 4. Schadt E, Woo S, Hao K. Bayesian method to predict individual SNP genotypes from gene expression data. Nat Genet. 2012;44(5):603–608. doi: 10.1038/ng.2248.

- 5. Im H, Gamazon E, Nicolae D, Cox N. On sharing quantitative trait GWAS results in an era of multiple-omics data and the limits of genomic privacy. Am J Hum Genet. 2012;90(4):591–598. doi: 10.1016/j.ajhg.2012.02.008.

- 6. Visscher P, Hill W. The limits of individual identification from sample allele frequencies: theory and statistical analysis. PLoS Genet. 2009;5(10). doi: 10.1371/journal.pgen.1000628.

- 7. Sankararaman S, Obozinski G, Jordan M, Halperin E. Genomic privacy and the limits of individual detection in a pool. Nat Genet. 2009;41:965–967. doi: 10.1038/ng.436.

- 8. Jacobs K, Yeager M, Wacholder S, Craig D, Kraft P, Hunter D, Paschal J, Manolio T, Tucker M, Hoover R, Thomas G, Chanock S, Chatterjee N. A new statistic and its power to infer membership in a genome-wide association study using genotype frequencies. Nat Genet. 2009;41(11):1253–1257. doi: 10.1038/ng.455.

- 9. Braun R, Rowe W, Schaefer C, Zhan J, Buetow K. Needles in the haystack: identifying individuals present in pooled genomic data. PLoS Genet. 2009;5(10). doi: 10.1371/journal.pgen.1000668.

- 10. Lumley T, Rice K. Potential for revealing individual-level information in genome-wide association studies. J Am Med Assoc. 2010;303(7):659–660. doi: 10.1001/jama.2010.120.

- 11. Craig D, Goor R, Wang Z, Paschall J, Ostell J, Feolo M, Sherry S, Manolio T. Assessing and mitigating risk when sharing aggregate genetic variant data. Nat Rev Genet. 2011;12(10):730–736. doi: 10.1038/nrg3067.

- 12. Sweeney L. K-anonymity: a model for protecting privacy. International Journal on Uncertainty, Fuzziness and Knowledge-based Systems. 2002;10:557–570.

- 13. Loukides G, Gkoulalas-Divanis A, Malin B. Anonymization of electronic medical records for validating genome-wide association studies. PNAS. 2010;107(17):7898–7903. doi: 10.1073/pnas.0911686107.

- 14. Dwork C, Pottenger R. Towards practicing privacy. J Am Med Inform Assoc. 2013;20(1):102–108. doi: 10.1136/amiajnl-2012-001047.

- 15. Uhler C, Fienberg S, Slavkovic A. Privacy-preserving data sharing for genome-wide association studies. Journal of Privacy and Confidentiality. 2013;5(1):137–166.

- 16. Bierut L, et al. ADH1B is associated with alcohol dependence and alcohol consumption in populations of European and African ancestry. Mol Psychiatry. 2012;17(4):445–450. doi: 10.1038/mp.2011.124.

- 17. Manen D, Wout A, Schuitemaker H. Genome-wide association studies on HIV susceptibility, pathogenesis and pharmacogenomics. Retrovirology. 2012;9(70):18. doi: 10.1186/1742-4690-9-70.

- 18. Ramos E, Din-Lovinescu C, Bookman E, McNeil L, Baker C, Godynskiy G, Harris E, Lehner T, McKeon C, Moss J, Starks V, Sherry S, Manolio T, Rodriguez L. A mechanism for controlled access to GWAS data: experience of the GAIN data access committee. Am J Hum Genet. 2013;92(4):479–488. doi: 10.1016/j.ajhg.2012.08.034.

- 19. Gilbert N. Researchers criticize genetic data restrictions. Nature. 2008. doi: 10.1038/news.2008.1083.

- 20. Zerhouni E, Nabel E. Protecting aggregate genomic data. Science. 2008;321(5898):1278. doi: 10.1126/science.322.5898.44b.

- 21. Walker L, Starks H, West K, Fullerton S. dbGaP data access requests: a call for greater transparency. Sci Transl Med. 2011;3(113):1–4. doi: 10.1126/scitranslmed.3002788.

- 22. Oliver J, Slashinski M, Wang T, Kelly P, Hilsenbeck S, McGuire A. Balancing the risks and benefits of genomic data sharing: genome research participants' perspectives. Public Health Genom. 2012;15(2):106–114. doi: 10.1159/000334718.

- 23. Malin B, El Emam K, O'Keefe C. Biomedical data privacy: problems, perspectives and recent advances. J Am Med Inform Assoc. 2013;20(1):2–6. doi: 10.1136/amiajnl-2012-001509.

- 24. Gymrek M, McGuire A, Golan D, Halperin E, Erlich Y. Identifying personal genomes by surname inference. Science. 2013;339(6117):321–324. doi: 10.1126/science.1229566.

- 25. Sweeney L, Abu A, Winn J. Identifying participants in the personal genome project by name. SSRN Electronic Journal. 2013:1–4.

- 26. El Emam K, Jonker E, Arbuckle L, Malin B. A systematic review of re-identification attacks on health data. PLoS ONE. 2011;6(12). doi: 10.1371/journal.pone.0028071.

- 27. Sweeney L. Simple demographics often identify people uniquely. 2010. http://dataprivacylab.org/projects/identifiability/

- 28. The Wellcome Trust Case Control Consortium. Genome-wide association study of 14,000 cases of seven common diseases and 3000 shared controls. Nature. 2007;447:661–683. doi: 10.1038/nature05911.

- 29. Ghosh A, et al. Universally utility-maximizing privacy mechanisms. SIAM J Comput. 2012;41(6):1673–1693.

- 30. Hsu J, Gaboardi M, Haeberlen A, Khanna S, Narayan A, Pierce B, Roth A. Differential privacy: an economic method for choosing epsilon. Proceedings of the 27th IEEE Computer Security Foundations Symposium; 2014.

- 31. Rodriguez L, Brooks L, Greenberg J, Green E. The complexities of genomic identifiability. Science. 2013;339:275–276. doi: 10.1126/science.1234593.