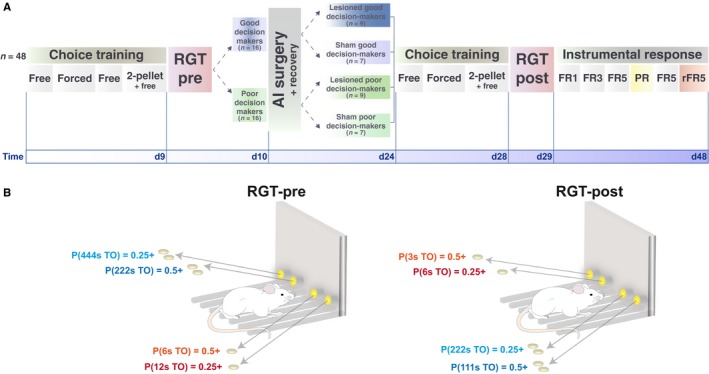

Figure 1.

General experimental design and rGT choice structure. (A) The behavioural procedure before surgery consisted of nine daily sessions (see details in Materials and methods). Animals initially responded in any of the four active holes during free choice sessions to obtain one food pellet. Forced choice sessions subsequently ensured that animals explored every active hole. Two further free choice sessions were then administered, during which animals received two sugar pellets for half of each session. Rats were then tested on a single pre‐surgery rGT session, from which good (n = 16) and poor (n = 16) decision‐makers were identified according to their performance. Rats underwent either bilateral AI or sham lesions (n = 9 and n = 7, respectively). After recovery, animals were exposed to similar training sessions, but over 4 days, and a post‐surgery rGT session was then conducted. The second rGT was based on the same principle of disadvantageous and advantageous two‐hole options but used a new combination of conditions to avoid learning effects. To further explore the effects of AIC lesions, the acquisition of instrumental responding for food reward was measured in a two‐lever operant conditioning chamber under increasing FR response requirements (FR1, FR3 and FR5). Subsequently, a PR schedule, during which the cost of the reward was progressively increased within a single session, measured animals’ motivation for food reward. Lastly, rats’ ability to update or alter their behaviour was measured via five reversal learning sessions under an FR5 schedule with the values of the levers reversed (FR5r). (B) Diagram showing the utility of the four options in each of the two rGT sessions. Rats were offered four options, two of which were associated with a small reward (one pellet) and the other two with a higher reward (two pellets, represented here pictorially).
The higher rewards were associated with higher punishment in the form of timeout periods, the duration of which is shown in seconds along with the relative probability (P). Timeouts were delivered concomitantly with reward. The options delivering single pellets were considered advantageous because of their lower potential timeout punishments. Thus, rats had to sample the various options and learn to move away from high reward/high cumulative loss choices towards low reward/low cumulative loss choices. Advantageous options were counterbalanced against each animal's preferred side during training. During the second rGT, the contingencies were reshuffled and the duration of penalties was altered, so animals had to sample each hole again in order to re‐learn the optimal strategy.
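The reason a one‐pellet option can be more advantageous than a two‐pellet option can be illustrated with a minimal sketch of expected reward rate. All numbers below (pellet counts aside, the timeout durations, the probabilities and the base trial time `trial_s`) are hypothetical placeholders chosen for illustration; they are not the values shown in panel B, and the function name `pellets_per_minute` is likewise an assumption rather than part of the original analysis.

```python
# Illustrative only: every numeric value here is a hypothetical placeholder,
# NOT a contingency taken from the figure.

def pellets_per_minute(pellets, timeout_s, p_timeout, trial_s=5.0):
    """Expected pellets earned per minute when choosing one option repeatedly.

    The reward is assumed to be delivered on every trial, with a timeout of
    `timeout_s` seconds added with probability `p_timeout` (the timeout is
    delivered alongside the reward). `trial_s` is an assumed fixed time
    needed to complete one trial without a timeout.
    """
    expected_trial_s = trial_s + p_timeout * timeout_s
    return 60.0 * pellets / expected_trial_s


# Hypothetical low reward / low cumulative loss option.
rate_advantageous = pellets_per_minute(pellets=1, timeout_s=10.0, p_timeout=0.25)

# Hypothetical high reward / high cumulative loss option.
rate_disadvantageous = pellets_per_minute(pellets=2, timeout_s=200.0, p_timeout=0.5)

print(f"advantageous:    {rate_advantageous:.2f} pellets/min")
print(f"disadvantageous: {rate_disadvantageous:.2f} pellets/min")
```

Under these assumed values, the long and frequent timeouts attached to the two‐pellet option reduce its pellet yield per unit time below that of the one‐pellet option, which is the sense in which the smaller reward is the better long‐run choice.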