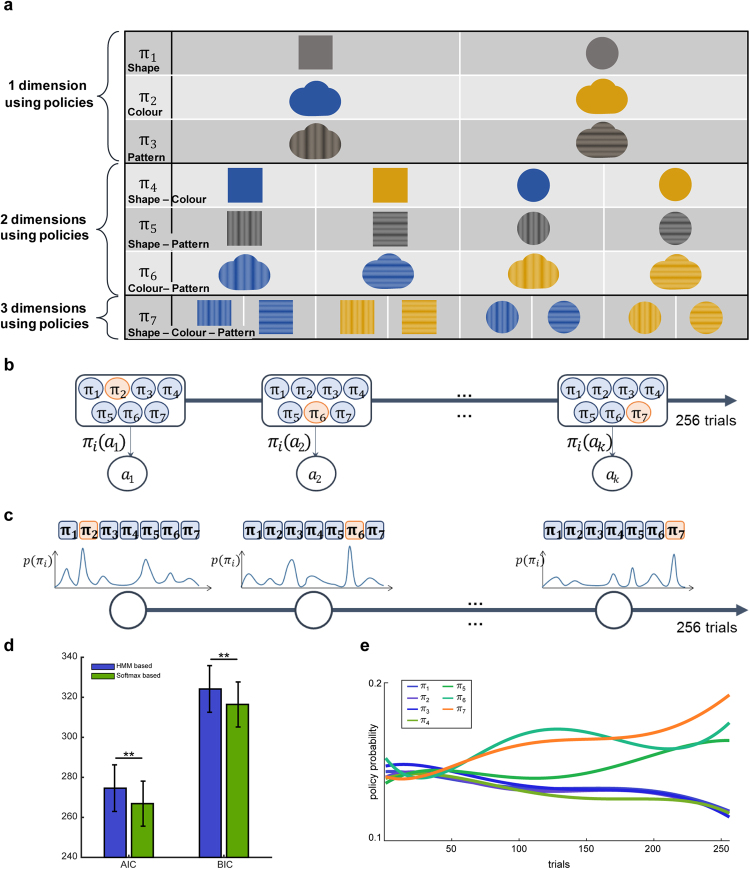

Figure 2.

Probabilistic policy exploration model. (a) In the naïve reinforcement learning (RL) phase, the features that might be used were abstracted as policies: π1, shape information (1 dim); π2, colour information (1 dim); π3, pattern information (1 dim); π4, combinations of colour and shape information (2 dim); π5, combinations of shape and pattern information (2 dim); π6, combinations of colour and pattern information (2 dim); π7, combinations of shape, colour, and pattern information (3 dim). (b) Schematic diagram of the hidden Markov model (HMM)-based policy search model. (c) Schematic diagram of the softmax function-based policy search model. (d) Comparison of model results. Blue: HMM-based model; green: softmax function-based policy search model (paired t-test, p = 0.0080; mean ± SEM). (e) Representative fitted policy probabilities. Each policy is shown in its own colour.
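
As an illustration of panels (a) and (c), the seven policies can be read as the non-empty subsets of the three features, and a softmax can turn per-policy values into selection probabilities. The following is only a minimal sketch under stated assumptions: the function names, the inverse-temperature parameter `beta`, and the placeholder policy values are hypothetical and are not taken from the fitted model.

```python
from itertools import combinations
import math

# The three stimulus features named in panel (a).
FEATURES = ("shape", "colour", "pattern")


def enumerate_policies(features=FEATURES):
    """Return every non-empty feature subset, ordered by dimensionality.

    With three features this yields seven subsets, matching pi1..pi7.
    """
    return [
        subset
        for k in range(1, len(features) + 1)
        for subset in combinations(features, k)
    ]


def softmax(values, beta=1.0):
    """Map policy values to selection probabilities.

    beta is an assumed inverse temperature (hypothetical parameter).
    The maximum is subtracted before exponentiating for numerical stability.
    """
    peak = max(values)
    exps = [math.exp(beta * (v - peak)) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


policies = enumerate_policies()

# Placeholder values: equal values give a uniform distribution over policies.
probs = softmax([0.0] * len(policies))

for index, (policy, prob) in enumerate(zip(policies, probs), start=1):
    print(f"pi{index}: {' + '.join(policy)} ({len(policy)} dim), p = {prob:.3f}")
```

With equal placeholder values, every policy receives the same probability; unequal values would shift probability towards the higher-valued policies, more sharply as `beta` grows.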