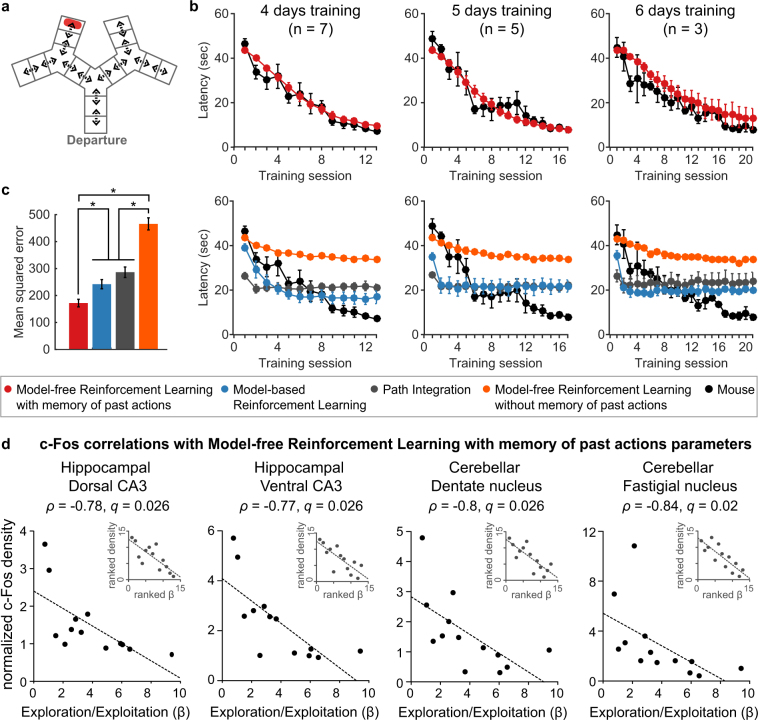

Figure 3.

Neural correlates of the exploration-exploitation balance of the model-free reinforcement learning model with a memory of past actions. (a) Virtual maze used for the simulations. The maze was discretized into corridors, dead-ends and intersections. (b) Average simulation curves for mice that reached the exploitation criteria in 4 days (left), 5 days (centre) or 6 days (right). The simulations, run with 100 freely choosing agents per mouse, tested the ability of each model to reproduce the behavioural data (black). The optimal parameters used for the simulations were identified by fitting each mouse’s actions on a trial-by-trial basis (Supplementary Table 5). Only the model-free reinforcement learning model with a memory of past actions successfully replicated the mice’s behaviour (top), whereas the other models were unable to reach the mice’s final performance (bottom). (c) Average mean-squared error between the simulations and the mice’s performance for each model tested, showing a significantly lower mean-squared error for the model-free reinforcement learning model with a memory of past actions (* indicates q < 0.05, FDR-corrected, Mann-Whitney comparisons). (d) Correlations of c-Fos with the exploration/exploitation trade-off of the model-free reinforcement learning model with a memory of past actions, for the 13 mice for which this model had the highest log-likelihood among the three models that can learn the sequence. Only hippocampal and cerebellar structures showed significant correlations (q < 0.05, FDR-corrected, Spearman correlation). The main plot shows the correlation on the raw data; the inset in the top right-hand corner shows the same correlation on the ranked data, which are used to calculate Spearman’s correlation. Data represent mean ± s.e.m.
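
As a reading aid for panel (d), the relation between the main plot and the inset can be sketched in a few lines: Spearman's correlation is the Pearson correlation computed on the ranks of the data rather than on the raw values. The sketch below is a minimal illustration under stated assumptions, not the analysis code used for the figure. The function names (`average_ranks`, `pearson`, `spearman`) and the example numbers are hypothetical, and ties are handled by average ranks, which is the common convention but is not stated in the caption.

```python
from math import sqrt


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j over the run of values tied with the one at position i.
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the rank-transformed data."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical values, chosen only to show a monotonic but non-linear relation.
trade_off = [0.1, 0.2, 0.4, 0.8, 1.6]
signal = [1.0, 4.0, 9.0, 10.0, 10.5]

raw_r = pearson(trade_off, signal)    # correlation on the raw data (main plot)
rank_r = spearman(trade_off, signal)  # correlation on the ranks (inset)
```

Because the hypothetical relation is strictly increasing, the rank-based coefficient is at its maximum while the raw-data coefficient is lower, which is why the two views of the same data in panel (d) can look different.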