Evidence shows most teaching evaluation practices do not reflect stated policies, even when the policies specifically espouse teaching as a value. This essay discusses four guiding principles for aligning practice with stated priorities in formal policies and highlights three university efforts to improve the practice of evaluating teaching.

Abstract

Recent calls for improvement in undergraduate education within STEM (science, technology, engineering, and mathematics) disciplines are hampered by the methods used to evaluate teaching effectiveness. Faculty members at research universities are commonly assessed and promoted mainly on the basis of research success. Improving the quality of undergraduate teaching across all disciplines, not only STEM fields, requires creating an environment wherein continuous improvement of teaching is valued, assessed, and rewarded at various stages of a faculty member’s career. This requires consistent application of policies that reflect well-established best practices for evaluating teaching at the department, college, and university levels. Evidence shows most teaching evaluation practices do not reflect stated policies, even when the policies specifically espouse teaching as a value. Thus, the misalignment between practice and policy is a major barrier to establishing a culture in which teaching is valued. Situated in the context of current national efforts to improve undergraduate STEM education, including the Association of American Universities Undergraduate STEM Education Initiative, this essay discusses four guiding principles for aligning practice with stated priorities in formal policies: 1) enhancing the role of deans and chairs; 2) effectively using the hiring process; 3) improving communication; and 4) improving the understanding of teaching as a scholarly activity. In addition, three specific efforts to improve the practice of evaluating teaching are presented as examples: 1) the Three Bucket Model of merit review at the University of California, Irvine; 2) the Evaluation of Teaching Rubric at the University of Kansas; and 3) the Teaching Quality Framework at the University of Colorado, Boulder. These examples provide flexible criteria to holistically evaluate and improve the quality of teaching across the diverse institutions comprising modern higher education.

Research on how students learn and on learner-centered teaching practices is well documented in peer-reviewed scholarship (National Research Council, 2000; Doyle, 2008; Ambrose et al., 2010; Brown et al., 2014) and more recently highlighted in high-level policy reports and papers (Handelsman et al., 2004; Singer et al., 2012; Kober, 2015). Robust evidence shows that active-learning pedagogies are more effective than traditional lecture-based methods in helping students, including students from underrepresented backgrounds, learn more, persist in STEM (science, technology, engineering, and mathematics) fields, and experience higher rates of completing their undergraduate degrees (Lorenzo et al., 2006; Haak et al., 2011; Eddy and Hogan, 2014; Freeman et al., 2014; Becker et al., 2015; Trenshaw et al., 2016). Grounded in this scholarship, many postsecondary institutions have launched institution-wide efforts to improve the quality and effectiveness of undergraduate teaching and learning. As commented by Susan Singer, former director of the Division of Undergraduate Education at the National Science Foundation, the landscape is filled with encouraging ways to transform undergraduate education (Singer, 2015).

Despite this movement toward developing and supporting systemic reform in undergraduate education, a majority of research university faculty members who teach undergraduate science and engineering classes remain inattentive to the shifting landscape. Student-centered, evidence-based teaching practices are not yet the norm in most undergraduate STEM education courses, and the desired magnitude of change in STEM pedagogy has not materialized (Henderson and Dancy, 2007; Dancy and Henderson, 2010; Dancy et al., 2014; Anderson et al., 2011; Singer et al., 2012; Malcolm and Feder, 2016).

A critical factor impeding systemic improvements of undergraduate education, not only in STEM fields, is how teaching is considered in the rewards structure. Development of a coherent set of policies to guide the evaluation of a faculty member’s work is a precondition for improving the merit and promotion processes. However, evidence shows wide variation in commitment to and expectations for research, teaching, and service between and within research universities (Fairweather and Beach, 2002). Furthermore, stated policies that articulate the value of teaching have been insufficient to raise the attention paid to teaching. Merely espousing the value of teaching is not enough. Frequently, department, college, and university practices do not align with stated priorities in their formal policies (Fairweather, 2002, 2009; Huber, 2002).

Currently, faculty members at research universities tend to be assessed and promoted mainly on the basis of research success (Bradforth et al., 2015). “Neglect of undergraduate education had been built into the postwar university, in which faculty members were rewarded for their research output, graduate student Ph.D. production, and the procurement of external research support, but not for time devoted to undergraduate education” (Lowen, 1997, p. 224). This reality is frequently reinforced by a lack of support and feedback about teaching (Gormally et al., 2014). Furthermore, teaching effectiveness is overwhelmingly assessed using student evaluation surveys completed at the end of each course, despite evidence that these evaluations rarely measure teaching effectiveness (Clayson, 2009; Boring et al., 2016), contain known biases (Centra and Gaubatz, 2000), promote the status quo, and in some cases reward poor teaching (Braga et al., 2014). There is also increasing evidence that unintended biases of students influence course evaluations (MacNell et al., 2015). However, the ease with which these student evaluation surveys are administered and used in the promotion and tenure process has resulted in a long-standing practice that presents a barrier to innovation and scholarly teaching.

Providing faculty members with support for improved teaching, aligning incentives with the expectation of quality teaching, using metrics that accurately reflect teaching effectiveness, and developing transparent evaluation practices that are not unduly biased are necessary for systemic improvement of undergraduate education. Enabling effective evaluation of teaching will require the development of practical frameworks that are scholarly, accessible, efficient, and aligned with local cultures so as not to preclude their use by most institutions. Such frameworks will provide the greatest probability that teaching and its evaluation will be taken seriously in the academy (Wieman, 2015).

This essay discusses the collaboration between the Association of American Universities<sup>1</sup> (AAU) and the Cottrell Scholars<sup>2</sup> funded by Research Corporation for Science Advancement (RCSA) to address this critical barrier to improving the quality of undergraduate STEM education. While our efforts have focused primarily on undergraduate STEM education, many of the recommended practices would serve to improve undergraduate education generally.

An initial AAU and Cottrell Scholar collaborative project (2012–2015) focused on understanding the landscape of established and emergent means to more accurately evaluate and assess teaching effectiveness. Building from this work, a second collaborative project (2015–2017) aimed to develop practical guidelines to recognize and reward contributions to teaching at research universities at the department, college, and university levels. A starting point for this project was to assess the current espoused importance of teaching at research universities by examining published promotion and tenure policies at research-intensive institutions. This information was combined with an analysis from a 2014 survey administered to instructional staff on the importance of teaching at research universities as part of the AAU Undergraduate STEM Education Initiative. These results formed the basis for a workshop sponsored by the AAU and RCSA held in May of 2016 that brought together leading higher education scholars and practitioners and research-active faculty members to develop specific recommendations and guidance to value, assess, and reward effective teaching.

The following essay reports on the gap between policies and practices within an institution and offers strategies intended to provide guidance on how institutions can more effectively align their practices for valuing teaching with the stated priorities in their formal policies. The essay concludes with profiles of three institutional examples drawing upon such strategies to assess and reward contributions to teaching.

THE GAP BETWEEN POLICY AND PRACTICE

An analysis of 51 institutions’ university-level promotion and tenure policies shows that many contain language valuing teaching in addition to research (see list of universities in the Supplemental Materials). Forty-one of these policies give some form of guidelines as to how teaching should be considered. Of the 41 institutions that provide guidelines, 36 require at least one form of evidence, 36 recommend or require student evaluations to be used, and 26 recommend or require peer classroom observation.

The AAU, as part of its Undergraduate STEM Education Initiative, collected statements on the evaluation of teaching from 32 department chairs at eight universities. Across all institutions and departments there was a strong assertion that teaching is highly valued. Furthermore, all departments make use of student evaluations at the end of courses and provide an annual award for excellence in teaching. However, for 19 of the 32 statements submitted (59%), it was impossible to discern whether attention to student learning outcomes or evidence-based pedagogy was either required or recognized.

Additionally, the AAU collected information about the value placed on teaching and the quality of the evidence used to assess effective teaching in merit and promotion processes from approximately 1000 instructional staff. Respondents<sup>3</sup> were asked to indicate the extent to which they agreed with a series of statements about the value placed on teaching by their departments, colleges, and schools, as shown in Table 1. This information was collected to provide a baseline of the overall culture toward teaching at these various levels as part of the AAU Undergraduate STEM Education Initiative pilot project sites. Respondents agreed that both their department and campus administrations at their universities recognize the importance of teaching and are supportive of faculty members improving and changing their teaching practices (3.20 ± 0.74 and 3.02 ± 0.75, respectively). However, when asked whether faculty members in their departments believe that ongoing improvement in teaching is part of their job duties, the level of agreement drops slightly (2.90 ± 0.74). Also, when asked whether effective teaching plays a meaningful role in the annual review and salary processes within their colleges and in the promotion and tenure processes at their institutions, the mean responses fell midway between agree and disagree (2.50 ± 0.87 and 2.54 ± 0.86, respectively). These results suggest some disconnection between what is publicly supported within colleges and universities and what actually happens in day-to-day processes.

TABLE 1.

Overall means for survey statements by faculty members about importance and recognition of teaching (1 = strongly disagree, 2 = disagree, 3 = agree, 4 = strongly agree)

| Statement | Mean | SD | Valid N |
|---|---|---|---|
| My departmental administration recognizes the importance of teaching and is supportive of faculty improving and changing teaching practices. | 3.20 | 0.74 | 964 |
| Campus administration at my university recognizes the importance of teaching and is supportive of faculty improving and changing teaching practices. | 3.02 | 0.75 | 960 |
| Instructors in my department believe that ongoing improvement in teaching is part of their jobs. | 2.90 | 0.74 | 962 |
| In my opinion, effective teaching plays a meaningful role in the annual review and salary processes in my college. | 2.50 | 0.87 | 950 |
| In my opinion, effective teaching plays a meaningful role in the promotion and tenure processes at my institution. | 2.54 | 0.86 | 950 |

Furthermore, when respondents were asked to provide their opinions about the quality of the evidence for effective teaching used by their colleges in annual review and salary processes and in the promotion and tenure processes at their institutions, those choosing “don’t know” or not answering increased to slightly more than 40% (see Table 2). Of those who chose to respond, in both cases, one-third noted the teaching evidence was of “low quality” and half cited “medium quality” evidence of effective teaching. This reinforces findings previously demonstrated by Wieman (2015).

TABLE 2.

Responses to quality of evidence of effective teaching

| Your feedback regarding the quality of the evidence for teaching used in the following circumstances: | Low quality (N) | Low quality (%) | Medium quality (N) | Medium quality (%) | High quality (N) | High quality (%) | Total (N) | No response or don’t know (N) |
|---|---|---|---|---|---|---|---|---|
| By your college in the annual review and salary process | 224 | 34.4 | 331 | 50.8 | 97 | 14.9 | 652 | 441 |
| By your institution in the promotion and tenure process | 212 | 33.2 | 325 | 50.9 | 101 | 15.8 | 638 | 455 |
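
The percentages in Table 2 and the "slightly more than 40%" nonresponse figure can be rechecked from the reported counts. The short sketch below does this arithmetic; the counts come from Table 2 and the 1093 respondents from the survey footnote, while the names `table2` and `summarize` are hypothetical and used only for illustration.

```python
# Counts copied from Table 2; 1093 is the number of respondents
# reported in the survey footnote.
TOTAL_RESPONDENTS = 1093

table2 = {
    "annual review and salary": {"low": 224, "medium": 331, "high": 97},
    "promotion and tenure": {"low": 212, "medium": 325, "high": 101},
}


def summarize(counts, total_respondents=TOTAL_RESPONDENTS):
    """Return (number who rated, % share per rating, % nonresponse)."""
    rated = sum(counts.values())
    shares = {level: round(100 * n / rated, 1) for level, n in counts.items()}
    missing = total_respondents - rated
    nonresponse = round(100 * missing / total_respondents, 1)
    return rated, shares, nonresponse


for process, counts in table2.items():
    rated, shares, nonresponse = summarize(counts)
    print(f"{process}: rated={rated}, shares={shares}, nonresponse={nonresponse}%")
```

Under these inputs, the nonresponse shares come out at roughly 40.3% and 41.6%, consistent with the text.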

RECOMMENDATIONS TO VALUE, ASSESS, AND REWARD CONTRIBUTIONS TO TEACHING AT RESEARCH UNIVERSITIES

Larger long-term improvement to undergraduate STEM education will evolve from an environment of continuous improvement of teaching coupled with an altering of the practice of how contributions to teaching are recognized and rewarded at research institutions. Interpretation and enactment of written policies relating to the evaluation of teaching for purposes of merit and promotion are where true institutional values lie (Fairweather, 2002).

Fostering a university culture that values high-quality and continuous improvement of teaching as much as performing high-quality research requires establishing teaching as a public and collaborative university activity, as well as an integral aspect of the individual faculty member’s scholarship. To do this, it is critical to identify the criteria and relevant roles of the faculty member, program, department, college, and institution for evaluating an individual faculty member’s work that fits both the local context (program/department/college) and the larger institutional mission. Ultimately, the goal is to allow local variation in a manner that both preserves the academic freedom of faculty in the classroom and supports the university’s collective responsibilities for undergraduate education.

Deans and Department Chairs Play a Critical Role

As institutional leaders, deans and department chairs can reinforce an expectation that faculty members understand teaching not as an isolated activity, but as integrated into their roles as scholars, as members of the university, and as members of their own disciplinary-based community. This requires department chairs and deans to encourage faculty members to think critically about their teaching and develop a continuous improvement mind-set about their teaching within their disciplines and in the context of the educational responsibilities of their departments. Beginning this conversation during the hiring process (e.g., through clear language in the job announcement and application package materials) articulates the importance of teaching. Furthermore, a candidate’s attitudes about teaching and advising can be assessed by including questions about teaching and advising, in addition to research, in the on-campus interview. Some schools also schedule a teaching demonstration (such as a mini-class) as part of the interview process, going beyond simply discussing teaching with the candidate. This approach requires that universities, colleges, and departments seek to hire outstanding scholars who participate in the dissemination of the knowledge that they create and view teaching as an essential element of scholarship.

Emphasize the Importance of Teaching at the Onset of Hiring

For new hires, a department could provide teaching professional development funds as part of start-up packages, require a professional development plan for teaching, support participation in faculty learning communities, or intentionally support faculty mentoring by pairing expert teachers with those interested in improving their teaching and provide course-load credit for both faculty members.

Communicate Criteria and Expectations on How Contributions to Teaching Will Be Evaluated and Recognized

Faculty members should be provided with mechanisms to document and evaluate teaching innovations and improvements necessary to satisfy these criteria and expectations. Additionally, data from such documentation should feed into reward systems. Three practices are essential to this recommendation. 1) Empower departments to establish an agreed-upon set of metrics that go beyond student satisfaction surveys for each faculty member. A broader array of materials could include: development/revision of learning goals and content in course syllabus, incorporation of new pedagogical practices into courses, documented achievement of student learning outcomes or changes in classroom culture, involvement in teaching service or scholarship, or shifts of assessment from factual recall to providing evidence of how students use their knowledge. The primary purpose of these strategies is to encourage faculty members to be reflective about their teaching practices. 2) Make sure that metrics are efficient, that is, they are not so labor-intensive as to preclude their use by most faculty members. 3) Ensure that promotion and tenure committees at both the departmental and institutional levels are educated with respect to best practices about how to effectively review the materials submitted by faculty members.

Establish a Culture Consistent across Departments, Colleges, and the University That Recognizes the Scholarly Activity of Teaching

Fundamentally, the values of a university and a department can be discerned from the activities they promote and reward. The above recommendations are aimed at establishing a culture consistent across departments, colleges, and the university that recognizes the scholarly activity associated with the time and effort to maintain and improve education. Achievement of this goal will require a holistic approach to value, support, assess, and reward teaching at multiple institutional levels. Universities and colleges can signal a commitment to quality educational practices for all by providing resources to support faculty members improving large introductory STEM courses. A commitment by the department and university to use clearly articulated empirical evidence for rewarding teaching, both in the promotion and tenure process and for teaching awards, provides validation for the importance of effective undergraduate education. Fundraising around curricular programs can bring exposure and reward to faculty members invested in student learning. Efforts to address the perceived divide between tenure-stream “research” faculty and instructional faculty, who often play a significant role in the large introductory courses, could further support the university’s educational mission. Opportunities to discuss and present scholarly activities around teaching provide public recognition that can be emphasized by the visible support of key institutional leaders, such as deans, chairs, and other academic administrators. Furthermore, increasing awareness within the university about existing efforts and related scholarship to improve student learning and teaching effectiveness on campus has the potential to better articulate how the institution’s educational objectives relate to the research mission of the university.

THREE EXAMPLES OF INSTITUTIONAL INITIATIVES TO ASSESS AND REWARD TEACHING

Promotion Process at the University of California, Irvine: Moving to a Three-Bucket System

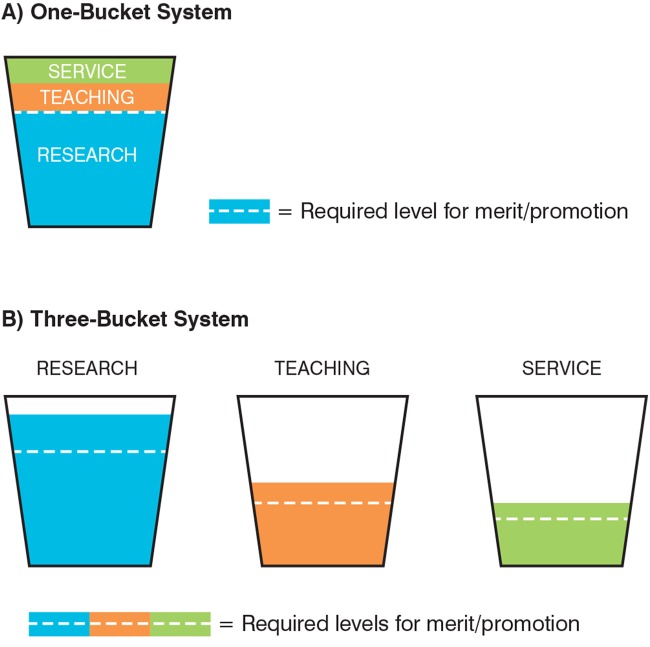

At most universities, accomplishment in three areas (research, teaching, and service) is evaluated to inform merit and promotion decisions. Overall accomplishment that has both quantity and impact components can be represented by a single bucket (Figure 1A). The level that must be achieved for promotion varies by university and discipline, but is generally agreed upon locally and is represented by the dashed line in Figure 1A. Getting over the line results in promotion. But this graphic illustrates the common perception, particularly at R1 universities, that the bucket can be filled to the line almost completely by accomplishments in research.

FIGURE 1.

Moving from a one- to three-bucket system. (A) In the one-bucket system, the arrow on left indicates the level of accomplishment, determined by quantity and impact components, required for promotion. Sufficient accomplishment in research is often enough to reach this level. (B) Using a three-bucket system requires accomplishment not only in research but in teaching and service as well, and the shading indicates that accomplishment expected might vary depending on department, school/unit, or even at different times in one’s career.

When all faculty are compared in this one-bucket system, those who do more teaching and service rarely benefit in terms of merit and promotion, because getting to the dashed line is what is needed. One solution is to move to a three-bucket system, in which a level of accomplishment that has both quantity and impact components is required in each of three buckets (Figure 1B). If the faculty member does not reach the required level in all three buckets, merit-based salary increases are not awarded or promotion/tenure is denied. In this system, one cannot simply fill the research bucket so full that empty teaching and service buckets are acceptable.
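
The contrast between the two decision rules described above can be sketched in a few lines. This is only an illustration under stated assumptions: the numeric scores, the bars, the additive one-bucket total, and the names `Record`, `one_bucket`, and `three_bucket` are all hypothetical and do not describe any university's actual procedure.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical accomplishment scores (or required bars) per area."""
    research: float
    teaching: float
    service: float


def one_bucket(record: Record, bar: float) -> bool:
    """One-bucket rule: combined accomplishment must reach a single bar."""
    return record.research + record.teaching + record.service >= bar


def three_bucket(record: Record, bars: Record) -> bool:
    """Three-bucket rule: every area must reach its own bar."""
    return (
        record.research >= bars.research
        and record.teaching >= bars.teaching
        and record.service >= bars.service
    )


# Hypothetical faculty member with strong research and little else.
candidate = Record(research=9.0, teaching=0.5, service=0.5)
required = Record(research=5.0, teaching=2.0, service=1.0)

print(one_bucket(candidate, bar=8.0))     # research alone clears the single bar
print(three_bucket(candidate, required))  # teaching and service fall short
```

With these made-up numbers the first rule is satisfied and the second is not, which is the situation the three-bucket system is meant to prevent.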

The University of California (UC), Irvine, has not yet made a complete transition from a one-bucket to a three-bucket system, but is making steady changes in this direction. For example, as a member of the UC system, UC Irvine has a merit and promotion system that governs advancement through the ranks with associated salary increases on a regular schedule (www.ucop.edu/academic-personnel-programs/_files/apm/apm-210.pdf). Advancements can be accelerated in time to reward the most outstanding faculty. On the UC Irvine campus, accelerations have typically required demonstration of research accomplishments at a significantly higher rate and of similar or greater impact than expected for a regular action. Since 2014, accelerations have required evidence of excellence above that expected for normal actions, not only in research but also in teaching and/or service.

What is put into the buckets also matters. While published UC policy indicates that at least two types of evidence should support evaluation of teaching (see the Supplemental Material or visit www.ucop.edu/academic-personnel-programs/_files/apm/apm-210.pdf, p. 5), in practice, student evaluations are often the only evidence used. For the 2016 review cycle, UC Irvine has required individuals to upload at least one additional type of evidence to evaluate teaching (e.g., reflective teaching self-statement, syllabus, peer evaluation, or measure of student achievement). This change is a first step toward conducting a more thorough evaluation of the contributions to teaching. It also broadens the discussion of teaching by everyone involved in the review process and thus has the potential to increase awareness of the innovative and effective teaching practices taking place on campus.

University of Kansas Department Evaluation of Faculty Teaching Rubric

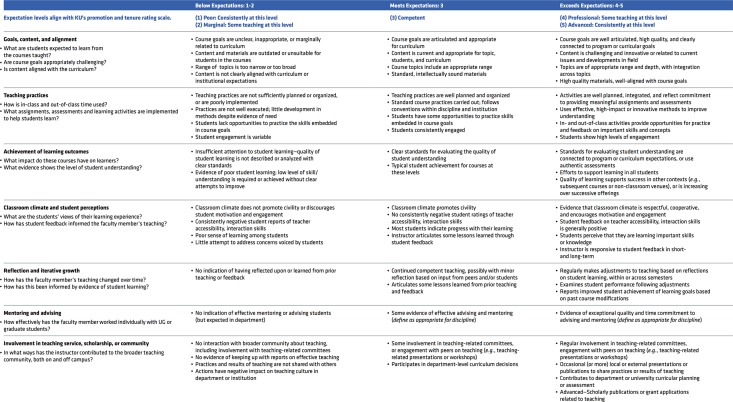

The Center for Teaching Excellence at the University of Kansas (KU) recently developed a rubric (Figure 2) for department-level evaluation of faculty teaching. The university requires that evaluation of faculty teaching for promotion and tenure and progress toward tenure includes information from the instructor, students, and peers. However, the quality of the information collected is highly variable, and reviewers often struggle to integrate and make sense of information from the three sources. In practice, many evaluations prioritize a narrow dimension of teaching activity (the behavior of the instructor in the classroom) and a limited source of evidence (student evaluations). Providing a rubric to structure the evaluation of faculty members’ teaching increases the visibility of all dimensions of teaching, clarifies faculty teaching expectations, enables quick identification of strengths and areas for improvement, and brings consistency across evaluations and over time.

FIGURE 2.

Rubric for department evaluation of faculty teaching.

The goal of the rubric is to help department committees integrate information from the faculty members being evaluated, their peers, and their students to create a more holistic view of a faculty member’s teaching contributions. Drawing on the peer review of teaching literature (e.g., Hutchings, 1995, 1996; Glassick et al., 1997; Bernstein and Huber, 2006; Bernstein, 2008; Lyde et al., 2016), the rubric identifies seven dimensions of teaching practice that address contributions to both individual courses and the department’s curriculum. For each category, the rubric provides both guiding questions and defined expectations. The rubric can also be used to guide a constructive peer-review process, reflection, and iterative improvement.

To ensure applicability across disciplines, the rubric does not weight or emphasize any particular element or require a particular type of evidence to be used. Departments are encouraged to modify the rubric and use it to build consensus about the dimensions, the questions, and the criteria. The implementation strategy included discussions with department chairs and the KU Center for Teaching Excellence department ambassadors in advance of its release in order to increase the probability of broad buy-in. The rubric was piloted during the 2016–2017 academic year as a guide for peer review of teaching, promotion and tenure, and third-year reviews.

University of Colorado Teaching Quality Framework

The AAU-sponsored undergraduate STEM initiative has helped to support the development of a new Teaching Quality Framework (TQF) at the University of Colorado, Boulder (CU Boulder). The framework draws upon organizational change literature and cites CU Boulder’s existing guidelines (“Dossiers for comprehensive review, tenure, or promotion must include multiple measures of teaching”) to create a framework for assessing and promoting teaching quality (Finkelstein et al., 2015). The goal of the TQF is to create a common campus-wide framework for using scholarly measures of teaching effectiveness that is discipline specific and provides faculty members with feedback in order to support improved teaching. Thus, CU Boulder seeks to address the calls to professionalize teaching and create a climate of continuous improvement. The framework defines teaching as a scholarly activity, like research, and assesses the core components of such scholarship (Bernstein, 2008). Current efforts draw from decades of research in teaching evaluation to create a common framework (Glassick et al., 1997) by defining categories of evaluation as follows: 1) clear goals, 2) adequate preparation, 3) appropriate methods, 4) significant results, 5) effective presentation, and 6) reflective critique.

These framework categories are held constant across all departments; however, specific interpretation of the components of the framework and their relative weights are defined at a department level (Figure 3). Thus, departments specify in a clear way what is meant by “multiple measures” and “significant results” locally, but use common categories across the campus. This approach provides the university with a common framework while preserving disciplinary identity and specificity.

FIGURE 3.

Three “voices” in a scholarly framework for assessing teaching.
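
One way to picture the arrangement described above, with common categories held fixed across campus and weights set by each department, is the sketch below. The six category names come from the text; everything else (the 1 to 4 rating scale, the weighted mean, the example weights, and the function name `weighted_rating`) is an assumption made for illustration and is not the TQF's actual scoring method.

```python
# The six common categories named in the text (Glassick et al., 1997).
CATEGORIES = (
    "clear goals",
    "adequate preparation",
    "appropriate methods",
    "significant results",
    "effective presentation",
    "reflective critique",
)


def weighted_rating(ratings, weights):
    """Combine per-category ratings using one department's weights.

    Both mappings must cover exactly the common campus categories.
    The weighted mean is an illustrative assumption.
    """
    if set(ratings) != set(CATEGORIES) or set(weights) != set(CATEGORIES):
        raise ValueError("ratings and weights must use the common categories")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(ratings[c] * weights[c] for c in CATEGORIES) / total_weight


# Hypothetical weights for two departments and ratings on a 1-4 scale.
equal_weights = {c: 1 for c in CATEGORIES}
results_heavy = {**equal_weights, "significant results": 3}

ratings = {c: 3 for c in CATEGORIES}
ratings["significant results"] = 1

print(weighted_rating(ratings, equal_weights))
print(weighted_rating(ratings, results_heavy))
```

The same ratings yield different summaries under the two hypothetical weightings, while the category list itself never changes between departments.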

The implementation strategy has created two layers of work: one at the departmental level and one at the campus-wide level. Participation in the TQF is purposefully voluntary, asking departments to help develop the framework rather than respond to a top-down mandate. The departmental level seeks to increase engagement and exploration of new ways to assess teaching by empowering individual departments to identify how they might enact more scholarly measures of teaching. Nine CU Boulder departments participated in the TQF in the 2016–2017 academic year with a postdoctoral-level facilitator. These cross-departmental discussions have led to departmental-based work in Fall 2017. An initial four departments have committed two to five lead faculty to identify the measures of scholarly teaching that address the framework in their disciplines, while the facilitator manages the biweekly meetings and shares information across departments. Additional departments are expected to phase in participation in Spring and Fall 2018.

CU Boulder has plans for two levels of campus discussions: the first among the pilot departments; and a subsequent one that will include broader representation from other departments, deans, and other institutional stakeholders. Once the departmental metrics and common campus framework and review system are coordinated, these tools will be deployed in the annual merit review and/or promotion and tenure review of departments across campus.

CONCLUSION

There is no question that strong examples of excellent teaching practice already exist throughout research universities. However, increasing visibility of and institutionalizing support for and reward of effective teaching is a challenge faced by many research universities. In most cases, relevant policies are already in place that emphasize the importance of teaching, but work remains to change the culture such that common practice aligns with these policies, especially at the departmental level.

Here, we have outlined some key elements associated with reward structures within research universities that can be leveraged to align practice and policy. To illustrate potential variations within the general framework, we highlighted three different approaches that are being piloted at specific research universities. At the department level, there needs to be an explicit conversation about the scholarly nature of teaching and a faculty member’s responsibilities regarding teaching as a scholar in a particular discipline. There also needs to be an explicit discussion of the collective nature of undergraduate teaching and its role within the broader responsibility of the research university. Finally, there needs to be recognition and adoption of empirical models for evaluating teaching that have been tested and validated. Within this broader context, the specific implementation at any given research university must be flexible and adaptable to local culture, structures, and goals.

Acknowledgments

This research collaborative between the AAU and the Cottrell Scholars is supported by funds from the RCSA.

Footnotes

<sup>1</sup>Founded in 1900, the AAU comprises 62 distinguished institutions in the United States and Canada that continually advance society through education, research, and discovery. Our U.S. member universities earn the majority of competitively awarded federal funding for academic research, are improving human life and well-being through research, and are educating tomorrow’s visionary leaders and global citizens. AAU members collectively help shape policy for higher education, science, and innovation; promote best practices in undergraduate and graduate education; and strengthen the contributions of research universities to society.

<sup>2</sup>The Cottrell Scholar program develops outstanding teacher-scholars in chemistry, physics, and astronomy who are recognized by their scientific communities for the quality and innovation of their research programs and their academic leadership skills.

A total of 2971 instructional staff received the AAU faculty survey across the eight project-site institutions. Of these, 1093 submitted at least a partially completed survey, resulting in an overall response rate of 36.8%; individual institutional response rates ranged from 21.6% to 69.4%. Nearly half of respondents (542, or 49.6%) were either associate professors or professors with tenure. Twelve percent were tenure-track professors who did not yet have tenure at the time they were surveyed. More than a quarter of respondents were graduate students (26%), and the final 12.5% were instructors/lecturers, nontenured faculty, other instructional staff, or gave no response. Responses from private institutions comprised 36% of the total, with 64% from public institutions. The Supplemental Materials include the survey instrument and the complete project site baseline data report.

REFERENCES

- Ambrose S. A., Bridges M. W., DiPietro M., Lovett M. C., Norman M. K. How learning works: Seven research-based principles for smart teaching. San Francisco: Wiley; 2010. [Google Scholar]

- Anderson W. A., Banerjee U., Drennan C. L., Elgin S. C. R., Epstein I. R., Handelsman J., Warner I. M. Changing the culture of science education at research intensive universities. Science. 2011;331(6014):152–152. doi: 10.1126/science.1198280. https://doi.org/10.1126/science.1198280. [DOI] [PubMed] [Google Scholar]

- Becker E., Easlon E., Potter S., Guzman-Alvarez A., Spear J., Facciotti M., Pagliarulo C. The Effects of Practice-Based Training on Graduate Teaching Assistants’ Classroom Practices. 2015. bioRxiv. https://doi.org/10.1101/115295. [DOI] [PMC free article] [PubMed]

- Bernstein D. Peer review and evaluation of the intellectual work of teaching. Change: The Magazine of Higher Learning. 2008;40(2):48–51. [Google Scholar]

- Bernstein D. J., Huber M. T. What is good teaching? Raising the bar through Scholarship Assessed. 2006. Invited presentation to the International Society for the Scholarship of Teaching and Learning (Washington, DC)

- Boring A., Ottoboni K., Stark P. B. Student evaluations of teaching (mostly) do not measure teaching effectiveness. ScienceOpen Research. 2016 https://doi.org/10.14293/S2199-1006.1.SOR-EDU.AETBZC.v1. [Google Scholar]

- Bradforth S. E., Miller E. R., Dichtel W. R., Leibovich A. K., Feig A. L., Martin J. D., Smith T. L. University learning: Improve undergraduate science education. Nature. 2015;532(7560):282–284. doi: 10.1038/523282a. https://doi.org/10.1038/523282a. [DOI] [PubMed] [Google Scholar]

- Braga M., Paccagnella M., Pellizzari M. Evaluating students’ evaluations of professors. Economics of Education Review. 2014;41:71–88. https://doi.org/10.1016/j.econedurev.2014.04.002. [Google Scholar]

- Brown P. C., Roediger H. L., McDaniel M. A. Make it stick: The science of successful learning. Cambridge, MA: Harvard University Press; 2014. [Google Scholar]

- Centra J. A., Gaubatz N. B. Is there gender bias in student evaluations of teaching. Journal of Higher Education. 2000;71(1):17–33. [Google Scholar]

- Clayson D. E. Student evaluations of teaching: Are they related to what students learn? A meta-analysis and review of the literature. Journal of Marketing Education. 2009;31(1):16–30. [Google Scholar]

- Dancy M., Henderson C. Pedagogical practices and instructional change of physics faculty. American Journal of Physics. 2010;78(10):1056–1063. [Google Scholar]

- Dancy M., Henderson C., Smith J. Proceedings of the 2013 Physics Education Research Conference. 2014. Understanding educational transformation: Findings from a survey of past participants of the Physics and Astronomy New Faculty Workshop; pp. 113–116. [Google Scholar]

- Doyle T. Helping students learn in a learner-centered environment: A guide to facilitating learning in higher education. Sterling, VA: Stylus; 2008. [Google Scholar]

- Eddy S., Hogan K. Getting under the hood: How and for whom does increasing course structure work. CBE—Life Sciences Education. 2014;13(3):453–468. doi: 10.1187/cbe.14-03-0050. doi: 10.1187/cbe.14-03-0050. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fairweather J. The ultimate faculty evaluation: Promotion and tenure decisions. New Directions for Institutional Research. 2002;114:97–108. [Google Scholar]

- Fairweather J. Work allocation and rewards in shaping academic work. In: Enders J., deWeert E., editors. The changing face of academic life: Analytical and comparative perspectives. New York: Palgrave Macmillan; 2009. pp. 171–192. [Google Scholar]

- Fairweather J., Beach A. Variation in faculty work within research universities: Implications for state and institutional policy. Review of Higher Education. 2002;26(1):97–115. [Google Scholar]

- Finkelstein N., Reinholz D. L., Corbo J. C., Bernstein D. J. Towards a teaching framework for assessing and promoting teaching quality at CU-Boulder. Boulder, CO: Center for STEM Learning.; 2015. Report from the STEM Institutional Transformation Action Research [SITAR] Project). Retrieved January 1, 2016, from www.colorado.edu/csl/aau/resources/TQF_WhitePaper_2016-1-17.pdf. [Google Scholar]

- Freeman S., Eddy S., McDonough M., Smith M., Okoroafor N., Jordt H., Wenderoth M. Active learning increases student performance in science, engineering, and mathematics. Proceedings of the National Academy of Sciences USA. 2014;111(23):8410–8415. doi: 10.1073/pnas.1319030111. doi: 10.1073/pnas.1319030111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Glassick C. E., Huber M. T., Maeroff G. I. Scholarship assessed: Evaluation of the professoriate. San Francisco: Jossey-Bass; 1997. [Google Scholar]

- Gormally C., Evans M., Brickman P. Feedback about teaching in higher ed: Neglected opportunities to promote change. CBE—Life Sciences Education. 2014;13(2):187–199. doi: 10.1187/cbe.13-12-0235. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haak D., Hille Ris Lambers J., Pitre E., Freeman S. Increased structure and active learning reduce the achievement gap in introductory biology. Science. 2011;332(6034):1213–1216. doi: 10.1126/science.1204820. doi: 10.1126/science.1204820. [DOI] [PubMed] [Google Scholar]

- Handelsman J., Ebert-May D., Beichner R., Bruns P., Chang A., DeHaan R., Wood W. Policy forum: Scientific teaching. Science. 2004;304(5670):521–522. doi: 10.1126/science.1096022. [DOI] [PubMed] [Google Scholar]

- Henderson C., Dancy M. Barriers to the use of research-based instructional strategies: The influence of both individual and situational characteristics. Physical Review Special Topics—Physics Education Resarch. 2007;3(2):020102. [Google Scholar]

- Huber M. T. Faculty evaluation and the development of academic careers. New Directions for Institutional Research. 2002;114:73–84. doi: 10.1002/ir.48. [Google Scholar]

- Hutchings P., editor. From idea to prototype: The peer review of teaching. Sterling, VA: Stylus; 1995. [Google Scholar]

- Hutchings P., editor. Making teaching community property: A menu for peer collaboration and peer review. Sterling, VA: Stylus; 1996. [Google Scholar]

- Kober N. Reaching students: What research says about effective instruction in undergraduate science and engineering. Washington, DC: National Academies Press; 2015. [Google Scholar]

- Lorenzo M., Crouch C. H., Mazur E. Reducing the gender gap in the physics classroom. American Journal of Physics. 2006;74(2):118–122. doi: 10.1119/1.2162549. [Google Scholar]

- Lowen R. S. Creating the Cold War university: The transformation of Stanford. Berkeley: University of California Press; 1997. [Google Scholar]

- Lyde A. R., Grieshaber D. C., Byrns G. Faculty teaching performance: Perceptions of a multi-source method for evaluation (MME) Journal of the Scholarship of Teaching and Learning. 2016;16(3):82–94. [Google Scholar]

- MacNell L., Driscoll A., Hunt A. N. What’s in a name: Exposing gender bias in student ratings of teaching. Innovative Higher Education. 2015;40:291. https://doi.org/10.1007/s10755-014-9313-4. [Google Scholar]

- Malcolm S., Feder M., editors. Barriers and opportunities for 2-year and 4-year STEM degrees: Systemic change to support students’ diverse pathways. Washington, DC: National Academies Press; 2016. doi: 10.17226/21739. [PubMed] [Google Scholar]

- National Research Council. How people learn: Brain, mind, experience, and school. (Expanded ed.) Washington, DC: National Academies Press; 2000. doi: 10.17226/9853. [Google Scholar]

- Singer S. In Searching for better approaches: Effective evaluation of teaching and learning in STEM. Tucson, AZ: RCSA; 2015. Keynote: Implementing evidence-based undergraduate STEM teaching practice; pp. 1–5. . Retrieved July 15, 2015, from https://rescorp.org/gdresources/publications/effectivebook.pdf. [Google Scholar]

- Singer S. R., Nielsen N. R., Schweingruber H. A., editors. Discipline-based education research: Understanding and improving learning in undergraduate science and engineering. Washington, DC: National Academies Press; 2012. [Google Scholar]

- Trenshaw K. F., Targan D. M., Valles J. M. In Proceedings from ASEE NE ‘16: The American Society for Engineering Education Northeast Section 2016 Conference. 2016. Closing the achievement gap in STEM: A two-year reform effort at Brown University. Retrieved August 24, 2017, from https://egr.uri.edu/wp-uploads/asee2016/73-1064-1-DR.pdf. [Google Scholar]

- Wieman C. A better way to evaluate undergraduate teaching. Change: The Magazine of Higher Learning. 2015;47(1):6–15. doi: 10.1080/00091383.2015.996077. [Google Scholar]
