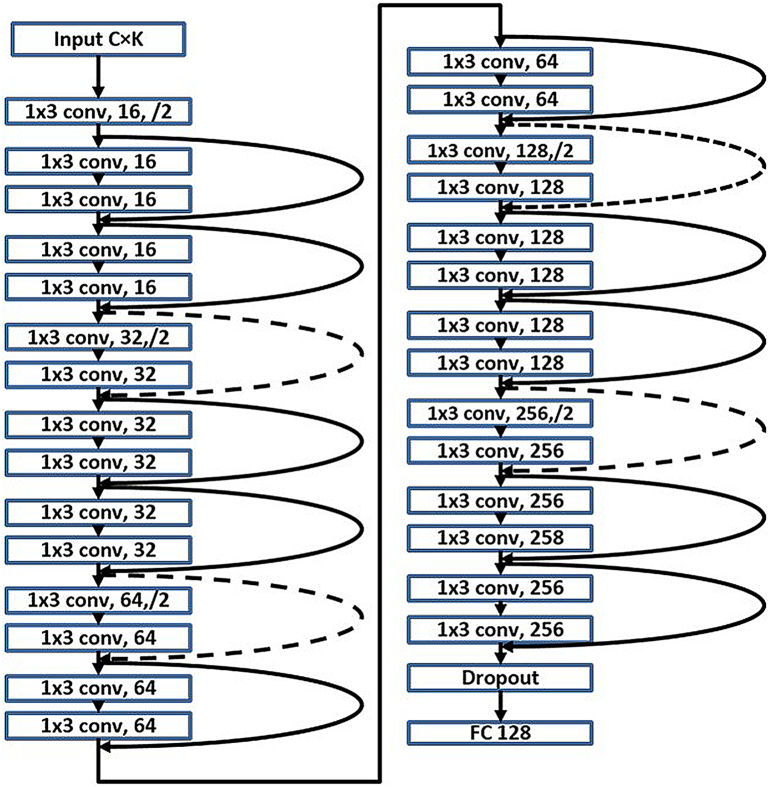

Fig. 3. Network architecture.

This network contains one input layer, twenty-nine convolutional (conv) layers, one dropout layer and one fully connected (FC) output layer. Each conv layer computes the outputs of neurons connected to local regions of its input using K filters of size 1 × 3, where K ranges from 16 to 256 and is given by the second parameter of the corresponding layer. The number of filters K is doubled whenever the feature map size is halved, which is indicated by /2 in the third parameter of the corresponding layer. Following the idea of residual learning, identity shortcut connections (solid lines, used when the input and output have the same dimensions) and projection shortcuts (dashed lines) let information from the input or earlier layers flow more easily to deeper layers. Please see the text for a more detailed description.
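To make the two shortcut types concrete, here is a minimal pure-Python sketch of one residual unit built from 1 × 3 conv layers. It is an illustration under stated assumptions, not the authors' implementation. The following details are all assumed: the two-conv layout per unit, the ReLU placement, the absence of batch normalization, stride-2 downsampling for the /2 case, and a 1 × 1 projection convolution (common ResNet practice). The names `conv1d`, `init_kernel` and `ResidualBlock` are hypothetical.

```python
import random
from typing import List, Optional

Signal = List[List[float]]          # feature map as [channels][length]
Kernel = List[List[List[float]]]    # weights as [out_channels][in_channels][width]


def conv1d(x: Signal, w: Kernel, stride: int = 1, pad: int = 0) -> Signal:
    """Plain 1-D cross-correlation with zero padding and no bias."""
    width = len(w[0][0])
    padded = [[0.0] * pad + row + [0.0] * pad for row in x]
    out_len = (len(x[0]) + 2 * pad - width) // stride + 1
    return [
        [
            sum(w_o[c][j] * padded[c][t * stride + j]
                for c in range(len(x)) for j in range(width))
            for t in range(out_len)
        ]
        for w_o in w
    ]


def relu(x: Signal) -> Signal:
    return [[max(0.0, v) for v in row] for row in x]


def init_kernel(out_ch: int, in_ch: int, width: int, rng: random.Random) -> Kernel:
    # Hypothetical small Gaussian init, for illustration only.
    return [[[rng.gauss(0.0, 0.1) for _ in range(width)]
             for _ in range(in_ch)] for _ in range(out_ch)]


class ResidualBlock:
    """Two 1x3 conv layers plus a shortcut (assumed layout, no batch norm).

    If downsample is True, the first conv uses stride 2 (feature map halved,
    the '/2' case) and the filter count doubles, so the shortcut is a
    projection (assumed 1x1 conv, stride 2). Otherwise the shapes match and
    the shortcut is the identity.
    """

    def __init__(self, in_ch: int, downsample: bool, rng: random.Random):
        self.stride = 2 if downsample else 1
        out_ch = in_ch * 2 if downsample else in_ch
        self.w1 = init_kernel(out_ch, in_ch, 3, rng)
        self.w2 = init_kernel(out_ch, out_ch, 3, rng)
        # Projection shortcut only when dimensions change (dashed lines in Fig. 3).
        self.proj: Optional[Kernel] = (
            init_kernel(out_ch, in_ch, 1, rng) if downsample else None)

    def __call__(self, x: Signal) -> Signal:
        h = relu(conv1d(x, self.w1, stride=self.stride, pad=1))
        h = conv1d(h, self.w2, stride=1, pad=1)
        shortcut = x if self.proj is None else conv1d(x, self.proj, stride=self.stride)
        # Add the shortcut element-wise, then apply the final ReLU.
        return relu([[a + b for a, b in zip(hr, sr)] for hr, sr in zip(h, shortcut)])


if __name__ == "__main__":
    rng = random.Random(0)
    x = [[rng.uniform(-1, 1) for _ in range(32)] for _ in range(16)]
    same = ResidualBlock(16, downsample=False, rng=rng)(x)
    down = ResidualBlock(16, downsample=True, rng=rng)(x)
    print(len(same), len(same[0]))  # 16 32
    print(len(down), len(down[0]))  # 32 16
```

With 16 input channels of length 32, the identity unit keeps the shape (16, 32). The downsampling unit halves the length and doubles the filters to (32, 16), so only that unit needs a projection shortcut to make the element-wise addition well defined.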