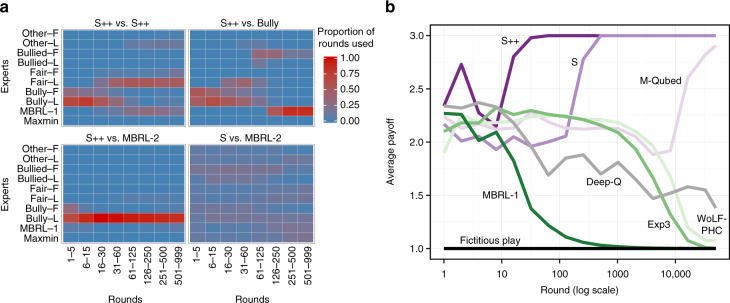

Fig. 1.

Illustrations of S++’s learning dynamics. a An illustration of S++’s learning dynamics in Chicken, averaged over 50 trials. For ease of exposition, S++’s experts are categorized into groups (see Supplementary Note 3 for details). Top-left: When (unknowingly) paired with another agent that uses S++, S++ initially tries to bully its partner, but then switches to fair, cooperative experts when its attempts to exploit its partner are unsuccessful. Top-right: When paired with Bully, S++ learns the best response, which is to accept being bullied; it does so by playing experts MBRL-1, Bully-L, or Bully-F. Bottom-left: When paired with MBRL-2, S++ quickly learns to play experts that bully MBRL-2, meaning that it receives higher payoffs than MBRL-2. Bottom-right: By contrast, algorithm S does not learn to consistently bully MBRL-2, showing that S++’s pruning rule (see Eq. 1 in Methods) enables it to teach MBRL-2 to accept being bullied, thus producing high payoffs for S++. b The average per-round payoffs (averaged over 50 trials) of various machine-learning algorithms over time in self-play in a traditional (0-1-3-5)-prisoner’s dilemma in which mutual cooperation produces a payoff of 3 and mutual defection produces a payoff of 1. Of the machine-learning algorithms we evaluated, only S++ quickly forms successful relationships with other algorithms across the set of 2 × 2 games.
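
The (0-1-3-5)-prisoner’s dilemma named in panel b can be written out as a payoff table. The following is a minimal sketch, assuming the conventional reading of the four numbers (a cooperator who is defected against receives 0 and the defector receives 5); only the payoffs of 3 and 1 are stated in the caption. The names `PAYOFFS` and `average_payoffs` are illustrative and do not come from the paper.

```python
# Payoffs (row player, column player) keyed by (row action, column action).
# "C" = cooperate, "D" = defect. The 3 and 1 are given in the caption; the 0 and 5
# are assumed from the conventional (0-1-3-5) labeling of the prisoner's dilemma.
PAYOFFS = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def average_payoffs(history):
    """Return each player's per-round average payoff over a list of joint actions."""
    if not history:
        raise ValueError("history must contain at least one round")
    totals = [0, 0]
    for joint_action in history:
        row_payoff, col_payoff = PAYOFFS[joint_action]
        totals[0] += row_payoff
        totals[1] += col_payoff
    rounds = len(history)
    return totals[0] / rounds, totals[1] / rounds


# Two rounds of mutual defection followed by two rounds of mutual cooperation.
print(average_payoffs([("D", "D"), ("D", "D"), ("C", "C"), ("C", "C")]))  # (2.0, 2.0)
```

Under this reading, a pair that settles into mutual cooperation approaches an average per-round payoff of 3, while a pair stuck in mutual defection stays near 1, which is the range the curves in panel b move within.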