Abstract

Purpose

The growing number of technical systems in the operating room has increased attention on developing touchless interaction methods for sterile conditions. However, touchless interaction paradigms lack the tactile feedback found in common input devices such as mice and keyboards. We propose a novel touchless eye-tracking interaction system with auditory display as a feedback method for completing typical operating room tasks. Auditory display provides feedback concerning the selected input into the eye-tracking system as well as a confirmation of the system response.

Methods

An eye-tracking system with a novel auditory display using both earcons and parameter-mapping sonification was developed to allow touchless interaction for 6 typical scrub nurse tasks. An evaluation with novice participants compared auditory display with visual display with respect to reaction time and a series of subjective measures.

Results

When using auditory display to substitute for the lost tactile feedback during eye-tracking interaction, participants exhibit reduced reaction time compared to using visual-only display. In addition, the auditory feedback led to lower subjective workload and higher usefulness and system acceptance ratings.

Conclusion

Due to the absence of tactile feedback for eye-tracking and other touchless interaction methods, auditory display is shown to be a useful and necessary addition to new interaction concepts for the sterile operating room, reducing reaction times while improving subjective measures, including usefulness, user satisfaction, and cognitive workload.

Keywords: auditory display, sterile interaction, eye tracking, scrub nurse, touchless interaction, operating room, digital operating room, human-computer interaction

1 Introduction

With the increasing number of computer systems available in today’s operating rooms, both clinicians and assistants are exposed to a plethora of interaction possibilities in both preinterventional and intraoperative phases. Although this increase endows clinicians with expanded and enhanced interaction possibilities, the sterile nature of the operating room often limits or prevents the use of traditional computer input devices such as mouse and keyboard, as these can be quickly contaminated during an operation [1]. Touchscreens, joysticks, and buttons are typically covered in plastic sheaths, which become soiled during an operation and must often be changed, leading to costly delays [2]. In many complex cases, clinicians must exit the sterile environment to operate a workstation, which requires repeated scrubbing into the operating room.

Thus, a current trend in operating room interaction research is to investigate new paradigms for touchless interaction. Current solutions for sterile interaction in the operating room include foot pedals [3,4] and gesture-controlled interaction systems using standard motion-detection devices such as the Leap Motion controller [5,6] or the Microsoft Kinect [7]. For a review of the literature on touchless interaction in the operating room, see Mewes et al. [8]. Gesture-based interfaces promise improved sterile interaction with computer systems in the operating room, although their use has not yet been established in clinical routines. Although these methods free the clinician or assistant from having to touch input devices, sterile interaction systems based on gestures have the drawback that interaction with the device relies on hand or body movement. As a result, the user must interrupt the clinical routine to interact with such a system. To this end, the use of eye-tracking glasses as a sterile means of interaction has been investigated to eliminate the need for complex hand or body gestures. This has already been successfully demonstrated, for instance, in laparoscopic surgery for training [9] or to position the camera [10]. Furthermore, use cases for sterile eye tracking have been proposed for scrub nurses [11].

Although eye-tracking interaction systems show great potential for flexible employment in sterile environments, they, along with other touchless interaction concepts, provide only primary visual feedback, in that the user can only see the result of an initiated action on a screen. Unlike traditional input devices such as keyboards, mice, and joysticks, touchless interaction concepts thus fail to provide the user with a secondary, tactile means of feedback confirming that an action has been performed. This may impede the adoption of touchless interaction concepts in the operating room, as the availability of real-time feedback for interaction has been shown to reduce mental load and improve efficiency and user satisfaction [12].

To overcome the lack of a secondary feedback in touchless interaction concepts, the use of auditory display has been proposed to provide the user with this missing secondary feedback. Compared to visual-only feedback, the addition of auditory display reduces selection time and improves selection accuracy in hand gesture-based circular menus [13] using the Leap Motion Controller as input device.

This work investigates both the benefits of eye-tracking as an input concept for sterile operating room interaction and the effect of including auditory display as a secondary feedback mechanism. We propose a touchless, gesture-free, sterile interaction method using eye-tracking to help surgical assistants complete a series of 3 typical OR use cases, each with 2 tasks.

2 Methods

Essential to the interaction with the eye-tracking system are so-called gaze gestures, which describe how the user views objects in the scene. In the developed eye-tracking system, QR (quick response) codes were printed and placed within the demonstration operating room to permit study participants to view locations and initiate actions with the system. Two kinds of gaze gestures were created to permit interaction: simple gaze gestures and repeating gaze gestures.

Simple gaze gestures describe a single trigger when a user views the desired QR code for a defined amount of time. This duration must be long enough to allow the eye-tracking system to recognize the gaze and prevent accidental triggering of actions when the user simply changes view around the demonstrator operating room, but short enough to provide a sufficiently rapid feedback response time so that the user is not frustrated by excessive latency. In the case of the developed sterile interaction system, preliminary design iterations with potential clinical users resulted in a system recognition time of 1 second to strike an optimal balance between robust recognition, minimal accidental gaze gesture occurrence, and short enough interaction time.
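The dwell-time rule described above can be illustrated with a minimal sketch. It assumes that the eye tracker delivers timestamped samples naming the QR code currently under the point of regard (or `None` when no code is viewed); the class name `DwellDetector` and its interface are hypothetical and not taken from the developed system. Only the 1-second recognition time comes from the text.

```python
from dataclasses import dataclass
from typing import Optional

DWELL_SECONDS = 1.0  # recognition time reported in the text


@dataclass
class DwellDetector:
    """Hypothetical helper: fires once when the gaze rests on one target long enough."""

    dwell_seconds: float = DWELL_SECONDS
    _target: Optional[str] = None
    _since: float = 0.0
    _fired: bool = False

    def update(self, target: Optional[str], timestamp: float) -> Optional[str]:
        """Feed one gaze sample; return the target id when a simple gaze gesture triggers."""
        if target != self._target:
            # Gaze moved to another code (or away from all codes): restart the timer.
            self._target, self._since, self._fired = target, timestamp, False
            return None
        if target is None or self._fired:
            # Nothing is being viewed, or this fixation has already triggered.
            return None
        if timestamp - self._since >= self.dwell_seconds:
            self._fired = True  # trigger only once per continuous fixation
            return target
        return None
```

In this sketch, a brief glance across a code while looking around the room never reaches the threshold, whereas a held gaze triggers exactly once until the user looks away.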

Repeating gaze gestures are those for which a user initiates a continuous action with the eye-tracking system that extends over a variable period of time. Repeating gaze gestures are executed by viewing a QR code and holding the gaze until interaction is completed. This method is used in this evaluation, for instance, to dim operating room lights. During light dimming, the repeated gaze gesture continuously controls the brightness of the light bulb in stages between complete darkness and maximum brightness.
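As a rough illustration of a repeating gaze gesture, the following sketch changes the brightness by one stage per update for as long as the gaze is held. The number of stages (`max_level=10`), the one-stage-per-update rule, and both function names are assumptions made for this example; the text states only that brightness is controlled in stages between complete darkness and maximum brightness.

```python
def step_brightness(level: int, direction: int, max_level: int = 10) -> int:
    """Move one stage up (+1) or down (-1), clamped to the range [0, max_level]."""
    return max(0, min(max_level, level + direction))


def hold_gaze(level: int, direction: int, held_ticks: int, max_level: int = 10) -> int:
    """Apply one stage change per update tick for as long as the gaze is held."""
    for _ in range(held_ticks):
        level = step_brightness(level, direction, max_level)
    return level
```

Clamping means that continuing to hold the gaze at either end of the range has no further effect, which is the situation in which an explicit end-of-range notification becomes useful.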

2.1 Eye-tracking system design

The developed eye-tracking system employs a set of SMI v2.0 eye-tracking glasses, which consist of an outward-facing camera with a resolution of 1280 x 960 pixels combined with inward-facing infrared cameras which detect the point of regard (PoR) of the user in relation to the forward-facing captured scene. The glasses weigh 68 grams and are connected by USB to a host computer.

The PoR as well as the images acquired by the forward-facing visual camera are retrieved by proprietary software which is delivered with the eye-tracking glasses. This custom software extracts the QR codes from the visual image, determines if the PoR is located on a QR code and sends a network message to client software when a gaze gesture is detected. The client software handles the interaction with the devices in the OR, including room lights, telephone, and surgical instrument management system.

2.2 Auditory display as secondary feedback mechanism

The auditory display system for eye-tracking interaction provides the user with a secondary feedback channel, which is otherwise missing due to the touchless nature of the interaction. This contrasts with interaction with physical devices such as mice, keyboards, joysticks, and buttons, all of which are commonly used in the operating room and provide secondary, tactile feedback when the user actuates the device. This tactile feedback lets the user know whether an action was successfully performed. Touchless interaction concepts, however, most commonly rely on hand gestures or eye gaze, neither of which provides the secondary feedback mechanism necessary to assure the user that a gesture was recognized. When a touchless interface is augmented with auditory display as secondary feedback, efficiency and satisfaction have been shown to increase [14]. In addition to informing the user that a gesture or gaze was recognized, auditory display can also inform the user as to which specific gesture or gaze was recognized, so that undesired input can be corrected, thereby easing training with the system.

To support secondary feedback for the use cases, an auditory display environment was created to support both the simple gaze gestures in tasks 1–4 (beginning and ending a video call, marking an instrument as defective, and generating an instrument report) and the repeating gaze gestures in tasks 5 and 6, dimming the operating room light to full brightness or complete darkness. For all auditory display, the PureData [15] real-time audio programming environment was used.

2.3 Use Cases for Sterile Interaction

Based on Glaser et al. [11], who proposed suitable use cases for potential interaction with eye-tracking in a sterile environment, we chose 3 typical use cases found in the OR that are often performed by surgical assistants. These use cases were derived from the situation of a surgical assistant or scrub nurse standing next to the operating table and in front of the instrument table. For each of the use cases, the participant could complete 2 tasks by initiating an action by gazing at a QR code located in the test laboratory.

Use Case 1: Initiating and ending a video call: This use case required the scrub nurse to (task 1) initiate a video or telephone call for the clinician and (task 2) end the call thereafter.

Use Case 2: Marking an instrument as defective and generating an instrument report: After being handed an instrument, the scrub nurse must mark (task 3) the instrument as defective so that it can be replaced or repaired during sterilization after the operation. The scrub nurse prepares an electronic report of the defective instrument received. Here (task 4), the scrub nurse sends the report to the clinical database.

Use Case 3: Controlling operating room light brightness: In this use case, the surgical assistant controls the brightness of an operating room lamp by (task 5) raising it to full brightness and (task 6) dimming it to complete darkness.

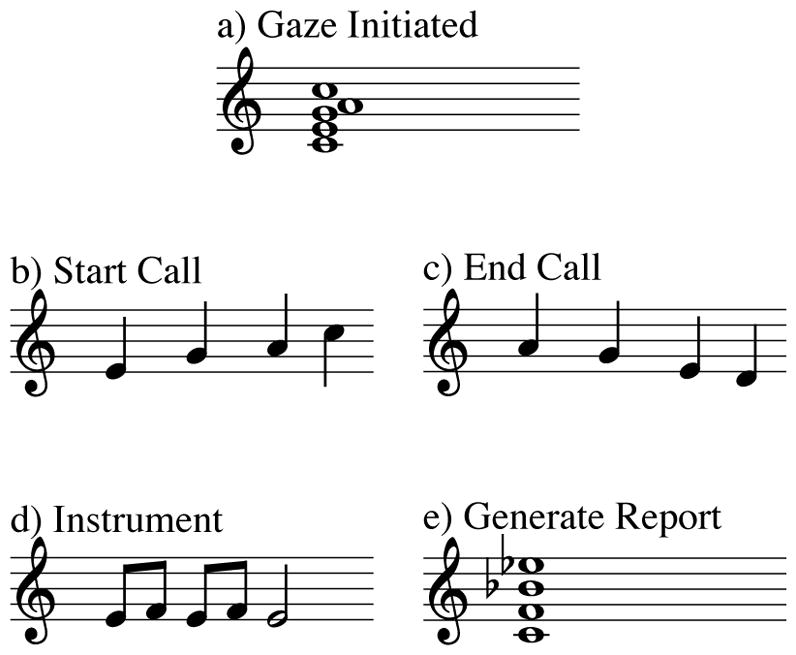

2.4 Earcons for Simple Gaze Gestures

For simple gaze gesture tasks 1–4, we implemented a set of so-called earcons for informing the user when a gesture was recognized by the eye-tracking system, and, specifically, which gesture was recognized and executed. Earcons are short, abstract, synthetic musical audio segments used to deliver messages about events in a system [16]. These short segments, usually single pitches or rhythmic sequences of pitches, may be grouped into larger units called families of related motives to identify related messages. Audio parameters such as timbre, register, pitch, rhythm, duration, and tempo are useful for differentiating earcons and grouping them into families. During initial design iterations with potential users, various earcon variations were generated and evaluated using informal think-aloud protocols, resulting in the single set of 5 earcons described here and employed in the rest of the evaluation. The fixed set of earcons was used as a basis to determine the overall feasibility of the approach. The earcons employed melodies and chords found in Figure 3 using a software digital synthesizer with a marimba tone. Each earcon lasted less than 1 second.

Fig. 3.

The auditory display for simple gaze gestures consisted of earcons for a) initial gesture recognition, b) starting a video call (task 1), c) ending a video call (task 2), d) marking an instrument as defective (task 3), and e) generating an instrument report (task 4)

To support feedback of gaze recognition, earcons were generated for the following interactions:

Initiation of simple gaze gesture

Generate instrument report, simple gaze recognized

Video call begin, simple gaze recognized

Video call end, simple gaze recognized

Instrument defective, simple gaze recognized

2.5 Parameter-Mapping Auditory Display for Repeated Gaze Gestures

In the case of auditory display for dimming the operating room lamp (tasks 5 and 6), a parameter-mapping auditory display mechanism was implemented. In parameter-mapping auditory display, changes in the underlying data are used to ‘play’ a real-time software instrument. Because audio has various parameters that may be altered (such as frequency, intensity, and timbre), continuous parameter mapping is suitable for displaying multivariate data. This technique makes the listener an active participant in the listening process by browsing the data set using the auditory display or by interactively changing the mappings that relate data to audio [16]. The listener can navigate through a set of data to perform a task. Thus, this method is useful for smoothly representing continuous changes in underlying data. In the case of light dimming, the auditory display conveys the current light brightness using a parameter-mapping mechanism. The brightness of the bulb is mapped to the pitch of a sine tone generator quantized to notes of a C major scale from MIDI note 60 (C, 261 Hz) to note 72 (C, 523 Hz). During brightness changes, the moving tone alternates with a steady sine tone of 523 Hz at a rate of 2 Hz so that the user can compare both tones. In this way, the user receives feedback on the current brightness relative to maximum brightness. When reaching full brightness (bulb on) or complete darkness (bulb off), a major chord is played back.
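The brightness-to-pitch mapping can be sketched as follows. The sketch assumes that brightness is normalized to the range 0 to 1, that brighter light maps to higher pitch (consistent with the 523 Hz reference tone representing maximum brightness), and that quantization rounds to the nearest scale degree; the function names are illustrative, and the actual system was implemented in PureData rather than Python. The MIDI-to-frequency conversion uses standard equal temperament with A4 = 440 Hz.

```python
# Notes of the C major scale between MIDI note 60 (C4) and 72 (C5).
C_MAJOR_NOTES = [60, 62, 64, 65, 67, 69, 71, 72]


def midi_to_hz(note: int) -> float:
    """Convert a MIDI note number to frequency in Hz (equal temperament, A4 = 440 Hz)."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)


def brightness_to_note(brightness: float) -> int:
    """Quantize a normalized brightness (0 = dark, 1 = full) to a C major scale note."""
    b = min(1.0, max(0.0, brightness))  # clamp out-of-range input
    index = round(b * (len(C_MAJOR_NOTES) - 1))
    return C_MAJOR_NOTES[index]


def brightness_to_hz(brightness: float) -> float:
    """Frequency of the moving sine tone for the given brightness."""
    return midi_to_hz(brightness_to_note(brightness))
```

Under these assumptions, complete darkness yields MIDI note 60 and full brightness yields note 72, whose frequency of roughly 523 Hz coincides with the steady reference tone. The alternation between the moving tone and the reference tone is not covered by this sketch.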

2.6 Experiment design

We conducted a user study employing the prototypical eye-tracking system to evaluate the effect of auditory display as a substitute for the loss of tactile feedback during sterile interaction tasks with respect to response time, subjective workload, and system acceptance.

2.6.1 Physical Setup

A laboratory operating room was outfitted with the eye-tracking system. The operating room consisted of an operating table, instrument table, and three main computer displays. The eye-tracking system was installed on a computer which sat out of sight of the participant. The participant sat on a stool next to the operating table and in front of the instrument table, facing three main computer displays. A speaker to play the generated auditory display synthesis output was placed opposite the participant near the operating table. As a means of visual feedback, full-screen icons that were shown after completing each task were displayed on the central computer monitor in the laboratory operating room.

2.6.2 Demographic composition

Twenty-six (26) participants completed the laboratory evaluation. The 18 male and 8 female participants had an average age of 29 (ranging from 24 to 43), and 9 wore glasses. One participant reported a slight hearing disability. Three participants came from medical backgrounds with experience in an operating room and the remainder were scientific researchers. Half of the participants were assigned to group AV (first performing the tasks with audiovisual feedback, followed by visual-only feedback) and the other half to group VA (first performing the tasks with visual-only feedback, followed by audiovisual feedback).

2.6.3 Procedure

The experiment consisted of a set of 6 tasks which represented use cases found in a typical ear-nose-throat (ENT) intervention, including initiating a video call, hanging up the video call, generating an instrument report, labeling an instrument as defective, turning a light on, and turning a light off. Each participant repeated the entire set of tasks once using audiovisual feedback and once using visual-only feedback. Before beginning the experiment, participants signed an informed consent agreement, the experimenter explained the usage of the eye-tracking system to the participant, and a calibration of the eye-tracking glasses was completed. For both audiovisual and visual-only feedback, each participant completed a training session of 12 tasks. Thereafter, the participants completed the set of 6 tasks 3 times each in a random sequence, thus resulting in 24 training and 36 test tasks completed per participant. During all tasks, an audio recording of a typical ENT surgery was played in the background.
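The randomized test sequence for one feedback condition can be sketched as below. The task labels and the function name `build_test_sequence` are illustrative, and a plain shuffle is an assumption, since the text does not state how the random sequence was generated.

```python
import random

# Illustrative labels for the 6 tasks named in the text.
TASKS = [
    "place call",
    "end call",
    "generate report",
    "mark defective",
    "light on",
    "light off",
]


def build_test_sequence(repetitions: int = 3, seed=None) -> list:
    """Return every task `repetitions` times in random order for one feedback condition."""
    rng = random.Random(seed)  # a seed makes the sequence reproducible
    sequence = TASKS * repetitions
    rng.shuffle(sequence)
    return sequence
```

With 3 repetitions, each condition contains 18 test tasks, which gives the 36 test tasks per participant across the audiovisual and visual-only conditions.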

For each individual task, participants were instructed by the experimenter, who played the role of the lead surgeon during an ENT intervention, to execute the desired task. After instruction, the participant pressed a timer button located on the instrument table, thereby recording the start time of the task. After performing the desired task, the participant again pressed the timer button to indicate task completion.

Following the entire sequence of tasks using either audiovisual or visual-only feedback, the participant completed a questionnaire consisting of demographic questions and three sets of evaluation questions. The Van der Laan technology acceptance scale [17] was used to judge the usefulness of and satisfaction with the novel eye-tracking system. The questionnaire provides 9 pairs of adjectives, such as “effective/superfluous” or “assisting/worthless”, to generate composite ratings for usefulness and satisfaction. The widely used NASA Task Load Index (TLX) scale [18] was used to measure the workload experienced by the participants during task completion. We employed the Raw TLX scale, as the weighted scale is more time intensive and has not been conclusively shown to provide additional benefits [19]. Finally, four additional questions were asked concerning task execution confidence, ease of use, necessary time to complete the task, and helpfulness of the feedback method.
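For readers unfamiliar with the Raw TLX, the overall score is commonly computed as the unweighted mean of the six subscale ratings (mental, physical, temporal, performance, effort, frustration), each on a 0 to 100 scale. The sketch below assumes this common definition; the function name is illustrative, and the example input uses the audiovisual subscale means reported in Table 2.

```python
from statistics import mean


def raw_tlx(subscales):
    """Unweighted mean of the six NASA TLX subscale ratings (each 0 to 100)."""
    if len(subscales) != 6:
        raise ValueError("Raw TLX expects exactly six subscale ratings")
    return mean(subscales)


# Audiovisual subscale means from Table 2, in the order:
# mental, physical, temporal, performance, effort, frustration.
audiovisual = [32.31, 23.27, 37.50, 25.00, 58.85, 18.05]
overall = raw_tlx(audiovisual)
```

Because the mean is linear, averaging the six subscale means reproduces the overall audiovisual workload of about 32.50 given in Table 2.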

2.7 Statistical analysis

Reaction time was recorded for each executed task as the time elapsed between the moment the system triggered the designated action and the moment the participant pressed the timer button at the end of the task. We excluded reaction times under 0.25 seconds because they are shorter than a physiologically plausible reaction time [20]. In such cases, users preemptively pressed the task-complete button before the eye-tracking system could successfully complete the task and provide auditory or visual feedback.
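The reaction-time definition and the exclusion rule can be expressed as a short sketch. It assumes timestamps in seconds on a common clock; the function names are illustrative, and only the 0.25-second threshold comes from the text.

```python
MIN_REACTION_S = 0.25  # exclusion threshold stated in the text


def reaction_time(trigger_time_s: float, button_time_s: float) -> float:
    """Elapsed time from the system triggering the action to the final button press."""
    return button_time_s - trigger_time_s


def valid_reaction_times(times_s):
    """Drop reaction times under the threshold, keeping all others."""
    return [t for t in times_s if t >= MIN_REACTION_S]
```

A premature button press, made before the system has responded, produces a very small or negative value under this definition and is therefore removed by the filter.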

Reaction times for each task (dependent variable) were analyzed using a mixed analysis of variance (ANOVA) with feedback (audiovisual, visual-only) as within-subjects factor (completed by all participants) and group (AV, VA) as between-subjects factor (starting with either audiovisual or visual-only feedback and completing the other condition thereafter); see Table 1. This was done to detect training effects on reaction time arising from the additional practice gained during the first completed condition. The questionnaires for user feedback were analyzed by repeated-measures ANOVA for the condition of feedback (audiovisual, visual-only), irrespective of the group, since no training effects were expected for subjective feedback. The significance level was set at p < .05. For details on the ANOVA method used, see [21].

Table 1.

Summary of statistical analysis of repeated ANOVA for reaction times and questionnaires

| Task | Condition | dF | F | p | sig | η2 |
|---|---|---|---|---|---|---|
| Call | time | 1, 24 | 5.14 | 0.03 | * | 0.18 |
| | time*group | 1, 24 | 6.63 | 0.017 | * | 0.22 |
| Hangup | time | 1, 24 | 35.35 | 0.00 | ** | 0.60 |
| | time*group | 1, 24 | 3.16 | 0.09 | | 0.12 |
| Generate Report | time | 1, 24 | 6.10 | 0.02 | * | 0.00 |
| | time*group | 1, 24 | 0.01 | 0.94 | | 0.00 |
| Mark Defective | time | 1, 24 | 73.53 | 0.00 | ** | 0.03 |
| | time*group | 1, 24 | 2.20 | 0.15 | | 0.08 |
| Light On | time | 1, 24 | 32.66 | 0.00 | ** | 0.58 |
| | time*group | 1, 24 | 1.11 | 0.30 | | 0.04 |
| Light Off | time | 1, 24 | 1.13 | 0.30 | | 0.05 |
| | time*group | 1, 24 | 6.03 | 0.02 | * | 0.20 |

| Questionnaire | dF | F | p | sig | η2 |
|---|---|---|---|---|---|
| NASA Raw TLX (overall) | 1, 25 | 8.77 | 0.01 | ** | 0.26 |
| van der Laan (overall) | 1, 25 | 10.21 | 0.00 | ** | 0.29 |
| Usefulness | 1, 25 | 7.57 | 0.01 | ** | 0.23 |
| Satisfaction | 1, 25 | 8.9 | 0.01 | ** | 0.26 |
| Agreement (overall) | 1 | 24.89 | 0.00 | ** | 0.50 |
| Confidence | 1, 25 | 29.56 | 0.00 | ** | 0.54 |
| Ease | 1, 25 | 3.22 | 0.09 | | 0.11 |
| Time | 1, 25 | 5.00 | 0.03 | * | 0.17 |
| Helpfulness | 1, 25 | 40.10 | 0.00 | ** | 0.62 |
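To help interpret the effect-size column, the sketch below shows how an effect size can be recovered from an F statistic and its degrees of freedom. It assumes that the η2 column reports partial eta squared, which the text does not state explicitly; the function name is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic: F * df1 / (F * df1 + df2)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Example rows: Call / time (F = 5.14, dF = 1, 24) and Helpfulness (F = 40.10, dF = 1, 25).
call_time = partial_eta_squared(5.14, 1, 24)
helpfulness = partial_eta_squared(40.10, 1, 25)
```

Under this assumption, the two example rows evaluate to approximately 0.18 and 0.62, in line with the values listed for them in Table 1.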

3 Results

Reaction time

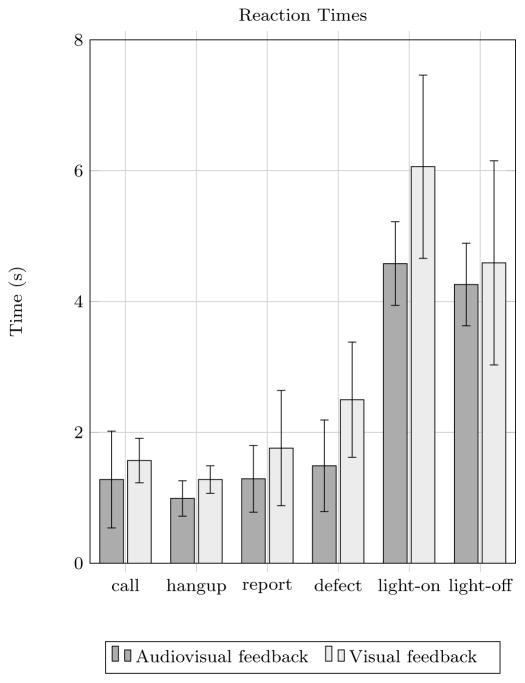

The average reaction times for audiovisual and visual-only feedback are shown in Figure 5. Average reaction times were significantly shorter when using audio for all tasks except dimming the light off, where significance was not reached. A slight training effect occurred for the light-off task, in which the VA group (first using visual-only feedback and then audiovisual) improved reaction time for the second round more than the AV group (first using audiovisual feedback and then visual-only feedback). In addition, the task of placing a video call also showed significant group interaction effects; the AV group maintained their reaction time from the first round (A) to the second (V), and the VA group improved their reaction time from the first round (V) to the second round (A).

Fig. 5.

Mean reaction times in seconds over all participants for each of the 6 tasks: (1) place call, (2) end call, (3) generate instrument report, (4) mark instrument as defective, (5) light on, and (6) light off

Workload

The results of the NASA Raw TLX questionnaire are shown in Table 2. The mean overall subjective workload for the audiovisual feedback was 32.50, whereas the mean subjective workload for visual-only feedback was 40.23. Interaction with audiovisual feedback showed significantly reduced subjective workload overall compared to interaction without auditory display. In every dimension, the mean workload rating was lower with auditory display than with visual-only feedback.

Table 2.

Mean and standard deviation values for questionnaire results over all participants for both audiovisual and visual feedback. Ranges for NASA Raw TLX are 0 (low workload) to 100 (high workload), for van der Laan -2 (fully reject) to 2 (fully accept) and for agreement questions 0 (strongly disagree) to 7 (strongly agree).

| Questionnaire | Audiovisual, M (SD) | Visual, M (SD) |
|---|---|---|
| NASA Raw TLX | 32.50 (13.93) | 40.23 (12.94) |
| Mental | 32.31 (19.91) | 40.58 (25.50) |
| Physical | 23.27 (21.21) | 29.62 (21.91) |
| Temporal | 37.50 (21.65) | 44.04 (23.83) |
| Performance | 25.00 (21.21) | 27.88 (12.90) |
| Effort | 58.85 (26.84) | 65.77 (23.05) |
| Frustration | 18.05 (26.84) | 33.46 (21.39) |
| Van der Laan | | |
| Usefulness | 1.26 (0.62) | 0.88 (0.61) |
| Satisfaction | 1.11 (0.66) | 0.69 (0.66) |
| Agreement | | |
| Confidence | 5.35 (0.69) | 3.96 (1.51) |
| Ease | 5.23 (0.95) | 4.69 (1.23) |
| Time | 5.00 (1.33) | 4.50 (1.42) |
| Helpfulness | 5.27 (1.08) | 2.73 (1.89) |

System acceptance

The results of the Van der Laan system acceptance questionnaire [17] are shown in Table 2. Using the Van der Laan scale, both the usefulness as well as satisfaction with the eye-tracking system were rated as significantly higher when using audiovisual feedback as opposed to visual-only feedback.

Participant agreement

The results of questions regarding participant satisfaction with confidence, ease of use, and helpfulness of the display are shown in Table 2. Audiovisual feedback was rated as providing significantly higher satisfaction than when using visual feedback for confidence, ease of use, and helpfulness. For satisfaction with the amount of time needed to complete the task, no significant difference was found.

4 Discussion

We developed a novel concept to interact with operating room technology that is both sterile and does not require the use of hands or feet for input. Touchless interaction systems are desired in the operating room to increase the possibilities of using certain systems without the need for sterilization of physical input devices. By implementing touchless interaction, surgical assistants or clinicians could save valuable time and costs by reducing the amount of sterilization that is involved with ordinary input devices.

However, because touchless interaction paradigms do not include the secondary haptic feedback generated when interacting with typical operating room input devices such as mice, keyboards, buttons, and joysticks, an auditory display was created to provide feedback to compensate for this loss.

The developed auditory display supports interaction with the eye-tracking system by notifying the user when a gaze target is recognized by the eye-tracking system, by providing feedback regarding which gesture was successfully recognized, and by generating continuous feedback for repeating gestures such as dimming operating room lights. The auditory display consisted of a set of earcons for simple gaze gestures for tasks including starting and ending a video call, marking an instrument as defective, and generating an instrument report. Continuous auditory feedback was generated to relay the brightness of an operating room light bulb, with notifications when the light was completely off or at full brightness.

The evaluation of the eye-tracking system compared the effects of audiovisual and visual-only feedback on reaction time, subjective workload, usefulness, and satisfaction for 6 common surgical assistant tasks.

For each of the subjective dependent measures, audiovisual feedback was shown to be superior to visual-only feedback. Average reaction time was significantly reduced when using the audiovisual feedback for all tasks except for turning the operating light off, for which reaction time was reduced but a statistical level of significance could not be reached. The largest average time saving using audiovisual feedback was found during the light-on task, perhaps because the light dimming function gives no feedback when full brightness is achieved. Thus, when changing lights to full brightness, participants may have achieved full brightness without having realized this. Completing such a task without receiving feedback could be especially frustrating, as noted in comments by some of the participants.

In addition to improving reaction time, audiovisual feedback was rated significantly higher for system acceptance, significantly lower for workload, and significantly higher for participant satisfaction for confidence, ease of use, and helpfulness of the feedback methods.

Implementing audiovisual display in the operating room is not without challenges. By design, operating rooms host a range of sound sources, such as speech and instrument noises, as well as functional warning and status monitoring sounds emanating, for example, from anesthesiology equipment. Previous studies that evaluated auditory display in the operating room have noted the need for auditory display design to take existing operating room sounds into account [23–27]. However, despite the operating environment often being noisy, according to Katz et al. [28], there is little evidence to demonstrate a direct association between excessive operating room noise and poor surgical outcomes. Additionally, Moorthy et al. [29] report that surgeons can successfully disregard extraneous noise in the operating room. Nevertheless, to minimize extraneous noise in the operating room generated, for instance, by the auditory feedback described in this work, a variety of playback methods can transmit the desired auditory feedback while permitting communication between surgical team members. These could include bone-conducting headphones, open-back headphones, small speakers located near the scrub nurse, or even parabolic speakers which focus sound output directly on the target nurse.

Further investigations must evaluate which sound output method provides the best usability while generating the least amount of noise and distraction to other team members. In addition, customized auditory display could play a role in a networked operating room [30], in which each member of a complex, interwoven intervention team receives personalized auditory feedback based on individualized auditory display preference and current task. Further evaluations should also determine the extent to which variations of the auditory display (including, for instance, the aforementioned personalized earcons or voice samples) affect performance and subjective workload. For clinical scenarios in which a high number of tasks must be completed by the nurse, a subsequent evaluation could similarly determine the effect of auditory display on performance and subjective workload. A further factor that must be considered in future auditory display designs is the total number of sounds to be incorporated: because earcons are abstract and need to be trained, a balance must be struck between the number of earcons and the ability of the nurse to learn and remember them successfully during the clinical routine. If the number of functions to be employed using auditory display is large, grouped or hierarchical earcons [31] could be developed to accelerate training. In doing so, similar clinical task functions could be grouped to correspond to similar earcons, thus hypothetically increasing extensibility and reducing initial training time compared to many dissimilar earcons.

5 Conclusion

As the number of technological possibilities within the operating room continues to expand, touchless interaction will be a field of focus as a sterile input method. This evaluation of prototypical eye-tracking for touchless interaction in the operating room demonstrates that such a system is best used in combination with an audiovisual display rather than a purely visual display. Auditory display has been shown to improve quantitative performance measures and to yield lower workload and higher satisfaction and acceptance than visual-only feedback. Touchless interaction for gesture recognition is not yet an established method within the operating room, and its introduction will be hindered if no secondary feedback mechanism is present. The use of auditory display or audiovisual display will help accelerate implementation and acceptance of novel touchless interaction in the sterile operating room.

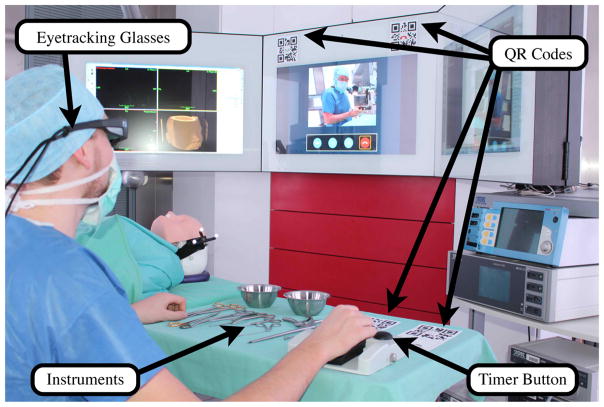

Fig. 1.

The developed eye-tracking system in the laboratory operating room. Using lightweight glasses, the user can execute predefined actions by gazing at QR codes located within the operating room.

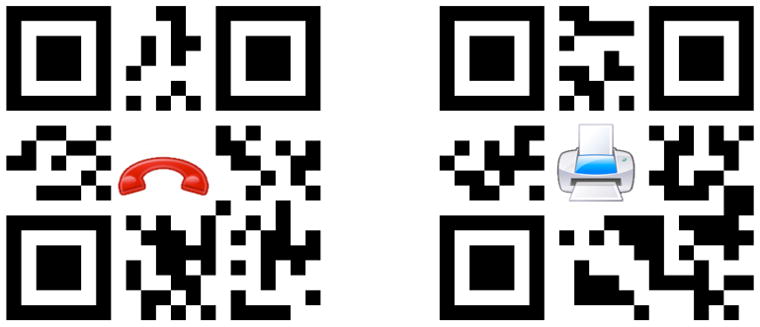

Fig. 2.

Two QR code examples used for eye tracking, showing a red telephone handset to denote hanging up a video call (left), and a printer to denote generating an instrument report (right)

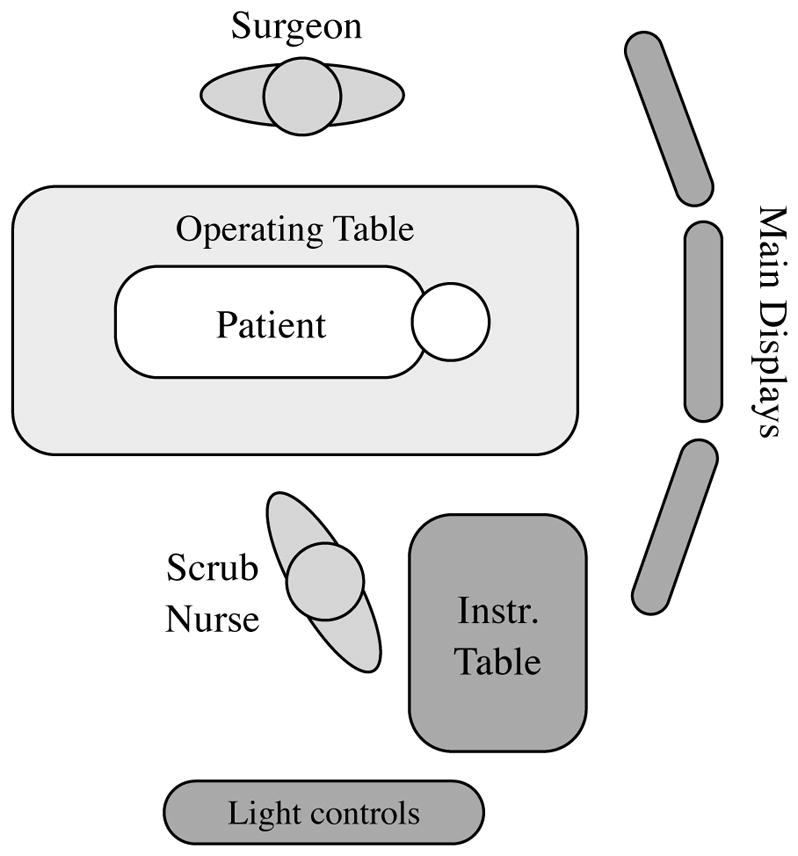

Fig. 4.

Laboratory evaluation setup showing placement of scrub nurse, surgeon, monitors, operating table, instrument table, and lighting controls.

Acknowledgments

Funding

The study was partly supported by National Institutes of Health grants P41 EB015902, P41 EB015898, R01EB014955, and U24CA180918.

Footnotes

Conflict of Interest

The authors state that they have no conflict of interest.

Ethical Approval

For this kind of study, no formal ethics approval is required by the institutional ethics committee.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Contributor Information

David Black, Medical Image Computing, University of Bremen, Jacobs University, and Fraunhofer MEVIS, Bremen, Germany.

Michael Unger, Innovation Center Computer Assisted Surgery, Leipzig, Germany.

Nele Fischer, Leipzig University of Applied Sciences, Leipzig, Germany.

Ron Kikinis, Medical Image Computing, University of Bremen and Fraunhofer MEVIS, Bremen, Germany.

Horst Hahn, Jacobs University and Fraunhofer MEVIS, Bremen, Germany.

Thomas Neumuth, Innovation Center Computer Assisted Surgery, Leipzig, Germany.

Bernhard Glaser, Innovation Center Computer Assisted Surgery, Leipzig, Germany.

References

- 1. Rutala W, White M, Gergen M, Weber D. Bacterial Contamination of Keyboards: Efficacy and Functional Impact of Disinfectants. Infection Control. 2006;27(4):372–377. doi: 10.1086/503340.

- 2. Stockert E, Langerman A. Assessing the Magnitude and Costs of Intraoperative Inefficiencies Attributable to Surgical Instrument Trays. J Am Coll Surg. 2014;219(4):646–55. doi: 10.1016/j.jamcollsurg.2014.06.019.

- 3. Fitzke T, Krail N, Kroll F, Ohlrogge L, Schröder F, Spillner L, Voll A, Dylla F, Herrlich M, Malaka R. Fußbasierte Interaktionen mit Computersystemen im Operationssaal. 14. Jahrestagung der Gesellschaft für Computer- und Roboterassistierte Chirurgie e.V. - CURAC; Bremen; September 2015.

- 4. Hatscher B, Luz M, Hansen C. Foot Interaction Concepts to Support Radiological Interventions. Mensch und Computer; Regensburg; September 2017.

- 5. Bizzotto N, Costanzo A, Bizzotto L, Regis D, Sandri A, Magnan B. Leap Motion Gesture Control With OsiriX in the Operating Room to Control Imaging. Surgical Innovation. 2014;21(6):655–656. doi: 10.1177/1553350614528384.

- 6. Black D, Ganze S, Hettig J, Hansen C. Auditory Display for Improving Free-hand Gesture Interaction. Mensch und Computer; Regensburg; September 2017.

- 7. Kirmizibayrak C, Radeva N, Wakid M, Philbeck J, Sibert J, Hahn J. Evaluation of gesture based interfaces for medical volume visualization tasks. Proceedings of the 10th international conference on Virtual reality; 2011.

- 8. Mewes A, Hensen B, Wacker F, Hansen C. Touch-less Interaction with Software in Interventional Radiology and Surgery: A Systematic Literature Review. Int J CARS. 2016;11(1):1–16. doi: 10.1007/s11548-016-1480-6.

- 9. Chetwood A, Kwok K, Sun L, Mylonas G, Clark J, Darzi A, Yang G. Collaborative eye tracking: a potential training tool in laparoscopic surgery. Surgical Endoscopy. 2012;26(7):2003–2009. doi: 10.1007/s00464-011-2143-x.

- 10. Ali S, Reisner L, King B, Cao A, Auner G, Klein M, Pandya A. Eye gaze tracking for endoscopic camera positioning: an application of a hardware/software interface developed to automate Aesop. Studies in health technology and informatics. 2007;132:47.

- 11. Glaser B, Unger M, Schellenberg T, Neumuth T. Use Cases für sterile blickgesteuerte Mensch-Maschine-Interaktionskonzepte im digitalen Operationssaal. 14. Jahrestagung der Gesellschaft für Computer- und Roboterassistierte Chirurgie e.V. - CURAC; Bremen; September 2015.

- 12. Kangas J, Akkil D, Rantala J, Isokoski P, Majaranta P, Raisamo R. Gaze Gestures and Haptic Feedback in Mobile Devices. SIGCHI Conference on Human Factors in Computing Systems; Toronto; April 2014.

- 13. Park Y, Kim J, Lee K. Effects of Auditory Feedback on Menu Selection in Hand-Gesture Interfaces. IEEE Multimedia. 2015;22(1):32–40. doi: 10.1109/MMUL.2015.5.

- 14. Black D, Hansen C, Nabavi A, Kikinis R, Hahn H. A Survey of Auditory Display in Image-Guided Interventions. Int J CARS. 2017. doi: 10.1007/s11548-017-1547-z.

- 15. Puckette M. Pure Data: another integrated computer music environment. Second Intercollege Computer Music Concerts; Tachikawa, Japan. 1996. pp. 37–41.

- 16. Hermann T. Taxonomy and definitions for sonification and auditory display. 14th International Conference on Auditory Display; Paris, France. 2008.

- 17. Van der Laan J, Heino A, de Waard D. A simple procedure for the assessment of acceptance of advanced transport telematics. Transportation Research Part C: Emerging Technologies. 1997;5:1–10. doi: 10.1016/S0968-090X(96)00025-3.

- 18. Byers J, Bittner A, Hill S. Traditional and raw task load index (TLX) correlations: Are paired comparisons necessary? In: Mital A, editor. Advances in Industrial Ergonomics and Safety. Taylor and Francis; 1989. pp. 481–485.

- 19. Hart SG. NASA-Task Load Index (NASA-TLX); 20 Years Later. Human Factors and Ergonomics Society 50th Annual Meeting; Santa Monica: HFES; 2006. pp. 904–908.

- 20. Jain A, Bansal R, Kumar A, Singh K. A comparative study of visual and auditory reaction times on the basis of gender and physical activity levels of medical first year students. Int J Appl Basic Med Res. 2015;5(2):124–127. doi: 10.4103/2229-516X.157168.

- 21. Field A. Discovering Statistics Using IBM SPSS Statistics. Sage Publishing; Thousand Oaks, CA, USA: 2013.

- 22. Black D, Hettig J, Luz M, Hansen C, Kikinis R, Hahn H. Auditory feedback to support image-guided medical needle placement. Int J CARS. 2017. doi: 10.1007/s11548-017-1537-1.

- 23. Bork F, Fuerst B, Schneider A, Pinto F, Graumann C, Navab N. Auditory and Visio-Temporal Distance Coding for 3-Dimensional Perception in Medical Augmented Reality. Proceedings of 2015 IEEE International Symposium on Mixed and Augmented Reality (ISMAR); 2015. pp. 7–12.

- 24. Cho B, Oka M, Matsumoto N, Ouchida R, Hong J, Hashizume M. Warning navigation system using realtime safe region monitoring for otologic surgery. Int J CARS. 2013;8:395–405. doi: 10.1007/s11548-012-0797-z.

- 25. Hansen C, Black D, Lange C, Rieber F, Lamadé W, Donati M, Oldhafer K, Hahn H. Auditory Support for Resection Guidance in Navigated Liver Surgery. Medical Robotics and Computer Assisted Surgery. 2013;9(1):36. doi: 10.1002/rcs.1466.

- 26. Kitagawa M, Dokko D, Okamura A, Yuh D. Effect of sensory substitution on suture-manipulation forces for robotic surgical systems. Thoracic and Cardiovascular Surgery. 2005;129(1):151–8. doi: 10.1016/j.jtcvs.2004.05.029.

- 27. Willems P, Noordmans H, van Overbeeke J, Viergever M, Tulleken C, van der Sprenkel J. The impact of auditory feedback on neuronavigation. Acta Neurochirurgica. 2005;147:167–173. doi: 10.1007/s00701-004-0412-3.

- 28. Katz J. Noise in the operating room. Anesthesiology. 2014;121(4):894–8. doi: 10.1097/ALN.0000000000000319.

- 29. Moorthy K, Munz Y, Undre S, Darzi A. Objective evaluation of the effect of noise on the performance of a complex laparoscopic task. Surgery. 2004;136(1):25–30. doi: 10.1016/j.surg.2003.12.011. Discussion 31.

- 30. Rockstroh M, Franke S, Hofer M, Will A, Kasparick M, Andersen B, Neumuth T. OR.NET: Multi-perspective qualitative evaluation of an integrated operating room based on IEEE 11073 SDC. Int J CARS. 2017. doi: 10.1007/s11548-017-1589-2.

- 31. Blattner M, Sumikawa D, Greenberg R. Earcons and Icons: Their Structure and Common Design Principles. Human-Computer Interaction. 1989;4(1):11–44. doi: 10.1207/s15327051hci0401_1.