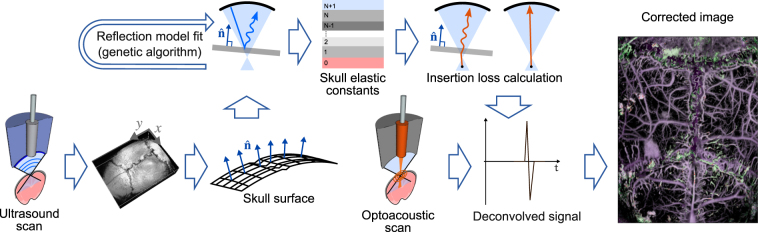

Figure 3.

Workflow of the virtual craniotomy deconvolution algorithm. Transcranial 3D OA and pulse-echo US images are acquired simultaneously. The geometry of the skull and the apparent position of the brain vasculature are extracted from the time of flight, assuming a constant speed of sound. The OA and US signals are then transformed into the frequency domain, and the ultrasound reflection spectrum is fitted with a wave model. Subsequently, the insertion loss is calculated using the skull elastic constants that yield the best fit to the reflection model. The insertion loss is used to deconvolve the OA signal spectrum. Finally, the deconvolved spectrum is transformed back into the time domain to form a distortion-corrected image of the mouse brain.
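The deconvolution step (frequency transform, division by the insertion loss, inverse transform) can be sketched for a single OA A-line as follows. This is a minimal sketch, assuming the complex skull insertion loss is already available for every frequency bin. The function name `deconvolve_insertion_loss`, the regularization constant `eps` and the synthetic skull model are hypothetical and are not taken from the figure. The regularized inverse filter conj(T) / (|T|^2 + eps) is a swapped-in, Wiener-style choice for stabilizing the division where the skull transmits almost nothing. The caption does not state how that division is handled.

```python
import numpy as np


def deconvolve_insertion_loss(oa_signal, transmission, eps=1e-6):
    """Correct one OA A-line for skull distortion in the frequency domain.

    oa_signal    : real 1D array sampled uniformly in time
    transmission : complex skull transmission spectrum (insertion loss),
                   one value per np.fft.rfft bin of oa_signal (assumed given,
                   e.g. computed from the best-fit elastic constants)
    eps          : regularization constant (hypothetical choice)
    """
    spectrum = np.fft.rfft(oa_signal)
    if transmission.shape != spectrum.shape:
        raise ValueError("transmission must have one value per rfft bin")
    # Regularized inverse filter: close to 1/T where |T|^2 >> eps,
    # rolls off where the skull transmits almost nothing (avoids noise blow-up).
    inverse = np.conj(transmission) / (np.abs(transmission) ** 2 + eps)
    # Back to the time domain, keeping the original number of samples.
    return np.fft.irfft(spectrum * inverse, n=len(oa_signal))


# Synthetic check with a made-up skull model (not measured data).
n = 1024
t = np.arange(n)
# N-shaped OA pulse (derivative of a Gaussian) centred at sample 200.
pulse = -(t - 200) / 5.0 * np.exp(-((t - 200) ** 2) / (2 * 5.0 ** 2))
f = np.fft.rfftfreq(n)  # frequency in cycles per sample
# Hypothetical transmission: frequency-dependent attenuation plus a 30-sample delay.
skull = 0.6 * np.exp(-5.0 * f) * np.exp(-2j * np.pi * f * 30)
distorted = np.fft.irfft(np.fft.rfft(pulse) * skull, n=n)
recovered = deconvolve_insertion_loss(distorted, skull)
```

In the synthetic check, `distorted` is attenuated and delayed relative to `pulse`, while `recovered` closely matches it. A larger `eps` trades accuracy for robustness against noise at frequencies the skull strongly attenuates.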