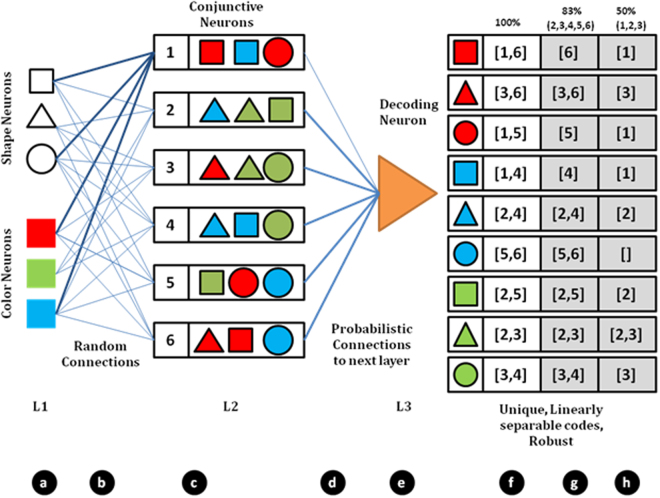

Figure 1.

Architecture for pattern classification presented in this report. (a) L1 represents a set of input neurons. (b) These are connected probabilistically to the next layer, L2. (c) Such probabilistic connections give rise to coarse conjunctive neurons. As an example, Neuron 1 in L2 receives inputs from neurons coding for square, circle, red and blue, and activates for red square, blue square and red circle. With several such neurons in L2, a population code is formed, as highlighted in (f). When a red square is presented, neurons 1 and 6 are activated (population code [1,6]), while for a blue circle the population code is [5,6]. These codes are linearly separable when considered as binary vectors in 6 dimensions. With such linearly separable codes, a single neuron in layer 3 (modeled here by a perceptron as a proxy) can learn to decode any one of the unique population codes using an STDP mechanism. Even if the connections between layer 2 and layer 3 are probabilistic, as in (d), the code seen by the perceptron is unique and linearly separable. For example, if a neuron in L3 does not receive a connection from Neuron 1 in L2, the population code it sees, shown in (g), is still unique for each pattern, so it can decode the pattern. When the connection probability is reduced further (to 50%), as in (h), the population code is no longer unique.
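
The caption's argument can be illustrated with a minimal Python sketch. It is not the report's implementation, and several parts are assumptions. The response table `L2_CODES` is hypothetical: only the codes [1,6] for red square and [5,6] for blue circle, and Neuron 1's responses, come from the caption, and the remaining entries are invented. The subsets of connected L2 neurons are single hand-picked examples rather than random draws. A plain perceptron learning rule stands in for STDP.

```python
# Hypothetical L2 responses: one tuple per pattern, one entry per L2 neuron 1..6.
# Fixed by the caption: red square -> [1, 6], blue circle -> [5, 6], and
# Neuron 1 firing for red square, blue square and red circle.
# All other entries are invented for illustration.
L2_CODES = {
    "red square":  (1, 0, 0, 0, 0, 1),
    "blue square": (1, 1, 0, 1, 1, 0),
    "red circle":  (1, 0, 1, 1, 0, 0),
    "blue circle": (0, 0, 0, 0, 1, 1),
}


def seen_by_l3(codes, connected):
    """Restrict each code to the L2 neurons (1-based) wired to one L3 neuron."""
    return {p: tuple(c[i - 1] for i in connected) for p, c in codes.items()}


def is_unique(codes):
    """True if every pattern maps to a different population code."""
    return len(set(codes.values())) == len(codes)


def train_perceptron(codes, target, max_epochs=1000):
    """One-vs-rest perceptron rule (a stand-in for STDP, not STDP itself)."""
    n = len(next(iter(codes.values())))
    w, b = [0] * n, 0
    for _ in range(max_epochs):
        errors = 0
        for pattern, x in codes.items():
            y = 1 if pattern == target else -1
            score = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * score <= 0:  # misclassified or on the boundary: update
                w = [wi + y * xi for wi, xi in zip(w, x)]
                b += y
                errors += 1
        if errors == 0:  # a full pass without mistakes: converged
            break
    return w, b


def decode(w, b, x):
    """The L3 neuron fires when its weighted input exceeds threshold."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b > 0


full = seen_by_l3(L2_CODES, [1, 2, 3, 4, 5, 6])   # all connections present
no_n1 = seen_by_l3(L2_CODES, [2, 3, 4, 5, 6])     # Neuron 1 missing, as in (g)
half = seen_by_l3(L2_CODES, [2, 3, 4])            # one possible 50% wiring, as in (h)

print(is_unique(full), is_unique(no_n1), is_unique(half))  # True True False

w, b = train_perceptron(no_n1, "red square")
print([p for p, x in no_n1.items() if decode(w, b, x)])    # ['red square']
```

In this hypothetical table, dropping Neuron 1 leaves four distinct codes, so the perceptron still isolates the red square. Keeping only neurons 2, 3 and 4 maps both red square and blue circle to the all-zero code, so no decoder can tell them apart.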