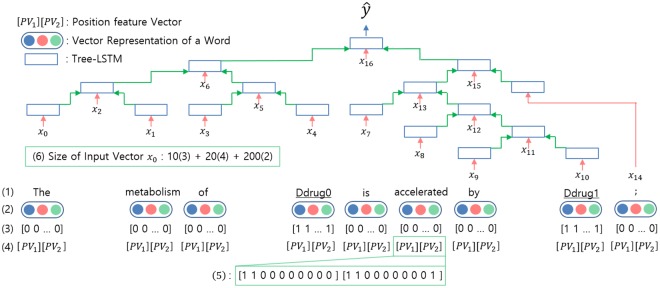

Fig 2. The architecture of our recursive neural network model.

Our model is a variation of the binary tree-LSTM model. (1) The words in a sentence; the names of the target drugs are underlined. (2) The vector representation of a word, obtained by word embedding lookup. (3) The subtree containment feature, which represents the importance of a node. (4) The position feature vector, which represents the relative distances of the two target drugs from the current word position. (5) An example of the position feature vector, where the current word is “accelerated.” (6) The concatenated input vector $x_0$ of our model has size 10 (subtree containment feature; (3) in the figure) + 20 (position feature; (4) in the figure) + 200 (word embedding; (2) in the figure) = 230.
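The composition of $x_0$ in item (6) can be sketched in Python as follows. This is a minimal, hypothetical illustration, not the authors' implementation. Only the three feature sizes (10, 20, 200) come from the caption. Everything else is an assumption made for illustration: the names `build_x0`, `relative_distances`, and `lookup`, the split of the 20-dimensional position feature into two 10-dimensional halves (one per target drug), the shared, randomly initialized lookup tables, the example sentence, and the placeholder subtree containment vector.

```python
import random

# Feature sizes from Fig 2, item (6).
SUBTREE_DIM = 10    # subtree containment feature, item (3)
POSITION_DIM = 20   # position feature, item (4); ASSUMED 10 per target drug
EMBED_DIM = 200     # word embedding, item (2)

def relative_distances(word_idx, drug1_idx, drug2_idx):
    """Signed token distances from the current word to each target drug."""
    return word_idx - drug1_idx, word_idx - drug2_idx

def lookup(table, key, dim, rng):
    """Hypothetical embedding table: a random vector is created on first use."""
    if key not in table:
        table[key] = [rng.uniform(-0.1, 0.1) for _ in range(dim)]
    return table[key]

def build_x0(tokens, word_idx, drug1_idx, drug2_idx, subtree_vec, tables, rng):
    """Concatenate subtree (10) + position (20) + word embedding (200) features."""
    if len(subtree_vec) != SUBTREE_DIM:
        raise ValueError("subtree containment vector must have 10 entries")
    d1, d2 = relative_distances(word_idx, drug1_idx, drug2_idx)
    half = POSITION_DIM // 2
    # ASSUMPTION: one position table shared by both drugs, keyed by signed distance.
    position_vec = (lookup(tables["position"], d1, half, rng)
                    + lookup(tables["position"], d2, half, rng))
    word_vec = lookup(tables["word"], tokens[word_idx], EMBED_DIM, rng)
    return subtree_vec + position_vec + word_vec

# Usage with a hypothetical sentence; DRUG1 and DRUG2 stand for the target drugs.
tokens = ["DRUG1", "accelerated", "the", "clearance", "of", "DRUG2"]
rng = random.Random(0)
tables = {"word": {}, "position": {}}
subtree_vec = [0.0] * SUBTREE_DIM  # placeholder values, for illustration only
x0 = build_x0(tokens, 1, 0, 5, subtree_vec, tables, rng)
print(relative_distances(1, 0, 5))  # (1, -4)
print(len(x0))                       # 230
```

Concatenating the three parts in a fixed order gives every word the same 230-dimensional layout, so a single input weight matrix can be applied at every leaf of the tree.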