Abstract

Study Objectives:

The American Academy of Sleep Medicine has published manuals for scoring polysomnograms that recommend time spent in non-rapid eye movement sleep stages (stage N1, N2, and N3 sleep) be reported. Given the well-established large interrater variability in scoring stage N1 and N3 sleep, we determined the range of time in stage N1 and N3 sleep scored by a large number of technologists when compared to reasonably estimated true values.

Methods:

Polysomnograms of 70 females were scored by 10 highly trained sleep technologists, two each from five different academic sleep laboratories. Range and confidence interval (CI = difference between the 5th and 95th percentiles) of the 10 times spent in stage N1 and N3 sleep assigned in each polysomnogram were determined. Average values of times spent in stage N1 and N3 sleep generated by the 10 technologists in each polysomnogram were considered representative of the true values for the individual polysomnogram. Accuracy of different technologists in estimating delta wave duration was determined by comparing their scores to digitally determined durations.

Results:

The CI range of the ten N1 scores was 4 to 39 percent of total sleep time (% TST) in different polysomnograms (mean CI ± standard deviation = 11.1 ± 7.1 % TST). Corresponding range for N3 was 1 to 28 % TST (14.4 ± 6.1 % TST). For stage N1 and N3 sleep, very low or very high values were reported for virtually all polysomnograms by different technologists. Technologists varied widely in their assignment of stage N3 sleep, scoring that stage when the digitally determined time of delta waves ranged from 3 to 17 seconds.

Conclusions:

Manual scoring of non-rapid eye movement sleep stages is highly unreliable among highly trained, experienced technologists. Measures of sleep continuity and depth that are reliable and clinically relevant should be a focus of clinical research.

Citation:

Younes M, Kuna ST, Pack AI, Walsh JK, Kushida CA, Staley B, Pien GW. Reliability of the American Academy of Sleep Medicine rules for assessing sleep depth in clinical practice. J Clin Sleep Med. 2018;14(2):205–213.

Keywords: digital sleep analysis, interrater variability, sleep depth

BRIEF SUMMARY

Current Knowledge/Study Rationale: Although interrater variability in sleep stage scoring is well documented, results of such studies are typically given as agreement between technologists in pooled data that include several dozen polysomnograms. The effect of this variability on the accuracy of scoring sleep stages in a single polysomnogram scored by a single technologist, as is the case in clinical practice, is not known.

Study Impact: When 70 polysomnograms were scored by 10 experienced technologists, the amount of time in stage N1 and N3 sleep ranged from very high to very low within almost every record, depending on who scored it. Accordingly, when a polysomnogram is scored by a single technologist, dividing non-rapid eye movement sleep into three stages has little value in evaluating sleep depth.

INTRODUCTION

The American Academy of Sleep Medicine (AASM) has published manuals for scoring polysomnograms (PSGs) that have consistently recommended that times spent in, and percent of total sleep time (% TST) occupied by, different non-rapid eye movement (NREM) sleep stages (stage N1, N2 and N3 sleep) be reported.1,2 The implications of these recommendations are that these values are reliably determined by a single scorer and that sleep stage information is useful in the clinical management of patients with sleep disorders.

A number of studies have evaluated epoch-by-epoch agreement between two technologists in scoring individual sleep stages.3–14 Scoring of stage N1 and N3 sleep had the least agreement; average agreement ranged from 20% to 70% in different studies. A study by Norman et al. in 2000 compared scoring among five expert technologists. They found that the likelihood that an epoch scored by any technologist as stage N1 sleep received the same score from the other four technologists was less than 4%, whereas the corresponding number for stage N3 sleep was less than 18%.5 The implication of this important finding for the clinical utility of the three-stage classification of NREM sleep was not pursued further.

In clinical practice a PSG is typically scored by one technologist. Results of previous studies indicate that stage N1 and N3 sleep times reported by the local technologist may be different if the PSG were scored by another technologist. They do not fully detail the possible extent of these differences (confidence interval [CI] of the score of a single technologist) or how close the scoring of either technologist is to estimated true stage N1 and N3 sleep times. Previous studies that assessed reliability of scoring stage N1 and N3 sleep time in minutes (as opposed to epoch-by-epoch agreement) typically involved two scorers and estimates of true values of stage N1 and N3 sleep times (eg, average stage N1 and N3 sleep times assigned by a large number of technologists to the same PSGs) were not determined.

Data from a recent comparison of sleep stage scoring by a digital scoring system against the scoring of 10 highly experienced academic technologists12 provided a unique opportunity to examine the reliability of manual sleep stage scoring. Specifically, the range of stage N1 and N3 sleep times, in minutes, allocated by the 10 technologists to each PSG allowed calculation of a CI for a single technologist scoring a single PSG. Furthermore, given the large number of scorers evaluating each PSG, the averages of the 10 stage N1 sleep times and the 10 stage N3 sleep times assigned to each PSG may reasonably be used as estimates of the “true” times for that PSG. Finally, because the poor epoch-by-epoch agreement among multiple expert scorers reported by Norman et al.5 was neither replicated nor subsequently emphasized, we repeated their analysis using epoch-by-epoch scores available from six of the original scorers.

METHODS

The current study reports results of additional analysis of data from 70 PSGs used previously in studies to evaluate the effect of using different hypopnea definitions on the apnea-hypopnea index and for validation of an automatic scoring system.11,12 Methods used to select the 70 PSGs (which were obtained from participants in a community-based cohort study of sleep-disordered breathing in women in midlife), scoring methods, participating institutions, data collection and transfer, and compilation and analysis of the scoring from the 10 sleep technologists have been described previously.11 Briefly, the PSGs had at least 5 hours of scorable data and included four electroencephalogram (EEG) signals (C3/M2, C4/M1, O1/M2, O2/M1), bilateral electrooculograms, chin muscle activity, rib cage and abdominal excursions, nasal pressure, oronasal thermistry, body position, electrocardiogram, and oxyhemoglobin saturation.

Two highly trained, experienced, and certified PSG technologists from each of five AASM-certified sleep centers (University of Pennsylvania, University of Wisconsin at Madison, St. Luke's Hospital in Chesterfield, Missouri, Stanford University, and Harvard University) manually scored the PSGs with their local data acquisition systems, using the AASM guidelines2 applicable at the time except for the absence of frontal leads. The digital scoring system being evaluated in the previous study12 was not used in any way during manual scoring. The results of manual scoring from all five centers were submitted to the University of Pennsylvania for independent analyses.

For the current study, the original epoch-by-epoch sleep staging of the 70 PSGs was requested in 2016 from all five centers, but data were available from only three (a total of six technologists per PSG). The other two centers indicated that these records were no longer available. Contributing centers were University of Pennsylvania, St. Luke's Hospital, and Stanford University. Epoch-by-epoch scores were compiled by University of Pennsylvania and forwarded to the first author (MY) for further analysis. It should be noted that using scoring data from two technologists from each center (with common training and periodic reliability assessments) should increase inter-scorer agreement relative to using scorers from different centers.

The summaries presented here in the tables and figures were generated from these raw data. Summary results were sent back to University of Pennsylvania for confirmation. In addition, the original PSG files were digitally analyzed in 2016 to determine the duration of delta waves in each 30-second epoch in order to evaluate the accuracy of individual technologists in estimating delta duration per epoch.

Evaluation of Agreement Between Technologists in Scoring Sleep Stages

Epoch-by-Epoch Comparisons

This analysis used epoch-by-epoch scores of six technologists for each PSG, aligned at the lights-out epoch. We used the method first proposed by Norman et al.5 to determine the extent of agreement among the six technologists when a given stage was assigned by at least one technologist. For example, if stage N1 sleep was assigned to an epoch by any sleep technologist, we determined how many of the other five technologists scored the epoch as stage N1 sleep. The maximum number of concurring scores was therefore 6 (unanimous score) and the minimum was 1 (no other technologist concurred). The same was done for all sleep stages. For each PSG, a summary table was generated that contained the number of epochs in which each stage was scored by at least one technologist and the percentages of those epochs that were scored unanimously, by five of the six, four of the six, and so on. The average (standard deviation) of these percentages across PSGs was determined (n = 70).
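The epoch-level tally described above can be sketched in Python. This is a minimal illustration, not the code used in the study: it assumes each PSG is available as a list of epochs, each epoch holding one stage label per technologist (for example "W", "N1", "N2", "N3", "R"), and the function names `agreement_profile` and `average_profiles` are hypothetical. Skipping PSGs in which a stage was never scored when averaging is also an assumption.

```python
import statistics
from collections import Counter, defaultdict

def agreement_profile(epochs):
    """epochs: list of epochs, each a list with one stage label per technologist.

    For every stage assigned by at least one technologist in an epoch, record how many
    technologists assigned it (1 = nobody else concurred, 6 = unanimous for six scorers).
    Returns {stage: {n_agreeing: % of the epochs in which that stage was scored at least once}}."""
    counts = defaultdict(Counter)  # stage -> Counter{number of agreeing scorers: epochs}
    for scores in epochs:
        for stage, n_agree in Counter(scores).items():
            counts[stage][n_agree] += 1
    profile = {}
    for stage, c in counts.items():
        total = sum(c.values())  # epochs where this stage was scored by >= 1 technologist
        profile[stage] = {n: 100.0 * c[n] / total for n in sorted(c)}
    return profile

def average_profiles(profiles, stage, k=6):
    """Mean and SD across PSGs of the percentages for 1..k agreeing technologists.
    PSGs in which `stage` was never scored are skipped (an assumption)."""
    rows = [[p[stage].get(n, 0.0) for n in range(1, k + 1)] for p in profiles if stage in p]
    return [(statistics.fmean(col), statistics.stdev(col)) for col in zip(*rows)]
```

Counting per epoch (rather than per technologist) means an epoch split 3/3 between N1 and N2 contributes once to each stage, with three agreeing scorers in both.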

Variability in Sleep Stage Durations

Sleep stage durations were available from all 10 technologists, as they had been submitted to the University of Pennsylvania at the time of the original report (2012). For each PSG and each sleep stage we determined the average and the 5% to 95% CI of the times (n = 10) scored by the 10 technologists. Awake times were expressed as percent of the total recording time, and times in stage N1, N2, N3, and R sleep were expressed as percent of the total sleep time (% TST). Sleep durations scored by individual technologists were then plotted against the average of the 10 values in each PSG. Intraclass correlation coefficients (ICCs) were calculated for the relationship between the sleep times assigned by each individual technologist to each PSG and the average of the 10 sleep durations obtained in each PSG. To determine whether individual technologists tended to systematically underestimate or overestimate sleep durations, we calculated the difference between each technologist's sleep duration and the average score for each PSG.
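The per-PSG summary and the bias calculation described above can be sketched as follows. This is an illustration under assumptions: the article does not state how the 5th and 95th percentiles of 10 values were interpolated, so linear interpolation between order statistics (NumPy's default rule) is assumed here, and the names `percentile`, `psg_summary`, and `technologist_bias` are hypothetical.

```python
import statistics

def percentile(values, p):
    """Percentile with linear interpolation between order statistics (assumed rule)."""
    xs = sorted(values)
    pos = (len(xs) - 1) * p / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (xs[hi] - xs[lo]) * (pos - lo)

def psg_summary(values):
    """values: one stage duration per technologist for a single PSG (e.g. % TST, n = 10).
    Returns the average and the CI width (95th minus 5th percentile)."""
    return statistics.fmean(values), percentile(values, 95) - percentile(values, 5)

def technologist_bias(table):
    """table[i][j]: value scored by technologist j in PSG i.
    Returns (mean, SD) of each technologist's difference from the per-PSG average."""
    diffs = [[] for _ in table[0]]
    for row in table:
        avg = statistics.fmean(row)  # average of all technologists for this PSG
        for j, v in enumerate(row):
            diffs[j].append(v - avg)
    return [(statistics.fmean(d), statistics.stdev(d)) for d in diffs]
```

A technologist with a consistently positive mean difference tends to overscore the stage; a large SD with a near-zero mean indicates inconsistency rather than systematic bias.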

Relationship Between Digitally Measured Delta Wave Durations and Interrater Scoring Differences of Stage N3 Sleep

Michele Sleep Scoring system (MSS) (Cerebra Health, Winnipeg, Canada) is a validated automated scoring system.12 MSS calculates the total duration of delta waves in each 30-second epoch. It first applies a 3-Hz low-pass filter to the EEG signal to remove superimposed high-frequency waves that confound the visual determination of delta wave amplitude and duration. The filtered EEG signal is then scanned to identify waves with a peak-to-trough deflection greater than 75 μV and a duration between 0.3 and 2.5 seconds, and the durations of all eligible waves in each 30-second epoch are summed. To determine the accuracy of individual technologists in estimating delta wave duration, all 30-second epochs scored as NREM sleep (n = 38,778 epochs) were sorted according to their delta wave duration, and the entire range was grouped into 1-second intervals (20 intervals from 0 to 20 seconds of delta waves per epoch). The percentage of epochs within each interval that were scored as stage N3 sleep was determined for each of the six sleep technologists with available epoch-by-epoch data. In this analysis, scoring stage N3 sleep when MSS-derived delta wave duration was less than 6 seconds indicated a tendency for the technologist to overestimate delta wave duration, and not scoring stage N3 sleep when MSS delta duration was at least 6 seconds indicated a tendency to underestimate it.
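A rough sketch of the delta wave measurement described above follows. The article specifies only the 3-Hz low-pass filter, the 75-μV peak-to-trough criterion, the 0.3 to 2.5 second duration window, and summation per 30-second epoch; everything else here is an assumption and is not the MSS implementation. In particular, the filter is assumed to be a second-order Butterworth biquad (RBJ cookbook coefficients), a "wave" is assumed to be the interval between successive upward zero crossings of the filtered signal, and waves straddling an epoch boundary are ignored. The function names are hypothetical.

```python
import math

def lowpass(x, fs, fc=3.0):
    """Assumed filter: 2nd-order Butterworth low-pass biquad (RBJ cookbook form, Q = 1/sqrt(2))."""
    q = 1 / math.sqrt(2)
    w0 = 2 * math.pi * fc / fs
    alpha = math.sin(w0) / (2 * q)
    cw = math.cos(w0)
    a0 = 1 + alpha
    b0 = b2 = (1 - cw) / 2 / a0
    b1 = (1 - cw) / a0
    a1 = -2 * cw / a0
    a2 = (1 - alpha) / a0
    y, x1, x2, y1, y2 = [], 0.0, 0.0, 0.0, 0.0
    for xn in x:
        yn = b0 * xn + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1, y2, y1 = x1, xn, y1, yn  # shift the filter's delay line
        y.append(yn)
    return y

def delta_seconds(y, fs, min_p2p_uv=75.0, min_s=0.3, max_s=2.5):
    """Sum durations of waves meeting the amplitude and duration criteria.
    Assumption: a wave spans two successive upward zero crossings."""
    ups = [i for i in range(1, len(y)) if y[i - 1] < 0 <= y[i]]
    total = 0.0
    for start, end in zip(ups, ups[1:]):
        dur = (end - start) / fs
        seg = y[start:end]
        if min_s <= dur <= max_s and max(seg) - min(seg) > min_p2p_uv:
            total += dur
    return total

def delta_per_epoch(eeg_uv, fs, epoch_s=30):
    """Filter the whole EEG trace (in μV), then measure delta seconds in each full epoch."""
    y = lowpass(eeg_uv, fs)
    n = int(epoch_s * fs)
    return [delta_seconds(y[i:i + n], fs) for i in range(0, len(y) - n + 1, n)]
```

With this sketch, a 30-second, 1-Hz sine wave of 100 μV amplitude sampled at 100 Hz yields somewhat under 30 seconds of delta (incomplete cycles at the epoch edges are not counted), whereas the same wave at 20 μV amplitude, or a 10-Hz wave of 100 μV, yields none.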

RESULTS

Participants were 70 females aged 51.1 ± 4.2 years. Body mass index was 32.9 ± 9.2 kg/m².11 Average apnea-hypopnea index was 12.1 ± 13.3 events/h (range, 0 to 74 events/h).12 Average times in different sleep stages (manual scoring) were 43 ± 18, 244 ± 47, 30 ± 21, and 81 ± 25 minutes for stage N1, N2, N3, and R, respectively.12 TST was 398 ± 52 minutes and sleep efficiency was 84 ± 8%.

Epoch-by-Epoch Agreement Among Six Technologists

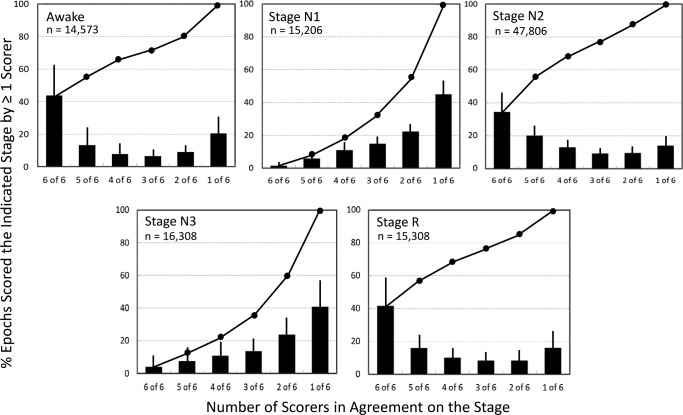

Figure 1 shows the likelihood of agreement among the six technologists when one of them scored a given stage. When one technologist scored an epoch as awake, the likelihood of all technologists agreeing (6 of 6) was 43.7 ± 17.9% and the likelihood of a majority (at least 4 of 6) was 64.1 ± 14.3% (Figure 1, top left). In 20.6 ± 10.9% of cases, the stage score was not supported by any other technologist. Similar results were obtained for stage N2 and R sleep (Figure 1). By comparison, in 45.2 ± 9.2% of epochs scored as stage N1 sleep by one technologist the decision was not supported by anyone else (1 of 6), and in only 1.5 ± 1.1% was the score unanimous. A majority was reached in only 18.1 ± 6.5% of such cases. Similarly, in 40.8 ± 15.1% of epochs scored as stage N3 sleep by one technologist, no other scorer scored stage N3 sleep (1 of 6), and in only 3.8 ± 7.9% was the score unanimous. A majority was reached in only 22.0 ± 17.3% of such cases.

Figure 1. Frequency of agreement among the six technologists when a given stage is scored by at least one of the technologists.

Bars are standard deviations. The continuous line is the cumulative percentage. Note that only 1.5 ± 1.2% of epochs scored by any technologist as stage N1 sleep received a unanimous (6 of 6) N1 score, and a majority (at least 4 of 6) N1 score was reached in only 18% of such cases. A similar pattern was observed for stage N3 sleep.

Impact of Inter-Rater Scoring Differences on Estimated Sleep Times in Individual Polysomnograms

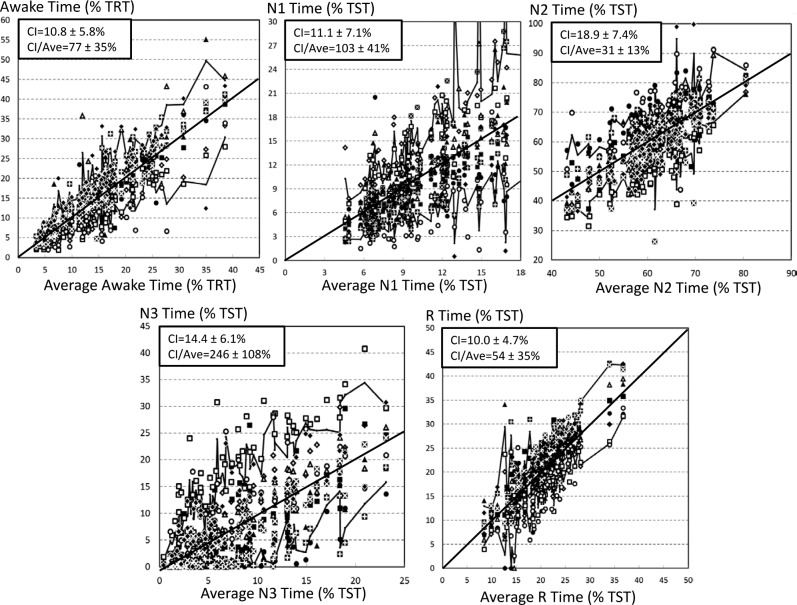

Figure 2 shows the relationship between average sleep stage times in each PSG (n = 70) and the range of values assigned by the 10 technologists to the same PSG. Solid jagged lines represent the CI of the 10 sleep times in individual PSGs. For each PSG and each stage we calculated the ratio CI / average sleep time in the relevant stage (abscissa value). Although the CI was widest for stage N2 sleep (18.9 ± 7.4 % TST), this stage had the lowest CI / average ratio (31 ± 13%; Figure 2), indicating that the error resulting from differences between scorers is small relative to the true stage N2 sleep time. This ratio was highest for stage N3 sleep (CI = 246 ± 108% of the average of the 10 scores) and stage N1 sleep (103 ± 41%; Figure 2). Assuming an upper normal value of 10% for percent of stage N1 sleep, in nearly all PSGs, regardless of the average value obtained from the 10 technologists, percent of stage N1 sleep could be reported as well below normal or well above normal depending on who scored the PSG. Similarly, reported stage N3 sleep time ranged from zero to high values regardless of the average stage N3 sleep time of the PSG (Figure 2).

Figure 2. Duration of different sleep stages scored by individual technologists as a function of the average (of 10 scores) duration of the stage in individual PSGs.

Each PSG is represented by 10 points aligned at the average of the 10 durations. Technologists are represented by different symbols. Upper and lower irregular lines join the 5th and 95th percentiles of the individual PSGs. The solid diagonal line is the line of identity. Note that the confidence interval (difference between the upper and lower lines) is quite variable and generally quite wide for stage N1 and stage N3 sleep. Three PSGs are not represented in the N1 panel because including them would nearly double both axes, compressing most of the data into one corner. The averages (confidence intervals) of N1 duration in these three PSGs were 20.7 (13.3, 25.4), 28.0 (15.2, 49.3), and 36.5 (22.9, 45.1) % TST. PSG = polysomnogram, TRT = total recording time, TST = total sleep time.

Figure 2 also shows that, for stage N1 and N3 sleep, a value assigned by a single scorer to one PSG (any data point in the panel) may also be assigned by other scorers to PSGs with widely different average values (horizontal distance between the two jagged lines at that ordinate value).
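As a rough arithmetic check of the CI / average ratio described above, one can divide the mean CIs reported in this article (11.1, 18.9, and 14.4 % TST for stages N1, N2, and N3) by the group-mean stage durations from the Results, converted to % TST using the mean TST of 398 minutes. This is only an approximation: a ratio of group means is not the mean of the per-PSG ratios, and mean minutes divided by mean TST only approximates mean % TST.

```latex
\begin{aligned}
\text{N1:}\quad & \tfrac{43}{398} \approx 10.8\%\ \text{TST}, & \tfrac{11.1}{10.8} &\approx 1.03 \;(\approx 103\%)\\
\text{N2:}\quad & \tfrac{244}{398} \approx 61.3\%\ \text{TST}, & \tfrac{18.9}{61.3} &\approx 0.31 \;(\approx 31\%)\\
\text{N3:}\quad & \tfrac{30}{398} \approx 7.5\%\ \text{TST}, & \tfrac{14.4}{7.5} &\approx 1.92 \;(\approx 192\%)
\end{aligned}
```

The N1 and N2 approximations land close to the reported mean ratios (103% and 31%). The N3 approximation falls below the reported 246%, which is consistent with per-PSG ratios being inflated in records whose average stage N3 time is close to zero.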

Agreement Between Individual Technologists and the Average Score

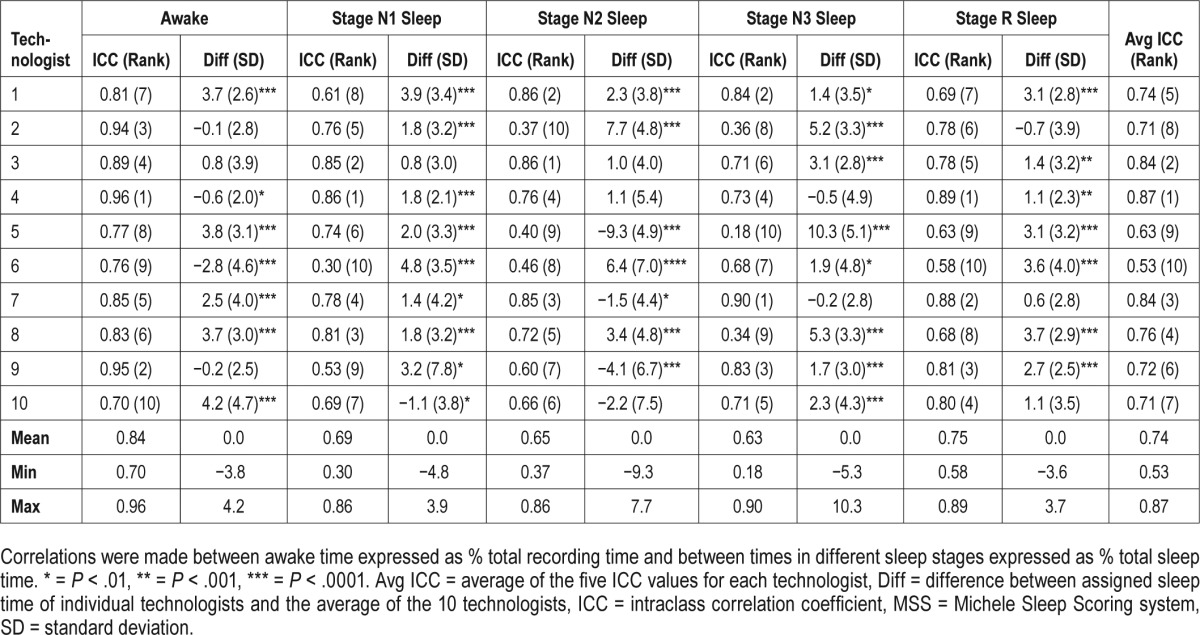

Table 1 shows ICCs for the relationship between sleep times assigned by individual technologists and the average sleep time of the 10 technologists (n = 70 PSGs). It also shows the average (± standard deviation) difference between each technologist's sleep time and the average sleep time in the 70 PSGs. ICCs ranged from 0.18 (stage N3 sleep, technologist 5) to 0.96 (awake, technologist 4). Most technologists showed good agreement with the average score in some stages and relatively poor agreement in others. For example, technologist 2 had an ICC of 0.94 when scoring awake but an ICC of approximately 0.36 for stage N2 and N3 sleep, with intermediate ICCs for stage N1 and stage R sleep. The average of the five ICCs for individual technologists (last column, Table 1) ranged from 0.53 to 0.87. No technologist had an ICC > 0.80 in all stages and only one technologist (#7) had an ICC > 0.75 in all sleep stages. Significant differences between individual technologists and average scores were common for all sleep stages (second column in each stage, Table 1).

Table 1.

Performance of individual technologists versus average of 10 technologists.
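The article does not state which ICC form underlies Table 1. Purely as an illustration, the sketch below assumes the two-way random-effects, absolute-agreement, single-measure form, often written ICC(2,1), treating one technologist's values and the 10-technologist average across the 70 PSGs as two "raters". The function name `icc_2_1` is hypothetical.

```python
def icc_2_1(*raters):
    """Two-way random-effects, absolute-agreement, single-measure ICC(2,1) (assumed form).

    Each positional argument is one rater's values over the same n subjects (here: PSGs),
    listed in the same order."""
    k = len(raters)
    n = len(raters[0])
    rows = list(zip(*raters))  # rows[i] holds every rater's value for subject i
    grand = sum(map(sum, rows)) / (n * k)
    row_means = [sum(r) / k for r in rows]
    col_means = [sum(c) / n for c in raters]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between-subject variation
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between-rater (bias) variation
    ss_total = sum((x - grand) ** 2 for r in rows for x in r)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
```

Under this form, a technologist whose values track the average perfectly but are offset by a constant still receives an ICC below 1, because absolute-agreement ICCs penalize systematic over- or underscoring.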

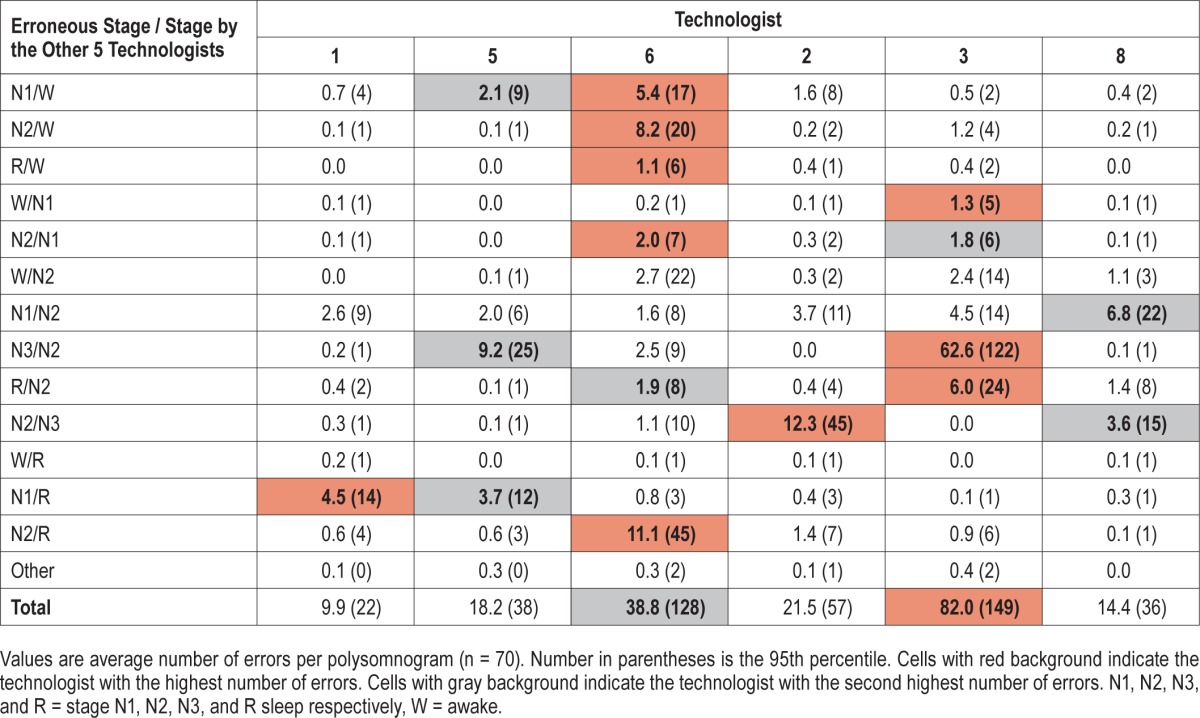

Table 2 shows frequency and types of unequivocal errors made by the six technologists. An unequivocal error was defined as a score by the technologist that was different from the score given unanimously by the other five technologists. The dominant type of error varied among different technologists. Each technologist had the highest (black background, Table 2) or next highest (grey background) rate of errors in at least one category (3.0 ± 1.8 categories/technologist; range, one to six categories).

Table 2.

Unequivocal errors by individual technologists (no. epochs / PSG).
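The definition of an unequivocal error given above can be expressed compactly. The sketch below is an illustration only, assuming each epoch is a list of stage labels in a fixed technologist order; the function name is hypothetical. Dividing each count by the number of PSGs would give the per-PSG rates reported in Table 2.

```python
from collections import Counter

def unequivocal_errors(epochs):
    """epochs: list of per-epoch score lists (one label per technologist, fixed order).

    Returns one Counter per technologist mapping (consensus_stage, own_stage) to the number
    of epochs in which all other technologists agreed on consensus_stage but this
    technologist scored something else."""
    n_tech = len(epochs[0])
    errors = [Counter() for _ in range(n_tech)]
    for scores in epochs:
        for j, own in enumerate(scores):
            others = set(scores[:j] + scores[j + 1:])
            if len(others) == 1:  # the remaining technologists were unanimous
                consensus = next(iter(others))
                if own != consensus:
                    errors[j][(consensus, own)] += 1
    return errors
```

Keying the counts by (consensus, own) pairs keeps the error type, so a technologist who tends to score N1 where the others agree on N2 is distinguishable from one who tends to score N3 in those epochs.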

Relation Between Digitally Measured Delta Wave Duration and Interrater Scoring Differences

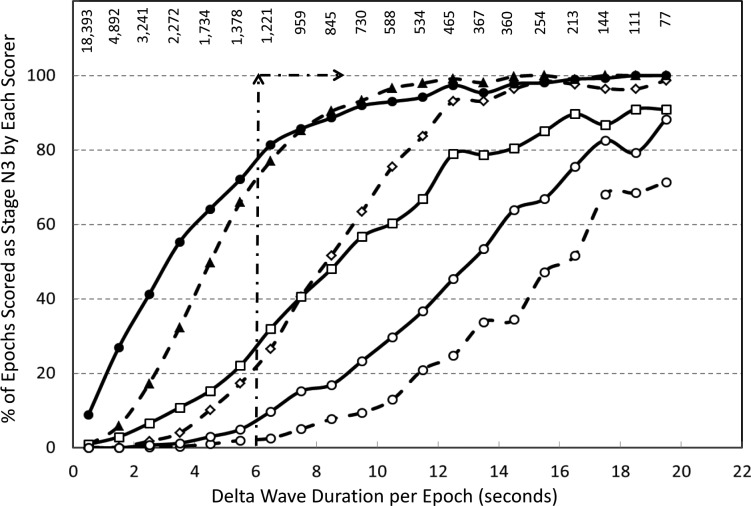

Figure 3 demonstrates the frequency with which each of the six technologists scored stage N3 sleep as a function of digitally measured delta wave duration in the epoch. Ideally, stage N3 sleep should not be scored until delta wave duration exceeds 6 seconds (20% of the epoch),1,2 whereas above this level all epochs should be scored as stage N3 sleep (arrows). Figure 3 shows that although the frequency of scoring stage N3 sleep increased with delta duration for all technologists, two individuals overscored stage N3 sleep when delta wave duration was less than 6 seconds, whereas another two underscored it at all levels. The remaining two tended to slightly overscore stage N3 sleep when delta duration was less than 6 seconds while underscoring it above 6 seconds. As a result, the difference between technologists was widest when delta duration was 6 to 10 seconds and narrowed at lower and higher levels. Two technologists (two rightmost lines) did not score a substantial number of epochs as stage N3 sleep even when delta wave duration exceeded 15 seconds.

Figure 3. Frequency of scoring stage N3 sleep by six technologists in epochs with different total delta wave duration.

Delta wave duration is the sum of durations of all delta waves identified digitally in each 30-second epoch. Arrows represent ideal scoring; no N3 until delta duration exceeds 6 seconds and N3 scored in all epochs with delta duration > 6 seconds. Numbers in the upper section represent the number of epochs examined within each delta duration range.
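The curves in Figure 3 amount to a binned proportion per technologist. A minimal sketch, assuming one technologist's stage labels and the digitally measured delta duration are available for the same NREM epochs; placing durations of 20 seconds or more in the last interval is an assumption, and the function name is hypothetical.

```python
def n3_curve(delta_s, stages, n_bins=20):
    """delta_s: digitally measured delta duration (s) for each NREM epoch.
    stages: one technologist's stage label for the same epochs.

    Returns the % of epochs scored N3 in each 1-s interval [0, 1), [1, 2), ..., or None
    for an empty interval. Durations >= n_bins s fall into the last interval (assumption)."""
    scored = [0] * n_bins
    total = [0] * n_bins
    for d, s in zip(delta_s, stages):
        b = min(int(d), n_bins - 1)
        total[b] += 1
        scored[b] += s == "N3"  # True counts as 1
    return [100.0 * k / n if n else None for k, n in zip(scored, total)]
```

Under the ideal scoring shown by the arrows, such a curve would be 0% for every interval below 6 seconds and 100% above it.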

DISCUSSION

The main finding in this study is that visual classification of NREM sleep into its three stages according to the AASM rules is unreliable—and may be misleading—in clinical practice where such staging is typically done by one technologist.

Assessment of NREM Sleep Depth by the AASM Rules

By definition, sleep depth refers to the ease/difficulty of waking from sleep under the influence of arousal stimuli (arousability). Arousability is typically measured by determining the magnitude of an experimental arousal stimulus required to cause cortical arousal (arousal threshold). Several studies have shown that arousal threshold to acoustic or respiratory stimuli increases as NREM sleep progresses from stage N1 to N3 sleep, reaching the highest level in delta sleep.15–18 These findings form the basis for using percentage of total sleep time in stage N1 and N3 sleep as measures of NREM sleep depth. Clearly, the validity of this approach is entirely dependent on the reliability in classifying NREM sleep into these three stages.

Whereas it is well known that interrater agreement in scoring stage N1 and N3 sleep is relatively low,3–14 this study shows that this inconsistency is severe among multiple technologists. The likelihood of a unanimous score of stage N1 sleep by six experienced sleep technologists is negligible, and in nearly half of cases a score of stage N1 sleep by any technologist is not supported by any other technologist (Figure 1). Majority agreement on stage N1 sleep is reached in fewer than 20% of such epochs. Roughly similar results were found for stage N3 sleep (Figure 1). These findings were observed even though two technologists from each center provided the scoring, and they are nearly identical to those reported by Norman et al. in their evaluation of agreement among single scorers from five sleep centers.5 The effect of this disagreement on NREM PSG summary data (minutes in each stage), as used in clinical practice, is marked. Assuming that the average stage N1 and N3 sleep times from the group of 10 experienced technologists represent the true stage N1 and N3 sleep times in a given PSG, Figure 2 shows that virtually any PSG can be scored as having very high or very low stage N1 or N3 sleep times, regardless of the true values (abscissa values).

Implicit from these findings is the need for reassessment of the value in clinical practice of scoring NREM sleep in three stages. Abandoning this practice would reduce scoring time, because pondering whether an EEG event is really a spindle or K complex and whether delta wave duration is more or less than 6 seconds are among the most time-consuming decisions during scoring. It would also greatly reduce interrater variability because stage N1/N2 and N2/N3 disagreements are the most common types of disagreement and together account for nearly two-thirds of all disagreements.14 These disagreements stem from the fact that the definition of K complexes lacks specificity (at least in the scoring manuals published by the AASM that were used by the technologists1,2), identification of EEG spindles is highly inconsistent among technologists,19,20 and deciding whether delta wave duration is more or less than 6 seconds in a 30-second epoch requires a number of measurements that are neither feasible nor practical to do visually. In this study we evaluated the extent of subjectivity in visual estimates of delta wave duration by determining the duration of eligible delta waves required by individual technologists to score the epoch as stage N3 sleep. Figure 3 shows that some technologists scored stage N3 sleep when delta wave duration was well below 6 seconds, whereas others required much greater durations. If one defines the individual threshold as the duration associated with scoring stage N3 sleep in half the epochs (ordinate value = 50%, Figure 3), the individual thresholds ranged from 3 to 17 seconds among different technologists. If the threshold is defined as the duration required to score stage N3 sleep 90% of the time, the range is 8 to > 20 seconds. To compound matters, the number of epochs in which actual delta wave duration is high enough to fall outside the range vulnerable to subjective assessment (eg, > 17 seconds) is extremely small relative to the number with durations within the highly ambiguous range (numbers at the top of Figure 3). This analysis further illustrates the difficulty of trying to improve interscorer agreement by fine-tuning the scoring guidelines.
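The individual 50% and 90% thresholds described above can be read off a technologist's curve by interpolation. A minimal sketch, assuming the curve is given as (delta duration, % scored N3) points sorted by duration, for example at the midpoints of the 1-second intervals, and that linear interpolation between neighboring points is acceptable; neither assumption comes from the article, and the function name is hypothetical.

```python
def threshold(curve, level):
    """curve: (delta_seconds, percent_scored_N3) points sorted by delta_seconds.

    Returns the first delta duration at which the curve reaches `level` percent,
    linearly interpolating between neighboring points, or None if it never does
    (which would be reported as '> 20 seconds')."""
    prev = None
    for x, y in curve:
        if y >= level:
            if prev is None:
                return x  # already at or above the level at the first point
            x0, y0 = prev  # y0 < level <= y, so y - y0 > 0
            return x0 + (level - y0) * (x - x0) / (y - y0)
        prev = (x, y)
    return None
```

For example, a curve passing through 20% at 1.5 seconds and 60% at 2.5 seconds gives a 50% threshold of 2.25 seconds.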

Abandoning the practice of scoring times in the three NREM stages would greatly simplify scoring and eliminate the major sources of interrater scoring variability, but it would leave the clinician with fewer means to evaluate sleep depth and its potential changes with treatment. It may be argued that the determination of stage N1 through N3 sleep times has little clinical utility in practice and that, therefore, not much will be lost if NREM sleep is treated as a single stage. However, the fact that the predominant EEG pattern in NREM sleep ranges widely among individuals, from patterns with minimal delta/theta activity to ones with very prominent theta and delta waves, is clearly evident to anyone who scores PSGs. To the extent that these differences reflect differences in arousability,18 they very likely have clinical implications. For example, a normal or excessive amount of delta/theta activity in a patient with excessive somnolence may favor a primary disorder of excessive sleepiness. High levels of stage N3 sleep on a PSG may also reflect recent insufficient sleep, which might be relevant for interpreting a Multiple Sleep Latency Test the next day. Conversely, little time with rich delta/theta activity may point to poor quality sleep as the reason for a patient's complaint of nonrestorative sleep or excessive somnolence. Such a pattern may also indicate an increased likelihood of developing sleep disorders in response to conditions that increase arousal stimuli. Perhaps the failure of stage N1 and N3 sleep times to show clear utility in these respects is related to the fact that their estimates have, so far, been quite unreliable. Fortunately, credible alternatives to staging NREM sleep exist. These alternatives to manual scoring of NREM sleep depth fall into two categories: use of digital systems to implement scoring rules; and use of a continuous digital scale to evaluate sleep “depth.”

There are currently two free-standing commercial digital systems for scoring sleep according to the AASM rules: the Somnolyzer (Philips Respironics, Murrysville, Pennsylvania, United States)9,21 and MSS.12,22–24 Both systems have undergone substantial clinical validation.9,12,21–24 Given the large interrater differences in scoring stage N1 and N3 sleep, it is not surprising that any given scorer may find these systems inaccurate. However, the differences between stage N1 and N3 sleep times measured by these systems and the times assigned by multiple technologists to the same PSGs are well within the range of differences observed between technologists,12,21 which suggests that these digital systems are an adequate compromise with the added advantage of consistency.

Another approach proposed recently25 involves the use of modules that provide the locations of digitally detected spindles and K complexes and the digitally measured delta wave duration in each 30-second epoch. The output of these modules can be displayed on the screen during manual scoring to help the technologist decide on the sleep stage in equivocal epochs.

Rechtschaffen and Kales rules, which form the basis for the AASM rules, were proposed in 196826 when sleep signals were recorded with ink pens writing on paper and microprocessors had not yet been invented. Given that scoring had to be done manually, it was not possible to use a continuous scale of sleep depth. Sleep was categorized into a limited number of stages with distinct landmarks to enable visual scoring. Yet, sleep depth does not progress in a stepwise fashion; arousal threshold and delta power increase gradually as sleep progresses from stage N1 to stage N3 during sleep cycles with no apparent step change at the first appearance of a spindle or K complex (beginning of stage N2) or upon the duration of delta waves exceeding 6 seconds (onset of N3).18 Furthermore, arousal threshold is continuously correlated with absolute delta power with no apparent step change when the amplitude of delta waves exceeds an arbitrary 75 μV.18

The technical limitations at the time the Rechtschaffen and Kales sleep stage scoring rules were established are no longer present, and a number of continuous digital markers of sleep depth have been proposed, including the ratio of high frequency to low frequency power (H/L ratio),27 delta power,18 alpha/beta power,28 EEG entropy,29–34 and, more recently, the odds ratio product (ORP), which utilizes the relationship of powers in different frequency ranges to each other.35 Considerable support has recently accumulated for ORP as a continuous index of sleep depth.35,36–38 This evidence, along with ways in which ORP can be used to enhance sleep scoring, has recently been reviewed.39

We conclude that manual application of AASM rules in clinical practice, where only one technologist scores the PSG, is unreliable. Moreover, with current computational techniques, this is unnecessary. Available digital methods to score NREM sleep (or assist in manual scoring) are highly reliable (as well as time and cost effective), and allow investigation of new measures of sleep continuity and depth that potentially will be more useful in clinical practice.

DISCLOSURE STATEMENT

Dr. Younes is supported by YRT Ltd. Drs. Kuna, Pack, and Staley are supported by the National Institutes of Health HL094307. Dr. Younes is the owner of YRT Ltd, a research and development company that develops methods for scoring sleep studies. YRT receives royalties and consultation fees from Cerebra Health which is licensed to market the Michele Sleep Scoring system. Dr. Walsh serves as a consultant for Merck and Purdue Pharma. The other authors report no conflicts of interest.

REFERENCES

1. Berry RB, Brooks R, Gamaldo CE, et al. for the American Academy of Sleep Medicine. The AASM Manual for the Scoring of Sleep and Associated Events: Rules, Terminology and Technical Specifications. Version 2.0. Darien, IL: American Academy of Sleep Medicine; 2012.

2. Iber C, Ancoli-Israel S, Chesson AL, Quan SF for the American Academy of Sleep Medicine. The AASM Manual for the Scoring of Sleep and Associated Events: Rules, Terminology and Technical Specifications. 1st ed. Westchester, IL: American Academy of Sleep Medicine; 2007.

3. Ferri R, Ferri P, Colognola RM, Petrella MA, Musumeci SA, Bergonzi P. Comparison between the results of an automatic and a visual scoring of sleep EEG recordings. Sleep. 1989;12(4):354–362.

4. Whitney CW, Gottlieb DJ, Redline S, et al. Reliability of scoring respiratory disturbance indices and sleep staging. Sleep. 1998;21(7):749–757. doi: 10.1093/sleep/21.7.749.

5. Norman RG, Pal I, Stewart C, Walsleben JA, Rapoport DM. Interobserver agreement among sleep scorers from different centers in a large dataset. Sleep. 2000;23(7):901–908.

6. Collop NA. Scoring variability between polysomnography technologists in different sleep laboratories. Sleep Med. 2002;3(1):43–47. doi: 10.1016/s1389-9457(01)00115-0.

7. Danker-Hopfe H, Kunz D, Gruber G, et al. Interrater reliability between scorers from eight European sleep laboratories in subjects with different sleep disorders. J Sleep Res. 2004;13(1):63–69. doi: 10.1046/j.1365-2869.2003.00375.x.

8. Pittman SD, MacDonald MM, Fogel RB, et al. Assessment of automated scoring of polysomnographic recordings in a population with suspected sleep-disordered breathing. Sleep. 2004;27(7):1394–1403. doi: 10.1093/sleep/27.7.1394.

9. Anderer P, Gruber G, Parapatics S, et al. An E-health solution for automatic sleep classification according to Rechtschaffen and Kales: validation study of the Somnolyzer 24 × 7 utilizing the Siesta database. Neuropsychobiology. 2005;51(3):115–133. doi: 10.1159/000085205.

10. Magalang UJ, Chen NH, Cistulli PA, et al. Agreement in the scoring of respiratory events and sleep among international sleep centers. Sleep. 2013;36(4):591–596. doi: 10.5665/sleep.2552.

11. Kuna ST, Benca R, Kushida CA, et al. Agreement in computer-assisted manual scoring of polysomnograms across sleep centers. Sleep. 2013;36(4):583–589. doi: 10.5665/sleep.2550.

12. Malhotra A, Younes M, Kuna ST, et al. Performance of an automated polysomnography scoring system versus computer-assisted manual scoring. Sleep. 2013;36(4):573–582. doi: 10.5665/sleep.2548.

13. Zhang X, Dong X, Kantelhardt JW, et al. Process and outcome for international reliability in sleep scoring. Sleep Breath. 2015;19(1):191–195. doi: 10.1007/s11325-014-0990-0.

14. Younes M, Raneri J, Hanly P. Staging sleep in polysomnograms: analysis of inter-scorer variability. J Clin Sleep Med. 2016;12(6):885–894. doi: 10.5664/jcsm.5894.

15. Williams HL, Hammack JT, Daly RL, Dement WC, Lubin A. Responses to auditory stimulation, sleep loss, and the EEG stages of sleep. Electroencephalogr Clin Neurophysiol. 1964;16:269–279. doi: 10.1016/0013-4694(64)90109-9.

16. Gugger M, Molloy J, Gould GA, et al. Ventilatory and arousal responses to added inspiratory resistance during sleep. Am Rev Respir Dis. 1989;140(5):1301–1307. doi: 10.1164/ajrccm/140.5.1301.

17. Berry RB, Bonnet MH, Light RW. Effect of ethanol on the arousal response to airway occlusion during sleep in normal subjects. Am Rev Respir Dis. 1992;145(2 Pt 1):445–452. doi: 10.1164/ajrccm/145.2_Pt_1.445.

18. Berry RB, Asyali MA, McNellis MI, Khoo MC. Within-night variation in respiratory effort preceding apnea termination and EEG delta power in sleep apnea. J Appl Physiol (1985). 1998;85(4):1434–1441. doi: 10.1152/jappl.1998.85.4.1434.

19. Warby SC, Wendt SL, Welinder P, et al. Sleep-spindle detection: crowdsourcing and evaluating performance of experts, non-experts and automated methods. Nat Methods. 2014;11(4):385–392. doi: 10.1038/nmeth.2855.

20. Wendt SL, Welinder P, Sorensen HB, et al. Inter-expert and intra-expert reliability in sleep spindle scoring. Clin Neurophysiol. 2015;126(8):1548–1556. doi: 10.1016/j.clinph.2014.10.158.

21. Punjabi NM, Shifa N, Dorffner G, Patil S, Pien G, Aurora RN. Computer-assisted automated scoring of polysomnograms using the Somnolyzer System. Sleep. 2015;38(10):1555–1566. doi: 10.5665/sleep.5046.

22. Younes M, Thompson W, Leslie C, Egan T, Giannouli E. Utility of technologist editing of polysomnography scoring performed by a validated automatic system. Ann Am Thorac Soc. 2015;12(8):1206–1218. doi: 10.1513/AnnalsATS.201411-512OC.

23. Younes M, Younes M, Giannouli E. Accuracy of automatic scoring using frontal electrodes. J Clin Sleep Med. 2016;12(5):735–746. doi: 10.5664/jcsm.5808.

24. Younes M, Soiferman M, Thompson W, Giannouli E. Performance of a new portable wireless monitor. J Clin Sleep Med. 2017;13(2):245–258. doi: 10.5664/jcsm.6456.

25. Younes M, Hanly PJ. Minimizing interrater variability in staging sleep by use of computer-derived features. J Clin Sleep Med. 2016;12(10):1347–1356. doi: 10.5664/jcsm.6186.

26. Rechtschaffen A, Kales A. A Manual of Standardized Terminology, Techniques and Scoring System for Sleep Stages of Human Subjects. Los Angeles, CA: University of California Los Angeles Brain Information Service; 1968.

27. Krystal AD, Edinger JD. Measuring sleep quality. Sleep Med. 2008;9(Suppl 1):S10–S17. doi: 10.1016/S1389-9457(08)70011-X.

28. Asyali MH, Berry RB, Khoo MC, Altinok A. Determining a continuous marker for sleep depth. Comput Biol Med. 2007;37(11):1600–1609. doi: 10.1016/j.compbiomed.2007.03.001.

29. Fell J, Röschke J, Mann K, Schäffner C. Discrimination of sleep stages: a comparison between spectral and nonlinear EEG measures. Electroencephalogr Clin Neurophysiol. 1996;98(5):401–410. doi: 10.1016/0013-4694(96)95636-9.

30. Pezard L, Martinerie J, Varela FJ, et al. Entropy maps characterize drug effects on brain dynamics in Alzheimer's disease. Neurosci Lett. 1998;253(1):5–8. doi: 10.1016/s0304-3940(98)00603-x.

31. Burioka N, Cornélissen G, Halberg F, et al. Approximate entropy of human respiratory movement during eye-closed waking and different sleep stages. Chest. 2003;123(1):80–86. doi: 10.1378/chest.123.1.80.

32. Shen Y, Olbrich E, Achermann P, Meier PF. Dimensional complexity and spectral properties of the human sleep EEG. Electroencephalograms. Clin Neurophysiol. 2003;114(2):199–209. doi: 10.1016/s1388-2457(02)00338-3.

33. Abásolo D, Hornero R, Espino P, Poza J, Sánchez CI, de la Rosa R. Analysis of regularity in the EEG background activity of Alzheimer's disease patients with approximate entropy. Clin Neurophysiol. 2005;116(8):1826–1834. doi: 10.1016/j.clinph.2005.04.001.

34. Bruce EN, Bruce MC, Vennelaganti S. Sample entropy tracks changes in electroencephalogram power spectrum with sleep state and aging. J Clin Neurophysiol. 2009;26(4):257–266. doi: 10.1097/WNP.0b013e3181b2f1e3.

35. Younes M, Ostrowski M, Soiferman M, et al. Odds Ratio Product of sleep EEG as a continuous measure of sleep state. Sleep. 2015;38(4):641–654. doi: 10.5665/sleep.4588.

36. Younes M, Hanly PJ. Immediate postarousal sleep dynamics: an important determinant of sleep stability in obstructive sleep apnea. J Appl Physiol (1985). 2016;120(7):801–808. doi: 10.1152/japplphysiol.00880.2015.

37. Qanash S, Giannouli E, Younes M. Assessment of intervention-related changes in non-rapid-eye-movement sleep depth: importance of sleep depth changes within stage 2. Sleep Med. 2017;40:84–93. doi: 10.1016/j.sleep.2017.09.022.

38. Meza-Vargas S, Giannouli E, Younes M. Enhancements to the multiple sleep latency test. Nat Sci Sleep. 2016;8:145–158. doi: 10.2147/NSS.S103596.

39. Younes M. The case for using digital EEG analysis in clinical sleep medicine. Sleep Science and Practice. 2017;1:2.