Abstract

With the growing capabilities of Geographic Information Systems (GIS) and user-friendly software, statisticians today routinely encounter geographically referenced data containing observations from a large number of spatial locations and time points. Over the last decade, hierarchical spatiotemporal process models have become widely deployed statistical tools for researchers to better understand the complex nature of spatial and temporal variability. However, fitting hierarchical spatiotemporal models often involves expensive matrix computations whose complexity grows cubically in the number of spatial locations and time points. This renders such models infeasible for large datasets. This article offers a focused review of two methods for constructing well-defined, highly scalable spatiotemporal stochastic processes. Both processes can be used as “priors” for spatiotemporal random fields. The first approach constructs a low-rank process operating on a lower-dimensional subspace. The second approach constructs a Nearest-Neighbor Gaussian Process (NNGP) that ensures sparse precision matrices for its finite realizations. Both processes can be exploited as scalable priors embedded within a rich hierarchical modeling framework to deliver full Bayesian inference. These approaches can be described as model-based solutions for big spatiotemporal datasets. The models ensure that the algorithmic complexity is ~n floating point operations (flops) per iteration, where n is the number of spatial locations. We compare these methods and provide some insight into their methodological underpinnings.

Keywords: Bayesian statistics, Gaussian process, low rank Gaussian process, Nearest Neighbor Gaussian process (NNGP), predictive process, sparse Gaussian process, spatiotemporal statistics

1 Introduction

The increased availability of inexpensive, high-speed computing has enabled the collection of massive spatial and spatiotemporal datasets across many fields. This has resulted in widespread deployment of sophisticated Geographic Information Systems (GIS) and related software, and in the ability to investigate challenging inferential questions related to geographically referenced data. See, for example, the books by Cressie (1993), Stein (1999), Møller and Waagepetersen (2003), Schabenberger and Gotway (2004), Gelfand et al. (2010), Cressie and Wikle (2011) and Banerjee et al. (2014) for a variety of statistical methods and applications.

This article will focus only on point-referenced data, which refers to data referenced by points with coordinates (latitude–longitude, Easting–Northing, etc.). Modeling typically proceeds from a spatial or spatiotemporal process that introduces dependence among any finite collection of random variables from an underlying random field. For our purposes, we will consider the stochastic process as an uncountable set of random variables, say {w(ℓ): ℓ ∈ ℒ}, over a domain of interest ℒ, which is endowed with a probability law specifying the joint distribution for any finite sample from that set. For example, in spatial modeling ℒ is often assumed to be a subset of points in the Euclidean space ℜᵈ (usually d = 2 or 3) or, perhaps, a set of geographic coordinates over a sphere or ellipsoid. In spatiotemporal settings ℒ = 𝒮 × 𝒯, where 𝒮 ⊂ ℜᵈ is the spatial region, 𝒯 ⊂ [0, ∞) is the time domain and ℓ = (s, t) is a space-time coordinate with spatial location s ∈ 𝒮 and time point t ∈ 𝒯 (see, e.g., Gneiting and Guttorp, 2010, for details).

Such processes are specified with a covariance function Kθ(ℓ, ℓ′) that gives the covariance between w(ℓ) and w(ℓ′) for any two points ℓ and ℓ′ in ℒ. For any finite collection 𝒰 = {ℓ1, ℓ2, …, ℓn} in ℒ, let w𝒰 = (w(ℓ1), w(ℓ2), …, w(ℓn))⊤ be the realizations of the process over 𝒰. Also, for two finite sets 𝒰 and 𝒱 containing n and m points in ℒ, respectively, we define the n × m matrix Kθ(𝒰, 𝒱) = Cov(w𝒰, w𝒱 | θ), where the covariances are evaluated using Kθ(·, ·). When 𝒰 or 𝒱 contains a single point, Kθ(𝒰, 𝒱) is a row or column vector, respectively. A valid spatiotemporal covariance function ensures that Kθ(𝒰, 𝒰) is positive definite for any finite set 𝒰. In geostatistics, we usually deal with a fixed set of points 𝒰 and, if the context is clear, we write Kθ(𝒰, 𝒰) simply as Kθ. A popular specification assumes that {w(ℓ): ℓ ∈ ℒ} is a zero-centered Gaussian process, written as w(ℓ) ~ GP(0, Kθ(·, ·)), which implies that the n × 1 vector w = (w(ℓ1), w(ℓ2), …, w(ℓn))⊤ is distributed as N(0, Kθ), where Kθ is the n × n covariance matrix with (i, j)-th element Kθ(ℓi, ℓj). Various characterizations and classes of valid spatial (and spatiotemporal) covariance functions can be found in Gneiting and Guttorp (2010), Cressie (1993), Stein (1999), Gelfand et al. (2010), Cressie and Wikle (2011) and Banerjee et al. (2014) and numerous references therein. The most common assumptions are stationarity and isotropy. The former assumes that Kθ(ℓ, ℓ′) = Kθ(ℓ − ℓ′) depends upon the coordinates only through their separation vector, while isotropy goes a step further and assumes that the covariance is a function only of the distance ||ℓ − ℓ′|| between them.
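As a minimal sketch of these definitions, the Python/NumPy code below builds the cross-covariance matrix Kθ(𝒰, 𝒱) for an isotropic exponential covariance function σ² exp(−ϕ||ℓ − ℓ′||) and draws one realization w ~ N(0, Kθ) through a Cholesky factor. The helper name exp_cov, the choice of covariance family and the parameter values are illustrative assumptions, not part of the text above.

```python
import numpy as np

def exp_cov(U, V, sigma2=1.0, phi=1.0):
    """Cross-covariance matrix K_theta(U, V) for the isotropic exponential
    covariance sigma2 * exp(-phi * ||l - l'||); rows of U and V are points."""
    U, V = np.atleast_2d(U), np.atleast_2d(V)
    dist = np.linalg.norm(U[:, None, :] - V[None, :, :], axis=-1)  # n x m distances
    return sigma2 * np.exp(-phi * dist)

rng = np.random.default_rng(0)
locs = rng.uniform(size=(200, 2))                 # n = 200 points in the unit square
K = exp_cov(locs, locs, sigma2=5.0, phi=9.0)      # n x n matrix K_theta(U, U)
k_row = exp_cov(locs[0], locs, 5.0, 9.0)          # a single point gives a 1 x n row vector

# K = L L^T with L lower triangular; w = L u with u ~ N(0, I_n) has w ~ N(0, K).
L = np.linalg.cholesky(K)
w = L @ rng.standard_normal(len(locs))
```

The Cholesky factorization here is exactly the ~n³ operation that becomes the bottleneck discussed below.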

Spatial and spatiotemporal processes are conveniently embedded within Bayesian hierarchical models. The most common geostatistical setting assumes a response or dependent variable y(ℓ) observed at a generic point ℓ along with a p × 1 (p < n) vector of spatially referenced predictors x(ℓ). Model-based geostatistical data analysis customarily envisions a spatial regression model,

$$ y(\ell) = x(\ell)^{\top}\beta + w(\ell) + \varepsilon(\ell), \tag{1} $$

where β is the p × 1 vector of slopes, and the residual from the regression is the sum of a spatial or spatiotemporal process w(ℓ) ~ GP(0, Kθ(·, ·)), capturing spatial and/or temporal association, and an independent process ε(ℓ), modeling measurement error or fine-scale variation attributed to disturbances at distances smaller than the minimum observed separations in space and time. A Bayesian spatial model can now be constructed from (1) as

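$$ p(\theta, \tau, \beta, w \mid Y) \propto p(\theta) \times p(\tau) \times N(\beta \mid \mu_\beta, V_\beta) \times N(w \mid 0, K_\theta) \times N(Y \mid X\beta + w, D_\tau), \tag{2} $$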

where Y = (y(ℓ1), y(ℓ2), …, y(ℓn))⊤ is the n × 1 vector of observed outcomes, X is the n × p matrix of regressors with i-th row x(ℓi)⊤, and the noise covariance matrix Dτ represents measurement error or micro-scale variation and depends upon a set of variance parameters τ. A common specification is Dτ = τ²In, where τ² is called the “nugget.” The hierarchy is completed by assigning prior distributions to β, θ and τ.

Bayesian inference can proceed by sampling from the joint posterior density in (2) using, for example, Markov chain Monte Carlo (MCMC) methods (see, e.g., Robert and Casella, 2004). A major computational bottleneck emerges from the size of Kθ in computing (2). Since θ is unknown, each iteration of the model fitting algorithm involves decomposing or factorizing Kθ, which typically requires ~n³ floating point operations (flops). Memory requirements are of the order ~n². These become prohibitive for large values of n when Kθ has no exploitable structure. Multivariate process settings, where y(ℓ) is a q × 1 vector of outcomes, exacerbate the computational burden further, since the covariance matrix grows to nq × nq. For Gaussian likelihoods, one can integrate out the random effects w from (2). This reduces the parameter space to {τ², θ, β}, but one still needs to work with Kθ + τ²In, which is again n × n. These settings are referred to as “big-n” or “high-dimensional” problems in geostatistics and are widely encountered in environmental sciences today.

As modern data technologies acquire and exploit massive amounts of spatiotemporal data, modeling and inference for large spatiotemporal datasets are receiving increased attention. It is, in fact, impossible to provide a comprehensive review of all existing methods for geostatistical models for massive spatial datasets; Sun et al. (2011) offer an excellent review of a number of methods for high-dimensional geostatistics. The ideas at the core of fitting models for large spatial and spatiotemporal data concern efficiently solving positive definite linear systems such as Ax = b, where A is a covariance matrix. Thus one can use probability models to build computationally efficient covariance matrices. One approach is to approximate or model A with a covariance structure that can significantly reduce the computational burden. An alternative is to model A⁻¹ itself with an exploitable structure so that the solution A⁻¹b is available without computing the inverse. For full Bayesian inference, one also needs to ensure that the determinant of A is easily available.
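For a dense positive definite A with no exploitable structure, the standard baseline is a single Cholesky factorization A = LL⊤, which delivers both the solution of Ax = b (two triangular solves) and the determinant (the squared product of the diagonal of L). The following NumPy sketch illustrates this; the helper names chol_solve and gaussian_loglik and the random test matrix are hypothetical. np.linalg.solve does not exploit triangularity, so scipy.linalg.solve_triangular would be the more efficient choice in practice.

```python
import numpy as np

def chol_solve(A, b):
    """Solve A x = b for symmetric positive definite A via A = L L^T,
    i.e. two triangular solves, without ever forming A^{-1}."""
    L = np.linalg.cholesky(A)
    return np.linalg.solve(L.T, np.linalg.solve(L, b))

def gaussian_loglik(y, mean, A):
    """log N(y | mean, A) from a single Cholesky factorization of A."""
    L = np.linalg.cholesky(A)                    # the ~n^3 step
    r = np.linalg.solve(L, y - mean)             # r^T r = (y - mean)^T A^{-1} (y - mean)
    logdet = 2.0 * np.sum(np.log(np.diag(L)))    # det(A) = prod(diag(L))^2
    return -0.5 * (len(y) * np.log(2.0 * np.pi) + logdet + r @ r)

rng = np.random.default_rng(1)
n = 50
M = rng.standard_normal((n, n))
A = M @ M.T + n * np.eye(n)                      # an arbitrary positive definite test matrix
b = rng.standard_normal(n)
x = chol_solve(A, b)
ll = gaussian_loglik(b, np.zeros(n), A)

# dense reference computation, used only for checking
ll_ref = -0.5 * (n * np.log(2 * np.pi) + np.linalg.slogdet(A)[1] + b @ np.linalg.solve(A, b))
```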

We remark that when inferring about stochastic processes, it is also possible to work in the spectral domain. This rich, and theoretically attractive, option has been advocated by Stein (1999) and Fuentes (2007) and completely avoids expensive matrix computations. The underlying idea is to transform to the space of frequencies, construct a periodogram (an estimate of the spectral density), and exploit the Whittle likelihood (see, e.g., Whittle, 1954; Guyon, 1995) in the spectral domain as an approximation to the data likelihood in the original domain. The Whittle likelihood requires no matrix inversion so, as a result, computation is very rapid. In principle, inversion back to the original space is straightforward. However, there are practical impediments. First, implementing a fast Fourier transform requires discretization, which can be tricky over large irregular domains. Predictive inference at arbitrary locations also will not be straightforward. Other issues include the arbitrariness in constructing a periodogram; for example, empirical experience is used to decide how many low frequencies should be discarded. There is also concern regarding the performance of the Whittle likelihood as an approximation to the exact likelihood. While this approximation is reasonably well centered, it does an unsatisfactory job in the tails (thus leading to poor estimation of model variances). Lastly, non-Gaussian first stages entail unobserved random spatial effects, for which no periodogram can be computed, making the implementation impossible. In summary, use of the spectral domain for handling large n, while theoretically attractive, has limited applicability.
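To make the "no matrix inversion" point concrete, here is a minimal sketch of the Whittle log-likelihood for the simplest regular setting: a one-dimensional, evenly spaced, zero-mean stationary series, using an AR(1) spectral density as a stand-in model. The 1-D setting, the AR(1) model, the grid search and all helper names are illustrative assumptions; spatial applications would use a two-dimensional FFT over a regular grid.

```python
import numpy as np

def whittle_loglik(x, spec):
    """Whittle approximation to the log-likelihood of a zero-mean stationary
    series x on a regular 1-D grid; spec(omega) is the model spectral density."""
    n = len(x)
    k = np.arange(1, (n - 1) // 2 + 1)                 # Fourier frequencies, omitting 0
    omega = 2.0 * np.pi * k / n
    # periodogram I(omega_k) = |sum_t x_t exp(-i omega_k t)|^2 / (2 pi n), via the FFT
    pgram = np.abs(np.fft.fft(x)[k]) ** 2 / (2.0 * np.pi * n)
    f = spec(omega)
    return -np.sum(np.log(f) + pgram / f)              # no matrix is formed or inverted

def ar1_spec(phi, s2=1.0):
    """Spectral density of x_t = phi * x_{t-1} + e_t with e_t ~ N(0, s2)."""
    return lambda om: s2 / (2.0 * np.pi * np.abs(1.0 - phi * np.exp(-1j * om)) ** 2)

# simulate a stationary AR(1) series with phi = 0.7
rng = np.random.default_rng(2)
n, phi_true = 4096, 0.7
x = np.empty(n)
x[0] = rng.standard_normal() / np.sqrt(1.0 - phi_true**2)
for t in range(1, n):
    x[t] = phi_true * x[t - 1] + rng.standard_normal()

# crude grid-search maximizer of the Whittle likelihood
grid = np.linspace(-0.95, 0.95, 39)
phi_hat = grid[np.argmax([whittle_loglik(x, ar1_spec(p)) for p in grid])]
```

Each likelihood evaluation costs one FFT, O(n log n), rather than the O(n³) factorization of an n × n covariance matrix.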

Broadly speaking, model-based approaches for large spatial datasets proceed by exploiting either “low-rank” models or “sparsity”. The former attempts to construct Gaussian processes on a lower-dimensional subspace (see, e.g., Wikle and Cressie, 1999; Higdon, 2002a; Kammann and Wand, 2003; Quiñonero-Candela and Rasmussen, 2005; Stein, 2007; Gramacy and Lee, 2008; Stein, 2008; Cressie and Johannesson, 2008; Banerjee et al., 2008; Crainiceanu et al., 2008; Sansó et al., 2008; Finley et al., 2009a; Lemos and Sansó, 2009; Cressie et al., 2010) in spatial, spatiotemporal and more general Gaussian process regression settings. Sparse approaches include covariance tapering (see, e.g., Furrer et al., 2006; Kaufman et al., 2008; Du et al., 2009; Shaby and Ruppert, 2012) using compactly supported covariance functions. This is effective for parameter estimation and interpolation of the response (“kriging”), but it has not been fully evaluated for fully Bayesian inference on residual or latent processes. Introducing sparsity is also prevalent in approximating Gaussian process likelihoods using Markov random fields (e.g., Rue and Held, 2005), products of lower-dimensional conditional distributions (Vecchia, 1988, 1992; Stein et al., 2004), or composite likelihoods (e.g., Bevilacqua and Gaetan, 2014; Eidsvik et al., 2014).

This article aims to provide a focused review of some massively scalable Bayesian hierarchical models for spatiotemporal data. The aim is not to provide a comprehensive review of all existing methods. Instead, we focus upon two fully model-based approaches that can be easily embedded within hierarchical models and deliver full Bayesian inference. These are low-rank processes and sparsity-inducing processes. Both these processes can be used as “priors” for spatiotemporal random fields. Here is a brief outline of the paper. Section 2 discusses a Bayesian hierarchical framework for low-rank models and their implementation. Section 3 discusses some recent developments in sparsity-inducing Gaussian processes, especially nearest-neighbor Gaussian processes, and their implementation. Finally, Section 4 provides a brief account of outstanding issues for future research.

2 Hierarchical low-rank models

A popular way of dealing with large spatial datasets is to devise models that bring about dimension reduction (Wikle and Cressie, 1999). A low rank or reduced rank specification is typically based upon a representation or approximation in terms of the realizations of some latent process over a smaller set of points, often referred to as knots. To be precise,

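$$ \tilde{w}(\ell) = \sum_{j=1}^{r} b_\theta(\ell, \ell_j^*)\, z(\ell_j^*) = b_\theta(\ell)^{\top} z, \tag{3} $$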

where z(ℓ) is a well-defined process and bθ(ℓ, ℓ′) is a family of basis functions possibly depending upon some parameters θ. The collection of r locations {ℓ₁*, ℓ₂*, …, ℓᵣ*} are the knots, and bθ(ℓ) and z are r × 1 vectors with components bθ(ℓ, ℓⱼ*) and z(ℓⱼ*), respectively. For any collection of n points, the n × 1 vector w̃ = (w̃(ℓ1), w̃(ℓ2), …, w̃(ℓn))⊤ is represented as w̃ = Bθz, where Bθ is n × r with (i, j)-th element bθ(ℓi, ℓⱼ*). Irrespective of how big n is, we now have to work with the r (instead of n) z(ℓⱼ*)'s and the n × r matrix Bθ. Since we anticipate r ≪ n, the consequential dimension reduction is evident and, since we will write the model in terms of the z's (with the w̃'s being deterministic functions of the z's, given bθ(·, ·)), the associated matrices we work with will be r × r. Evidently, w̃(ℓ) as defined in (3) spans only an r-dimensional space. When n > r, the joint distribution of w̃ is singular. However, we do create a valid stochastic process with covariance function

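$$ \tilde{K}_\theta(\ell, \ell') = \operatorname{Cov}\{\tilde{w}(\ell), \tilde{w}(\ell')\} = b_\theta(\ell)^{\top} V_z\, b_\theta(\ell'), \tag{4} $$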

where Vz is the variance–covariance matrix of z (which also depends upon θ). From (4), we see that, even if bθ(·, ·) is stationary, the induced covariance function is not. If the z's are Gaussian, then w̃(ℓ) is a Gaussian process. Every choice of basis functions yields a process, and there are too many choices to enumerate here. Wikle (2010) offers an excellent overview of low-rank models.

Different families of spatial models emerge from different specifications for the process z(ℓ) and the basis functions bθ(ℓ, ℓ′). In fact, (3) can be used to construct classes of rich and flexible processes. Furthermore, such constructions need not be restricted to low-rank models: if dimension reduction is not a concern, full-rank models can be constructed by taking r = n basis functions in (3). A very popular specification for z(ℓ) is a white noise process so that z ~ N(0, σ²Iᵣ), whereupon (4) simplifies to σ²bθ(ℓ)⊤bθ(ℓ′). A natural choice for the basis functions is a kernel function, say bθ(ℓ, ℓ′) = Kθ(ℓ − ℓ′), which puts more weight on ℓ′ near ℓ. Variants of this form have been called “moving average” models and explored by Barry and Ver Hoef (1996), while the term “kernel convolution” has been used in a series of papers by Higdon and collaborators (Higdon, 1998; Higdon et al., 1999; Higdon, 2002b) not only to achieve dimension reduction, but also to model nonstationary and multivariate spatial processes. The kernel (which induces a parametric covariance function) can depend upon parameters and might even be spatially varying (Higdon, 2002b; Paciorek and Schervish, 2006). Sansó et al. (2008) use discrete kernel convolutions of independent processes to construct two different classes of computationally efficient spatiotemporal processes.
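The white-noise kernel specification can be sketched in a few lines. The NumPy code below assumes, purely for illustration, a Gaussian kernel bθ(ℓ, ℓ′) = exp(−||ℓ − ℓ′||²/(2h²)) evaluated at a 5 × 5 grid of knots; the helper name, bandwidth and grid are hypothetical. It shows that the induced covariance σ²BθBθ⊤ has rank at most r (so the joint distribution of w̃ is singular when n > r) and that its diagonal varies with location, i.e. the process is nonstationary even though the kernel is stationary.

```python
import numpy as np

def gaussian_kernel_basis(locs, knots, bandwidth):
    """n x r matrix with (i, j) entry exp(-||l_i - l*_j||^2 / (2 * bandwidth^2)),
    i.e. a Gaussian kernel b(l, l') = K(l - l') evaluated at the knots."""
    sq_dist = np.sum((locs[:, None, :] - knots[None, :, :]) ** 2, axis=-1)
    return np.exp(-0.5 * sq_dist / bandwidth**2)

rng = np.random.default_rng(3)
locs = rng.uniform(size=(300, 2))                        # n = 300 locations
g = np.linspace(0.1, 0.9, 5)
knots = np.array([(a, b) for a in g for b in g])         # r = 25 knots on a regular grid
B = gaussian_kernel_basis(locs, knots, bandwidth=0.15)   # n x r basis matrix

sigma2 = 2.0
z = np.sqrt(sigma2) * rng.standard_normal(len(knots))   # white noise z ~ N(0, sigma2 I_r)
w_tilde = B @ z                                          # realization of w~ at the n locations
K_low = sigma2 * B @ B.T                                 # covariance (4): sigma2 b(l)^T b(l')
marg_var = np.diag(K_low)                                # varies with location: nonstationary
```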

Some choices of basis functions can be more computationally efficient than others, depending upon the specific application. For example, Cressie and Johannesson (2008) (also see Shi and Cressie, 2007) discuss “Fixed Rank Kriging” (FRK), constructing Bθ from very flexible families of non-stationary covariance functions to carry out high-dimensional kriging. Cressie et al. (2010) extend FRK to spatiotemporal settings, calling the procedure “Fixed Rank Filtering” (FRF); Katzfuss and Cressie (2012) provide efficient constructions of Bθ for massive spatiotemporal datasets; and Katzfuss (2013) uses spatial basis functions to capture medium- to long-range dependence and tapers the residual w(ℓ) − w̃(ℓ) to capture fine-scale dependence. Multiresolution basis functions (see, e.g., Nychka et al., 2002, 2015) have been shown to be effective in building computationally efficient nonstationary models. These papers amply demonstrate the versatility of low-rank approaches using different basis functions.

A different approach is to specify z(ℓ) as a spatial process having a selected covariance function. This process is called the parent process, and one can derive a low-rank process w̃(ℓ) from it. For example, one could use the Karhunen–Loève (infinite) basis expansion for a Gaussian process (see, e.g., Rasmussen and Williams, 2005; Banerjee et al., 2014) and truncate it to a finite number of terms to obtain a low-rank process. Another example is to project the realizations of the parent process onto a lower-dimensional subspace, which yields the predictive process and its variants; see Section 2.2 for details.

The idea underlying low-rank dimension reduction is not dissimilar to Bayesian linear regression. For example, consider a simplified version of the hierarchical model in (2), where β = 0 and the process parameters {θ, τ} are fixed. A low rank version of (2) is obtained by replacing w with Bθz, so the joint distribution is

$$ N(z \mid 0, V_z) \times N(y \mid B_\theta z, D_\tau), \tag{5} $$

where y is n × 1, z is r × 1, Dτ and Vz are positive definite matrices of sizes n × n and r × r, respectively, and Bθ is n × r. The low-rank specification is accommodated using Bθz and the prior on z, while Dτ (usually diagonal) holds the residual variance components. By computing the marginal covariance matrix var{y} in two ways (Lindley and Smith, 1972), one arrives at the well-known Sherman–Woodbury–Morrison formula

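$$ \left(D_\tau + B_\theta V_z B_\theta^{\top}\right)^{-1} = D_\tau^{-1} - D_\tau^{-1} B_\theta \left(V_z^{-1} + B_\theta^{\top} D_\tau^{-1} B_\theta\right)^{-1} B_\theta^{\top} D_\tau^{-1}. \tag{6} $$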

The above formula reveals dimension reduction in terms of the marginal covariance matrix for y. If Dτ is easily invertible (e.g., diagonal), then the inverse of an n × n covariance matrix of the form Dτ + BθVzBθ⊤ can be computed efficiently using the right-hand side, which only involves inverses of the r × r matrices Vz and Vz⁻¹ + Bθ⊤Dτ⁻¹Bθ. A companion formula for (6) is that for the determinant,

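$$ \det\left(D_\tau + B_\theta V_z B_\theta^{\top}\right) = \det\left(V_z^{-1} + B_\theta^{\top} D_\tau^{-1} B_\theta\right) \det(V_z) \det(D_\tau), \tag{7} $$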

which shows that the determinant of the n × n matrix Dτ + BθVzBθ⊤ can be computed as the product of the determinants of two r × r matrices and that of Dτ.
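The identities (6) and (7) are easy to check numerically. The following NumPy sketch, with arbitrary random matrices standing in for Bθ, Vz and a diagonal Dτ (all sizes and values are illustrative assumptions), forms the n × n matrix only to verify the result; the right-hand sides themselves invert nothing larger than r × r.

```python
import numpy as np

rng = np.random.default_rng(4)
n, r = 500, 20
B = rng.standard_normal((n, r))                  # n x r basis matrix
Vz = np.cov(rng.standard_normal((r, 3 * r)))     # some r x r positive definite matrix
d = rng.uniform(0.5, 2.0, size=n)                # D_tau = diag(d)

Sigma = np.diag(d) + B @ Vz @ B.T                # n x n, formed here only for checking

# Right-hand side of (6): only r x r systems are solved because D_tau is diagonal
Dinv_B = B / d[:, None]                          # D_tau^{-1} B
C = np.linalg.inv(Vz) + B.T @ Dinv_B             # V_z^{-1} + B^T D_tau^{-1} B (r x r)
Sigma_inv = np.diag(1.0 / d) - Dinv_B @ np.linalg.solve(C, Dinv_B.T)

# (7) on the log scale: log det Sigma = log det C + log det V_z + sum(log d)
logdet_7 = np.linalg.slogdet(C)[1] + np.linalg.slogdet(Vz)[1] + np.sum(np.log(d))
```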

In practical Bayesian computations, however, it is less efficient to directly use the formulas in (6) and (7). Since both the inverse and the determinant are needed, it is more useful to compute the Cholesky decomposition of the covariance matrix. In fact, one can avoid (6) completely and resort to a common trick in hierarchical models (see, e.g., Gelman et al., 2013) and smoothed analysis of variance (Hodges, 2013) that expresses (5) as the linear model

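$$ \underbrace{\begin{bmatrix} D_\tau^{-1/2}\, y \\ 0 \end{bmatrix}}_{y_*} = \underbrace{\begin{bmatrix} D_\tau^{-1/2} B_\theta \\ V_z^{-1/2} \end{bmatrix}}_{B_*} z + \underbrace{\begin{bmatrix} e_1 \\ e_2 \end{bmatrix}}_{e_*}, \qquad e_* \sim N(0, I_{n+r}), \tag{8} $$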

where Vz^{1/2} and Dτ^{1/2} are matrix square roots of Vz and Dτ, respectively. For example, in practice Dτ is diagonal, so Dτ^{1/2} is simply the diagonal matrix of square roots of the diagonal elements of Dτ, while Vz^{1/2} is the triangular (upper or lower) Cholesky factor of the r × r matrix Vz. Conditional on θ and τ, inference for z now corresponds to the linear model y* = B*z + e*: the posterior mean of z is the ordinary least-squares estimate ẑ = (B*⊤B*)⁻¹B*⊤y*, and its posterior covariance is (B*⊤B*)⁻¹. Such computations are easily conducted in statistical programming environments such as R by applying the chol function to obtain the Cholesky factor Vz^{1/2}, the backsolve function to efficiently obtain Vz^{−1/2} in constructing (8), and the lm function to compute the least-squares estimate of z using the QR decomposition of the design matrix B*. We discuss implementation of low-rank hierarchical models in a more general context in Section 2.3.
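The augmented-regression trick in (8) can be sketched in NumPy in place of R's chol, backsolve and lm. In this sketch, with random stand-ins for Bθ, Vz and a diagonal Dτ (all assumptions), np.linalg.cholesky plays the role of chol, a triangular solve gives Vz^{−1/2}, and an explicit reduced QR factorization of B* gives the least-squares estimate. The last lines compare with the direct posterior formulas, which is only feasible because the example is small.

```python
import numpy as np

rng = np.random.default_rng(5)
n, r = 400, 15
B = rng.standard_normal((n, r))                          # basis matrix B_theta (theta fixed)
Vz = np.cov(rng.standard_normal((r, 4 * r)))             # prior covariance of z
d = rng.uniform(0.5, 2.0, size=n)                        # diagonal of D_tau
y = B @ rng.multivariate_normal(np.zeros(r), Vz) + np.sqrt(d) * rng.standard_normal(n)

Lz = np.linalg.cholesky(Vz)                              # Vz^{1/2}: lower Cholesky factor
Vz_inv_half = np.linalg.solve(Lz, np.eye(r))             # Vz^{-1/2} = Lz^{-1}
y_star = np.concatenate([y / np.sqrt(d), np.zeros(r)])   # stacked response y*
B_star = np.vstack([B / np.sqrt(d)[:, None], Vz_inv_half])   # stacked design B*

# least squares for y* = B* z + e* through the QR decomposition of B*
Q, R = np.linalg.qr(B_star)                              # reduced QR: B* = Q R
z_hat = np.linalg.solve(R, Q.T @ y_star)                 # R is upper triangular
post_cov = np.linalg.inv(R.T @ R)                        # (B*^T B*)^{-1}

# direct formulas for comparison: posterior precision and mean of z
post_prec = np.linalg.inv(Vz) + B.T @ (B / d[:, None])
post_mean = np.linalg.solve(post_prec, B.T @ (y / d))
```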

2.1 Biases in low-rank models

Irrespective of the precise specifications, low-rank models tend to underestimate uncertainty (since they are driven by a finite number of random variables) and, consequently, to overestimate the residual variance (i.e., the nugget). Put differently, this arises from systematic over-smoothing or model under-specification by the low-rank model when compared to the parent model. For example, if w(ℓ) = w̃(ℓ) + η(ℓ), where w(ℓ) is the parent process and w̃(ℓ) is a low-rank approximation, then ignoring the residual η(ℓ) = w(ℓ) − w̃(ℓ) can result in loss of uncertainty and oversmoothing. In settings where the spatial signal is weak compared to the noise, such biases will be less pronounced. Also, in specific case studies, a careful choice of basis functions (e.g., multiresolution basis functions) may capture much of the spatial behavior and thereby mitigate the bias. In general, however, it is preferable to develop models that compensate for the overestimation of the nugget.

This phenomenon is, in fact, not dissimilar to what is seen in linear regression models and is especially transparent when the parent likelihood and the low-rank likelihood are written as mixed linear models. To elucidate, suppose, without much loss of generality, that 𝒰 is a set of n points, the first r of which act as the knots. Let us write the Gaussian likelihood with the parent process as N(y | Bu, τ²I), where B is the n × n lower-triangular Cholesky factor of Kθ (B = Bθ depends on θ, but we suppress this here) and u = (u1, u2, …, un)⊤ is an n × 1 vector of independent N(0, 1) variables. Writing B = [B1 : B2], where B1 has r < n columns, suppose we derive a low-rank model by truncating to only the first r basis functions. The corresponding likelihood is N(y | B1ũ1, τ²I), where ũ1 is an r × 1 vector whose components are independently and identically distributed N(0, 1) variables. Customary linear model calculations reveal that the magnitude of the residual vector from the parent model is given by y⊤(I − PB)y, while that from the low-rank model is given by y⊤(I − PB1)y, where PA denotes the orthogonal projector onto the column space of any matrix A. Using the standard result from linear model theory that PB = PB1 + P[(I−PB1)B2], we find that the excess residual variability in the low-rank likelihood is summarized by y⊤P[(I−PB1)B2]y, which can be substantial when r is much smaller than n.
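The projector decomposition behind this argument can be checked directly. In the sketch below (NumPy; the exponential covariance with ϕ = 9, n = 60, r = 10 and the helper name proj are illustrative assumptions), B is the Cholesky factor of Kθ, so PB = I and the parent residual is essentially zero, while the truncated model leaves an excess equal to y⊤P[(I−PB1)B2]y.

```python
import numpy as np

def proj(A):
    """Orthogonal projector P_A onto the column space of a full-column-rank A."""
    Q, _ = np.linalg.qr(A)         # reduced QR: Q has orthonormal columns spanning col(A)
    return Q @ Q.T

rng = np.random.default_rng(6)
n, r = 60, 10
locs = rng.uniform(size=(n, 2))
dist = np.linalg.norm(locs[:, None, :] - locs[None, :, :], axis=-1)
B = np.linalg.cholesky(np.exp(-9.0 * dist))    # n x n lower-triangular factor of K_theta
B1, B2 = B[:, :r], B[:, r:]                    # keep only the first r basis functions
y = rng.standard_normal(n)

I = np.eye(n)
P_B, P_B1 = proj(B), proj(B1)                  # B is square and nonsingular, so P_B = I
P_extra = proj((I - P_B1) @ B2)                # P[(I - P_B1) B2]
rss_parent = y @ (I - P_B) @ y                 # y^T (I - P_B) y, essentially zero here
rss_low = y @ (I - P_B1) @ y                   # y^T (I - P_B1) y
excess = y @ P_extra @ y                       # excess residual variability
```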

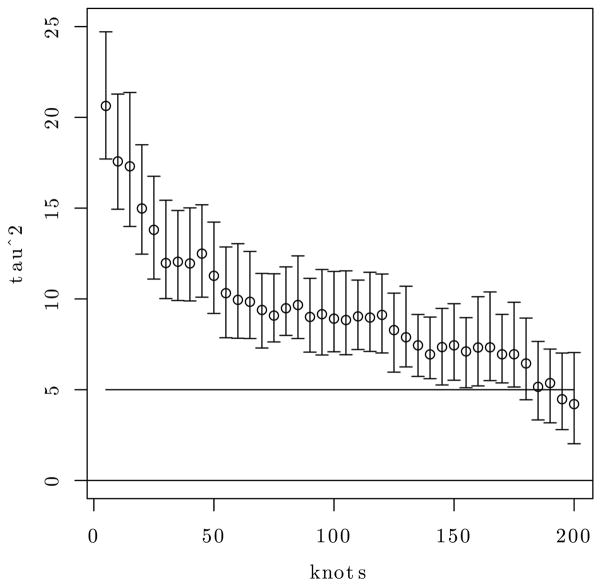

In practical data analysis, the above phenomenon usually manifests as an overestimation of the nugget variance, which absorbs the residual variation from the low-rank approximation. Consider the following simple experiment. We simulated a spatial dataset using the spatial regression model in (1) with n = 200 fixed spatial locations, say {ℓ1, ℓ2, …, ℓn}, within the unit square, setting β = 0, τ² = 5 and w(ℓ) ~ GP(0, Kθ), where Kθ(ℓi, ℓj) = σ² exp(−ϕ||ℓi − ℓj||) with σ² = 5 and ϕ = 9. We then fit the low-rank model (5) with Dτ = τ²Iₙ, Vz = Iᵣ, and Bθ as the n × r matrix with i-th row Kθ(ℓi, 𝒰*)(L*⁻¹)⊤, where 𝒰* = {ℓ₁*, ℓ₂*, …, ℓᵣ*} is a set of r knots, Kθ(ℓi, 𝒰*) is the 1 × r vector with j-th element Kθ(ℓi, ℓⱼ*), and L*⁻¹ is the inverse of the lower-triangular Cholesky factor L* of the r × r matrix with elements Kθ(ℓᵢ*, ℓⱼ*). This emerges from using low-rank radial basis functions in (3) (see, e.g., Ruppert and Carroll, 2003). We fit 40 such models, increasing r from 5 to 200 in steps of 5. Figure 1 presents the 95% posterior credible intervals for τ². Even with r = 175 knots for a dataset with just 200 spatial locations, the estimate of the nugget was significantly different from the true value of the parameter. This indicates that low-rank processes may be unable to accurately estimate the nugget of the true process. They will also likely produce oversmoothed interpolated maps of the underlying spatial process and impair predictive performance. As one specific example, Table 4 in Banerjee et al. (2008) reports less than optimal posterior predictive coverage from a predictive process model (see Section 2.2) with over 500 knots for a dataset comprising 15,000 locations.

Figure 1.

95% credible intervals for the nugget for 40 different low-rank radial-basis models, with the number of knots varying between 5 and 200 in steps of 5. The horizontal line at τ² = 5 denotes the true value of τ² with which the data were simulated.
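The radial-basis matrix used in this experiment can be built with one Cholesky factorization and one triangular solve. The NumPy sketch below uses the experiment's σ² = 5 and ϕ = 9 but otherwise illustrative choices (random locations, r = 25 knots drawn from them, the helper exp_cov). It confirms that, with Vz = Iᵣ, BθBθ⊤ reproduces the predictive process covariance Kθ(𝒰, 𝒰*)Kθ(𝒰*, 𝒰*)⁻¹Kθ(𝒰*, 𝒰) of Section 2.2.

```python
import numpy as np

def exp_cov(U, V, sigma2, phi):
    dist = np.linalg.norm(U[:, None, :] - V[None, :, :], axis=-1)
    return sigma2 * np.exp(-phi * dist)

rng = np.random.default_rng(7)
sigma2, phi = 5.0, 9.0                                   # values used in the experiment
locs = rng.uniform(size=(200, 2))                        # n = 200 locations in the unit square
knots = locs[rng.choice(len(locs), size=25, replace=False)]   # r = 25 knots (illustrative)

K_star = exp_cov(knots, knots, sigma2, phi)              # r x r matrix K_theta(U*, U*)
L_star = np.linalg.cholesky(K_star)                      # lower-triangular Cholesky factor
# i-th row of B is K_theta(l_i, U*) (L_star^{-1})^T; all rows at once via one solve
B = np.linalg.solve(L_star, exp_cov(knots, locs, sigma2, phi)).T    # n x r

# With Vz = I_r, B B^T equals K(U, U*) K(U*, U*)^{-1} K(U*, U)
K_pp = exp_cov(locs, knots, sigma2, phi) @ np.linalg.solve(K_star, exp_cov(knots, locs, sigma2, phi))
```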

Although this excess residual variability can be quantified as above (for any given value of the covariance parameters θ), it is less clear how the low-rank likelihood could be modified to compensate for this oversmoothing without adding significantly to the computational burden. Matters are complicated by the fact that expressions for the excess variability involve the unknown process parameters θ, which must be estimated. In fact, not all low-rank models deliver a straightforward quantification of this bias. For instance, low-rank models based upon kernel convolutions approximate w(ℓ) with wKC(ℓ), a finite sum of kernel functions Kθ(·) evaluated at the knots, whose random weights are assumed to arise from a Brownian motion U(ω) on ℜ². The difference w(ℓ) − wKC(ℓ) does not, in general, have a closed form and may be difficult to approximate efficiently.

2.2 Predictive process models and variants

One particular class of low-rank processes has been especially useful because its residual process is easily tractable. Let w(ℓ) ~ GP(0, Kθ(·, ·)) and let w* be the r × 1 vector of w(ℓⱼ*)'s over a set 𝒰* = {ℓ₁*, ℓ₂*, …, ℓᵣ*} of r knots. The usual spatial interpolant (that leads to “kriging”) at an arbitrary site ℓ is

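$$ \tilde{w}(\ell) = E[w(\ell) \mid w^*] = K_\theta(\ell, \mathcal{U}^*)\, K_\theta(\mathcal{U}^*, \mathcal{U}^*)^{-1}\, w^*. \tag{9} $$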

This single-site interpolator is, in fact, a well-defined process w̃(ℓ) ~ GP(0, K̃θ(·, ·)) with covariance function K̃θ(ℓ, ℓ′) = Kθ(ℓ, 𝒰*)Kθ(𝒰*, 𝒰*)⁻¹Kθ(𝒰*, ℓ′). We refer to w̃(ℓ) as the predictive process derived from the parent process w(ℓ). The realizations of w̃(ℓ) are precisely the kriged predictions conditional upon a realization of w(ℓ) over 𝒰*. The process is completely specified given the covariance function of the parent process and the set of knots 𝒰*. The corresponding basis functions in (3) are given by bθ(ℓ)⊤ = Kθ(ℓ, 𝒰*)Kθ(𝒰*, 𝒰*)⁻¹, with z = w* and Vz = Kθ(𝒰*, 𝒰*). These methods have been referred to as subset of regressors in Gaussian process regression for large datasets in machine learning (Quiñonero-Candela and Rasmussen, 2005; Rasmussen and Williams, 2005). Banerjee et al. (2008) coined the term predictive process (as the process could be derived from kriging equations) and developed classes of scalable Bayesian hierarchical spatial process models by replacing the parent process with its predictive process counterpart. An alternate derivation is available by truncating the Karhunen–Loève (infinite) basis expansion for a Gaussian process to a finite number of terms and solving (approximately) the integral eigen-system equation for Kθ(ℓ, ℓ′) by an approximate linear system over the set of knots (see, e.g., Rasmussen and Williams, 2005; Sang and Huang, 2012; Banerjee et al., 2014).
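A minimal NumPy sketch of the predictive process, assuming an exponential parent covariance with σ² = 1 and ϕ = 3 and 30 random knots (all illustrative choices; exp_cov, predictive_process and pp_cov are hypothetical helper names). It evaluates the interpolant (9) and the covariance K̃θ, and shows two properties stated above: w̃ reproduces w* exactly at the knots, and its variance never exceeds that of the parent process.

```python
import numpy as np

def exp_cov(U, V, sigma2=1.0, phi=3.0):
    dist = np.linalg.norm(U[:, None, :] - V[None, :, :], axis=-1)
    return sigma2 * np.exp(-phi * dist)

rng = np.random.default_rng(8)
knots = rng.uniform(size=(30, 2))                        # U*, r = 30 knots
K_star = exp_cov(knots, knots)                           # K_theta(U*, U*)
w_star = np.linalg.cholesky(K_star) @ rng.standard_normal(len(knots))   # parent w at the knots

def predictive_process(sites):
    """Equation (9): w~(l) = K(l, U*) K(U*, U*)^{-1} w* at each row of sites."""
    return exp_cov(sites, knots) @ np.linalg.solve(K_star, w_star)

def pp_cov(U, V):
    """Predictive process covariance K(U, U*) K(U*, U*)^{-1} K(U*, V)."""
    return exp_cov(U, knots) @ np.linalg.solve(K_star, exp_cov(knots, V))

new_sites = rng.uniform(size=(5, 2))
w_tilde_new = predictive_process(new_sites)
pp_var_new = np.diag(pp_cov(new_sites, new_sites))       # never exceeds sigma2 = 1
```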

Exploiting elementary properties of conditional expectations, we obtain

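$$ \operatorname{var}\{w(\ell)\} = \operatorname{var}\{E[w(\ell) \mid w^*]\} + E\{\operatorname{var}[w(\ell) \mid w^*]\} = \operatorname{var}\{\tilde{w}(\ell)\} + E\{\operatorname{var}[w(\ell) \mid w^*]\}, \tag{10} $$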

which implies that var{w(ℓ)} ≥ var{ w̃(ℓ)} and the variance of η(ℓ) = w(ℓ) − w̃(ℓ) is simply the difference of the variances. For Gaussian processes, we get the following closed form for Cov{η(ℓ), η(ℓ′)},

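$$ K_{\eta,\theta}(\ell, \ell') = \operatorname{Cov}\{\eta(\ell), \eta(\ell')\} = K_\theta(\ell, \ell') - K_\theta(\ell, \mathcal{U}^*)\, K_\theta(\mathcal{U}^*, \mathcal{U}^*)^{-1} K_\theta(\mathcal{U}^*, \ell'). \tag{11} $$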

Therefore, var{η(ℓ)} = Kη,θ(ℓ, ℓ), which we denote as δ2(ℓ).

Perhaps the simplest way to remedy the bias in the predictive process is to approximate the residual process η(ℓ) with a heteroskedastic process ε̃(ℓ) of independent N(0, δ²(ℓ)) variables. We construct a modified, or bias-adjusted, predictive process as

$$ \tilde{w}_\varepsilon(\ell) = \tilde{w}(\ell) + \tilde{\varepsilon}(\ell), \tag{12} $$

where ε̃(ℓ) is independent of w̃(ℓ). It is easy to see that var{w̃ε(ℓ)} = var{w(ℓ)}, so the two processes have the same variance. The remedy is also computationally efficient: adding an independent, spatially varying nugget does not incur substantial computational expense. Finley et al. (2009b) offer computational details for the modified predictive process, while Banerjee et al. (2010) show the effectiveness of the bias adjustment in mitigating the effect exhibited in Figure 1 and in estimating multiple variance components in the presence of different structured random effects.
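The residual covariance (11), the variance δ²(ℓ) and the modified predictive process (12) can be sketched as follows, again assuming an exponential parent covariance with σ² = 1 and ϕ = 3, 400 random locations and 36 random knots (all illustrative). The modified predictive process covariance matches the parent covariance on the diagonal, while its off-diagonal entries remain those of the predictive process.

```python
import numpy as np

def exp_cov(U, V, sigma2=1.0, phi=3.0):
    dist = np.linalg.norm(U[:, None, :] - V[None, :, :], axis=-1)
    return sigma2 * np.exp(-phi * dist)

rng = np.random.default_rng(9)
locs = rng.uniform(size=(400, 2))                        # n = 400 locations
knots = rng.uniform(size=(36, 2))                        # r = 36 knots

K = exp_cov(locs, locs)                                  # parent covariance K_theta
K_cross = exp_cov(locs, knots)                           # K_theta(U, U*)
K_pp = K_cross @ np.linalg.solve(exp_cov(knots, knots), K_cross.T)   # predictive process
K_eta = K - K_pp                                         # residual covariance (11)
delta2 = np.diag(K_eta)                                  # delta^2(l) = var{eta(l)}

# Modified predictive process (12): adds independent N(0, delta^2(l)) noise,
# so only the diagonal of the predictive process covariance is corrected.
K_mpp = K_pp + np.diag(delta2)
```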

We present a brief simulation example revealing the benefits of the modified predictive process. We generate 2000 locations within a [0, 100] × [0, 100] square and then generate the outcomes from (1) using only an intercept as the regressor, an exponential covariance function with range parameter ϕ = 0.06 (so that the spatial correlation is ~0.05 at 50 distance units), scale σ² = 1 for the spatial process, and nugget variance τ² = 1. We then fit the predictive process and modified predictive process models derived from (1), holding out a set of randomly selected sites for prediction and using regular lattices of m = 49, 144 and 900 knots. Table 1 shows the posterior estimates and the root mean squared predictive error (RMSPE) based on the predictions for the held-out data. We clearly see the overestimation of τ² by the predictive process, particularly with fewer knots, and that the modified predictive process adjusts for it. Not surprisingly, the RMSPE is essentially the same under either process model.

Table 1.

Parameter estimates for the predictive process (PP) and modified predictive process (MPP) models in the univariate simulation.

| | μ | σ² | τ² | RMSPE |
|---|---|---|---|---|
| True | 1 | 1 | 1 | |
| m = 49 | | | | |
| PP | 1.37 (0.29, 2.61) | 1.37 (0.65, 2.37) | 1.18 (1.07, 1.23) | 1.21 |
| MPP | 1.36 (0.51, 2.39) | 1.04 (0.52, 1.92) | 0.94 (0.68, 1.14) | 1.20 |
| m = 144 | | | | |
| PP | 1.36 (0.52, 2.32) | 1.39 (0.76, 2.44) | 1.09 (0.96, 1.24) | 1.17 |
| MPP | 1.33 (0.50, 2.24) | 1.14 (0.64, 1.78) | 0.93 (0.76, 1.22) | 1.17 |
| m = 900 | | | | |
| PP | 1.31 (0.23, 2.55) | 1.12 (0.85, 1.58) | 0.99 (0.85, 1.16) | 1.17 |
| MPP | 1.31 (0.23, 2.63) | 1.04 (0.76, 1.49) | 0.98 (0.87, 1.21) | 1.17 |

Further enhancements to the modified predictive process are possible. Since the modified predictive process adjusts only the variance, the information in the covariance induced by the residual process η(ℓ) is lost. One alternative is the so-called “full-scale approximation” proposed by Sang et al. (2011) and Sang and Huang (2012), where η(ℓ) is approximated by a tapered process, say ηtap(ℓ). The covariance function for ηtap(ℓ) is of the form Kη,θ(ℓ, ℓ′)Ktap,ν(||ℓ − ℓ′||), where Kη,θ(ℓ, ℓ′) is as in (11) and Ktap,ν(||ℓ − ℓ′||) is a compactly supported covariance function that equals 0 beyond a distance ν (see, e.g., Furrer et al., 2006, for some practical choices). This full-scale approximation also captures small-scale dependence more effectively. Katzfuss (2013) extended some of these ideas by modeling the spatial error as a combination of a low-rank component designed to capture medium- to long-range dependence and a tapered component to capture local dependence.
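A minimal sketch of the full-scale approximation's covariance, assuming an exponential parent covariance (σ² = 1, ϕ = 3), 25 random knots and, as one possible compactly supported taper, the spherical correlation function with ν = 0.1; all of these are illustrative choices, not those of Sang et al. The elementwise (Schur) product with the taper leaves the residual covariance positive semidefinite, restores the parent variances on the diagonal, and makes most entries exactly zero, which is what sparse-matrix algorithms exploit.

```python
import numpy as np

def pairwise_dist(U, V):
    return np.linalg.norm(U[:, None, :] - V[None, :, :], axis=-1)

def spherical_taper(h, nu):
    """Spherical correlation 1 - 1.5 (h/nu) + 0.5 (h/nu)^3 for h < nu and exactly 0
    for h >= nu: one compactly supported choice, valid in up to three dimensions."""
    x = np.minimum(h / nu, 1.0)
    return np.where(h < nu, 1.0 - 1.5 * x + 0.5 * x**3, 0.0)

rng = np.random.default_rng(11)
locs = rng.uniform(size=(400, 2))
knots = rng.uniform(size=(25, 2))
phi = 3.0                                                 # exponential parent, sigma^2 = 1

K = np.exp(-phi * pairwise_dist(locs, locs))
K_cross = np.exp(-phi * pairwise_dist(locs, knots))
K_pp = K_cross @ np.linalg.solve(np.exp(-phi * pairwise_dist(knots, knots)), K_cross.T)
K_eta = K - K_pp                                          # residual covariance (11)

# Full-scale approximation: predictive process plus a tapered residual whose covariance
# K_eta(l, l') * K_tap(||l - l'||) vanishes for pairs farther apart than nu
K_eta_tap = K_eta * spherical_taper(pairwise_dist(locs, locs), nu=0.1)
K_fsa = K_pp + K_eta_tap
frac_zero = np.mean(K_eta_tap == 0.0)                     # share of exactly-zero entries
```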

Perhaps the most promising use of the predictive process, at least in terms of scalability to massive spatial datasets, is the recent multi-resolution approximation proposed by Katzfuss (2017). Instead of approximating the residual process η(ℓ) in one step, the idea here is to partition the spatial domain recursively and construct a sequence of approximations. We start by partitioning the domain of interest ℒ into J non-intersecting subregions, say ℒ1, ℒ2, …, ℒJ, such that ℒ = ℒ1 ∪ ℒ2 ∪ ⋯ ∪ ℒJ. We call the ℒj's level-1 subregions. We fix a set of knots in ℒ and write the parent process as w(ℓ) = w̃(ℓ) + η(ℓ), where w̃(ℓ) is the predictive process as in (9) and η(ℓ) is the residual Gaussian process with covariance function given by (11). At resolution 1, we replace η(ℓ) with a block-independent process η1(ℓ) such that Cov{η1(ℓ), η1(ℓ′)} = 0 if ℓ and ℓ′ are not in the same subregion and is equal to (11) if ℓ and ℓ′ are in the same subregion.

At the second resolution, each ℒj is partitioned into a set of disjoint subregions ℒj1, ℒj2, …, ℒjm. We call these the level-2 subregions and choose a set of knots within each. We approximate η1(ℓ) ≈ η̃1(ℓ) + η2(ℓ), where η̃1(ℓ) is the predictive process derived from η1(ℓ) using the knots in ℒj if ℓ ∈ ℒj, and η2(ℓ) is the analogous block-independent approximation of the remaining residual across the subregions within each ℒj. Thus, Cov{η2(ℓ), η2(ℓ′)} = 0 if ℓ and ℓ′ are not in the same level-2 subregion and equals the residual covariance Cov{η1(ℓ) − η̃1(ℓ), η1(ℓ′) − η̃1(ℓ′)}, the analogue of (11), when ℓ and ℓ′ are in the same level-2 subregion. At resolution 3, we partition each of the level-2 subregions into level-3 subregions and continue approximating the residual process by its predictive process. At the end of M resolutions, we arrive at the multi-resolution predictive process, which adds to w̃(ℓ) the predictive processes derived from the successive residuals and, by construction, is a valid Gaussian process. The computational complexity of the multi-resolution predictive process is ~O(nM²r²), where M is the number of resolutions and r is the number of knots chosen within each subregion.
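The first resolution of this construction can be sketched as follows. The NumPy code assumes an exponential parent covariance (σ² = 1, ϕ = 3), 16 random knots and the four quadrants of the unit square as the J = 4 level-1 subregions (all illustrative). Zeroing the residual covariance (11) across subregions yields a block-diagonal, still positive semidefinite matrix, and w̃(ℓ) + η1(ℓ) keeps the parent variances exactly. Later resolutions would repeat the predictive-process step within each block; that recursion is not shown.

```python
import numpy as np

def exp_cov(U, V, phi=3.0):
    dist = np.linalg.norm(U[:, None, :] - V[None, :, :], axis=-1)
    return np.exp(-phi * dist)

rng = np.random.default_rng(12)
locs = rng.uniform(size=(400, 2))
knots = rng.uniform(size=(16, 2))             # knots fixed in the whole domain

K = exp_cov(locs, locs)
K_cross = exp_cov(locs, knots)
K_pp = K_cross @ np.linalg.solve(exp_cov(knots, knots), K_cross.T)   # w~(l)
K_eta = K - K_pp                                                      # equation (11)

# Level-1 subregions: the J = 4 quadrants of the unit square (an illustrative partition)
region = 2 * (locs[:, 0] > 0.5) + (locs[:, 1] > 0.5)
same_region = region[:, None] == region[None, :]

# eta_1 keeps the residual covariance within a subregion and zeroes it across subregions
K_eta1 = np.where(same_region, K_eta, 0.0)
K_res1 = K_pp + K_eta1                        # covariance of w~(l) + eta_1(l)
```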

To summarize, we do not recommend the use of just a reduced/low rank model. To improve performance, it is necessary to approximate the residual process and, in this regard, the predictive process is especially attractive since the residual process is available explicitly.

2.3 Bayesian implementation for low-rank models

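A very rich and flexible class of spatial and spatiotemporal models emerges from the hierarchical linear mixed model

$$p(\theta) \times p(\tau) \times N(\beta \mid \mu_\beta, V_\beta) \times N(z \mid 0, V_{z,\theta}) \times N(y \mid X\beta + B_\theta z, D_\tau), \qquad (13)$$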

where y is an n × 1 vector of possibly irregularly located observations, X is a known n × p matrix of regressors (p < n), z is an r × 1 vector of random effects, Vz,θ and Dτ are families of r × r and n × n covariance matrices depending on unknown process parameters θ and τ, respectively, and Bθ is n × r with r ≤ n. The low-rank models in (3) emerge when r ≪ n and Bθ is the matrix obtained by evaluating the basis functions at the observed locations. Proper prior distributions p(θ) and p(τ) for θ and τ, respectively, complete the hierarchical specification.

Bayesian inference customarily proceeds by sampling {β, z, θ, τ} from (13) using Markov chain Monte Carlo (MCMC) methods. For faster convergence, we integrate z out of the model and first sample from p(θ, τ, β | y) ∝ p(θ) × p(τ) × N(β | μβ, Vβ) × N(y | Xβ, Σy | θ,τ), where $\Sigma_{y\mid\theta,\tau} = B_\theta V_{z,\theta} B_\theta^\top + D_\tau$. Working directly with Σy | θ,τ will be expensive. Usually Dτ is diagonal or sparse, so the expense is incurred from the matrix $B_\theta V_{z,\theta} B_\theta^\top$. Even assuming that Bθ and Vz,θ are computationally inexpensive to construct for each θ and τ, forming $B_\theta V_{z,\theta} B_\theta^\top$ requires ~O(rn²) flops, and inverting Σy | θ,τ directly requires ~O(n³) flops. Using the Sherman–Woodbury–Morrison formula in (6) avoids constructing or inverting any n × n matrix. In practice, however, it is better not to compute the right-hand side of (6) directly, as it involves some redundant matrix multiplications. Furthermore, we wish to obtain the determinant of Σy | θ,τ cheaply. Both goals are accomplished efficiently as outlined below.

The primary computational bottleneck lies in evaluating the multivariate Gaussian likelihood N(y | Xβ, Σy | θ,τ), which is required for updating the parameters {θ, τ} (e.g., using random-walk Metropolis or Hamiltonian Monte Carlo steps). We can accomplish this efficiently using two functions: L = chol(V), which computes the Cholesky factorization V = LL⊤ of any positive definite matrix V, where L is lower-triangular, and W = trsolve(T, B), which solves the triangular system TW = B for a triangular (lower or upper) matrix T. We first compute

$$\Sigma_{y\mid\theta,\tau}^{-1} = D_\tau^{-1/2}\left(I_n - H^\top H\right) D_\tau^{-1/2}, \qquad (14)$$

where H is obtained by first computing $W = D_\tau^{-1/2} B_\theta$, then the Cholesky factorization $L = \mathrm{chol}(V_{z,\theta}^{-1} + W^\top W)$, and finally solving the triangular system H = trsolve(L, W⊤). Having obtained H, we compute e = y − Xβ, $m_1 = D_\tau^{-1/2} e$, m2 = Hm1, and obtain T = chol(Ir − HH⊤). The log-target density for {θ, τ} is then computed as

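$$\log p(\theta) + \log p(\tau) - \frac{1}{2}\sum_{i=1}^{n} \log d_{ii} + \sum_{i=1}^{r} \log t_{ii} - \frac{1}{2}\left(m_1^\top m_1 - m_2^\top m_2\right) \quad (\text{up to an additive constant}), \qquad (15)$$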

where the dii’s and tii’s are the diagonal elements of Dτ and T, respectively. The total number of flops required for evaluating the target is O(nr² + r³) ≈ O(nr²) (since r ≪ n), which is considerably cheaper than the O(n³) flops that would be required for the analogous computations in a full Gaussian process model. In practice, Gaussian proposal distributions are employed for the Metropolis algorithm and all parameters with positive support are transformed to the logarithmic scale; the necessary Jacobian adjustments are then made to (15) by adding some scalar quantities with negligible computational cost.
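The steps leading to (14) and (15) translate almost line by line into NumPy/SciPy. The sketch below, which assumes a diagonal Dτ = diag(d) and uses hypothetical helper names that mirror the text's chol and trsolve, returns the Gaussian log-likelihood part of (15); adding log p(θ) + log p(τ) and the Jacobian terms gives the full log-target. A dense O(n³) evaluation is included only as a check on a small problem.

```python
import numpy as np
from scipy.linalg import cholesky, solve_triangular

def chol(V):
    """Lower-triangular L with V = L L^T."""
    return cholesky(V, lower=True)

def trsolve(T, B, lower=True):
    """Solve the triangular system T W = B."""
    return solve_triangular(T, B, lower=lower)

def lowrank_loglik(y, X, beta, B, Vz, d):
    """log N(y | X beta, B Vz B^T + diag(d)) via (14)-(15); no n x n matrix is formed."""
    sd = np.sqrt(d)
    W = B / sd[:, None]                          # W = D^{-1/2} B_theta
    L = chol(np.linalg.inv(Vz) + W.T @ W)        # r x r Cholesky factor
    H = trsolve(L, W.T)                          # r x n
    m1 = (y - X @ beta) / sd                     # D^{-1/2} e
    m2 = H @ m1
    T = chol(np.eye(B.shape[1]) - H @ H.T)       # r x r
    return (-0.5 * len(y) * np.log(2 * np.pi) - 0.5 * np.sum(np.log(d))
            + np.sum(np.log(np.diag(T))) - 0.5 * (m1 @ m1 - m2 @ m2))

rng = np.random.default_rng(2)
n, r = 300, 10
B, X = rng.normal(size=(n, r)), rng.normal(size=(n, 2))
Vz, d = 0.5 * np.eye(r), rng.uniform(0.2, 1.0, size=n)
beta, y = np.array([1.0, -2.0]), rng.normal(size=n)
ll = lowrank_loglik(y, X, beta, B, Vz, d)

# Dense O(n^3) check, feasible only for small n
S = B @ Vz @ B.T + np.diag(d)
e = y - X @ beta
dense = -0.5 * (n * np.log(2 * np.pi) + np.linalg.slogdet(S)[1] + e @ np.linalg.solve(S, e))
```

The determinant comes for free from T because det(In − H⊤H) = det(Ir − HH⊤), so only r × r factorizations are needed.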

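Starting with initial values for all parameters, each iteration of the MCMC executes the above calculations to provide a sample for {θ, τ}. The regression parameter β is then sampled from its full conditional distribution. Writing $\Sigma_{y\mid\theta,\tau}^{-1} = D_\tau^{-1/2}(I_n - H^\top H) D_\tau^{-1/2}$ as in (14), the full conditional distribution for β is N(Aa, A), where $A^{-1} = V_\beta^{-1} + X^\top \Sigma_{y\mid\theta,\tau}^{-1} X$ and $a = V_\beta^{-1}\mu_\beta + X^\top \Sigma_{y\mid\theta,\tau}^{-1} y$. These are efficiently computed by setting $[f : F] = D_\tau^{-1/2}[y : X]$ and $[\tilde{f} : \tilde{F}] = H[f : F]$, and then $L = \mathrm{chol}(V_\beta^{-1} + F^\top F - \tilde{F}^\top \tilde{F})$ and $a = V_\beta^{-1}\mu_\beta + F^\top f - \tilde{F}^\top \tilde{f}$. We then compute β = trsolve(L⊤, trsolve(L, a)) + trsolve(L⊤, Z̃), where Z̃ is a conformable vector of independent N(0, 1) variables.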
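A minimal sketch of this Gibbs step, again assuming Dτ = diag(d) and using illustrative argument names, is given below. Note that the random perturbation uses L⊤ rather than L: with LL⊤ = A⁻¹, the vector L⁻⊤Z̃ has covariance L⁻⊤L⁻¹ = A, as required. A dense computation of the conditional mean Aa is included as a check.

```python
import numpy as np
from scipy.linalg import cholesky, solve_triangular

def draw_beta(y, X, B, Vz, d, mu_beta, V_beta, rng):
    """One draw from N(A a, A), the full conditional of beta, using D^{-1/2} and H from (14)."""
    sd = np.sqrt(d)
    W = B / sd[:, None]
    Lr = cholesky(np.linalg.inv(Vz) + W.T @ W, lower=True)
    H = solve_triangular(Lr, W.T, lower=True)
    f, F = y / sd, X / sd[:, None]              # [f : F] = D^{-1/2} [y : X]
    f_t, F_t = H @ f, H @ F                     # [f~ : F~] = H [f : F]
    Vb_inv = np.linalg.inv(V_beta)
    L = cholesky(Vb_inv + F.T @ F - F_t.T @ F_t, lower=True)   # L L^T = A^{-1}
    a = Vb_inv @ mu_beta + F.T @ f - F_t.T @ f_t
    mean = solve_triangular(L.T, solve_triangular(L, a, lower=True), lower=False)
    std_normal = rng.standard_normal(len(a))
    # L^{-T} std_normal has covariance L^{-T} L^{-1} = A
    return mean + solve_triangular(L.T, std_normal, lower=False), mean

rng = np.random.default_rng(3)
n, r, p = 200, 8, 3
B, X = rng.normal(size=(n, r)), rng.normal(size=(n, p))
Vz, d = np.eye(r), rng.uniform(0.5, 1.5, size=n)
y = X @ np.array([1.0, 0.0, -1.0]) + rng.normal(size=n)
mu_beta, V_beta = np.zeros(p), 100.0 * np.eye(p)
beta_draw, beta_mean = draw_beta(y, X, B, Vz, d, mu_beta, V_beta, rng)

# Dense check of the conditional mean A a (mu_beta = 0 here)
Si = np.linalg.inv(B @ Vz @ B.T + np.diag(d))
dense_mean = np.linalg.solve(np.linalg.inv(V_beta) + X.T @ Si @ X, X.T @ Si @ y)
```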

We repeat the above computations for each iteration of the MCMC algorithm using the current values of the process parameters in Σy | θ,τ. After convergence, the algorithm described above produces posterior samples for Ω = {θ, τ, β}. We then sample from the posterior distribution p(z | y) = ∫ p(z | Ω, y)p(Ω | y)dΩ, where p(z | Ω, y) = N(z | Aa, A) with $A = (V_{z,\theta}^{-1} + B_\theta^\top D_\tau^{-1} B_\theta)^{-1}$ and $a = B_\theta^\top D_\tau^{-1}(y - X\beta)$. For each Ω drawn from p(Ω | y), we need to draw a corresponding z from N(z | Aa, A). This involves chol(A). Since the number of knots r is usually fixed at a value much smaller than n, obtaining chol(A) costs ~O(r³) and is not expensive. However, it involves the inverse of Vz,θ, which is computed using chol(Vz,θ) and can be numerically unstable for certain smoother covariance functions such as the Gaussian or the Matérn with large ν. A numerically more stable algorithm exploits the relation A = Q − Q(Vz,θ + Q)⁻¹Q, where $Q = (B_\theta^\top D_\tau^{-1} B_\theta)^{-1}$. For each Ω sampled from p(Ω | y), we compute L = chol(Vz,θ + Q), W = trsolve(L, Q) and then L = chol(Q − W⊤W). We generate an r × 1 vector Z* ~ N(0, Ir) and set z = L(Z* + L⊤a). Repeating this for each Ω drawn from p(Ω | y) produces a sample of z’s from p(z | y).
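The stable update for z can be sketched as follows. The sketch assumes a diagonal Dτ = diag(d) and that Bθ has full column rank (so that Q exists); the squared-exponential Vz,θ in the example is only a stand-in for a smooth covariance. The check compares A with the direct, less stable formula on a small, well-conditioned problem.

```python
import numpy as np
from scipy.linalg import cholesky, solve_triangular

def draw_z(y, X, beta, B, Vz, d, rng):
    """Draw z ~ N(A a, A) with A = (Vz^{-1} + B^T D^{-1} B)^{-1}, never inverting Vz."""
    BtDi = B.T / d                                  # B^T D^{-1}
    Q = np.linalg.inv(BtDi @ B)                     # requires B of full column rank
    a = BtDi @ (y - X @ beta)
    Lv = cholesky(Vz + Q, lower=True)
    W = solve_triangular(Lv, Q, lower=True)         # W^T W = Q (Vz + Q)^{-1} Q
    A = Q - W.T @ W
    L = cholesky(0.5 * (A + A.T), lower=True)       # symmetrize against round-off
    return L @ (rng.standard_normal(len(a)) + L.T @ a), A   # mean L L^T a = A a

rng = np.random.default_rng(4)
n, r = 150, 6
s = np.linspace(0, 1, r)
Vz = np.exp(-((s[:, None] - s[None, :]) / 0.3) ** 2)   # smooth (Gaussian) covariance
B, X = rng.normal(size=(n, r)), np.ones((n, 1))
d, beta, y = np.full(n, 0.3), np.array([0.5]), rng.normal(size=n)
z, A = draw_z(y, X, beta, B, Vz, d, rng)
```

Only Vz,θ + Q is factorized, which is typically much better conditioned than Vz,θ itself when Vz,θ comes from a very smooth covariance function.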

Finally, we seek predictive inference for y(ℓ0) at any arbitrary space-time coordinate ℓ0. Given x⊤(ℓ0), we draw $y(\ell_0) \sim N(x^\top(\ell_0)\beta + b_\theta^\top(\ell_0) z, \tau^2)$ for every posterior sample of Ω and z, where $b_\theta(\ell_0)$ is the r × 1 vector of basis functions evaluated at ℓ0 and, for simplicity, $D_\tau = \tau^2 I_n$. This yields the corresponding posterior predictive samples for y(ℓ0). Posterior predictive samples of the latent process can also be easily computed as $w(\ell_0) = b_\theta^\top(\ell_0) z$ for each posterior sample of z and θ. Posterior predictive distributions at any of the observed ℓi’s yield replicated data (see, e.g., Gelman et al., 2013) that can be used for model assessment and comparisons. Finley et al. (2015) provide more extensive implementation details for models such as (13) in the context of the spBayes package in R.

3 Sparsity-inducing nearest-neighbor Gaussian processes

Low-rank models have been, and continue to be, widely employed for analyzing spatial and spatiotemporal data. The algorithmic cost for fitting low-rank models typically decreases from O(n³) to O(nr² + r³) ≈ O(nr²) flops since n ≫ r. However, when n is large, empirical investigations suggest that r must be fairly large to adequately approximate the parent process, and the nr² flops become exorbitant. Furthermore, low-rank models can perform poorly depending upon the smoothness of the underlying process or when neighboring observations are strongly correlated and the spatial signal dominates the noise (Stein, 2014).

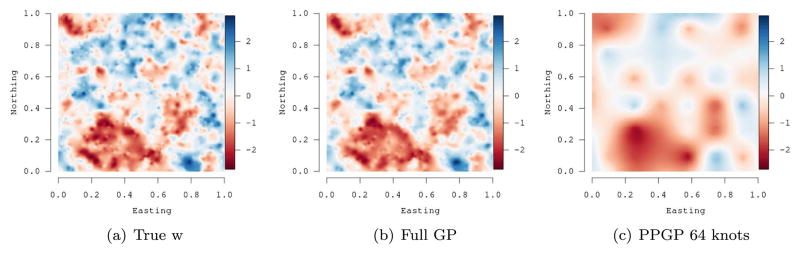

As an example, consider part of the simulation experiment presented in Datta et al. (2016a), where a spatial random field was generated over a unit square using a Gaussian process with fixed spatial process parameters over a set of 2500 locations. We then fit a full Gaussian process model and a predictive process model with 64 knots. Figure 2 presents the results (see Datta et al., 2016a, for details). While the estimated random field from the full Gaussian process is almost indistinguishable from the true random field, the surface obtained from the predictive process with 64 knots substantially oversmooths. This oversmoothing can be ameliorated by using a larger number of knots, but that adds to the computational burden.

Figure 2.

Comparing estimates of a simulated random field using a full Gaussian process (Full GP) and a Gaussian predictive process (PPGP) with 64 knots. The oversmoothing by the low-rank predictive process is evident.

Figure 2 reinforces findings that low-rank models may be limited in their ability to produce accurate representations of the underlying process at massive scales. They need a considerably larger number of basis functions to capture the features of the process and require substantial computational resources to emulate results from a full GP. As the demands for analyzing large spatial datasets increase from the order of ~10⁴ to ~10⁶ locations, low-rank models may struggle to deliver acceptable inference. In this regard, enhancements such as the multi-resolution predictive process approximations referred to in Section 2.2 are highly promising.

An alternative is to develop full-rank models that can exploit sparsity. Instead of deriving basis approximations for w, one could achieve computational gains by modeling either its covariance function or its inverse as sparse. Covariance tapering does the former by modeling var{w} = Kθ ⊙ Ktap,ν, where Ktap,ν is a sparse covariance matrix formed from a compactly supported, or tapered, covariance function with tapering parameter ν, and ⊙ denotes the elementwise (Hadamard) product of two matrices. By the Schur product theorem, the Hadamard product of two positive definite matrices is again positive definite, so Kθ ⊙ Ktap,ν is positive definite. Furthermore, Ktap,ν, and hence Kθ ⊙ Ktap,ν, is sparse because a tapered covariance function equals 0 for all pairs of locations separated by more than the threshold ν. We refer the reader to Furrer et al. (2006), Kaufman et al. (2008) and Du et al. (2009) for further computational and theoretical details on covariance tapering. Covariance tapering is undoubtedly an attractive approach for constructing sparse covariance matrices, but its practical implementation for full Bayesian inference will generally require efficient sparse Cholesky decompositions, numerically stable determinant computations and, perhaps most importantly, effective memory management. These issues are yet to be tested for truly massive spatiotemporal datasets with n ~ 10⁵ or more.
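The two properties used above, sparsity and positive definiteness of Kθ ⊙ Ktap,ν, can be checked numerically with a short sketch. The exponential Kθ, the Wendland-type taper (one of the compactly supported choices of the kind discussed by Furrer et al., 2006) and ν = 0.1 are illustrative assumptions.

```python
import numpy as np
from scipy import sparse

def wendland_taper(dist, nu):
    """Compactly supported correlation (1 - d/nu)_+^4 (1 + 4 d/nu); exactly 0 for d >= nu."""
    r = np.clip(dist / nu, 0.0, 1.0)
    return (1.0 - r) ** 4 * (1.0 + 4.0 * r)

rng = np.random.default_rng(5)
locs = rng.uniform(size=(500, 2))
dist = np.linalg.norm(locs[:, None, :] - locs[None, :, :], axis=-1)
K = np.exp(-3.0 * dist)                       # dense K_theta (exponential, illustrative)
K_tap = K * wendland_taper(dist, nu=0.1)      # Hadamard product K_theta ⊙ K_tap,nu
K_sparse = sparse.csr_matrix(K_tap)           # stores only the nonzero entries
fill = K_sparse.nnz / K_tap.size              # fraction of nonzeros
min_eig = np.linalg.eigvalsh(K_tap).min()     # positive, by the Schur product theorem
```

Here the dense distance matrix is formed only for convenience; for large n one would instead find the pairs within distance ν with a spatial index (e.g., a k-d tree) and assemble the sparse matrix directly.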

Another way to exploit sparsity is to model the inverse of var{w} as a sparse matrix. For finite-dimensional distributions, conditional and simultaneous autoregressive (CAR and SAR) models (see, e.g., Cressie, 1993; Banerjee et al., 2014, and references therein) adopt this approach for areally referenced datasets. More generally, Gaussian Markov random fields or GMRFs (see, e.g., Rue and Held, 2005) are widely used tools for constructing sparse precision matrices and have led to computational algorithms such as the Integrated Nested Laplace Approximation (INLA) developed by Rue et al. (2009). A subsequent article by Lindgren et al. (2011) shows how Gaussian processes can be approximated by GMRFs using computationally efficient sparse representations. Thus, a Gaussian process model with a dense covariance function is approximated by a GMRF with a sparse precision matrix. The approach is computationally very efficient for covariance functions that arise from a certain class of stochastic partial differential equations (including the versatile Matérn class), but its inferential performance on unobservable spatial, spatiotemporal or multivariate Gaussian processes (perhaps specified through more general covariance or cross-covariance functions) embedded within Bayesian hierarchical models is yet to be assessed.

Rather than working with approximations to the process, one could also construct massively scalable sparsity-inducing Gaussian processes that can be conveniently embedded within Bayesian hierarchical models and deliver full Bayesian inference for random fields at arbitrary resolutions. Section 3.1 describes how sparsity is introduced in the precision matrices for graphical Gaussian models by exploiting the relationship between the Cholesky decomposition of a positive definite matrix and conditional independence. These sparse Gaussian models (i.e., normal distributions with sparse precision matrices) can be used as prior models for a finite number of spatial random effects. Section 3.2 shows the construction of a process from these graphical Gaussian models. This process is a Gaussian process whose finite-dimensional realizations have sparse precision matrices. We call such processes Nearest Neighbor Gaussian Processes (NNGP). Finally, Section 3.3 outlines how the process can be embedded within hierarchical models and presents some brief simulation examples demonstrating certain aspects of inference from NNGP models.

3.1 Sparse Gaussian graphical models

Consider the hierarchical model (2) and, in particular, the expensive prior density N(w | 0, Kθ). From the dense covariance matrix Kθ, we wish to obtain a covariance matrix K̃θ such that its inverse K̃θ⁻¹ is sparse and, importantly, its determinant is available cheaply. What would be an effective way of achieving this? One approach would be to model the Cholesky decomposition of the precision matrix so that it is sparse. For example, forcing some elements in the nonzero (lower) triangle of the Cholesky factor to be zero will introduce sparsity in the precision matrix. To precisely set out which elements should be made zero in the Cholesky factor, we borrow some fundamental notions of sparsity from graphical (Gaussian) models.

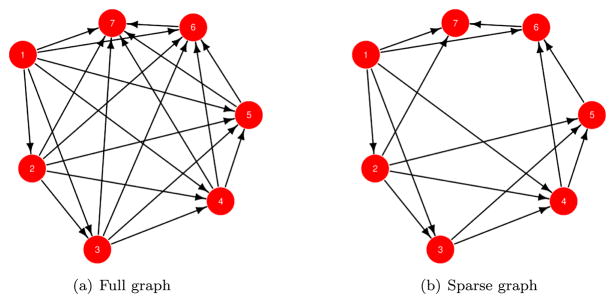

The underlying idea is, in fact, ubiquitous in graphical models or Bayesian networks (see, e.g., Lauritzen, 1996; Bishop, 2006; Murphy, 2012). The joint distribution for a random vector w can be represented by a directed acyclic graph (DAG) where each node is a random variable wi. We write the joint distribution as

$$p(w) = p(w_1) \prod_{i=2}^{n} p(w_i \mid w_{Pa[i]}),$$

where Pa[1] is the empty set and Pa[i] = {1, 2, …, i − 1} for i = 2, 3, …, n is the set of parent nodes with directed edges to i. This model is specific to the ordering (sometimes called “topological ordering”) of the nodes. The DAG corresponding to this factorization is shown in Figure 3(a) for n = 7 nodes. One can refer to this as the full graphical model since Pa[i] comprises all nodes preceding i in the topological order. Shrinking Pa[i] from the set of all nodes preceding i to a smaller subset of parent nodes yields a different, but still valid, joint distribution. In spatial settings, each node in the DAG has associated spatial coordinates. Thus, the parents of any node i can be chosen to include a certain fixed number of “nearest neighbors”, say based upon their distance from node i. For example, Figure 3(b) shows the DAG when some of the edges are deleted so as to retain at most 3 nearest neighbors in the conditional probabilities. The resulting joint density is of the form $p(w_1)\prod_{i=2}^{7} p(w_i \mid w_{N[i]})$, where each N[i] ⊆ Pa[i] contains at most 3 of the nodes preceding i.

Figure 3.

Sparsity using directed acyclic graphs.

The above model posits that any node i, given its parents, is conditionally independent of every other node that is not one of its descendants.

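Applying the above notion to multivariate Gaussian densities evinces the connection between conditional independence in DAGs and sparsity. Consider an n × 1 random vector w distributed as N(0, Kθ). Writing N(w | 0, Kθ) as $p(w_1)\prod_{i=2}^{n} p(w_i \mid w_1, \ldots, w_{i-1})$ is equivalent to the following set of linear models,

$$w_1 = \eta_1, \qquad w_i = \sum_{j=1}^{i-1} a_{ij} w_j + \eta_i, \quad i = 2, \ldots, n,$$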

or, more compactly, simply w = Aw + η, where A is n × n strictly lower-triangular with elements aij = 0 whenever j ≥ i and η ~ N (0, D) and D is diagonal with diagonal entries d11 = var{w1} and dii = var{wi | wj : j < i} for i = 2, …, n.

From the structure of A it is evident that I − A is nonsingular and Kθ = (I − A)⁻¹D(I − A)⁻⊤. The possibly nonzero elements of A and D are completely determined by the matrix Kθ. Let a[i,j], d[i,j] and K[i,j] denote the (i, j)-th entries of A, D and Kθ, respectively. Note that d[1,1] = K[1,1] and the first row of A is 0. Pseudocode to compute the remaining elements of A and D is:

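for (i in 1:(n − 1)) {
  a[i+1, 1:i] = solve(K[1:i, 1:i], K[1:i, i+1])
  d[i+1, i+1] = K[i+1, i+1] − dot(K[i+1, 1:i], a[i+1, 1:i])
}   (16)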

Here a[i+1, 1:i] is the 1 × i row vector comprising the possibly nonzero elements of the (i+1)-th row of A, K[1:i, 1:i] is the i × i leading principal submatrix of Kθ, K[1:i, i+1] is the i × 1 column vector formed by the first i elements in the (i+1)-th column of Kθ, K[i+1, 1:i] is the 1 × i row vector formed by the first i elements in the (i+1)-th row of Kθ, solve(B, b) computes the solution of the linear system Bx = b, and dot(u, v) provides the inner product between vectors u and v. The determinant of Kθ is obtained at almost no additional cost: since I − A is unit lower-triangular, det(Kθ) = d[1,1] × d[2,2] × ⋯ × d[n,n].
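A direct transcription of pseudocode (16) into 0-based NumPy indexing is shown below, with a check that (I − A)⁻¹D(I − A)⁻⊤ reproduces Kθ and that the product of the d[i,i] gives its determinant. The exponential covariance and the 50 random locations are illustrative assumptions.

```python
import numpy as np

def dense_A_D(K):
    """Pseudocode (16), 0-based: w = A w + eta with A strictly lower-triangular, eta ~ N(0, D)."""
    n = K.shape[0]
    A, d = np.zeros((n, n)), np.empty(n)
    d[0] = K[0, 0]
    for i in range(1, n):                                   # row i here is row i+1 in (16)
        A[i, :i] = np.linalg.solve(K[:i, :i], K[:i, i])     # solve(K[1:i,1:i], K[1:i,i+1])
        d[i] = K[i, i] - K[i, :i] @ A[i, :i]                 # conditional variance
    return A, d

rng = np.random.default_rng(6)
s = rng.uniform(size=(50, 2))
K = np.exp(-4.0 * np.linalg.norm(s[:, None] - s[None], axis=-1))
A, d = dense_A_D(K)
IA_inv = np.linalg.inv(np.eye(50) - A)
K_rebuilt = IA_inv @ np.diag(d) @ IA_inv.T                  # (I - A)^{-1} D (I - A)^{-T}
```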

The above pseudocode provides a way to obtain the Cholesky decomposition of Kθ: if Kθ = LDL⊤ is the (modified) Cholesky decomposition with L unit lower-triangular, then L = (I − A)⁻¹. There is, however, no apparent gain to be had from the preceding computations, since one needs to solve increasingly larger linear systems as the loop runs to higher values of i. Nevertheless, it immediately shows how to exploit sparsity if we set some of the elements in the lower-triangular part of A to zero. For example, suppose we allow at most m elements in each row of A to be nonzero. Let N[i] be the set of indices j < i such that a[i,j] ≠ 0. We can compute the nonzero elements of A and the diagonal elements of D efficiently as:

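for (i in 1:(n − 1)) {
  Pa = N[i+1]
  a[i+1, Pa] = solve(K[Pa, Pa], K[Pa, i+1])
  d[i+1, i+1] = K[i+1, i+1] − dot(K[i+1, Pa], a[i+1, Pa])
}   (17)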

In (17) we solve n − 1 linear systems of size at most m × m. This can be performed in ~nm³ flops, whereas the earlier pseudocode in (16) for the dense model required ~n³ flops. These computations can also be performed in parallel, as each iteration of the loop is independent of the others.
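A sketch of (17) with N[i] taken as the m nearest preceding locations is given below. For brevity it slices a dense K and finds neighbors by brute force, both of which are simplifying assumptions: in practice only the m × m blocks would be evaluated from the covariance function and neighbors would be found with a spatial search structure. With m ≥ n − 1 the sparse recipe reduces to (16), which the final line checks through the determinant.

```python
import numpy as np
from scipy import sparse

def neighbor_sets(s, m):
    """N[i]: indices of the (at most) m nearest points among s[0], ..., s[i-1] (brute force)."""
    return [np.argsort(np.linalg.norm(s[:i] - s[i], axis=1))[:m] for i in range(len(s))]

def sparse_A_D(K, N):
    """Pseudocode (17), 0-based: only a[i, N[i]] are nonzero; each step is an m x m solve."""
    n = K.shape[0]
    rows, cols, vals = [], [], []
    d = np.empty(n)
    d[0] = K[0, 0]
    for i in range(1, n):
        nb = N[i]
        a = np.linalg.solve(K[np.ix_(nb, nb)], K[nb, i])
        d[i] = K[i, i] - K[i, nb] @ a
        rows.extend([i] * len(nb)); cols.extend(nb); vals.extend(a)
    return sparse.csr_matrix((vals, (rows, cols)), shape=(n, n)), d

rng = np.random.default_rng(7)
s = rng.uniform(size=(200, 2))
K = np.exp(-5.0 * np.linalg.norm(s[:, None] - s[None], axis=-1))
A, d = sparse_A_D(K, neighbor_sets(s, m=5))
A_full, d_full = sparse_A_D(K, neighbor_sets(s, m=len(s)))   # all predecessors: exact
```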

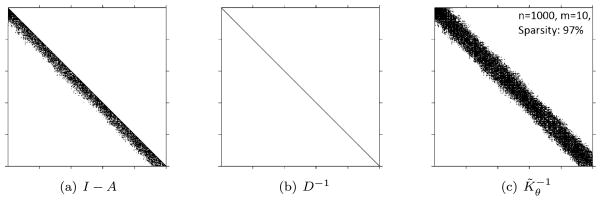

The above discussion provides a very useful strategy for introducing sparsity in a precision matrix. Let Kθ and Kθ⁻¹ both be dense n × n positive definite matrices. Suppose we use the pseudocode in (17) with K = Kθ to construct a sparse strictly lower-triangular matrix A with no more than m nonzero entries in each row, where m is considerably smaller than n, and the diagonal matrix D. The resulting matrix K̃θ = (I − A)⁻¹D(I − A)⁻⊤ is a covariance matrix whose inverse, K̃θ⁻¹ = (I − A)⊤D⁻¹(I − A), is sparse. Figure 4 presents a visual representation of the sparsity. While K̃θ need not be sparse, the density N(w | 0, K̃θ) is cheap to compute since K̃θ⁻¹ is sparse and det(K̃θ⁻¹) is the product of the diagonal elements of D⁻¹. Therefore, one way to achieve massive scalability for models such as (2) is to assume that w has prior N(w | 0, K̃θ) instead of N(w | 0, Kθ).

Figure 4.

Structure of the factors making up the sparse matrix.
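To illustrate why N(w | 0, K̃θ) is cheap, the sketch below evaluates its log-density using only the sparse A and the vector d: the quadratic form is (I − A)w weighted by D⁻¹, and log det K̃θ = Σ log d[i,i] because I − A has unit diagonal. The small example builds A and d as in (17) with m = 3 nearest preceding neighbors (an illustrative choice) and compares against a dense evaluation that forms K̃θ explicitly.

```python
import numpy as np
from scipy import sparse

def nngp_logdensity(w, A, d):
    """log N(w | 0, K~) with K~^{-1} = (I - A)^T D^{-1} (I - A) and det(K~) = prod(d)."""
    u = w - A @ w                               # (I - A) w: O(nm) with sparse A
    return -0.5 * (len(w) * np.log(2 * np.pi) + np.sum(np.log(d)) + np.sum(u ** 2 / d))

# Build a small example A, d with m = 3 nearest preceding neighbors, as in (17).
rng = np.random.default_rng(8)
n, m = 100, 3
s = rng.uniform(size=(n, 2))
K = np.exp(-5.0 * np.linalg.norm(s[:, None] - s[None], axis=-1))
rows, cols, vals, d = [], [], [], np.empty(n)
d[0] = K[0, 0]
for i in range(1, n):
    nb = np.argsort(np.linalg.norm(s[:i] - s[i], axis=1))[:m]
    a = np.linalg.solve(K[np.ix_(nb, nb)], K[nb, i])
    d[i] = K[i, i] - K[i, nb] @ a
    rows.extend([i] * len(nb)); cols.extend(nb); vals.extend(a)
A = sparse.csr_matrix((vals, (rows, cols)), shape=(n, n))
w = rng.standard_normal(n)
ll = nngp_logdensity(w, A, d)

# The precision matrix itself is sparse: at most (m + 1)^2 nonzeros per row of I - A
I_n = sparse.identity(n, format="csr")
P = (I_n - A).T @ sparse.diags(1.0 / d) @ (I_n - A)

# Dense check (small n only): K~ = (I - A)^{-1} D (I - A)^{-T}
IA_inv = np.linalg.inv(np.eye(n) - A.toarray())
K_tilde = IA_inv @ np.diag(d) @ IA_inv.T
dense = -0.5 * (n * np.log(2 * np.pi) + np.linalg.slogdet(K_tilde)[1]
                + w @ np.linalg.solve(K_tilde, w))
```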

3.2 From distributions to processes

If we are interested in estimating the spatial or spatiotemporal process parameters from a finite collection of random variables, then we can use the approach in Section 3.1 with wi := w(ℓi). In spatial settings, matters are especially convenient as we can delete the edges in the DAG based upon the distances among the ℓi’s. In fact, one can decide to retain at most m of the nearest neighbors for each location and delete all remaining edges. This implies that the (i, j)-th element of A in Section 3.1 will be nonzero only if ℓj is one of the m nearest neighbors of ℓi among ℓ1, …, ℓi−1. This idea has been used effectively to construct composite likelihoods for Gaussian process models by Vecchia (1988) and Stein et al. (2004), while Stroud et al. (2017) exploit it to propose preconditioned conjugate gradient algorithms for Bayesian and maximum likelihood estimation on large incomplete lattices.

Localized Gaussian process regression based on a few nearest neighbors has also been used to obtain fast kriging estimates. Emery (2009) provides fast updates for kriging equations after adding a new location to the input set. Iterative application of this algorithm yields a localized kriging estimate based on a small set of locations (including a few nearest neighbors). The local estimate often provides an excellent approximation to the global kriging estimate, which uses data observed at all the locations to predict at a new location. However, this assumes that the parameters associated with the mean and covariance of the GP are known or already estimated. Local Approximation GP, or LAGP (Gramacy and Apley, 2015; Gramacy and Haaland, 2016; Gramacy, 2016), extends this further by estimating the parameters at each new location, essentially providing a nonstationary local approximation to a Gaussian process at every predictive location, and can be used to interpolate or smooth the observed data.

If, however, posterior predictive inference is sought at arbitrary spatiotemporal resolutions, i.e., for the entire process {w(ℓ): ℓ ∈ ℒ}, then the ideas in Section 3.1 need to be extended to process-based models. Recently, Datta et al. (2016a) proposed a Nearest Neighbor Gaussian Process (NNGP) for modeling large spatial data. The NNGP is a well-defined Gaussian process over a domain ℒ and yields finite-dimensional Gaussian densities with sparse precision matrices. It has also been extended to a dynamic NNGP with dynamic neighbor selection for massive spatiotemporal data (Datta et al., 2016b). The NNGP delivers massive scalability in terms of both parameter estimation and kriging. Unlike low-rank processes, it does not oversmooth and accurately emulates the inference from full-rank GPs.

We construct the NNGP in two steps. First, we specify a multivariate Gaussian distribution over a fixed finite set of r points in ℒ, say $\mathcal{R} = \{\ell_1^*, \ell_2^*, \ldots, \ell_r^*\}$, which we call the reference set. The reference set can be very large. It can be a fine grid of points over ℒ, or one can simply take r = n and let ℛ be the set of observed points in ℒ. We require that the inverse of the covariance matrix be sparse and computationally efficient. Therefore, we specify that wℛ ~ N(0, K̃θ), where wℛ is the r × 1 vector with elements $w(\ell_i^*)$ and K̃θ is a covariance matrix such that K̃θ⁻¹ is sparse. The matrix K̃θ is constructed from a dense covariance matrix Kθ as described in Section 3.1. This provides a highly effective approximation (Vecchia, 1988; Stein et al., 2004):

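$$p(w_{\mathcal{R}} \mid \theta) = \prod_{i=1}^{r} p\big(w(\ell_i^*) \mid w_{H(\ell_i^*)}, \theta\big) \approx \prod_{i=1}^{r} p\big(w(\ell_i^*) \mid w_{N(\ell_i^*)}, \theta\big) = N(w_{\mathcal{R}} \mid 0, \tilde{K}_\theta), \qquad (18)$$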

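where $H(\ell_1^*) \subseteq H(\ell_2^*) \subseteq \cdots \subseteq H(\ell_r^*)$ are history sets, with $H(\ell_1^*)$ the empty set and $H(\ell_i^*) = \{\ell_1^*, \ell_2^*, \ldots, \ell_{i-1}^*\}$ for i = 2, 3, …, r, and the $N(\ell_i^*)$ are much smaller neighbor sets for each $\ell_i^*$ in ℛ. We have legitimate probability models for any choice of the $N(\ell_i^*)$’s as long as $N(\ell_i^*) \subseteq H(\ell_i^*)$. One easy specification is to define $N(\ell_i^*)$ as the set of m nearest neighbors of $\ell_i^*$ among the points in $H(\ell_i^*)$. The resulting precision matrix is $\tilde{K}_\theta^{-1} = (I - A)^\top D^{-1}(I - A)$, where A and D are obtained from (17) with N[i] indexing the points in $N(\ell_i^*)$.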

If m (≪ r) denotes the maximum size of the neighbor sets N(ℓ), then K̃θ⁻¹ has at most O(rm²) nonzero elements. Hence, the approximation in (18) produces a sparsity-inducing proper prior distribution for random effects over ℛ that closely approximates the realizations from a GP(0, Kθ).

To construct the NNGP we extend the above model to arbitrary locations. We define neighbor sets N(ℓ) for any ℓ ∈ ℒ as the set of m nearest neighbors of ℓ in ℛ. Thus, N(ℓ) ⊆ ℛ and the process can be derived from p(wℛ, w(ℓ) | θ) = N (wℛ | 0, K̃θ) × p(w(ℓ) | wN(ℓ), θ) or, equivalently, by writing

$$w(\ell) = \sum_{i=1}^{r} a_i(\ell)\, w(\ell_i^*) + \eta(\ell), \qquad (19)$$

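where $a_i(\ell) = 0$ whenever $\ell_i^* \notin N(\ell)$, η(ℓ) is a zero-mean Gaussian process independent of wℛ, Cov{η(ℓ), η(ℓ′)} = 0 for any two distinct points in ℒ, and

$$\mathrm{var}\{\eta(\ell)\} = \delta^2(\ell) = K_\theta(\ell, \ell) - K_\theta(\ell, N(\ell))\, K_\theta(N(\ell), N(\ell))^{-1} K_\theta(N(\ell), \ell),$$

with Kθ(N(ℓ), N(ℓ)) the m × m covariance matrix of wN(ℓ) and Kθ(N(ℓ), ℓ) = Kθ(ℓ, N(ℓ))⊤ the m × 1 vector of covariances between wN(ℓ) and w(ℓ).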

Taking conditional expectations in (19) yields $E[w(\ell) \mid w_{N(\ell)}] = a(\ell)^\top w_{N(\ell)}$, where a(ℓ) is the m × 1 vector of the nonzero ai(ℓ)’s; equating this with the conditional mean implied by Kθ gives $K_\theta(N(\ell), N(\ell))\, a(\ell) = K_\theta(N(\ell), \ell)$. Hence, for each ℓ the nonzero ai(ℓ)’s are obtained by solving an m × m linear system. The above construction ensures that w(ℓ) is a legitimate Gaussian process whose realizations over any finite collection of arbitrary points in ℒ have a multivariate normal distribution with a sparse precision matrix. More formal developments and technical details in the spatial and spatiotemporal settings can be found in Datta et al. (2016a) and Datta et al. (2016b), respectively.
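For a single new location, the quantities in (19) amount to one nearest-neighbor search and one m × m solve. The sketch below assumes an exponential Kθ and brute-force neighbor search, and the function names are hypothetical. As a sanity check, placing ℓ exactly at a reference point makes a(ℓ) a unit vector and δ²(ℓ) zero, since the conditional distribution then collapses onto that point.

```python
import numpy as np

def exp_cov(P, Q, sigma2=1.0, phi=5.0):
    """Illustrative K_theta: sigma2 * exp(-phi * distance) between rows of P and Q."""
    return sigma2 * np.exp(-phi * np.linalg.norm(P[:, None] - Q[None], axis=-1))

def nngp_weights(loc, ref, m):
    """Neighbor indices N(l), nonzero weights a(l) and delta^2(l) for one location l."""
    nb = np.argsort(np.linalg.norm(ref - loc, axis=1))[:m]   # m nearest points of R
    k_N = exp_cov(ref[nb], loc[None])[:, 0]                   # K_theta(N(l), l)
    a = np.linalg.solve(exp_cov(ref[nb], ref[nb]), k_N)       # m x m linear system
    delta2 = exp_cov(loc[None], loc[None])[0, 0] - a @ k_N    # var{eta(l)}
    return nb, a, delta2

rng = np.random.default_rng(9)
ref = rng.uniform(size=(1000, 2))
nb, a, delta2 = nngp_weights(np.array([0.5, 0.5]), ref, m=10)
nb7, a7, delta2_7 = nngp_weights(ref[7], ref, m=10)   # l coincides with a reference point
```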

One point worth considering is the definition of “neighbors.” There is some flexibility here. In the spatial setting, correlation functions usually decay with increasing inter-site distance, so the set of nearest neighbors based on inter-site distances represents the locations exhibiting the highest correlation with the given location. For example, on the plane one could simply use the Euclidean metric to construct neighbor sets, although Stein et al. (2004) recommend including a few points that are farther apart. The neighbor sets can be fixed before the model fitting exercise.

In spatiotemporal settings, matters are more complicated. Spatiotemporal covariances between two points typically depend on the spatial as well as the temporal lag between the points. Non-separable isotropic spatiotemporal covariance functions can be written as Kθ((s1, t1), (s2, t2)) = Kθ(h, u), where h = ||s1 − s2|| and u = |t1 − t2|. This often precludes defining any universal distance function d: (𝒮 × 𝒯)² → ℜ⁺ such that Kθ((s1, t1), (s2, t2)) will be monotonic with respect to d((s1, t1), (s2, t2)) for all choices of θ. This makes it difficult to define universal nearest neighbors in spatiotemporal domains. To obviate this hurdle, Datta et al. (2016b) define “nearest neighbors” in a spatiotemporal domain using the spatiotemporal covariance function itself as a proxy for distance. This can work for arbitrary domains. For any three points ℓ1, ℓ2 and ℓ3, we say that ℓ1 is nearer to ℓ2 than to ℓ3 if Kθ(ℓ1, ℓ2) > Kθ(ℓ1, ℓ3). Subsequently, this definition of “distance” is used to find m nearest neighbors for any location.
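The following toy example shows how covariance-based “nearest neighbors” depend on θ. It ranks all points in the history set by their covariance with the current point, using a separable exponential space-time covariance chosen only for illustration; it is a brute-force search, not the restricted eligible-set strategy of Datta et al. (2016b). With fast temporal decay the spatially close point at the same time is nearest, whereas with slow temporal decay the point at the same site one time step earlier wins.

```python
import numpy as np

def st_cov(h, u, phi_s, phi_t):
    """Illustrative separable space-time covariance exp(-phi_s h - phi_t u) (sigma^2 = 1)."""
    return np.exp(-phi_s * h - phi_t * u)

def cov_neighbors(i, s, t, m, phi_s, phi_t):
    """Indices of the m points among 0..i-1 with the largest covariance with point i."""
    h = np.linalg.norm(s[:i] - s[i], axis=1)     # spatial lags
    u = np.abs(t[:i] - t[i])                     # temporal lags
    return np.argsort(-st_cov(h, u, phi_s, phi_t), kind="stable")[:m]

# Points 0-2 precede point 3, which sits at (0, 0) at time 1.
s = np.array([[0.0, 0.0], [0.5, 0.0], [2.0, 0.0], [0.0, 0.0]])
t = np.array([0.0, 1.0, 1.0, 1.0])
fast_time_decay = cov_neighbors(3, s, t, m=1, phi_s=1.0, phi_t=10.0)
slow_time_decay = cov_neighbors(3, s, t, m=1, phi_s=10.0, phi_t=0.1)
```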

However, for every point ℓi, its neighbor set Nθ(ℓi) now depends on θ and can change from iteration to iteration in the estimation algorithm. If θ were known, one could simply evaluate the pairwise correlations between any point in ℛ and all points in its history set to obtain Nθ(ℓi), the set of m true nearest neighbors. In practice, however, θ is unknown, and for every new value of θ in an iterative algorithm we need to search for the neighbor sets within the history sets. Since the history sets are quite large, searching them exhaustively for nearest neighbors in each iteration is computationally infeasible. Datta et al. (2016b) offer some smart strategies for selecting spatiotemporal neighbors. They propose restricting the search for the neighbor sets to carefully constructed small subsets of the history sets. These small eligible sets are constructed in such a manner that, despite being much smaller than the history sets, they are guaranteed to contain the true nearest neighbor sets. This strategy works when we choose m to be a perfect square and the original nonseparable covariance function Kθ(h, u) satisfies natural monotonicity, i.e., Kθ(h, u) is decreasing in h for fixed u and decreasing in u for fixed h. All Matérn-based space-time separable covariances and many nonseparable classes of covariance functions possess this property (Stein, 2013; Omidi and Mohammadzadeh, 2015).

3.3 Hierarchical NNGP models

We briefly turn to model fitting and estimation. For the approximation in (18) to be effective, the size of the reference set, r, needs to be large enough to represent the spatial domain. However, this does not impede computations involving NNGP models because the storage and number of floating point operations are always linear in r. The reference set ℛ can, in principle, be any finite set of locations in the study domain. A particularly convenient choice, in practice, is to simply take ℛ to be the set of observed locations in the dataset. Datta et al. (2016a) demonstrate through extensive simulation experiments and a real application that this simple choice seems to be very effective.

Since the NNGP is a proper Gaussian process, we can use it as a prior for the spatial random effects in any hierarchical model. We write w(ℓ) ~ NNGP(0, K̃θ(·, ·)), where K̃θ(ℓ, ℓ′) is the covariance function for the NNGP (see Datta et al., 2016a, for a closed form expression). For example, with r = n and ℛ the set of observed locations, one can build a scalable Bayesian hierarchical model exactly as with a usual spatial process, but assigning an NNGP to the spatial random effects. Here is a simple NNGP-based spatial model with a first stage exponential family model:

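$$y(\ell_i) \overset{ind}{\sim} P_\tau\big(y(\ell_i) \mid g^{-1}(x^\top(\ell_i)\beta + w(\ell_i))\big),\ i = 1, \ldots, n; \quad w(\ell) \sim \mathrm{NNGP}(0, \tilde{K}_\theta(\cdot, \cdot)); \quad \beta \sim N(\mu_\beta, V_\beta); \quad \theta \sim p(\theta); \quad \tau \sim p(\tau), \qquad (20)$$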

where Pτ is an exponential family distribution with link function g(·). Posterior sampling from (20) is customarily performed using Gibbs sampling with Metropolis steps. Computational benefits emerge from the fact that p(w(ℓi) | wH(ℓi), θ) = p(w(ℓi) | wN(ℓi), θ), where wN(ℓi) is an m × 1 subset of wℛ, so the full conditional distribution of each w(ℓi) involves only y(ℓi), its own neighbors and the (typically few) locations that have ℓi among their neighbors. Prediction at any arbitrary location ℓ ∉ ℛ is performed by sampling from the posterior predictive distribution. For each draw of {wℛ, β, θ, τ} from p(wℛ, β, θ, τ | y), we draw w(ℓ) from N(a⊤(ℓ)wN(ℓ), δ²(ℓ)) and y(ℓ) from p(y(ℓ) | β, w(ℓ), τ), where y is the vector of observed outcomes and a(ℓ) is the vector of the nonzero ai(ℓ)’s in (19).

Another, even simpler, example could be modeling a continuous outcome itself as an NNGP. Let the desired full GP specification be Y (ℓ) ~ GP(x⊤(ℓ)β,Kθ(·, ·)). We derive the NNGP from this Kθ and obtain

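$$Y(\ell) \sim \mathrm{NNGP}(x^\top(\ell)\beta, \tilde{K}_\theta(\cdot, \cdot)); \quad \beta \sim N(\mu_\beta, V_\beta); \quad \theta \sim p(\theta). \qquad (21)$$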

The above model is extremely fast. The likelihood is of the form y ~ N(Xβ, K̃θ), where K̃θ⁻¹ = (I − A)⊤D⁻¹(I − A) is sparse and A and D are obtained efficiently, in parallel, from (17). The parameter space of interest is {θ, β}, which is much smaller than for (20), where the latent spatial process was also unknown. While (21) does not separate the residuals into a spatial process and a measurement error process, one can still include a measurement error variance, or nugget, in (21) by absorbing it into θ. For example, suppose we wish to approximate (1) using (21). We could write the likelihood in (1) as N(y | Xβ, Kθ), where Kθ = σ²Rϕ + τ²In, Rϕ is a spatial correlation matrix and θ = {σ², ϕ, τ²}. These parameters then also feature in the derived NNGP covariance matrix K̃θ. We can predict the outcome at an arbitrary point ℓ by sampling from the posterior predictive distribution as follows: for each draw of {β, θ} from p(β, θ | y), we draw y(ℓ) from N(y(ℓ) | x⊤(ℓ)β + a⊤(ℓ)(yN(ℓ) − XN(ℓ)β), δ²(ℓ)), where yN(ℓ) and XN(ℓ) collect the observed outcomes and regressors at the neighbors of ℓ, and a(ℓ) and δ²(ℓ) are computed from Kθ as in (19). Note, however, that there is no latent smooth process w(ℓ) in (21), so inference on the latent spatial process is precluded.
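One posterior predictive draw for the response model (21) can be sketched as follows, for a single posterior sample of (β, θ) with θ = {σ², ϕ, τ²}. The exponential correlation, the brute-force neighbor search and the convention of adding the nugget only at zero distance (which assumes distinct observed locations) are illustrative assumptions; in a full analysis this function would be called once per retained MCMC draw.

```python
import numpy as np

def predict_response(loc, x0, S, X, y, beta, sigma2, phi, tau2, m, rng):
    """One draw of y(l) under (21) for a single posterior sample of (beta, theta)."""
    def K(P, Q):
        dist = np.linalg.norm(P[:, None] - Q[None], axis=-1)
        # sigma^2 exp(-phi d) plus nugget tau^2 at zero distance (assumes distinct locations)
        return sigma2 * np.exp(-phi * dist) + tau2 * (dist == 0)
    nb = np.argsort(np.linalg.norm(S - loc, axis=1))[:m]     # N(l) among observed sites
    k_N = K(S[nb], loc[None])[:, 0]
    a = np.linalg.solve(K(S[nb], S[nb]), k_N)
    delta2 = K(loc[None], loc[None])[0, 0] - a @ k_N
    mean = x0 @ beta + a @ (y[nb] - X[nb] @ beta)
    return mean + np.sqrt(delta2) * rng.standard_normal(), mean, delta2

rng = np.random.default_rng(10)
n = 400
S = rng.uniform(size=(n, 2))
X = np.column_stack([np.ones(n), rng.normal(size=n)])
beta = np.array([1.0, 0.5])
y = X @ beta + rng.normal(size=n)
draw, mean, delta2 = predict_response(np.array([0.3, 0.7]), np.array([1.0, 0.2]),
                                      S, X, y, beta, sigma2=1.0, phi=5.0, tau2=0.2,
                                      m=10, rng=rng)
```

The predictive variance δ²(ℓ) always exceeds τ² here, because the nugget at a new location cannot be explained by neighboring observations.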

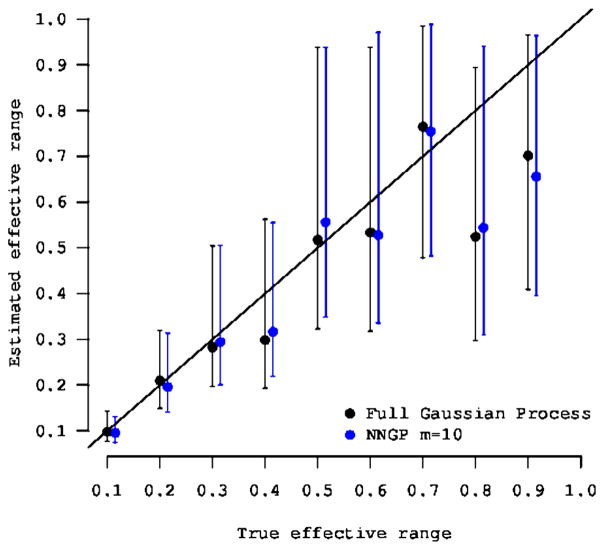

Likelihood computations in NNGP models usually involve O(nm³) flops. One does not need to store n × n matrices, only m × m matrices, which leads to storage ~nm². Substantial computational savings accrue because m is usually very small. Datta et al. (2016a) demonstrate that fitting NNGP models to the simulated data in Figure 2 with as few as m = 10 neighbors produces posterior estimates of the spatial surface indistinguishable from Figures 2(a) and 2(b). In fact, simulation experiments in Datta et al. (2016a) and Datta et al. (2016b) also affirm that m can usually be taken to be very small compared to r; there seems to be no inferential advantage to taking m greater than 15, even for datasets with over 10⁵ spatial locations. For example, Figure 5 shows the 95% posterior credible intervals for a series of 10 simulation experiments where the true effective range was fixed at values from 0.1 to 1.0 in increments of 0.1. Each dataset comprised 2500 points. Even with m = 10 neighbors, the credible intervals for the effective spatial range from the NNGP model were very consistent with those from the full GP model. Datta et al. (2016a) present simulations using the Matérn and other covariance functions revealing very similar behavior.

Figure 5.

95% credible intervals for the effective spatial range from an NNGP model with m = 10 and a full GP model fitted to 10 different simulated datasets with true effective range fixed at values between 0.1 and 1.0 in increments of 0.1.

Another important point to note is that K̃θ is not invariant to the order in which we define H(ℓ1) ⊆ H(ℓ2) ⊆ ⋯ ⊆ H(ℓr) (i.e., the topological order). Vecchia (1988) and Stein et al. (2004) both assert that the approximation in (18) is not sensitive to this ordering. This is corroborated by simulation experiments in Datta et al. (2016a), but a recent manuscript by Guinness (2016) has indicated sensitivity to the ordering in terms of model deviance. We conducted some preliminary investigations of the effect of the topological order. In one simple experiment, we generated data from the “true” model in (1) for 6400 spatial locations arranged over an 80 × 80 grid. The parameter β in (1) was set to 0, the covariance function was specified as Kθ(ℓi, ℓj) = σ² exp(−ϕ||ℓi − ℓj||), and the true parameter values are given in the second column of Table 2. Four different NNGP models corresponding to (21), with K̃θ derived from Kθ = σ²Rϕ + τ²I and Rϕ having elements exp(−ϕ||ℓi − ℓj||), were fitted to the simulated data. Each of these models was constructed with m = 10 nearest neighbors, but with a different ordering of the points ℓ = (x, y): sorted on the sum of the coordinates x + y, the maximum-minimum distance (MMD) ordering proposed by Guinness (2016), sorted on the x coordinate, and sorted on the y coordinate. Table 2 presents a comparison of these NNGP models. Irrespective of the ordering of the points, the inference with respect to parameter estimates and predictive performance is extremely robust and effectively indistinguishable across orderings. However, the posterior mean of the Kullback–Leibler divergence of these models from the true generating model revealed that the ordering proposed by Guinness (2016) indeed yields a lower divergence than the other three. Further explorations are currently being conducted to see how this behavior changes for more complex nonstationary models and in more general settings.

Table 2.

Posterior parameter estimates, the Kullback–Leibler divergence (KL-D) and the root mean square predictive error (RMSPE) for four NNGP models constructed from different topological orderings. From left to right, the four orderings are: sorted on the sum of the horizontal and vertical coordinates, maximum-minimum distance (MMD; Guinness, 2016), sorted on the horizontal coordinate, and sorted on the vertical coordinate.

| | True | Sorted coord (x + y) | MMD | Sorted x | Sorted y |
|---|---|---|---|---|---|
| σ | 1 | 0.79 (0.69, 1.04) | 0.80 (0.69, 1.02) | 0.80 (0.70, 1.05) | 0.83 (0.69, 1.08) |
| τ | 0.45 | 0.45 (0.44, 0.46) | 0.45 (0.44, 0.47) | 0.45 (0.44, 0.46) | 0.45 (0.44, 0.47) |
| ϕ | 5 | 8.11 (4.42, 11.10) | 7.63 (4.58, 10.97) | 8.01 (4.26, 11.18) | 7.12 (4.06, 11.03) |
| KL-D | – | 24.04022 | 13.88847 | 22.30667 | 21.59174 |
| RMSPE | – | 0.5278996 | 0.5278198 | 0.527912 | 0.527807 |
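The KL-D row can be related to a simpler, fixed-parameter calculation. The sketch below computes KL(N(0, K) ‖ N(0, K̃)) between the full covariance K = σ²Rϕ + τ²I and its NNGP counterpart K̃, written in the usual form K̃⁻¹ = (I − B)ᵀF⁻¹(I − B) with B strictly lower triangular and F diagonal. It is a hypothetical illustration only: it evaluates the divergence at fixed, illustrative parameter values on a small grid instead of averaging over the posterior, the direction of the divergence is our assumption, and it is not expected to reproduce the numbers in Table 2.

```python
import numpy as np

def grid_locations(side):
    """Regular side x side grid on the unit square (assumed domain)."""
    g = (np.arange(side) + 0.5) / side
    xx, yy = np.meshgrid(g, g)
    return np.column_stack([xx.ravel(), yy.ravel()])

def order_maxmin(locs):
    """Greedy maximum-minimum distance ordering starting near the center."""
    first = int(np.argmin(np.linalg.norm(locs - locs.mean(axis=0), axis=1)))
    order, min_d = [first], np.linalg.norm(locs - locs[first], axis=1)
    min_d[first] = -np.inf
    for _ in range(len(locs) - 1):
        nxt = int(np.argmax(min_d))
        order.append(nxt)
        min_d = np.minimum(min_d, np.linalg.norm(locs - locs[nxt], axis=1))
        min_d[nxt] = -np.inf
    return np.array(order)

def response_cov(locs, sigma2, phi, tau2):
    """K = sigma2 * R_phi + tau2 * I with R_phi[i, j] = exp(-phi * ||l_i - l_j||)."""
    d = np.linalg.norm(locs[:, None, :] - locs[None, :, :], axis=-1)
    return sigma2 * np.exp(-phi * d) + tau2 * np.eye(len(locs))

def kl_full_vs_nngp(locs, order, m, sigma2, phi, tau2):
    """KL(N(0, K) || N(0, K_tilde)), where K_tilde is the NNGP covariance built
    from K in the given order with the m nearest predecessors as neighbors."""
    L = locs[order]
    K = response_cov(L, sigma2, phi, tau2)
    n = len(L)
    B = np.zeros((n, n))
    F = np.empty(n)
    F[0] = K[0, 0]
    for i in range(1, n):
        d = np.linalg.norm(L[:i] - L[i], axis=1)
        nb = np.argsort(d, kind="stable")[:min(m, i)]
        b = np.linalg.solve(K[np.ix_(nb, nb)], K[nb, i])
        B[i, nb] = b
        F[i] = K[i, i] - K[i, nb] @ b
    I_B = np.eye(n) - B
    Q = I_B.T @ (I_B / F[:, None])                   # K_tilde^{-1} = (I - B)^T F^{-1} (I - B)
    _, logdet_K = np.linalg.slogdet(K)
    logdet_Q = -np.sum(np.log(F))                    # det(I - B) = 1: B is strictly lower triangular
    # trace(Q K) equals sum(Q * K) because K is symmetric
    return 0.5 * (np.sum(Q * K) - n - logdet_K - logdet_Q)

if __name__ == "__main__":
    locs = grid_locations(15)
    orders = {"x + y": np.argsort(locs.sum(axis=1), kind="stable"),
              "MMD": order_maxmin(locs),
              "x": np.argsort(locs[:, 0], kind="stable"),
              "y": np.argsort(locs[:, 1], kind="stable")}
    for name, o in orders.items():                   # illustrative parameter values
        print(name, kl_full_vs_nngp(locs, o, m=10, sigma2=1.0, phi=5.0, tau2=0.2))
```

When every predecessor is a neighbor, K̃ equals K and the divergence is zero, which is a useful sanity check on any such implementation.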

4 Discussion and future directions

The article has attempted to provide some insight into constructing highly scalable Bayesian hierarchical models for very large spatiotemporal datasets using low-rank and sparsity-inducing processes. Such models are increasingly being employed to answer complex scientific questions and analyze massive spatiotemporal datasets in the natural and environmental sciences. Any standard Bayesian estimation algorithm, such as Markov chain Monte Carlo and Hamiltonian Monte Carlo (see, e.g., Robert and Casella, 2004; Brooks et al., 2011; Gelman et al., 2013; Neal, 2011; Hoffman and Gelman, 2014), Integrated Nested Laplace Approximations (Rue et al., 2009), or Variational Bayes (see, e.g., Bishop, 2006), can be used to fit these models. The models ensure that the algorithmic complexity is ~n floating point operations (flops) per iteration, where n is the number of spatial locations. Storage requirements are also linear in n. Methods such as the multiresolution predictive process (Katzfuss, 2017) and the NNGP (Datta et al., 2016a) can scale up to datasets of the order of ~10⁶ spatial and/or temporal points without sacrificing richness in the model.
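To see why the per-iteration cost of the NNGP is linear in n, note that its density factorizes into n univariate Gaussian conditionals, each requiring only a solve with an (at most) m × m matrix. The NumPy sketch below evaluates the NNGP log-density of a latent vector w in O(nm³) flops and stores only an n × m index array. The exponential covariance and the helper names `build_neighbors` and `nngp_logpdf` are assumptions for illustration, and the brute-force neighbor search is a one-off preprocessing step done outside any MCMC loop.

```python
import numpy as np

def build_neighbors(locs, m):
    """One-off preprocessing, reused across MCMC iterations: row i holds the indices
    of the (at most) m nearest predecessors of point i, padded with -1.
    Brute-force search for clarity; storage is O(n m)."""
    n = len(locs)
    nbrs = np.full((n, m), -1, dtype=int)
    for i in range(1, n):
        d = np.linalg.norm(locs[:i] - locs[i], axis=1)
        k = min(m, i)
        nbrs[i, :k] = np.argsort(d, kind="stable")[:k]
    return nbrs

def nngp_logpdf(w, locs, nbrs, sigma2, phi):
    """log p(w) = sum_i log N(w_i | b_i' w_N(i), f_i) under an NNGP built from the
    exponential covariance sigma2 * exp(-phi * d). Each term needs one solve with an
    (at most) m x m matrix, so one evaluation costs O(n m^3) flops."""
    total = 0.0
    for i in range(len(w)):
        nb = nbrs[i][nbrs[i] >= 0]
        if nb.size == 0:
            mean, var = 0.0, sigma2
        else:
            pts = locs[nb]
            C_nn = sigma2 * np.exp(-phi * np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1))
            c_in = sigma2 * np.exp(-phi * np.linalg.norm(pts - locs[i], axis=1))
            b = np.linalg.solve(C_nn, c_in)            # kriging weights b_i
            mean, var = b @ w[nb], sigma2 - c_in @ b    # conditional mean and variance f_i
        total += -0.5 * (np.log(2.0 * np.pi * var) + (w[i] - mean) ** 2 / var)
    return total

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    locs = rng.uniform(size=(2000, 2))
    w = rng.normal(size=2000)                        # arbitrary test input, not a GP draw
    nbrs = build_neighbors(locs, m=10)
    print(nngp_logpdf(w, locs, nbrs, sigma2=1.0, phi=5.0))
```

When every predecessor is a neighbor, the product of conditionals is exactly the full GP density, which gives a convenient correctness check; with fixed m, doubling n only doubles the work.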

While the NNGP certainly seems to have an edge in scalability over the more conventional low-rank or fixed-rank models, it is premature to say whether its inferential performance will always surpass that of low-rank or fixed-rank models. For example, analyzing complex nonstationary random fields may pose challenges for constructing neighbor sets, as a simple distance-based definition of neighbors may prove to be inadequate. Multiresolution basis functions may be more adept at capturing nonstationarity, but may struggle with massive datasets. Dynamic neighbor selection for nonstationary fields, where neighbors are chosen based upon the covariance kernel itself, analogous to Datta et al. (2016b) for space-time covariance functions, may be an option worth exploring. Multiresolution NNGPs, where the residual from the NNGP approximation is modeled hierarchically (analogous to Katzfuss, 2017, for the predictive process), may also be promising in terms of full Bayesian inference at massive scales.
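A simplified version of covariance-based neighbor selection can be sketched as follows: instead of the m nearest predecessors in Euclidean distance, pick the m predecessors most strongly correlated with the current point under the chosen kernel. This is not the algorithm of Datta et al. (2016b); the kernel `aniso_exp_kernel` and its `scale` values are hypothetical, and a stationary anisotropic kernel stands in for a nonstationary one only to show where the two rules disagree.

```python
import numpy as np

def aniso_exp_kernel(a, b, scale=(10.0, 1.0)):
    """Hypothetical anisotropic exponential correlation: coordinate differences are
    multiplied by `scale` before taking the Euclidean norm, so with the default the
    correlation decays ten times faster along x than along y."""
    diff = (np.asarray(a) - np.asarray(b)) * np.asarray(scale)
    return np.exp(-np.linalg.norm(diff, axis=-1))

def euclidean_neighbors(locs, i, m):
    """Indices of the m predecessors of point i that are closest in Euclidean distance."""
    d = np.linalg.norm(locs[:i] - locs[i], axis=1)
    return np.argsort(d, kind="stable")[:min(m, i)]

def kernel_neighbors(locs, i, m, kernel):
    """Indices of the m predecessors of point i with the highest kernel correlation."""
    r = kernel(locs[:i], locs[i])
    return np.argsort(-r, kind="stable")[:min(m, i)]

if __name__ == "__main__":
    # Point 2 sits at the origin. Point 0 is nearer in Euclidean terms (0.1 along x),
    # but point 1 (0.3 along y) is more strongly correlated under the kernel.
    locs = np.array([[0.1, 0.0], [0.0, 0.3], [0.0, 0.0]])
    print(euclidean_neighbors(locs, 2, 1), kernel_neighbors(locs, 2, 1, aniso_exp_kernel))
    # prints [0] [1]
```

For an isotropic kernel that decreases monotonically with distance, the two rules select the same neighbors; they differ only when correlation is not a function of Euclidean distance alone.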

There remain other challenges in high-dimensional geostatistics. Here, we have considered geostatistical settings with very large numbers of locations and/or time points, but restricted our discussion to univariate outcomes. In practice, we often observe a q × 1 response vector y(ℓ) along with a set of explanatory variables X(ℓ), and a q × 1 multivariate GP, w(ℓ), is used to capture the spatial patterns beyond the observed covariates. We seek to capture associations among the variables as well as the strength of spatiotemporal association for each outcome. One specific geostatistical problem in ecology that currently lacks a satisfying solution is the joint species distribution model, where we seek to model a large collection of species (say, of order 10³) over a large collection of spatial sites (again, say, of order 10³).

The linear model of coregionalization (LMC) proposed by Matheron (1982) is among the most general models for multivariate spatial data analysis. Here, the spatial behavior of the outcomes is assumed to arise from a linear combination of independent latent processes operating at different spatial scales (Chilés and Delfiner, 1999). The idea resembles latent factor analysis (FA) models for multivariate data analysis (e.g., Anderson, 2003), except that in the LMC the number of latent processes is usually taken to be the same as the number of outcomes. Then, a q × q covariance matrix has to be estimated for each spatial scale (see, e.g., Lark and Papritz, 2003; Castrignanó et al., 2005; Zhang, 2007), where q is the number of outcomes. When q is large (e.g., q ≥ 5 with 300 spatial locations), obtaining such estimates is expensive. Schmidt and Gelfand (2003) and Gelfand et al. (2004) associate only a q × q triangular matrix with the latent processes. However, high-dimensional outcomes are still computationally prohibitive for these models.
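In its simplest form, the LMC sets w(ℓ) = A v(ℓ), where v(ℓ) collects q independent unit-variance processes, so that Cov(w(ℓ), w(ℓ′)) = Σₖ aₖaₖᵀ ρₖ(ℓ, ℓ′), with aₖ the k-th column of A. The NumPy sketch below assembles the resulting nq × nq covariance matrix, assuming exponential correlations ρₖ with hypothetical decay parameters and a hypothetical lower-triangular A in the spirit of Schmidt and Gelfand (2003). It makes the cost concrete: a dense Cholesky factorization of this matrix needs O((nq)³) flops.

```python
import numpy as np

def exp_corr(locs, phi):
    """n x n exponential correlation matrix exp(-phi * ||l_i - l_j||)."""
    d = np.linalg.norm(locs[:, None, :] - locs[None, :, :], axis=-1)
    return np.exp(-phi * d)

def lmc_covariance(locs, A, phis):
    """Covariance of the stacked vector (w(l_1), ..., w(l_n)), each w(l) of length q,
    under w(l) = A v(l) with independent unit-variance v_k having decay phis[k].
    Block (i, j) equals sum_k rho_k(l_i, l_j) a_k a_k^T."""
    q = A.shape[0]
    n = len(locs)
    Sigma = np.zeros((n * q, n * q))
    for k, phi in enumerate(phis):
        a_k = A[:, k:k + 1]                          # k-th column of A, shape (q, 1)
        Sigma += np.kron(exp_corr(locs, phi), a_k @ a_k.T)
    return Sigma

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    locs = rng.uniform(size=(30, 2))
    A = np.array([[1.0, 0.0, 0.0],                   # hypothetical lower-triangular A
                  [0.5, 0.8, 0.0],
                  [-0.3, 0.2, 0.6]])
    S = lmc_covariance(locs, A, phis=[1.0, 4.0, 10.0])
    print(S.shape)                                   # (n q) x (n q) = 90 x 90
```

At zero distance every ρₖ equals 1, so each diagonal block reduces to AAᵀ, the within-location covariance of the q outcomes.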

Spatial factor models (see, e.g., Lopes and West, 2004; Lopes et al., 2008; Wang and Wall, 2003) have been used to handle high-dimensional outcomes, but with a modest number of spatial locations. Dimension reduction is needed in two respects: (i) the length of the vector of outcomes, and (ii) the very large number of spatial locations. Latent variable (factor) models are usually used to address the former, while low-rank spatial processes offer a rich and flexible modeling option for dealing with a large number of locations. Ren and Banerjee (2013) have exploited these two ideas to propose a class of hierarchical low-rank spatial factor models, and also explored stochastic selection of the latent factors without resorting to complex computational strategies (such as reversible jump algorithms) by utilizing certain identifiability characterizations for the spatial factor model. Their model was designed to capture associations among the variables as well as the strength of spatial association for each variable. In addition, they addressed the common setting where not all the variables have been observed at all locations, which leads to spatial misalignment. The fully Bayesian approach effectively deals with spatial misalignment. However, this method is likely to suffer from the limited ability of low-rank models to scale to a very large number of locations. Promising ideas include using the multiresolution predictive process or the NNGP as a prior on the spatial factors.
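A low-rank spatial factor model can be sketched by combining the two dimension-reduction devices: w(ℓ) = Λ f(ℓ) with a q × r loading matrix Λ (r < q), and each of the r factors replaced by a predictive process built on k knots. The NumPy sketch below, with hypothetical knots, loadings and decay parameters, assembles the implied nq × nq covariance and checks that its rank is at most rk. It does not implement the identifiability constraints or the factor selection of Ren and Banerjee (2013).

```python
import numpy as np

def exp_corr(a, b, phi):
    """Exponential correlation exp(-phi * ||a_i - b_j||) between two point sets."""
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    return np.exp(-phi * d)

def pp_corr(locs, knots, phi):
    """Predictive-process correlation c(l, S*) C*^{-1} c(S*, l'); rank <= number of knots."""
    c = exp_corr(locs, knots, phi)                   # n x k
    C_star = exp_corr(knots, knots, phi)             # k x k
    return c @ np.linalg.solve(C_star, c.T)          # n x n

def factor_pp_covariance(locs, knots, Lam, phis):
    """nq x nq covariance of (w(l_1), ..., w(l_n)) stacked, with w(l) = Lam f(l)
    and the r factors f_j independent predictive processes with decay phis[j]."""
    q, r = Lam.shape
    n = len(locs)
    Sigma = np.zeros((n * q, n * q))
    for j in range(r):
        lam_j = Lam[:, j:j + 1]                      # q x 1 column of loadings
        Sigma += np.kron(pp_corr(locs, knots, phis[j]), lam_j @ lam_j.T)
    return Sigma

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    locs = rng.uniform(size=(50, 2))                 # n = 50 locations
    g = np.linspace(0.1, 0.9, 3)
    knots = np.array([[x, y] for x in g for y in g]) # k = 9 knots
    Lam = rng.normal(size=(6, 2))                    # q = 6 outcomes, r = 2 factors
    S = factor_pp_covariance(locs, knots, Lam, phis=[2.0, 6.0])
    print(S.shape, np.linalg.matrix_rank(S, tol=1e-8))
```

In an actual fit one would work with the rk-dimensional knot-level factors rather than this dense matrix; it is formed here only to expose the rank.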

Computational developments with regard to Markov chain Monte Carlo (MCMC) algorithms (see, e.g., Robert and Casella, 2004) have contributed enormously to the dissemination of Bayesian hierarchical models in a wide array of disciplines. Spatial modeling is no exception. However, the challenges for automated implementation of geostatistical model fitting and inference are substantial. First, expensive matrix computations are required that can become prohibitive with large datasets. Second, routines to fit unmarginalized models are less suited for direct updating using a Gibbs sampler and result in slower convergence of the chains. Third, investigators often encounter multivariate spatial datasets with several spatially dependent outcomes, whose analysis requires multivariate spatial models that involve demanding matrix computations. These issues have, however, begun to recede with the availability of relatively easy-to-use software packages in the R statistical computing environment, distributed via the Comprehensive R Archive Network (CRAN) (http://cran.r-project.org). Several packages that automate Bayesian methods for point-referenced data and diagnose convergence of MCMC algorithms are readily available from CRAN. Packages that fit Bayesian models include geoR, geoRglm, spTimer, spBayes, spate, and ramps.

In terms of the hierarchical geostatistical models presented in this article, spBayes offers users a suite of Bayesian hierarchical models for Gaussian and non-Gaussian univariate and multivariate spatial data as well as dynamic Bayesian spatiotemporal models. It focuses upon performance issues for full Bayesian inference: sampler convergence rate and efficiency using a collapsed Gibbs sampler, decreased sampler run-time by avoiding expensive matrix computations, and increased scalability to large datasets by implementing predictive process models. Beyond these general computational improvements for existing models, it analyzes data indexed both in space and time using a class of dynamic spatiotemporal models, and their predictive process counterparts, for settings where space is viewed as continuous and time is taken as discrete. Finally, there are modeling environments such as Nimble (de Valpine et al., 2017), which gives users enormous flexibility to choose algorithms for fitting their models, and Stan (Carpenter et al., 2017), which estimates Bayesian hierarchical models using Hamiltonian dynamics. The NNGP and the predictive process can also be coded fairly easily in Nimble and Stan.

Acknowledgments

The author wishes to thank the Editor-in-Chief (Professor Bruno Sansó) and the anonymous reviewers for very constructive and insightful feedback. The author also thanks Dr. Abhirup Datta, Dr. Andrew O. Finley and Ms. Lu Zhang for useful discussions. The work of the author was supported in part by NSF DMS-1513654 and NSF IIS-1562303.

References

- Anderson TW. An Introduction to Multivariate Statistical Analysis. 3rd ed. New York, NY: Wiley; 2003.

- Banerjee S, Carlin BP, Gelfand AE. Hierarchical Modeling and Analysis for Spatial Data. 2nd ed. Boca Raton, FL: Chapman & Hall/CRC; 2014.

- Banerjee S, Finley AO, Waldmann P, Ericcson T. Hierarchical spatial process models for multiple traits in large genetic trials. Journal of the American Statistical Association. 2010;105:506–521. doi: 10.1198/jasa.2009.ap09068.

- Banerjee S, Gelfand AE, Finley AO, Sang H. Gaussian predictive process models for large spatial datasets. Journal of the Royal Statistical Society, Series B. 2008;70:825–848. doi: 10.1111/j.1467-9868.2008.00663.x.

- Barry R, Ver Hoef J. Blackbox kriging: Spatial prediction without specifying variogram models. Journal of Agricultural, Biological and Environmental Statistics. 1996;1:297–322.

- Bevilacqua M, Gaetan C. Comparing composite likelihood methods based on pairs for spatial Gaussian random fields. Statistics and Computing. 2014:1–16.

- Bishop C. Pattern Recognition and Machine Learning. New York, NY: Springer-Verlag; 2006. doi: 10.1007/978-0-387-45528-0.

- Brooks S, Gelman A, Jones GL, Meng X-L. Handbook of Markov Chain Monte Carlo. Boca Raton, FL: CRC Press; 2011.

- Carpenter B, Gelman A, Hoffman M, Lee D, Goodrich B, Betancourt M, Brubaker M, Guo J, Li P, Riddell A. Stan: A probabilistic programming language. Journal of Statistical Software. 2017;76(1):1–32. doi: 10.18637/jss.v076.i01. https://www.jstatsoft.org/index.php/jss/article/view/v076i01.

- Castrignanó A, Cherubini C, Giasi C, Castore M, Mucci GD, Molinari M. Using Multivariate Geostatistics for Describing Spatial Relationships among some Soil Properties. ISTRO Conference, Brno; 2005.

- Chilés J, Delfiner P. Geostatistics: Modeling Spatial Uncertainty. New York, NY: John Wiley; 1999.

- Crainiceanu CM, Diggle PJ, Rowlingson B. Bivariate binomial spatial modeling of Loa Loa prevalence in tropical Africa. Journal of the American Statistical Association. 2008;103:21–37. doi: 10.1198/016214507000001409.

- Cressie N. Statistics for Spatial Data. Revised edition. Wiley-Interscience; 1993. doi: 10.1002/9781119115151.

- Cressie N, Johannesson G. Fixed rank kriging for very large data sets. Journal of the Royal Statistical Society, Series B. 2008;70:209–226.

- Cressie N, Shi T, Kang EL. Fixed rank filtering for spatio-temporal data. Journal of Computational and Graphical Statistics. 2010;19:724–745. doi: 10.1198/jcgs.2010.09051.

- Cressie NAC, Wikle CK. Statistics for Spatio-temporal Data. Wiley Series in Probability and Statistics. Hoboken, NJ: Wiley; 2011.

- Datta A, Banerjee S, Finley AO, Gelfand AE. Hierarchical nearest-neighbor Gaussian process models for large geostatistical datasets. Journal of the American Statistical Association. 2016a;111:800–812. doi: 10.1080/01621459.2015.1044091.

- Datta A, Banerjee S, Finley AO, Hamm NAS, Schaap M. Non-separable dynamic nearest-neighbor Gaussian process models for large spatiotemporal data with an application to particulate matter analysis. Annals of Applied Statistics. 2016b;10:1286–1316. doi: 10.1214/16-AOAS931.

- de Valpine P, Turek D, Paciorek C, Anderson-Bergman C, Temple Lang D, Bodik R. Programming with models: Writing statistical algorithms for general model structures with NIMBLE. Journal of Computational and Graphical Statistics. 2017;26:403–413. doi: 10.1080/10618600.2016.1172487.

- Du J, Zhang H, Mandrekar VS. Fixed-domain asymptotic properties of tapered maximum likelihood estimators. Annals of Statistics. 2009;37:3330–3361. doi: 10.1214/08-AOS676.

- Eidsvik J, Shaby BA, Reich BJ, Wheeler M, Niemi J. Estimation and prediction in spatial models with block composite likelihoods. Journal of Computational and Graphical Statistics. 2014;23:295–315.

- Emery X. The kriging update equations and their application to the selection of neighboring data. Computational Geosciences. 2009;13(3):269–280. doi: 10.1007/s10596-008-9116-8.