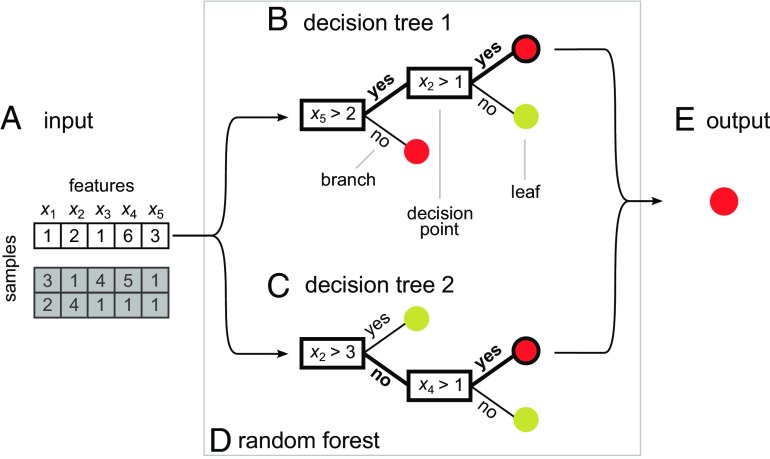

Fig. 1.

Individual decision trees vote for the class outcome in a toy example of a random forest. (A) The input dataset contains three samples, each described by five features (x1, x2, x3, x4, and x5). (B) A decision tree consists of branches that fork at decision points. Each decision point has a rule that assigns a sample to one branch or another depending on a feature value. The branches terminate in leaves belonging to either the red class or the yellow class. This decision tree assigns sample 1 to the red class. (C) Another decision tree, with different rules at each decision point. This tree also assigns sample 1 to the red class. (D) A random forest combines votes from its constituent decision trees, leading to a final class prediction. (E) The final output prediction is again the red class.
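The voting scheme in the caption can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the feature values, the thresholds, the rules inside `tree_b`, `tree_c`, and `tree_d`, and the third tree itself are hypothetical and are not taken from the figure. Only the structure (decision points, leaves, and a majority vote) mirrors what the caption describes.

```python
from collections import Counter

# Hypothetical feature values for one sample; the figure's actual values
# are not reproduced here.
sample_1 = {"x1": 0.2, "x2": 1.5, "x3": 0.7, "x4": 3.0, "x5": 0.1}


def tree_b(sample):
    """Illustrative tree: each `if` is a decision point, each return is a leaf."""
    if sample["x1"] < 0.5:
        return "red" if sample["x3"] > 0.4 else "yellow"
    return "yellow"


def tree_c(sample):
    """A second illustrative tree with different rules at its decision points."""
    if sample["x2"] > 1.0:
        return "red"
    return "yellow" if sample["x5"] < 0.3 else "red"


def tree_d(sample):
    """A third illustrative tree (hypothetical) that disagrees with the other two."""
    return "yellow" if sample["x4"] > 2.0 else "red"


def forest_predict(trees, sample):
    """Collect one vote per tree and return the majority class with the raw votes."""
    votes = [tree(sample) for tree in trees]
    winner, _ = Counter(votes).most_common(1)[0]
    return winner, votes


prediction, votes = forest_predict([tree_b, tree_c, tree_d], sample_1)
print(votes, "->", prediction)
```

With these made-up rules, two trees vote red and one votes yellow, so the forest predicts red. Using an odd number of trees for a two-class problem avoids tied votes in this simple scheme.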