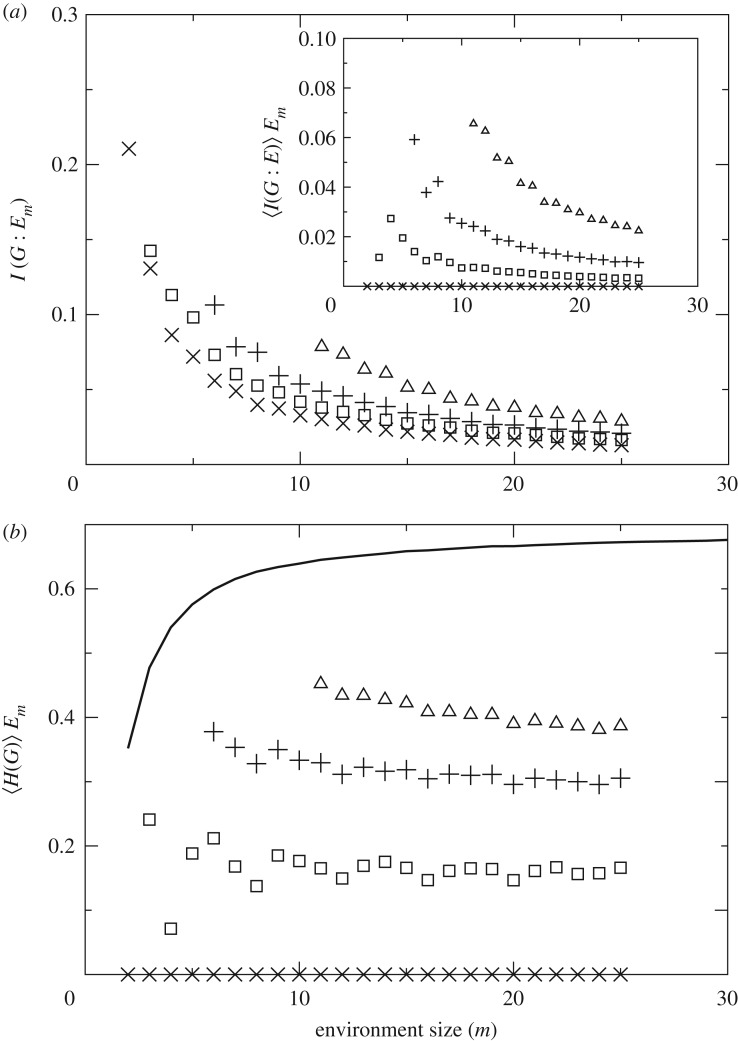

Figure 5.

Mutual information and entropy. Guessers with n=1 (crosses), n=2 (squares), n=5 (pluses) and n=10 (triangles) are presented. (a) I(G:E_m) and ⟨I(G:E)⟩_{E_m} (inset) quantify the different sources of information that allow more complex guessers to thrive in environments in which simpler life is not possible. (b) The entropy of a guesser's message given its environment seems roughly constant in these experiments despite the growing environment size. This suggests an intrinsic measure of complexity for guessers. Larger guessers look more random even if they might carry more meaningful information about their environment. The thick black line represents the average entropy of the environments (which approaches log(2)), against which the entropy of the guessers can be compared.
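For readers who want to see what the plotted quantities measure, the following is a minimal sketch of plug-in (frequency-count) estimates of entropy, conditional entropy, and mutual information for paired bit sequences. It is not the estimator used to produce the figure: the function names, the toy sequences, and the choice of natural logarithms (so that a fair bit has entropy log(2), as in the caption) are all assumptions made for illustration.

```python
import math
from collections import Counter


def entropy(symbols):
    """Empirical Shannon entropy, in nats, of a sequence of hashable symbols."""
    counts = Counter(symbols)
    total = len(symbols)
    return -sum((c / total) * math.log(c / total) for c in counts.values())


def conditional_entropy(g, e):
    """H(G|E) = H(G,E) - H(E), estimated from paired samples."""
    return entropy(list(zip(g, e))) - entropy(e)


def mutual_information(g, e):
    """I(G:E) = H(G) + H(E) - H(G,E), estimated from paired samples."""
    return entropy(g) + entropy(e) - entropy(list(zip(g, e)))


# Hypothetical toy data: a balanced binary environment and two guessers.
env = [0, 1, 0, 1, 1, 0, 1, 0]
perfect_guess = list(env)          # reproduces the environment bit by bit
constant_guess = [0] * len(env)    # always emits the same bit
```

In this toy case the perfect guesser shares all log(2) nats per bit with the environment and has zero conditional entropy, while the constant guesser shares none. Real guessers fall between these extremes, which is the range the figure explores.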