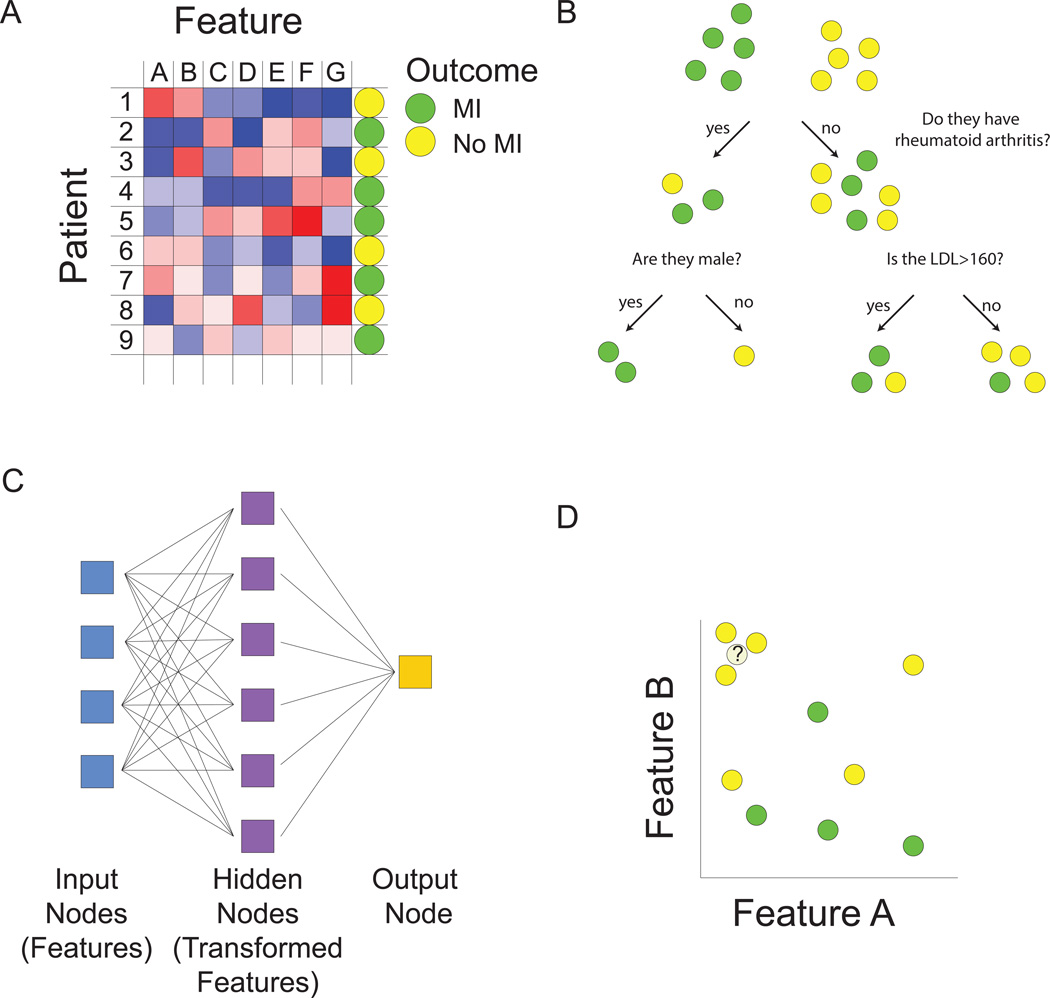

Figure 1.

Machine learning overview. A. Matrix representation of the supervised and unsupervised learning problems. We are interested in developing a model for predicting myocardial infarction (MI). The training data consist of a set of patients, each characterized by an outcome (a positive or negative training example), denoted by the circle in the right-hand column, and by the values of predictive features, denoted by the blue-to-red coloring of the squares. We seek to build a model that predicts the outcome from some combination of the features. Multiple types of functions can be used to map features to outcome (B–D). Machine learning algorithms find the values of the model's free parameters that minimize training error, measured as the difference between the values predicted by the model and the actual values. In the unsupervised learning problem, we ignore the outcome column and group patients together based on similarities in their feature values. B. Decision trees map features to outcome. At each node, or branch point, training examples are partitioned based on the value of a particular feature. Additional branches are introduced with the goal of completely separating positive from negative training examples. C. Neural networks predict outcome from transformed representations of the features. A hidden layer of nodes integrates the values of multiple input nodes (raw features) to derive transformed features. The output node then uses the values of these transformed features to predict outcome. D. The k-nearest neighbor algorithm assigns a class based on the classes of the most similar training examples. The distance between patients is computed by comparing their multidimensional vectors of feature values. In this example, which has only two features, the unknown data instance would be assigned the “no MI” class if we consider the outcome classes of its three nearest neighbors (k = 3).
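The k-nearest neighbor rule in panel D can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the method used to produce the figure: distance is taken to be Euclidean (the caption only says feature vectors are compared), the class is decided by a simple majority vote among the k = 3 closest patients, and the two-feature training set and the query patient are hypothetical toy values invented for illustration.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two patients' feature vectors (assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train_X, train_y, query, k=3):
    """Return the majority class among the k training examples closest to `query`."""
    # Rank training patients by their distance to the unknown patient.
    order = sorted(range(len(train_X)), key=lambda i: euclidean(train_X[i], query))
    nearest_labels = [train_y[i] for i in order[:k]]
    # Majority vote over the outcome classes of the k nearest neighbors.
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical toy data: two features per patient, outcome "MI" or "no MI".
train_X = [(1.0, 1.2), (1.5, 1.8), (5.0, 5.5), (6.0, 5.0), (1.2, 0.8), (5.5, 6.1)]
train_y = ["no MI", "no MI", "MI", "MI", "no MI", "MI"]

# Hypothetical unknown patient; its three nearest neighbors are all "no MI".
print(knn_predict(train_X, train_y, (1.3, 1.1), k=3))  # → no MI
```

Because kNN stores the training examples and defers all computation to prediction time, it has no free parameters to fit beyond the choice of k and the distance metric. In practice, features are usually rescaled to comparable ranges first, since otherwise a feature measured in large units would dominate the distance.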