Abstract

Purpose

The present study aimed to compare acoustic models of speech intelligibility in individuals with the same disease (Parkinson's disease [PD]) and presumably similar underlying neuropathologies but with different native languages (American English [AE] and Korean).

Method

A total of 48 speakers from the 4 speaker groups (AE speakers with PD, Korean speakers with PD, healthy English speakers, and healthy Korean speakers) were asked to read a paragraph in their native languages. Four acoustic variables were analyzed: acoustic vowel space, voice onset time contrast scores, normalized pairwise variability index, and articulation rate. Speech intelligibility scores were obtained from scaled estimates of sentences extracted from the paragraph.

Results

The findings indicated that the multiple regression models of speech intelligibility were different in Korean and AE, even with the same set of predictor variables and with speakers matched on speech intelligibility across languages. Analysis of the descriptive data for the acoustic variables showed the expected compression of the vowel space in speakers with PD in both languages, lower normalized pairwise variability index scores in Korean compared with AE, and no differences within or across languages in articulation rate.

Conclusions

The results indicate that the basis of an intelligibility deficit in dysarthria is likely to depend on the native language of the speaker and listener. Additional research is required to explore other potential predictor variables, as well as additional language comparisons to pursue cross-linguistic considerations in classification and diagnosis of dysarthria types.

Reduced speech intelligibility is a common result of dysarthria regardless of its associated disease or neuropathology. One of the primary questions in the field has been how the speech subsystems (e.g., respiratory, phonatory, resonatory, articulatory), independently and in combination, contribute to reduced speech intelligibility. This question has motivated previous investigators to better understand the dysarthrias in relation to the underlying neuropathophysiology. The reasoning is that such understanding will point to optimal diagnosis and management decisions by showing unique associations between specific neuropathologies and specific subsystems of the speech production mechanism (Duffy, 2013; Kim, Kent, & Weismer, 2011).

The primary classification system for dysarthria—the Mayo Clinic system—was first described by Darley, Aronson, and Brown (1975) and later revised by Duffy (1995, 2005, 2013). This system is based on the reasoning expressed above, that there are unique links between underlying neuropathologies and specific physiological and acoustic deficits in the speech of persons with dysarthria. The Mayo Clinic categorization system for dysarthria has been criticized on both theoretical and empirical grounds (e.g., Kim, Kent, et al., 2011), but it remains a staple of clinical diagnostics and grouping criteria in studies of dysarthria.

One little-studied problem with the Mayo Clinic classification system is the absence of listener variables in both the classification of type of dysarthria and the magnitude of specific perceptual impressions of speech production. Monsen (1983) showed three decades ago that listener experience with the speech of persons with hearing impairment made a difference in speech intelligibility of the same speakers. In dysarthria, listener variables are known to affect speech perception, from the simplest level of phoneme identification (Kim, 2015) to the more sophisticated processes of lexical access for speech recognition (Borrie, 2015; Liss, Utianski, & Lansford, 2013) to global estimates of speech intelligibility (Lansford, Borrie, & Bystricky, 2016). A listener variable that has not been systematically explored is that of the native language of listeners (and the speakers to whom they are listening). This is important because the Mayo classification system was developed exclusively with speakers of American English (AE). Although the phonetic and rhythmic differences across languages suggest that many of the criteria for classification of a particular dysarthria in AE may not apply to dysarthria in different languages, the Mayo system is routinely used to classify speakers in studies of dysarthria in languages other than English (e.g., Mandarin Chinese: Jeng, Weismer, & Kent, 2006; Cantonese Chinese: Ma, Whitehill, & So, 2010; Whitehill & Ciocca, 2000; Whitehill, Ma, & Lee, 2003; Japanese: Nishio & Niimi, 2001; German: Ziegler, 2002). The Mayo classification categories may even be used to test hypotheses concerning their utility in differentiating the motor speech disorder in two disease types (French: Ozsancak et al., 2006).

To the best of our knowledge, only one study has explicitly addressed the possibility that variables affected in dysarthria may depend on the native language of speakers and listeners. Hartelius, Theodoros, Cahill, and Lillvik (2003) studied the perceptual impressions of connected speech obtained from native speakers of Swedish and Australian English who had been diagnosed with multiple sclerosis. Perceptual dimensions as described by Darley et al. (1975) were scaled by two native-language “expert” listeners in both languages. By obtaining the scalings for speech samples from both languages and all listeners, Hartelius et al. hoped to identify which speech characteristics of the motor speech disorder associated with multiple sclerosis were common to both languages and which might be unique to the individual languages. The descriptive findings indicated some shared perceptual characteristics between the two languages and some unique characteristics. Hartelius et al. did not perform an analysis of how the scaled dimensions related to an overall estimate of speech intelligibility.

An experimental approach to discovering links between speech physiological and/or acoustic variables and perceptual ratings of speech dimensions or overall speech intelligibility scores is to gather the relevant measures and construct bivariate or multivariate regression models of the data. Such models can introduce listener characteristics as a potential source of variance. For example, native-language speech perception strategies have been explored in second language learners' perception of phonetic contrasts in the language being learned (see review in Escudero & Boersma, 2004). Speech perception strategies have also been examined in the perception of speech produced by persons with dysarthria. Among speakers and listeners of AE, it is clear that language-conditioned listening strategies exert a significant influence on the perception of dysarthric speech (for speakers and listeners of the same language: Liss, Spitzer, Caviness, & Adler, 1998, 2000).

Following the findings of Liss et al. (1998, 2000) and work on phonetic perception in second language learning, it seems logical to extend the influence of listener variables on speech intelligibility to different native languages. In regression models that account for variance in speech intelligibility scores (or specific perceptual dimensions), the weights of the same predictor variable (such as the size of the acoustic vowel space [AVS]) should be expected to vary depending on language; in some cases, a variable that makes a significant contribution to speech intelligibility in one language may not in another language. The present study is a first attempt to study this important aspect of understanding the link between a disease's effect on the speech mechanism and language-specific, perceptual aspects of dysarthria.

A well-designed, cross-linguistic study of the effect of language on predictive models of speech intelligibility in dysarthria ideally requires motivation, selection, and control of key categorical variables, measures for use in the statistical model, and properly chosen speech materials and listening tasks. In the present study, the categorical variables were neurological disease/dysarthria type (Parkinson's disease [PD] vs. neurological health) and native language (Korean vs. AE) of speakers and listeners. The measures used in the regression prediction model included size of the AVS, voice onset time (VOT) “space,” normalized pairwise variability index (nPVI-V), and articulation rate (AR). Speech materials for both languages were reading passages, and the listening task was scaled intelligibility on a 10-point equal-appearing interval scale.

Disease/Dysarthria Type

The present study addresses the question of possible cross-linguistic influences on intelligibility in persons with dysarthria by studying Korean and American speakers with PD and age-matched controls. Speakers with PD and dysarthria typically have a weak voice, reduced prosody, and imprecise consonants; the global impression of the speech disorder is referred to as hypokinetic dysarthria (Duffy, 2013). Speech production of persons with PD has been well studied (Goberman & Elmer, 2005; Ludlow, Connor, & Bassich, 1987; Metter & Hanson, 1986; Skodda, Visser, & Schlegel, 2011), and the prevalence of PD made it likely that a sufficient number of speakers could be recruited in both language groups for this initial study.

Native Language of Speakers and Listeners and Measured Variables

Korean and AE were chosen for this first examination of cross-linguistic influences on speech intelligibility in dysarthria. The choice of these languages was motivated by both practical and theoretical reasons. The practical reason was the availability of a database of Korean-speaking persons with PD and a diagnosis of hypokinetic dysarthria. The theoretical reasons concerned the different phonological and rhythmic structures of the two languages, which led to hypotheses of the differential role of specific measures in contributing to a speech intelligibility deficit in dysarthria.

For example, many studies have demonstrated moderate correlations between size of the AVS and speech intelligibility, not only in dysarthria but also in other speech disorders. In dysarthria, the size of the AVS is typically smaller than in neurologically healthy speakers (Kim, Kent, et al., 2011; Liu, Tsao, & Kuhl, 2005). A larger impact of reduced vowel space on intelligibility might be expected in AE, as compared to Korean, because of the larger vowel inventory in AE. The vowel systems of AE and Korean differ most specifically in the smaller number of monophthongs and the absence of lax-tense contrasts in Korean (Shin, Kiaer, & Cha, 2013). Therefore, it is a reasonable supposition that the compression of the vowel space often observed in dysarthria is likely to affect intelligibility less in Korean because the presumed loss of contrastivity among vowels within the space is greater in the denser vowel space of AE.

Another phonetic difference between Korean and AE is the density of the voicing space for stop consonants, at least as indexed by measures of VOT. VOT is of interest to this study because of (a) frequent reports of reduced VOT values in (mostly AE) speakers with PD, primarily for voiceless stops (e.g., Flint, Black, Campbell-Taylor, Gailey, & Levinton, 1992; Morris, 1989; Weismer, 1984) and (b) the difference between AE and Korean wherein AE has two voicing cognates (voiced, voiceless) and Korean has three (lax, aspirated, tense). Although other cues are known to contribute to stop voicing distinctions in AE and Korean (including burst intensity, f0 at the onset of the following vowel, and closure duration; Cho, Jun, & Ladefoged, 2002; Han & Weitzman, 1970; Klatt, 1975), VOT is a major acoustic measure associated with voicing distinctions (Auzou et al., 2000; Hixon, Weismer, & Hoit, 2013). Moreover, at least one study of patients with cerebellar disease has associated reduction of VOT distinctions with misperception of the voicing status of the stops (Ackermann & Hertrich, 1997). Misperceptions at the segmental level presumably have some effect on the overall impression of speech intelligibility. On the basis of the higher density of the stop voicing space in Korean, as compared with AE, it was expected that reduction of the three-way VOT contrast in Korean would show a stronger relation to intelligibility than reduction of the two-way contrast in AE.

A third measured variable reflects language-specific rhythmic characteristics. Korean is typically described as a syllable-timed language, whereas AE is described as stress-timed (Mok & Lee, 2008). In other words, successive syllables in a Korean utterance tend to have similar durations, whereas successive syllables in English tend to alternate between long and short durations. A measure of the rhythmic properties of connected speech is the Pairwise Variability Index (PVI; Low, Grabe, & Nolan, 2000). The PVI has been used to compare dialects and languages, as well as healthy and disordered speech. As expected, the PVI is lower in Korean than in AE (Arvaniti, 2012). For speakers with dysarthria, the PVI has been applied mostly as a descriptor distinguishing rhythmic patterns of various subtypes of dysarthria or as an acoustic measure relating to perceptual judgments of speech prosody or intelligibility (Kim, Kent, et al., 2011; Liss et al., 2009; Taylor et al., 2012). Several studies have reported that the PVI tends to be reduced in AE speakers with PD compared with healthy controls, indicating a tendency toward a syllable-timed rhythmic pattern (Kim, Kent, et al., 2011; Liss et al., 2009; Lowit, 2014). As pointed out by Liss et al. (2009), the impact of a specific rhythmic pattern on speech intelligibility among persons with hypokinetic dysarthria is likely to depend on the native language of the speaker and the listener, because differences in rhythmic structure across languages may determine the degree to which listeners gain access to spoken words. The present experiment uses the nPVI-V, which divides each difference between successive vocalic durations by the mean duration of that pair, to control for AR variation across utterances and speakers (Grabe & Low, 2002). We expected the nPVI-V to be reduced for speakers with PD compared with healthy controls within each language, but to be tied more systematically to speech intelligibility in AE than in Korean. This expectation was driven by the assumption that the nPVI-V had little room to change among Korean speakers with PD.

Finally, AR is a frequently measured characteristic of typical and dysarthric speech production (Ackermann & Hertrich, 1994; Kent et al., 2000; Kim, Kent, et al., 2011). This variable was selected for the current analysis because among most of the dysarthrias, hypokinetic dysarthria is unique in having ARs that are not characteristically slow (Canter, 1963; Goberman, Coelho, & Robb, 2005; Kim, Kent, et al., 2011; Solomon & Hixon, 1993). Our hypothesis was that whatever effects occur on AR as a result of PD, they would likely be equivalent across languages. There has been no attempt to directly compare the “typical” (normal) rate characteristics between the two languages; however, when ARs from individual studies of Korean and AE are compared, the rates for spontaneous speech appear to be similar (4–5 syllables/sec; Cha, 2001; Lee, 2010; Solomon & Hixon, 1993).

Speech Materials and Listening Task

Measures of speech intelligibility vary by the type of speech material and the listener's task in generating an intelligibility score. The present study used scaled estimates of the global speech intelligibility of a read paragraph. The rating scale was common to listeners in both languages, and the selected passages in the two languages are frequently used for clinical purposes. The global nature of the speech intelligibility rating was deemed a proper test of language-specific relations between the predictor measures (the acoustic measures) and the outcome measure (scaled speech intelligibility) because specific perceptual ratings (such as consonant precision, or normalcy of speech rhythm) might be linked too closely to a specific language feature.

Summary and Research Questions

Classification of dysarthria was originally developed on the basis of speakers of AE (Darley et al., 1975). Studies of dysarthria in languages other than English are available (e.g., Duez, 2009; Gentil, 1990, 1992; Jeng et al., 2006; Kobayashi, Fukusako, Anno, & Hirose, 1976; Ma et al., 2010; Nishio & Niimi, 2001; Stokes & Whitehill, 1996; Whitehill & Ciocca, 2000; Whitehill et al., 2003; Ziegler, 2002; Ziegler, Hartmann, & Hoole, 1993) and often use the category labels of the English-based Mayo Clinic classification system to identify the type(s) of dysarthria under study. The phonetic and prosodic (including rhythmic) differences among languages of the world make it likely that the Mayo classification labels may not be universally applicable; surprisingly, this issue has not been addressed in the literature, although some of the reports cited immediately above remark on the possibility of interactions between native language and characteristics of dysarthria. This logic can be extended to the case of statistical models of speech intelligibility deficits in dysarthria: The speech production variables making significant contributions to variance in speech intelligibility scores may, to some extent, be language dependent. However, the main focus of the studies cited above was on developing profiles of dysarthria in the language under study rather than comparing the (presumed) same dysarthria in two different languages. If the prediction models are different in different languages, therapy targets may depend as much on language-varying phonetic factors as on dysarthria classification.

The overall purpose of the present study was to address the question, "Does a small, fixed set of acoustic measures obtained in two different languages predict variation in speech intelligibility in the same or different ways among groups of speakers with PD?" Toward that end, acoustic measures (predictor variables) were selected on the basis of their status as frequently studied acoustic characteristics of speech in hypokinetic dysarthria, as well as the relevance of the measures to specific hypotheses concerning language-specific predictions. Three of the acoustic predictor variables (size of the AVS, VOT space for stops, and nPVI-V) permit language-specific hypotheses, whereas the fourth variable (AR) is not associated with a language-specific hypothesis and therefore functions as a control predictor. The specific hypotheses were as follows:

Variation in compression of the AVS would be more predictive of speech intelligibility variation in speakers with PD among AE speakers, compared with Korean speakers, because AE has a much denser vowel space.

Variation (compressions) of VOT differences between different voicing categories for stop consonants would be more predictive of speech intelligibility variation in speakers with PD among Korean speakers, compared with AE speakers, because the VOT contrast density is greater in Korean.

Variation of rhythmic contrast across successive syllables, as captured by the nPVI-V, would be more predictive of speech intelligibility variation in PD for AE, compared with Korean speakers, because Korean connected speech is roughly syllable-timed (successive syllables have roughly equal durations), whereas English syllable durations are more variable and tend to equalize in hypokinetic dysarthria (Liss et al., 1998).

Variation in AR among speakers with PD would predict speech intelligibility in roughly the same way for both languages because there is no indication of language-specific effects on AR, and in fact AR is often within a normal range among speakers with PD and hypokinetic dysarthria.

Method

Participants

A total of 48 speakers comprised the four speaker groups: (a) 12 AE speakers with PD, (b) 12 AE-speaking healthy controls, (c) 12 Korean speakers with PD, and (d) 12 Korean-speaking healthy controls. Each speaker group included seven male and five female speakers. AE-speaker groups were first recruited in and around the Baton Rouge, Louisiana, area, and Korean-speaking participants were carefully selected on the basis of age and speech severity from the archived Myongji Parkinson database, which was developed and located at Myongji University, Seoul, Republic of Korea. For both language groups, inclusion criteria were (a) diagnosis of PD, (b) no history of other neurological disease (e.g., stroke), (c) age between 30 and 75 years, and (d) good exemplars of hypokinetic dysarthria with perceived symptoms consistent with the Mayo Clinic classification system (e.g., monopitch, reduced stress, weak voice, short rushes of speech, imprecise consonants). Table 1 summarizes speaker information of the four speaker groups.

Table 1.

Speaker information for speakers with Parkinson's disease (PD) and healthy controls (HC) in each language, including age, years post onset, and speech intelligibility ratings (ranges, with medians in parentheses).

| Speaker information category | American English: PD | American English: HC | Korean: PD | Korean: HC |
|---|---|---|---|---|
| Age range and median (years) | 53–85 (60) | 49–85 (59) | 54–81 (63) | 52–72 |
| Years post onset | 4–24 (8) | — | 3–13 (6) | — |
| Speech intelligibility ratings (10-point interval scale) | 3.30–8.60 (6.6) | — | 3.15–8.80 (6.8) | — |

Procedures

All speakers were asked to read a paragraph. AE speakers read The Caterpillar passage (Patel et al., 2013), and Korean speakers read the Autumn passage (Kim, 2012). These paragraphs are widely used in studies of motor speech disorders as standard reading passages for their respective languages, and both are designed to sample the phonetic inventory of the language broadly in a variety of contexts. The passages allow equivalent measures across languages, including the four acoustic variables used in this study.

For both languages, speech samples were collected in a quiet room with a high-quality microphone (AKG Perception 120 USB, Los Angeles, CA; Shure KSM 27, Niles, IL) or a portable recorder (Tascam DR-07 MKII, Montebello, CA) at a sampling rate of 44.1 kHz with 16-bit quantization. Specific recording settings were not identical between the two languages. However, given the high quality of the audio from both recording sites and the robustness of our acoustic measures to such differences (e.g., measures involving absolute intensity were not included), our data are considered repeatable and reliable.

Speech Intelligibility Scores

To determine the speech intelligibility of the participants, graduate students enrolled in a master's program in speech-language pathology at each institution served as listeners. Six native speakers of the respective languages rated a set of speech stimuli on a 10-point equal-appearing interval scale (Gerratt, Kreiman, Antonanzas-Barroso, & Berke, 1993), where 1 corresponded to totally unintelligible and 10 to completely intelligible. Preselected anchor examples were not provided to the listeners. For the intelligibility task, 10 utterances, each having at least seven consecutive syllables with no intervening pauses, were randomly selected as speech stimuli from the passages described above.

Special effort was made to match speech intelligibility between language groups on a speaker-by-speaker basis. For this purpose, after the AE speakers' intelligibility was obtained, Korean speakers with PD were individually selected from the Korean database (> 40 speakers) to match speech intelligibility of the AE speakers.

Acoustic Measurements and Derived Measures

As previously introduced in the hypotheses, we selected four acoustic parameters based on two general considerations: (a) speech deficits associated with Parkinson's disease and (b) language differences between AE and Korean. Prior to acoustic analysis, the passage was partitioned into utterances, which served as the unit of acoustic analysis for AR and nPVI-V. In this study, an utterance was defined as a unit of speech between two interword pauses of 150 ms or longer (Tsao & Weismer, 1997; Yunusova, Weismer, Kent, & Rusche, 2005). Utterances of seven syllables or longer were selected for calculation of both nPVI-V and AR, considering the wide range of utterance lengths in the reading passages and the potential effect of length variation on the measures (e.g., Weismer & Cariski, 1984).
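The utterance rule described above (a new utterance begins after any interword pause of 150 ms or longer, and only utterances of seven or more syllables are analyzed) can be sketched as follows. This is a minimal illustration, not the study's procedure, which identified boundaries from waveform and spectrogram displays. It assumes word-level onset and offset times in milliseconds and per-word syllable counts are already available, for example from manual labeling; the `Word` record and the function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Word:
    """Hypothetical word-level label; times are integer milliseconds."""
    onset_ms: int
    offset_ms: int
    syllables: int


def split_utterances(words, min_pause_ms=150):
    """Group time-ordered words into utterances, starting a new utterance
    whenever the silent gap before a word is at least min_pause_ms."""
    utterances, current = [], []
    for word in words:
        if current and word.onset_ms - current[-1].offset_ms >= min_pause_ms:
            utterances.append(current)
            current = []
        current.append(word)
    if current:
        utterances.append(current)
    return utterances


def analyzable(utterances, min_syllables=7):
    """Keep only utterances containing at least min_syllables syllables."""
    return [u for u in utterances if sum(w.syllables for w in u) >= min_syllables]
```

Integer milliseconds are used so that a pause of exactly 150 ms is compared without floating-point rounding error.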

Acoustic Vowel Space

AVS was derived from measures of the first (F1) and second (F2) formant frequencies of three corner vowels shared by both languages (/a/, /i/, /u/). F1 and F2 were measured at the temporal midpoint of the vowel (Hillenbrand, Getty, Clark, & Wheeler, 1995). The number of available tokens varied across vowels, mainly because of the phonetic structure of the passages; however, at least seven tokens of each vowel were included in computing the average F1 and F2 values used to construct the AVS. AVS was computed using the following equation:

$$\mathrm{AVS} = \mathrm{ABS}\left\{ \frac{F1_i\,(F2_a - F2_u) + F1_a\,(F2_u - F2_i) + F1_u\,(F2_i - F2_a)}{2} \right\}$$

where ABS means absolute value, F1i refers to the F1 value of vowel /i/, and other subscripts follow the same convention (Kent & Kim, 2003). In consideration of the gender effect, AVS results were transformed to a log scale for statistical analysis; averaged raw frequency data are reported below.
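The AVS formula is the triangle-area (shoelace) expression applied to token-averaged formants. The sketch below illustrates it under stated assumptions: formant tokens are already measured as (F1, F2) pairs in Hz at the vowel midpoint, vowels are keyed `"i"`, `"a"`, and `"u"`, and the log transform is base 10 (the text does not state the base). The function names are illustrative, and no gender adjustment beyond the log transform is modeled.

```python
import math
from statistics import mean


def mean_formants(tokens):
    """Average (F1, F2) pairs, in Hz, measured at vowel midpoints."""
    return mean(f1 for f1, _ in tokens), mean(f2 for _, f2 in tokens)


def triangular_avs(f1_i, f2_i, f1_a, f2_a, f1_u, f2_u):
    """Area (Hz^2) of the /i/-/a/-/u/ triangle in the F1-F2 plane."""
    return abs((f1_i * (f2_a - f2_u)
                + f1_a * (f2_u - f2_i)
                + f1_u * (f2_i - f2_a)) / 2)


def avs_from_tokens(tokens_by_vowel, min_tokens=7):
    """Return (raw AVS in Hz^2, log10 AVS) from per-vowel token lists,
    requiring at least min_tokens tokens of each corner vowel."""
    for vowel in ("i", "a", "u"):
        if len(tokens_by_vowel[vowel]) < min_tokens:
            raise ValueError(f"fewer than {min_tokens} tokens of /{vowel}/")
    f1_i, f2_i = mean_formants(tokens_by_vowel["i"])
    f1_a, f2_a = mean_formants(tokens_by_vowel["a"])
    f1_u, f2_u = mean_formants(tokens_by_vowel["u"])
    area = triangular_avs(f1_i, f2_i, f1_a, f2_a, f1_u, f2_u)
    return area, math.log10(area)
```

Averaging tokens before computing the area yields one AVS value per speaker, consistent with the single AVS score per individual described in the text.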

VOT

Measurement of VOT was limited to word-initial (AE) or eojeol-initial (Korean) position, given the difficulty of measuring VOT in noninitial positions. In Korean, an eojeol (word phrase) is a sequence of one or more syllables that functions as the spacing unit in writing. From the passages, a total of 43 word-initial (AE) and 50 eojeol-initial (Korean) stops were available for VOT analysis. VOT was measured as the interval from the stop burst to the first glottal pulse of the following vowel (Auzou et al., 2000). Particularly when a stop occurred after the first word (syllable) of a breath group, the onset and offset of VOT were identified carefully by examining both waveform and spectrogram displays.

Computation of the VOT contrast scores requires additional explanation. In this study, the contrast score was calculated as the mean, across three places of articulation (bilabial, alveolar, and velar), of the VOT ratio of cognate pairs (e.g., voiceless/voiced). For Korean, because of the additional cognate, the ratios for two pairs (tense-lax and lax-aspirated) were summed. Given the statistical redundancy, the third pair (i.e., tense-aspirated) was not included in the contrast score. Note that, because of this computation, VOT contrast scores are greater for Korean than for AE in both speakers with PD and healthy controls; for this reason, VOT contrast scores were not compared between the two languages (see Results and Discussion).
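The contrast score can be sketched as below. The text does not state the direction of each ratio; this sketch assumes the longer-lag category is divided by the shorter-lag one (voiceless/voiced for AE; lax/tense and aspirated/lax for Korean). Applied to the group means in Table 5, this reading gives values within about 0.01 of the Table 3 means (3.06, 2.72, 4.51, and 4.22 for AE-HC, AE-PD, KR-HC, and KR-PD), although in the study the score was computed per speaker from that speaker's averaged VOTs. Dictionary keys and function names are illustrative.

```python
from statistics import mean

PLACES = ("bilabial", "alveolar", "velar")


def vot_contrast_ae(vot):
    """Mean over places of the voiceless/voiced VOT ratio.
    vot[place] maps "voiced" and "voiceless" to a mean VOT in ms."""
    return mean(vot[p]["voiceless"] / vot[p]["voiced"] for p in PLACES)


def vot_contrast_korean(vot):
    """Mean over places of (lax/tense + aspirated/lax) VOT ratios; the
    redundant tense-aspirated pair is omitted, as described in the text."""
    return mean(vot[p]["lax"] / vot[p]["tense"]
                + vot[p]["aspirated"] / vot[p]["lax"]
                for p in PLACES)


# Usage with the AE-HC group means from Table 5 (a group-level illustration only)
ae_hc = {
    "bilabial": {"voiced": 14.51, "voiceless": 52.16},
    "alveolar": {"voiced": 19.34, "voiceless": 61.74},
    "velar": {"voiced": 29.89, "voiceless": 71.44},
}
print(round(vot_contrast_ae(ae_hc), 2))  # 3.06
```

Because the Korean score sums two ratios per place while the AE score uses one, the two scales differ by construction, which is why the text does not compare them across languages.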

nPVI-V

To compute nPVI-V, successive vowel durations were measured within utterances at least seven syllables long, excluding the final vowel of each utterance. The nPVI-V was computed as follows (Low et al., 2000):

$$\text{nPVI-V} = 100 \times \frac{1}{m-1} \sum_{k=1}^{m-1} \left| \frac{d_k - d_{k+1}}{(d_k + d_{k+1})/2} \right|$$

where m is the number of vowels in an utterance and d_k is the duration of the kth vowel.
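The nPVI-V formula translates directly into code. In this minimal sketch, each utterance is a list of measured vowel durations (any time unit, since the measure is normalized); the final vowel is dropped before computing the index, so m counts the retained vowels. Treating one vowel per syllable for the seven-syllable minimum is an assumption, and the function names are illustrative.

```python
def npvi(durations):
    """Normalized PVI: 100 times the mean, over successive pairs, of
    |d_k - d_{k+1}| / ((d_k + d_{k+1}) / 2)."""
    m = len(durations)
    if m < 2:
        raise ValueError("need at least two vocalic durations")
    total = sum(abs(d1 - d2) / ((d1 + d2) / 2)
                for d1, d2 in zip(durations, durations[1:]))
    return 100 * total / (m - 1)


def utterance_npvi_v(vowel_durations, min_syllables=7):
    """nPVI-V for one utterance, excluding its final vowel. Returns None for
    utterances shorter than min_syllables (one vowel per syllable assumed)."""
    if len(vowel_durations) < min_syllables:
        return None
    return npvi(vowel_durations[:-1])


# Strict long-short alternation gives a high index; equal durations give 0.
print(round(utterance_npvi_v([100, 50, 100, 50, 100, 50, 200]), 1))  # 66.7
print(npvi([80, 80, 80]))  # 0.0
```

Dividing each pairwise difference by the pair's mean duration is what makes the index insensitive to overall speaking rate, which is the reason the text gives for using nPVI-V rather than the raw PVI.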

AR

AR was measured by visually identifying the onset and offset of each utterance from waveform and spectrogram displays to allow measurement of utterance duration. AR was then calculated as the number of syllables produced per second (syl/s), by dividing the total number of syllables by utterance duration.
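As a small worked illustration of the AR calculation, the sketch below divides syllable count by utterance duration and then averages across a speaker's utterances. It assumes utterance onsets and offsets in seconds and syllable counts are already available. Averaging per speaker is an assumption made for illustration, since the text states only that multiple AR values were derived per speaker.

```python
from statistics import mean


def articulation_rate(n_syllables, onset_s, offset_s):
    """Syllables per second over one utterance (onset to offset, in s)."""
    duration = offset_s - onset_s
    if duration <= 0:
        raise ValueError("utterance offset must follow onset")
    return n_syllables / duration


def speaker_mean_ar(utterances):
    """Mean AR over a speaker's (n_syllables, onset_s, offset_s) utterances."""
    return mean(articulation_rate(*u) for u in utterances)


# 10 syllables in 2.0 s -> 5.0 syl/s; 9 syllables in 2.0 s -> 4.5 syl/s
print(speaker_mean_ar([(10, 0.5, 2.5), (9, 0.0, 2.0)]))  # 4.75
```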

Summary of Measures

A summary of the acoustic measures selected in the study, and of our expectations for each measure on the basis of speech characteristics of PD and linguistic differences between the two languages, is provided in Table 2. Because of the nature of the selected measures, one AVS score and one VOT contrast score were obtained for each speaker, whereas multiple nPVI-V and AR values were derived per speaker. In other words, the AVS and VOT contrast scores were calculated from formant values and VOTs averaged across tokens in the passage, and nPVI-V and AR were computed per utterance (breath group).

Table 2.

Summary of selected acoustic measures expected to reflect speech characteristics of Parkinson's disease (PD) and/or linguistic differences between American English (AE) and Korean: acoustic vowel space (AVS), voice onset time (VOT), normalized pairwise variability index for vocalic intervals (nPVI-V), and articulation rate (AR).

| Measure | Related characteristics of PD | Linguistic characteristics: AE | Expected weight (AE vs. Korean) | Linguistic characteristics: Korean |
|---|---|---|---|---|
| AVS | Reduced | 11 or 12 monophthongs | > | 7 monophthongs |
| VOT | Reduced | 2 cognates (voiced, voiceless) | < | 3 cognates (lax, aspirated, tense) |
| nPVI-V | Slightly reduced to normal | Stress-timed | > | Syllable-timed |
| AR | Normal to fast | Similar | = | Similar |

Acoustic measurements were made with the TF32 program (Milenkovic, 2005), and obvious errors in the automatically tracked formant trajectories were corrected manually. Reliability of acoustic measurements was checked by remeasuring approximately 10% of the data 3–5 months after the original measurements. Correlation coefficients for paired measures ranged from .91 to .99 for the four acoustic parameters, and the differences between the first and second measurements were all nonsignificant.

Statistical Analysis

The present study used two statistical approaches. First, we conducted a series of t tests to compare the four speaker groups (AE vs. Korean, PD vs. healthy controls), and, second, we performed stepwise multiple regression analysis to compare the degree of contribution of each acoustic parameter to estimated speech intelligibility.
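The text does not specify the software or the entry and removal criteria used for the stepwise regression. The sketch below is a simplified stand-in, not the authors' procedure: greedy forward selection that, at each step, adds the predictor giving the largest adjusted R² and stops when no candidate improves it. Conventional stepwise routines typically use F-test (p-value) thresholds for entry and may also remove previously entered predictors; neither is implemented here. It is written in plain Python so it runs without dependencies; in a real call the predictor columns would hold per-speaker values of the four acoustic measures, and the names used are placeholders.

```python
def _solve(a, c):
    """Solve the square system a @ b = c by Gauss-Jordan elimination
    with partial pivoting."""
    n = len(c)
    m = [row[:] + [ci] for row, ci in zip(a, c)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: predictors are collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for k in range(col, n + 1):
                    m[r][k] -= factor * m[col][k]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols_r2(columns, y):
    """R^2 of an ordinary-least-squares fit of y on the predictor columns
    plus an intercept, via the normal equations."""
    n = len(y)
    rows = [[1.0] + [col[i] for col in columns] for i in range(n)]
    p = len(rows[0])
    xtx = [[sum(r[j] * r[k] for r in rows) for k in range(p)] for j in range(p)]
    xty = [sum(r[j] * yi for r, yi in zip(rows, y)) for j in range(p)]
    beta = _solve(xtx, xty)
    fitted = [sum(b * x for b, x in zip(beta, r)) for r in rows]
    y_bar = sum(y) / n
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    return 1.0 - ss_res / ss_tot


def forward_select(predictors, y, min_gain=1e-6):
    """Greedy forward selection on adjusted R^2.
    predictors: dict mapping a name to a column of values (one per speaker).
    Returns (selected names in entry order, adjusted R^2 of final model)."""
    n = len(y)
    chosen, best_adj = [], 0.0  # intercept-only model: adjusted R^2 = 0
    remaining = list(predictors)
    while remaining:
        k = len(chosen) + 1  # number of predictors after adding one more
        if n - k - 1 <= 0:
            break  # too few observations for another predictor
        candidates = []
        for name in remaining:
            r2 = ols_r2([predictors[c] for c in chosen + [name]], y)
            adj = 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)
            candidates.append((adj, name))
        adj, name = max(candidates)
        if adj <= best_adj + min_gain:
            break
        chosen.append(name)
        remaining.remove(name)
        best_adj = adj
    return chosen, best_adj


# Hypothetical call:
# forward_select({"AVS": avs, "VOT": vot, "nPVI-V": npvi_v, "AR": ar}, intelligibility)
```

With only 12 speakers per group and four candidate predictors, the adjusted R² penalty matters: each added predictor must explain noticeably more variance to be retained.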

Results

Descriptive Data: Within and Across Languages

Descriptive statistics for the four acoustic variables are reported in Table 3 for the control and PD groups in the two languages. For AVS, log-transformed data are reported.

Table 3.

Descriptive summary of results (means and standard deviations) of the four acoustic variables for the four speaker groups: acoustic vowel space (AVS), voice onset time (VOT) contrast scores, normalized pairwise variability index for vocalic intervals (nPVI-V), and articulation rate (AR).

| Variables | AE-PD | AE-HC | KR-PD | KR-HC |
|---|---|---|---|---|
| AVS (in log) | 4.72 (0.34) | 5.21 (0.09) | 5.23 (0.25) | 5.48 (0.19) |
| VOT contrast scores | 2.72 (0.79) | 3.05 (0.47) | 4.22 (0.86) | 4.51 (0.58) |
| nPVI-V | 49.37 (18.82) | 51.71 (20.51) | 31.63 (8.04) | 36.07 (10.34) |
| AR (syl/s) | 4.84 (1.18) | 4.77 (0.94) | 5.25 (0.94) | 5.14 (0.90) |

Note. AE = American English; KR = Korean; PD = Parkinson's disease; HC = healthy controls; syl/s = syllables per second.

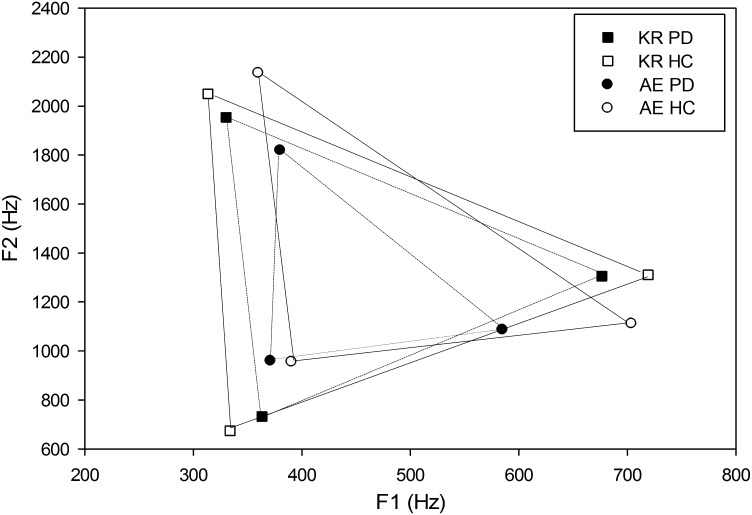

Within Language, Across Group

In both Korean and AE, AVS was greater in healthy controls compared with speakers with PD (see Figure 1). The t tests for independent groups showed that comparisons between healthy controls and speakers with PD, in both languages, were statistically significant (see Table 4). The smaller vowel spaces in speakers with PD were expected from previously published findings (Kim, Kent, et al., 2011; Skodda et al., 2011).

Figure 1.

Comparison of acoustic vowel space (men only) between the two languages, American English (AE) and Korean (KR), and the two speaking groups, speakers with Parkinson's disease (PD) and healthy controls (HC).

Table 4.

Summary of t test comparing the two speaker groups, speakers with Parkinson's disease (PD) and healthy controls (HC), within each language.

| Variable | English native: PD vs. HC | English native: mean difference | English native: p value | Korean native: PD vs. HC | Korean native: mean difference | Korean native: p value |
|---|---|---|---|---|---|---|
| AVS (in log) | PD < HC | 0.49 | <.01** | PD < HC | 0.25 | <.04* |
| VOT contrast scores | PD < HC | 0.33 | NS | PD < HC | 1.51 | .02* |
| nPVI-V | PD < HC | 2.34 | NS | PD < HC | 4.44 | <.01** |
| AR (syl/s) | PD > HC | 0.21 | NS | PD > HC | 0.11 | NS |

Note. AVS = acoustic vowel space; VOT = voice onset time; nPVI-V = normalized pairwise variability index for vocalic intervals; AR = articulation rate; NS = not significant; syl/s = syllables per second.

\* p < .05; \*\* p < .01.

VOT contrast scores were smaller for speakers with PD than for healthy controls in both Korean and AE; the difference was significant only among the Korean speakers (see Tables 3 and 4). In AE, the contrast between voiced and voiceless VOT has been reported either to be reduced in speakers with PD compared with controls (Morris, 1989; Weismer, 1984) or to be statistically equivalent between English-speaking groups (Fischer & Goberman, 2010). In Korean, there is evidence that the three-way VOT contrast is reduced for bilabial (Park, Sim, & Baik, 2005) and alveolar stops (Kang, Kim, Ban, & Seoung, 2009) in speakers with PD compared with healthy controls. Raw VOT values for the four speaker groups in the present study are given in Table 5.

Table 5.

Raw voice onset time data, means and standard deviations in ms, of the four speaker groups.

| Group | Bilabial voiced | Bilabial voiceless | Alveolar voiced | Alveolar voiceless | Velar voiced | Velar voiceless |
|---|---|---|---|---|---|---|
| AE-PD | 19.86 (6.53) | 45.60 (19.68) | 20.03 (10.34) | 54.81 (13.07) | 19.56 (6.27) | 61.46 (11.14) |
| AE-HC | 14.51 (3.16) | 52.16 (12.77) | 19.34 (5.45) | 61.74 (10.98) | 29.89 (2.07) | 71.44 (15.35) |

| Group | Bilabial tense | Bilabial lax | Bilabial aspirated | Alveolar tense | Alveolar lax | Alveolar aspirated | Velar tense | Velar lax | Velar aspirated |
|---|---|---|---|---|---|---|---|---|---|
| KR-PD | 17.60 (9.99) | 51.68 (19.13) | 86.02 (36.13) | 17.36 (7.91) | 43.35 (13.90) | 73.60 (37.63) | 24.96 (11.10) | 61.43 (19.56) | 86.56 (26.83) |
| KR-HC | 15.08 (5.06) | 49.37 (13.31) | 75.26 (22.86) | 15.29 (4.83) | 40.14 (11.03) | 79.56 (20.33) | 21.65 (8.24) | 52.85 (9.34) | 89.37 (29.23) |

Note. AE = American English; KR = Korean; PD = Parkinson's disease; HC = healthy controls.

In both languages, nPVI-V scores were greater in healthy controls than in speakers with PD (Table 3). Compared with the values reviewed by Arvaniti (2009), the nPVI-V values obtained for the AE speakers in the present study closely match those reported for British English, whereas the values for the current Korean speakers are similar to those reported for languages such as Mandarin and Japanese, which, like Korean, are often described as syllable- or mora-timed. The mean difference between healthy controls and speakers with PD was significant only among Korean speakers (see Table 4). As discussed below, this finding, coupled with the absence of a statistically significant group difference among AE speakers (i.e., controls vs. speakers with PD), is surprising.
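For readers unfamiliar with the index, the nPVI of Grabe and Low (2002) averages, over successive pairs of intervals, the absolute duration difference divided by the pair mean, scaled by 100; higher values mean more alternation between long and short intervals (stress-timed tendencies), and lower values mean more even durations. The sketch below is a minimal illustration that assumes vocalic intervals have already been segmented; the durations are hypothetical and are not data from this study.

```python
def npvi(durations):
    """Normalized pairwise variability index (nPVI) for a sequence of
    interval durations (e.g., successive vocalic intervals in ms)."""
    if len(durations) < 2:
        raise ValueError("nPVI needs at least two intervals")
    pairs = zip(durations[:-1], durations[1:])
    # Each successive pair contributes |d_k - d_(k+1)| divided by the pair mean.
    terms = [abs(a - b) / ((a + b) / 2.0) for a, b in pairs]
    return 100.0 * sum(terms) / len(terms)


# Hypothetical vocalic durations (ms): alternating long/short vs. nearly even.
alternating = [120, 60, 130, 55, 125, 60]
even = [90, 95, 88, 92, 90, 94]
print(round(npvi(alternating), 1), round(npvi(even), 1))
```

Because each difference is normalized by its local pair mean, the index is intended to be less sensitive to overall speaking rate than raw duration differences are.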

AR within both languages was slightly greater among speakers with PD compared with healthy controls, but neither of the differences was statistically significant. The mean ARs in Table 3 are somewhat higher than averaged reading ARs for several languages and dialects of AE reviewed by Jacewicz, Fox, O'Neill, and Salmons (2009), but they are not outside the range of some of these rates. In addition, several studies reviewed by Jacewicz et al. (2009), as well as their own work, reported reading ARs based on relatively short sentences, rather than paragraph reading. In fact, ARs reported for paragraph reading by Tsao and Weismer (1997) are, when averaged across their slow and fast talkers, very similar to rates reported in Table 3.
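Articulation rate is conventionally computed as syllables per second of speaking time with silent pauses removed, in contrast to speaking rate, which includes pauses. The sketch below illustrates that computation under an assumed pause criterion: the function name and the 0.15-s minimum pause duration are hypothetical choices for illustration, not the measurement procedure used in this study.

```python
def articulation_rate(n_syllables, total_duration_s, pauses_s, min_pause_s=0.15):
    """Syllables per second of speaking time, excluding silent pauses.

    Pauses shorter than min_pause_s are treated as part of speech; the
    0.15-s default is a hypothetical threshold, not the study's criterion.
    """
    excluded = sum(p for p in pauses_s if p >= min_pause_s)
    speaking_time = total_duration_s - excluded
    if speaking_time <= 0:
        raise ValueError("pauses cannot exceed the total duration")
    return n_syllables / speaking_time


# Hypothetical passage: 50 syllables in 12 s with three silent gaps.
rate = articulation_rate(n_syllables=50, total_duration_s=12.0, pauses_s=[0.5, 1.0, 0.1])
print(f"{rate:.2f} syl/s")  # 4.76 syl/s (the 0.1-s gap counts as speaking time)
```

The choice of pause threshold directly affects the result, so rates compared across studies (e.g., sentence vs. paragraph reading) are only comparable if the pause criteria are similar.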

Mean speech intelligibility among English speakers with PD was 6.6 on the 10-point rating scale, with a range across the 14 speakers of 3.3 to 8.6. The corresponding values for Korean speakers with PD were a mean of 6.8 and a range of 3.15 to 8.80 (see Table 1). As expected from the intelligibility matching described above, the difference between the two means was not statistically significant.

Across Language, Within Group

Statistical comparisons of acoustic variables across the two languages were performed only for the healthy controls, and only for the three variables for which the measurement value was directly comparable (as described above, the VOT contrast score in Korean is not directly comparable to the parallel score in English). Results indicated that AR was not significantly different between the two languages [t(22) = −1.25, p = .22]. However, Korean speakers had significantly greater AVS (in log) and significantly smaller nPVI-V when compared with AE speakers [t(22) = 4.80, p < .01; and t(22) = 5.48, p < .01, respectively]. These findings are consistent with previous reports of greater AVS for Korean compared with AE adults (e.g., Chung et al., 2012) and smaller nPVI-V values in languages with a timing structure like Korean (Arvaniti, 2009). Note the fairly large difference in the raw AVS between healthy speakers of Korean and AE, even though the vowel spaces are constructed from the same set of three shared vowels.
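A df of 22 is what a pooled-variance (Student's) t test yields for two independent groups of 12 speakers (12 + 12 − 2 = 22). The sketch below computes that statistic from group summary values. It is a minimal illustration that assumes equal variances, and the inputs in the example are hypothetical. Published t values are normally computed from the raw speaker data, so recomputing them from rounded table means and SDs may not reproduce them exactly.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t for two independent groups using a pooled variance.

    Returns (t, df), where df = n1 + n2 - 2.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    return (mean1 - mean2) / se, df


# Hypothetical groups of 12 speakers each.
t, df = pooled_t(10.0, 2.0, 12, 8.0, 2.0, 12)
print(f"t({df}) = {t:.2f}")
```

If the group variances differ markedly, Welch's unequal-variance t test, which uses an adjusted, non-integer df, would be the usual alternative.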

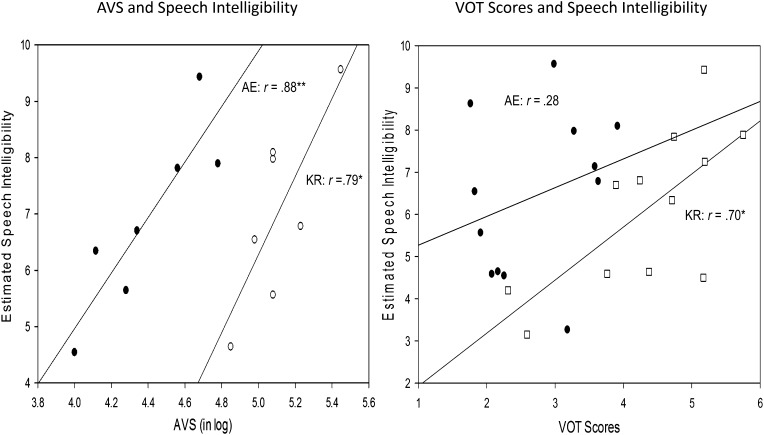

Significant Bivariate Correlations and Multiple Regression Analyses

Before we performed a stepwise regression to determine which simultaneous combinations of the acoustic variables made significant contributions to the variation in speech intelligibility scores, we computed within-language bivariate correlations across participants with PD between each of the acoustic variables and speech intelligibility. Among AE speakers with PD, only the correlation between AVS (log) and intelligibility was significant [r(12) = .69, p = .01]; for the Korean speakers, AVS (log) and the VOT contrast score were significantly correlated with speech intelligibility [r(12) = .81, p < .01; and r(12) = .77, p < .01, respectively] (see Figure 2).

Figure 2.

Results of regression analysis of acoustic vowel space (AVS, men only) and voice onset time (VOT) contrast scores against perceived speech intelligibility. Filled and unfilled circles indicate American English (AE) and Korean (KR) data, respectively. * = p < .05; ** = p < .01.

The stepwise multiple regression model among AE speakers with PD yielded AVS (log) as the single significant predictor of variation in speech intelligibility. This model accounted for 61.9% of the variance in speech intelligibility. The Korean regression model was a three-variable solution, with AVS, VOT, and AR accounting for 65.4%, 13.4%, and 10.9%, respectively, of the variation in speech intelligibility. The complete Korean model accounted for 89.7% of the variance in speech intelligibility. The regression results are summarized in Table 6.

Table 6.

Results of stepwise multiple regression analysis: predictive models of speech intelligibility in American English (AE) and Korean (KR).

| Group | Variables entered | R² | F | p | beta |
|---|---|---|---|---|---|
| AE | AVS | .619 | 15.590 | .003 | 4.408 |
| AE regression equation | Intelligibility score = −14.375 + (4.408 × AVS) | | | | |
| KR | AVS | .654 | 18.890 | .001 | 3.788 |
| | VOT | .788 | 16.745 | .001 | 0.963 |
| | AR | .897 | 23.114 | < .001 | −0.162 |
| KR regression equation | Intelligibility score = −11.495 + (3.788 × AVS) + (0.963 × VOT) − (1.62 × AR) | | | | |

Note. AVS = acoustic vowel space; VOT = voice onset time; AR = articulation rate. R² values for KR are cumulative across the three steps.
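The percentages of variance attributed to each Korean predictor in the text (65.4%, 13.4%, and 10.9%) are the successive differences between the cumulative R² values in Table 6. The short sketch below makes that arithmetic explicit. The helper name is hypothetical, and the only inputs are the cumulative R² values from the table.

```python
# Cumulative R^2 after each step of the Korean stepwise model (Table 6).
kr_steps = [("AVS", 0.654), ("VOT", 0.788), ("AR", 0.897)]


def r2_increments(steps):
    """Convert cumulative R^2 values into the variance added at each step."""
    out, previous = [], 0.0
    for name, r2 in steps:
        out.append((name, round(r2 - previous, 3)))
        previous = r2
    return out


inc = r2_increments(kr_steps)
print(inc)  # [('AVS', 0.654), ('VOT', 0.134), ('AR', 0.109)]
```

The increments add up to the final R² of .897, which is the 89.7% reported for the complete Korean model.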

Discussion

The overall purpose of the present study was to demonstrate proof of concept that an important component of an intelligibility deficit in dysarthria is the native language of the speaker and the listener. Toward that end, a single set of four acoustic predictors was used in two groups of patients with PD, one whose native language was Korean and the other whose native language was AE. Speech intelligibility scale values were obtained from listeners whose native language was the same as that of the speakers. The proof of concept is the finding that the multiple regression solutions are different in the two languages. In both languages, AVS made the largest contribution to the variance in speech intelligibility scores, but in English AVS was the only significant variable in the regression solution. In Korean, two additional variables, VOT contrast and AR, made unique, significant contributions to the regression model. Overall, the three significant variables in the Korean solution accounted for 89.7% of the variance in speech intelligibility scores, whereas the single-variable AE solution accounted for 61.9% of the variance. Because the acoustic predictor variables were common to the two languages and the distribution of speech intelligibility scores for speakers with PD was nearly identical across languages, the best explanation of different regression results in the two languages is likely to be found in the structural differences between Korean and English and how they affect listening strategies. It is in this sense that we regard native language as a listener variable.

These structural differences suggested language-specific predictions concerning the differential role of specific acoustic variables in accounting for variance in speech intelligibility scores. The predictions for two of the variables were tied to relative segmental contrast density in the two languages. The logic was that compression of the acoustic space for a particular sound class in a language with relatively higher contrast density would be more deleterious to scaled speech intelligibility compared with a language with lower density for that sound class (Miller, Lowit, & Kuschmann, 2014). The prediction that compression of VOT would have a greater impact on speech intelligibility among Korean speakers with PD, compared with AE speakers with PD, was supported by the current findings. In Korean, the VOT index made a significant, unique contribution to the variance of speech intelligibility scores, accounting for 13.4% of the variance; in AE, the VOT index was not part of the significant solution.

The hypothesis that compression of the vowel space in dysarthria would have different effects on speech intelligibility in Korean and AE was not supported by the current data; both effects were quite large and roughly equivalent in magnitude. AVS is a popular metric in dysarthria research, but its precise implications for vowel contributions to speech intelligibility deficits have yet to be worked out. Kim, Hasegawa-Johnson, and Perlman (2011) reviewed work on vowel perception and its relation to compression of the vowel space in dysarthria and noted that the available data did not permit a firm conclusion about the relationship between changes in vowel space area and the contrastivity of the vowels within a language's vowel system. Kim, Hasegawa-Johnson, et al. demonstrated, however, that for nine persons with spastic dysarthria, compression of the vowel space, defined by a perimeter connecting the three corner vowels /i/, /a/, and /u/, correlated fairly well with variation in speech intelligibility, in much the same way as an average index of acoustic contrast among six vowels did. These findings seem to support the assumption that compression of the corner vowel space is associated with a reduction of vowel contrasts within the vowel system (see also Liu et al., 2005). This assumption prompted the hypothesis, in the current investigation, that vowel space compression would affect speech intelligibility differently in Korean and AE. According to this hypothesis, equal compressions of the Korean and AE vowel spaces (up to a point) should have a greater effect on contrastivity within the denser AE vowel system, and hence a greater impact on speech intelligibility. This expectation was consistent with the previously reported tendency for the acoustic distinctiveness of vowels to predict both overall intelligibility and specific vowel identity in a variety of speakers with dysarthria (Lansford & Liss, 2014a). At least in the current findings, however, the expectation was not confirmed.
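For concreteness, a corner-vowel space of this kind is the area of the triangle whose vertices are the (F1, F2) values of /i/, /a/, and /u/. The sketch below computes that area with the shoelace formula. The function name and formant values are hypothetical. The base-10 log is an assumption, but it is consistent with the document's own numbers: log10 of the 162,123 Hz² AE control area cited in the next paragraph is about 5.21, which matches the AE-HC mean in Table 3.

```python
import math


def triangle_vowel_space(i, a, u):
    """Area (Hz^2) of the /i/-/a/-/u/ triangle in the F1 x F2 plane.

    Each vowel is an (F1, F2) pair in Hz; the area uses the shoelace formula.
    """
    (x1, y1), (x2, y2), (x3, y3) = i, a, u
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


# Hypothetical corner-vowel formants (Hz), for illustration only.
area = triangle_vowel_space(i=(300, 2300), a=(700, 1200), u=(300, 900))
print(area, round(math.log10(area), 2))  # 280000.0 5.45
```

Because the area depends only on the three corner vowels, it cannot directly register crowding among the interior vowels. That limitation is relevant to the contrast-density argument above.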

Two related questions can be raised concerning the roughly equivalent and dominant role of AVS in predicting speech intelligibility in both Korean and AE speakers with PD. First, why are the relative densities of the two vowel systems not reflected in the respective weights of the two multiple regression equations? Although the regression coefficient for AVS was larger in AE than in Korean, the similarity of the AVS effect is much more compelling than this difference in slope. One possibility is that the size of the vowel space is principally a reflection of the severity of the speech disorder, with this general index of articulatory reduction tied to variations in speech intelligibility. This was the claim made by Weismer, Jeng, Laures, Kent, and Kent (2001) in their discussion of the “acoustics of severity,” and it is partially supported by data reported in Lansford and Liss (2014b). To view variations in AVS as primarily reflecting speech disorder severity is not to deny the importance of “local” acoustic contrastivity among vowels in determining speech intelligibility. Rather, it is to claim that the accumulated contribution of acoustic contrastivity among possible vowel pairs to overall speech intelligibility scores is embedded within the more dominant contribution of overall speech severity (i.e., speech intelligibility). In the present investigation, therefore, the dominant and roughly equivalent weights of AVS in both the Korean and AE regression solutions make sense, because speech severity among the speakers with PD was matched across languages and extended across a large range of the intelligibility scale (see Table 1).

Second, an additional aspect of the current data that supports the notion of AVS as a general index of speech severity, rather than a straightforward determinant of contrast viability, is the relative size of the vowel space across the two languages for both healthy controls and speakers with PD. For healthy speakers, the Korean vowel space defined by the three corner vowels and expressed in original units (Hz²) was nearly 88% larger than the AE vowel space. As noted above, this is consistent with data reported by Chung et al. (2012) for independent groups of Korean and AE speakers. Given the extra theoretical “room” for vowel space compression in Korean, the statistical equivalence of the AVS weights in the Korean and AE regression models is not consistent with the assumption that AVS size and contrastivity are tightly linked. In addition, the vowel spaces of Korean and AE speakers with PD were, respectively, 56% and 32% of the size of those of their corresponding control groups (i.e., roughly 44% and 68% smaller). In fact, the vowel spaces of the Korean speakers with PD and the AE control speakers were nearly identical in size (169,666 Hz² and 162,123 Hz², respectively). These numbers also reduce confidence in any direct relationship between vowel space area and contrast goodness. On the basis of the current findings and those of Lansford and Liss (2014a), the contrast density hypothesis for the AVS measure, or measures like it (see the review of such measures in Lansford & Liss, 2014b), does not seem well supported. Interestingly, Smiljanic and Bradlow (2005) failed to show that the expansion of the vowel space in clear speech differed between two languages (Croatian and AE) with very different vowel inventory densities; they concluded that the vowel contrast enhancement expected from clear speech did not depend on the degree of expansion of the corner vowel space. Still, the case for AVS as an index that provides information different from speech severity should be pursued, either by replicating the current experiment with two languages that differ more in vowel density (such as AE, with 10 or 11 monophthongs, vs. Spanish or Greek, with five vowels) or by selecting a group of speakers with dysarthria who range in predetermined intervals across a vowel contrast index and whose varying speech intelligibility scores can be controlled statistically in seeking the independent contribution of vowel “goodness” to speech intelligibility variation.
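The PD-to-control size ratios discussed in this paragraph can be cross-checked against the log-transformed AVS means in Table 3. This sketch assumes base-10 logs, because log10(162,123) ≈ 5.21 matches the AE control mean. Differences of log means give ratios of geometric means, which need not equal ratios of the raw Hz² means, so the results are approximate. The helper name is hypothetical.

```python
def size_ratio_from_logs(log_a, log_b):
    """Ratio of two areas (a / b) given their base-10 log values."""
    return 10 ** (log_a - log_b)


# Log-transformed AVS means from Table 3.
kr = size_ratio_from_logs(5.23, 5.48)  # KR-PD relative to KR-HC
ae = size_ratio_from_logs(4.72, 5.21)  # AE-PD relative to AE-HC
print(f"KR PD/HC = {kr:.2f} ({1 - kr:.0%} smaller)")  # KR PD/HC = 0.56 (44% smaller)
print(f"AE PD/HC = {ae:.2f} ({1 - ae:.0%} smaller)")  # AE PD/HC = 0.32 (68% smaller)
```

A ratio of 0.56 means that the PD vowel space is 56% as large as the control space, which corresponds to roughly 44% smaller, not 56% smaller. The same function gives about 1.86 for Korean versus AE healthy controls (5.48 vs. 5.21), close to the "nearly 88% larger" figure computed from raw areas.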

The significant contribution of AR to the Korean regression model was surprising because the mean rate of the Korean speakers with PD was not significantly different from the mean rate of the Korean control speakers or from the mean rates of the AE speakers in either the control or the PD group. Moreover, the bivariate correlation between AR and speech intelligibility was not significant. Yet in the multiple regression analysis for Korean speakers with PD, AR was the final variable selected by the stepwise algorithm, adding a significant increment of about 11% of the variance to the overall three-variable model.

Inspection of a scatterplot for Korean speakers with PD in which AR was shown as a function of speech intelligibility score suggests that the significant effect may be the result of a single outlier. The Korean speaker with the lowest speech intelligibility score (3.15 on the 10-point scale) had a mean AR of 4.0 syl/s, a full syllable per second lower than the next “slowest” AR; the remaining 11 Korean speakers with dysarthria had intelligibility scores ranging between 4.2 and 8.6 on the scale, yet had tightly clustered ARs between five and six syllables per second that did not appear to vary systematically according to intelligibility. On the basis of this post hoc, qualitative analysis of the role of AR in the multiple regression model, the significant effect should be regarded with caution.
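The concern that a single speaker could produce the AR effect can be illustrated with a small numerical sketch. The data below are hypothetical and are not the study's values. They only mimic the pattern described above: 11 speakers whose ARs cluster between 5 and 6 syl/s with no relation to intelligibility, plus one slow speaker with low intelligibility. The sketch uses a bivariate Pearson correlation, not the stepwise regression itself.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical data: AR is unrelated to intelligibility for these 11 speakers
# (AR values are symmetric around the middle of the intelligibility range).
intel = [4.2, 4.6, 5.0, 5.4, 5.8, 6.2, 6.6, 7.0, 7.4, 7.8, 8.2]
rate = [5.5, 5.2, 5.8, 5.1, 5.9, 5.0, 5.9, 5.1, 5.8, 5.2, 5.5]
r_without = pearson_r(intel, rate)
# Add one slow, low-intelligibility speaker (3.15 on the scale, 4.0 syl/s).
r_with = pearson_r(intel + [3.15], rate + [4.0])
print(f"without outlier: r = {r_without:.2f}; with outlier: r = {r_with:.2f}")
```

In this toy case, the correlation rises from essentially zero to about .45 when the single extreme speaker is added. Leave-one-out refits or influence measures such as Cook's distance are common ways to check this formally.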

Finally, nPVI-V did not make a significant contribution to the variance in intelligibility scores in either language. This fails to support the predicted language-specific effect, in which the reduction of stress timing in AE would affect intelligibility more than any timing effects observed in Korean. In fact, both languages showed decreased nPVI-V in the PD groups compared with the control groups, with the surprising finding that the effect was significant only in Korean (see Table 4). It is not clear why a language with syllable-timed tendencies would show a significant shift toward isochrony in speakers with PD, whereas a language with stress-timed tendencies would not. This is especially perplexing because previous studies in AE have shown an effect on the perception of speech produced by speakers with PD whose syllable rhythm shifts in the direction of isochrony (Liss et al., 1998). More empirical work on actual vowel durations across a read passage is required to better understand this finding.

Conclusion

The present study was designed to evaluate the role of native language in the variables that contribute to an intelligibility deficit in one type of dysarthria. The study was also motivated by recent literature reviewing the effects of unique speech features, for instance, tone or intonation in Chinese, as well as by analogy to the aphasia literature, in which language differences have been reported to account for more variance in aphasic syndromes than aphasia types do (e.g., Menn & Obler, 1990; Vaid & Pandit, 1991; Wulfeck et al., 1989). Four acoustic variables linked to the speech deficit in the dysarthria associated with PD were chosen as predictor variables for multiple regression models in which scaled speech intelligibility was the outcome variable. The two languages studied, Korean and AE, have different structural characteristics for three of the predictor variables, and these differences provided the basis for language-specific predictions of their relative contributions to speech intelligibility. If language were not relevant to the variables that contribute to an intelligibility deficit in dysarthria, the multiple regression solutions should have been essentially the same in the two languages. The results showed that this was not the case: Korean had three significant predictor variables, whereas AE had only one. The second significant predictor variable in the Korean solution was an index of contrastivity for the stop voicing distinction, which supported the specific prediction concerning the differential effect of this measure in the two languages. However, the AVS measure dominated the regression solutions in both languages, a finding at odds with the predicted language-specific effect. That prediction stated that compression of the vowel space would have a greater effect on speech intelligibility among AE speakers with PD because English has a denser vowel inventory than Korean. Several possible explanations for this failed prediction were considered, leading to the conclusion that the size of the vowel space primarily reflects overall speech severity as indexed by speech intelligibility measures. The significant effect of AR in the Korean model was judged likely to be an artifact of one speaker's rate, but the possible differential effect of rate on intelligibility in different languages should be explored further. The different regression solutions in the two languages support the need for additional research on language-specific effects on models and classifications of dysarthria, which until recently have been neuropathology oriented and largely centered on AE. Listener effects (Liss et al., 1998, 2000) and language effects must be included in a full accounting of dysarthria.

Acknowledgments

This study was supported by National Institute on Deafness and Other Communication Disorders Grant NIDCD012405, awarded to Yunjung Kim. Part of the results were presented at the Conference on Motor Speech in 2014 (Sarasota, FL). The authors thank Julie Liss for her valuable comments on our previous manuscript.

References

- Ackermann H., & Hertrich I. (1994). Speech rate and rhythm in cerebellar dysarthria: An acoustic analysis of syllabic timing. Folia Phoniatrica et Logopaedica, 46, 70–78. [DOI] [PubMed] [Google Scholar]

- Ackermann H., & Hertrich I. (1997). Voice onset time in ataxic dysarthria. Brain and Language, 56, 321–333. [DOI] [PubMed] [Google Scholar]

- Arvaniti A. (2009). Rhythm, timing, and the timing of rhythm. Phonetica, 66, 46–63. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arvaniti A. (2012). The usefulness of metrics in the quantification of speech rhythm. Journal of Phonetics, 40, 351–373. [Google Scholar]

- Auzou P., Ozsancak C., Morris R. J., Jan M., Eustache F., & Hannequin D. (2000). Voice onset time in aphasia, apraxia of speech and dysarthria: Review. Clinical Linguistics and Phonetics, 14, 131–150. [Google Scholar]

- Borrie S. A. (2015). Visual speech information: A help or hindrance in perceptual processing of dysarthric speech. The Journal of the Acoustical Society of America, 137, 1473–1480. [DOI] [PubMed] [Google Scholar]

- Canter G. (1963). Speech characteristics of patients with Parkinson's disease. I. Intensity, pitch, and duration. Journal of Speech and Hearing Disorders, 28, 221–229. [DOI] [PubMed] [Google Scholar]

- Cha J. (2001). Comparison of speech rates between syllable repetition and reading tasks (Unpublished master's thesis). Yonsei University, Seoul, Republic of Korea. [Korean] [Google Scholar]

- Cho T., Jun S.-A., & Ladefoged P. (2002). Acoustic and aerodynamic correlates of Korean stops and fricatives. Journal of Phonetics, 30, 193–228. [Google Scholar]

- Chung H., Kong E.-J., Edwards J., Weismer G., Fourakis M., & Hwang Y. (2012). Cross-linguistic studies of children's and adults' vowel spaces. The Journal of the Acoustical Society of America, 131, 442–454. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Darley F. L., Aronson A. E., & Brown J. R. (1975). Motor speech disorders. Philadelphia, PA: Saunders. [Google Scholar]

- Duez D. (2009). Segmental duration in Parkinsonian French speech. Folia Phoniatrica et Logopaedica, 61, 239–246. [DOI] [PubMed] [Google Scholar]

- Duffy J. R. (1995). Motor speech disorders: Substrates, differential diagnosis, and management. St. Louis, MO: Elsevier. [Google Scholar]

- Duffy J. R. (2005). Motor speech disorders: Substrates, differential diagnosis, and management (2nd ed.). St. Louis, MO: Elsevier. [Google Scholar]

- Duffy J. R. (2013). Motor speech disorders: Substrates, differential diagnosis, and management (3rd ed.). St. Louis, MO: Elsevier. [Google Scholar]

- Escudero P., & Boersma P. (2004). Bridging the gap between L2 speech perception research and phonological theory. Studies in Second Language Acquisition, 26, 551–585. [Google Scholar]

- Fischer E., & Goberman A. M. (2010). Voice onset time in Parkinson disease. Journal of Communication Disorders, 43, 21–34. [DOI] [PubMed] [Google Scholar]

- Flint A., Black S., Campbell-Taylor I., Gailey G., & Levinton C. (1992). Acoustic analysis in the differentiation between Parkinson's disease and major depression. Journal of Psycholinguistic Research, 21, 383–399. [DOI] [PubMed] [Google Scholar]

- Gentil M. (1990). Dysarthria in Friedreich disease. Brain and Language, 38, 438–448. [DOI] [PubMed] [Google Scholar]

- Gentil M. (1992). Phonetic intelligibility in dysarthria for the use of French language clinicians. Clinical Linguistics and Phonetics, 6, 179–189. [DOI] [PubMed] [Google Scholar]

- Gerratt B. R., Kreiman J., Antonanzas-Barroso N., & Berke G. S. (1993). Comparing internal and external standards in voice quality judgments. Journal of Speech and Hearing Research, 36, 14–20. [DOI] [PubMed] [Google Scholar]

- Goberman A. M., Coelho C. A., & Robb M. P. (2005). Prosodic characteristics of Parkinsonian speech: The effect of levodopa-based medication. Journal of Medical Speech-Language Pathology, 13, 51–68. [Google Scholar]

- Goberman A. M., & Elmer L. W. (2005). Acoustic analysis of clear versus conversational speech in individuals with Parkinson disease. Journal of Communication Disorders, 38, 215–230. [DOI] [PubMed] [Google Scholar]

- Grabe E., & Low E. L. (2002). Durational variability in speech and the rhythm class hypothesis. In Gussenhoven C. & Warner N. (Eds.), Laboratory phonology (pp. 515–546). New York: Mouton de Gruyter. [Google Scholar]

- Han M. S., & Weitzman R. S. (1970). Acoustic features of Korean /P, T, K/, /p, t, k/ and /ph, th, kh/. Phonetica, 22, 112–128. [Google Scholar]

- Hartelius L., Theodoros D., Cahill L., & Lillvik M. (2003). Comparability of perceptual analysis of speech characteristics in Australian and Swedish speakers with multiple sclerosis. Folia Phoniatrica et Logopaedica, 55, 177–188. [DOI] [PubMed] [Google Scholar]

- Hillenbrand J. M., Getty L. A., Clark M. J., & Wheeler K. (1995). Acoustic characteristics of American English vowels. The Journal of the Acoustical Society of America, 97, 3099–3111. [DOI] [PubMed] [Google Scholar]

- Hixon T., Weismer G., & Hoit J. (2013). Preclinical speech science: Anatomy, physiology, acoustics, perception (2nd ed.). San Diego, CA: Plural Publishing. [Google Scholar]

- Jacewicz E., Fox R. A., O'Neill C., & Salmons J. (2009). Articulation rate across dialect, age, and gender. Language Variation and Change, 21, 233–256. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jeng J. Y., Weismer G., & Kent R. D. (2006). Production and perception of Mandarin tone in adults with cerebral palsy. Clinical Linguistics and Phonetics, 20, 67–87. [DOI] [PubMed] [Google Scholar]

- Kang Y.-A., Kim Y.-D., Ban J.-C., & Seoung C.-J. (2009). A comparison of the voice differences of patients with idiopathic Parkinson's disease and a normal-aging group. Journal of the Korean Society of Speech Sciences, 1, 99–107. [Google Scholar]

- Kent R. D., Kent J. F., Duffy J. R., Thomas J. E., Weismer G., & Stuntebeck S. (2000). Ataxic dysarthria. Journal of Speech, Language, and Hearing Research, 43, 1275–1289. [DOI] [PubMed] [Google Scholar]

- Kent R. D., & Kim Y.-J. (2003). Toward an acoustic typology of motor speech disorders. Clinical Linguistics and Phonetics, 17, 427–445. [DOI] [PubMed] [Google Scholar]

- Kim H. (2015). Familiarization effects on consonant intelligibility in dysarthric speech. Folia Phoniatrica et Logopaedica, 67, 245–252. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kim H.-H. (2012). Neurologic speech language disorders. Seoul, Republic of Korea: Sigma Press. [Google Scholar]

- Kim H., Hasegawa-Johnson M., & Perlman A. (2011). Vowel contrast and speech intelligibility in dysarthria. Folia Phoniatrica et Logopaedica, 63, 187–194. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kim Y.-J., Kent R. D., & Weismer G. (2011). An acoustic study of the relationships among neurologic disease, dysarthria type and severity of dysarthria. Journal of Speech, Language, and Hearing Research, 54, 417–429. [DOI] [PubMed] [Google Scholar]

- Klatt D. H. (1975). Voice-onset time, frication, and aspiration in word-initial consonant clusters. Journal of Speech and Hearing Research, 18, 687–703. [DOI] [PubMed] [Google Scholar]

- Kobayashi N., Fukusako Y., Anno M., & Hirose H. (1976). Characteristic patterns of speech in cerebellar dysarthria. Journal of Speech and Hearing Disorders, 5, 63–68. [Google Scholar]

- Lansford K. L., Borrie S. A., & Bystricky L. (2016). Use of crowdsourcing to assess the ecological validity of perceptual-training paradigms in dysarthria. American Journal of Speech-Language Pathology, 25, 233–239. [DOI] [PubMed] [Google Scholar]

- Lansford K. L., & Liss J. M (2014a). Vowel acoustics in dysarthria: Mapping to perception. Journal of Speech, Language, and Hearing Research, 57, 68–80. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lansford K. L., & Liss J. M. (2014b). Vowel acoustics in dysarthria: Speech disorder diagnosis and classification. Journal of Speech, Language, and Hearing Research, 57, 57–67. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lee H. (2010). Speech rate and pause characteristics in speakers with stuttering (Unpublished master's thesis). Yonsei University, Seoul, Republic of Korea. [Korean] [Google Scholar]

- Liss J. M., Spitzer S., Caviness J. N., & Adler C. (1998). Syllable strength and lexical boundary decisions in the perception of hypokinetic dysarthric speech. The Journal of the Acoustical Society of America, 104, 3415–3424. [DOI] [PubMed] [Google Scholar]

- Liss J. M., Spitzer S., Caviness J. N., & Adler C. (2000). Lexical boundary error analysis in hypokinetic and ataxic dysarthria. The Journal of the Acoustical Society of America, 107, 2457–2466. [DOI] [PubMed] [Google Scholar]

- Liss J. M., Utianski R., & Lansford K. (2013). Crosslinguistic application of English-centric rhythm descriptors in motor speech disorders. Folia Phoniatrica et Logopaedica, 65, 3–19. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liss J. M., White L., Mattys S., Lansford K., Lotto A. J., Spitzer S. M., & Caviness J. N. (2009). Quantifying speech rhythm abnormalities in the dysarthria. Journal of Speech, Language, and Hearing Research, 52, 1334–1352. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liu H.-M., Tsao F.-M., & Kuhl P. K. (2005). The effect of reduced vowel working space on speech intelligibility in Mandarin-speaking young adults with cerebral palsy. The Journal of the Acoustical Society of America, 117, 3879–3889. [DOI] [PubMed] [Google Scholar]

- Low E. L., Grabe E., & Nolan F. (2000). Quantitative characterizations of speech rhythm: Syllable-timing in Singapore English. Language & Speech, 43, 377–401. [DOI] [PubMed] [Google Scholar]

- Lowit A. (2014). Quantification of rhythm problems in disordered speech: A re-evaluation. Philosophical Transactions of the Royal Society of London B: Biological Sciences, 369(1658). https://doi.org/10.1098/rstb.2013.0404

- Ludlow C. L., Connor N. P., & Bassich C. J. (1987). Speech timing in Parkinson's and Huntington's disease. Brain and Language, 32, 195–214.

- Ma J. K.-Y., Whitehill T. L., & So S. Y.-S. (2010). Intonation contrast in Cantonese speakers with hypokinetic dysarthria associated with Parkinson's disease. Journal of Speech, Language, and Hearing Research, 53, 836–849.

- Menn L., & Obler L. K. (1990). Agrammatic aphasia: Cross-language narrative sourcebook. Amsterdam, the Netherlands: John Benjamins.

- Metter E., & Hanson W. (1986). Clinical and acoustical variability in hypokinetic dysarthria. Journal of Communication Disorders, 19, 347–366.

- Milenkovic P. (2005). TF 32 [Computer program]. Madison, WI: University of Wisconsin–Madison.

- Miller N., Lowit A., & Kuschmann A. (2014). Introduction: Cross-language perspectives on motor speech disorders. In Miller N. & Lowit A. (Eds.), Motor speech disorders: A cross-language perspective (pp. 7–28). Bristol, UK: Multilingual Matters.

- Mok P., & Lee S. I. (2008). Korean speech rhythm using rhythmic measures. Paper presented at the 18th International Congress of Linguists (CIL18). Seoul, Republic of Korea.

- Monsen R. B. (1983). The oral speech intelligibility of hearing-impaired talkers. Journal of Speech and Hearing Disorders, 48, 286–296.

- Morris R. J. (1989). VOT and dysarthria: A descriptive study. Journal of Communication Disorders, 22, 23–33.

- Nishio M., & Niimi S. (2001). Speaking rate and its components in dysarthric speakers. Clinical Linguistics and Phonetics, 15, 309–317.

- Ozsancak C., Auzou P., Jan M., Defebvre L., Derambure P., & Destee A. (2006). The place of perceptual analysis of dysarthria in the differential diagnosis of corticobasal degeneration and Parkinson's disease. Journal of Neurology, 253, 92–97.

- Park S., Sim H., & Baik J. S. (2005). Production ability for Korean bilabial stops in Parkinson's disease. Journal of Multilingual Communication Disorders, 3, 90–102.

- Patel R., Connaghan K., Franco D., Edsall E., Forgit D., Olsen L., … Russell S. (2013). “The Caterpillar”: A novel reading passage for assessment of motor speech disorders. American Journal of Speech-Language Pathology, 22, 1–9.

- Shin J., Kiaer J., & Cha J. (2013). The sounds of Korean. New York, NY: Cambridge University Press.

- Skodda S., Visser W., & Schlegel U. (2011). Vowel articulation in Parkinson's disease. Journal of Voice, 25, 467–472.

- Smiljanic R., & Bradlow A. R. (2005). Production and perception of clear speech in Croatian and English. The Journal of the Acoustical Society of America, 118, 1677–1688.

- Solomon N. P., & Hixon T. J. (1993). Speech breathing in Parkinson's disease. Journal of Speech and Hearing Research, 36, 294–310.

- Stokes S. F., & Whitehill T. L. (1996). Speech error patterns in Cantonese speaking children with cleft palate. European Journal of Disorders of Communication, 31, 477–496.

- Taylor C., Aird V., Power E., Davies E., Madelaine C., McCarry A., & Ballard K. J. (2012). Objective measurement of dysarthric speech following traumatic brain injury: Clinical application of acoustic analysis. Journal of Clinical Practice in Speech-Language Pathology, 14, 129–135.

- Tsao Y.-C., & Weismer G. (1997). Interspeaker variation in habitual speaking rate: Evidence for a neuromuscular component. Journal of Speech, Language, and Hearing Research, 40, 858–866.

- Vaid J., & Pandit R. (1991). Sentence interpretation in normal and aphasic Hindi speakers. Brain and Language, 41, 250–274.

- Weismer G. (1984). Articulatory characteristics of Parkinsonian dysarthria. In McNeil M. R., Rosenbek J. C., & Aronson A. (Eds.), The dysarthrias: Physiology, acoustics, perception, management (pp. 101–130). San Diego, CA: College-Hill Press.

- Weismer G., & Cariski D. (1984). On speakers' abilities to control speech mechanism output: Theoretical and clinical implications. In Lass N. J. (Ed.), Speech and language: Advances in basic research and practice (Vol. 10, pp. 185–241). Orlando, FL: Academic Press.

- Weismer G., Jeng J., Laures R., Kent R., & Kent J. (2001). Acoustic and intelligibility characteristics of sentence production in neurogenic speech disorders. Folia Phoniatrica et Logopaedica, 53, 1–18.

- Whitehill T. L., & Ciocca V. (2000). Speech errors in Cantonese speaking adults with cerebral palsy. Clinical Linguistics and Phonetics, 14, 111–130.

- Whitehill T. L., Ma J. K., & Lee A. S. (2003). Perceptual characteristics of Cantonese hypokinetic dysarthria. Clinical Linguistics and Phonetics, 17, 265–271.

- Wulfeck B., Bates E., Juarez L., Opie M., Friederici A., MacWhinney B., & Zurif E. (1989). Pragmatics in aphasia: Crosslinguistic evidence. Language and Speech, 32, 315–336.

- Yunusova Y., Weismer G., Kent R. D., & Rusche N. M. (2005). Breath-group intelligibility in dysarthria: Characteristics and underlying correlates. Journal of Speech, Language, and Hearing Research, 48, 1294–1310.

- Ziegler W. (2002). Task-related factors in oral motor control: Speech and oral diadochokinesis in dysarthria and apraxia of speech. Brain and Language, 80, 556–575.

- Ziegler W., Hartmann E., & Hoole P. (1993). Syllabic timing in dysarthria. Journal of Speech and Hearing Research, 36, 683–693.