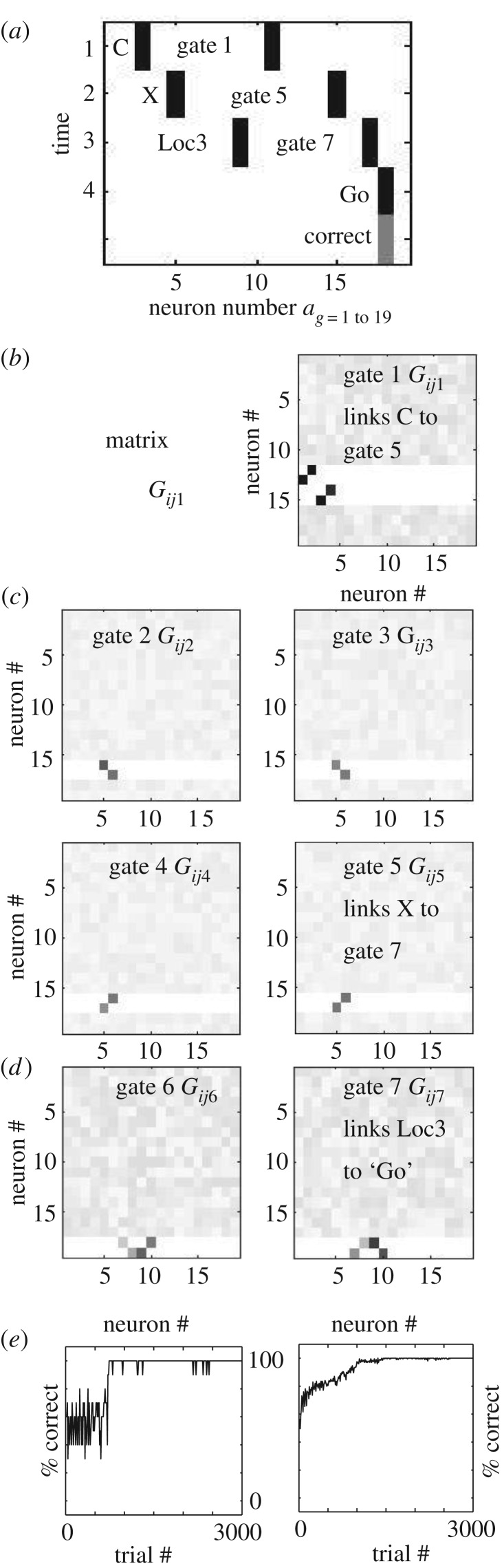

Figure 3.

Simulation of the task after training. (a) On each trial, the full network was presented with a sequence of binary patterns with active units (black rectangles) representing one item from A, B, C, D (top row, ‘C’), followed by one item from X, Y (second row, ‘X’), followed by one of four location cues (third row, ‘Loc3’). Each trial started with activity in gate 1 (index 11). After learning, cue C activates gate 5 (index 15), cue X activates gate 7 (index 17), and Loc3 activates the Go response via the weights shown in part b. These network weights were previously strengthened when the network was rewarded for a correct Go or NoGo response based on the combinations shown in Figure 1. The network learned to respond correctly to all combinations. (b) After learning, gate 1 has a weight matrix $G^{1}_{ij}$ with four strong weights (black squares) linking the four first cues to specific gates, so input ‘C’ in column 3 activates the output row corresponding to gate 5 (index 15). The grey-scale values represent the random initial weights that were not modified because pre- or post-synaptic activity was absent. Darker grey represents stronger weights. (c) The next four gates link the second cue to a different set of gates. In this example, active gate 5 (index 15) allows input X to activate gate 7 (index 17). (d) The next two gates link the location context to a correct response. In this example, active gate 7 (index 17) allows Loc3 to activate Go. (e) Left: Performance of a single network over 3000 training trials, showing a relatively rapid increase in performance from chance (50%) to 100% correct around trial 800. Right: Performance change averaged over 20 networks, showing that all networks converge over time to 100% correct responses on all 32 trial types (4 first cues × 2 second cues × 4 locations).
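
The routing in the example trial can be sketched as a chain of lookups, in which the currently active gate determines which learned association the next input can use. The sketch below is illustrative only and is not the simulation code behind the figure. The dictionary `STRONG_WEIGHTS` and the function `run_trial` are hypothetical names, the weight matrices are replaced by a simple table, and only the three strong weights named in the caption are included.

```python
# Hypothetical lookup-table stand-in for the learned gate weight matrices.
# Only the associations stated in the caption are listed; all other
# entries (other cues, other gates) are deliberately left out.
STRONG_WEIGHTS = {
    "gate1": {"C": "gate5"},    # panel b: cue C activates gate 5 (index 15)
    "gate5": {"X": "gate7"},    # panel c: cue X activates gate 7 (index 17)
    "gate7": {"Loc3": "Go"},    # panel d: Loc3 activates the Go response
}


def run_trial(inputs, start_gate="gate1"):
    """Route a sequence of inputs through the gates and return the path taken.

    The path ends with None if the active gate has no listed strong weight
    for the current input; the caption does not specify that case.
    """
    active = start_gate
    path = [active]
    for item in inputs:
        target = STRONG_WEIGHTS.get(active, {}).get(item)
        path.append(target)
        if target is None:
            break
        active = target
    return path


print(run_trial(["C", "X", "Loc3"]))  # ['gate1', 'gate5', 'gate7', 'Go']
```

The same input can therefore lead to different outcomes depending on which gate is active when it arrives, which is the context dependence the panels illustrate.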