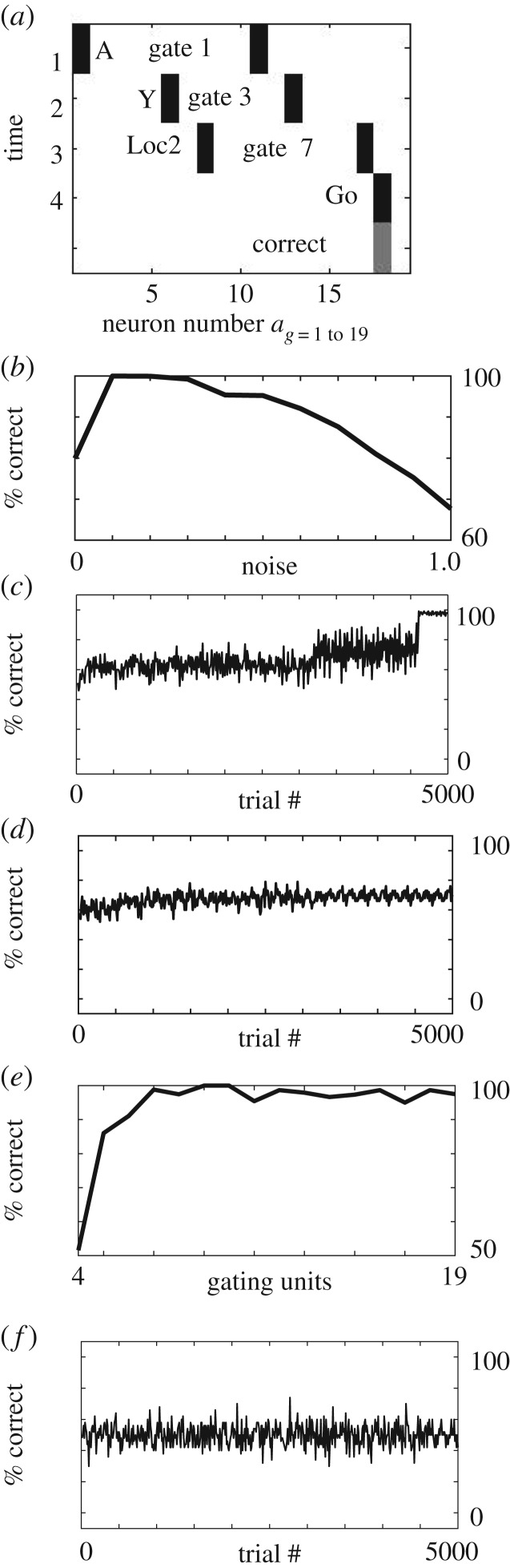

Figure 4.

More examples of neural networks trained on the task. (a) A different example, obtained after different training. In this case, gate 1 (index 11) links input cue ‘A’ to gate 3 (index 13), gate 3 links cue ‘Y’ to gate 7 (index 17), and gate 7 links ‘Loc2’ to the Go response, which is correct. (b) Performance of the network at different noise levels (0.0 to 1.0) when trained on all 32 combinations. The network performs poorly with zero noise because it does not explore enough gating patterns, but it performs well with noise of 0.1 and 0.2. Higher noise levels reduce correct steady-state responding. (c) Example of generalization, averaged across 20 networks. When presented with only 18 of the 32 combinations, the networks attain about 75% performance. On the last 400 trials, most of the networks (19 out of 20) generalize to 100% correct responses when presented with all 32 pattern combinations, without further learning. (d) With noise set to zero, the 20 networks on average do not learn the rules: performance remains at 50% and does not generalize, indicating sensitivity to specific network parameters. (e) Performance of the network for different numbers of gating units, showing that too few units prevent good performance. Best performance is first attained with seven units, which allows four gating units to be activated by the four input items A, B, C, and D. (f) With purely Hebbian connectivity, without gating units and gated weights, a network cannot learn to perform better than 50%, because incompatible responses must be routed through the same neuron.
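
The routing conflict described for panel (f) can be illustrated with a deliberately small toy model. The sketch below is not the network from the figure. It assumes a single stimulus that must produce Go (+1) in one context and NoGo (-1) in another, a supervised Hebbian update (weight change proportional to input times desired response), and a hypothetical helper named `train_and_test`. Without gating, both contexts share one weight, so the response is identical in both and exactly one of the two is correct. With a context-selected (gated) weight, each context learns its own mapping.

```python
# Toy illustration only; this is NOT the network shown in the figure.
# Assumption: one stimulus requires Go (+1) in context 0 and NoGo (-1) in context 1.

def sign(v):
    """Threshold a scalar activation into a Go (+1) or NoGo (-1) response."""
    return 1 if v >= 0 else -1

def train_and_test(gated, n_trials=100, lr=0.1):
    """Train with a supervised Hebbian rule and return the fraction correct.

    gated=False: a single weight carries the stimulus in both contexts.
    gated=True:  the context selects which of two weights is active.
    """
    weights = [0.0, 0.0] if gated else [0.0]
    targets = {0: +1, 1: -1}  # desired response per context
    x = 1.0                   # the stimulus is identical in both contexts

    for t in range(n_trials):
        context = t % 2       # alternate the two contexts
        idx = context if gated else 0
        # Hebbian update: presynaptic input times desired postsynaptic response.
        weights[idx] += lr * x * targets[context]

    correct = 0
    for context, target in targets.items():
        idx = context if gated else 0
        correct += sign(weights[idx] * x) == target
    return correct / len(targets)

print(train_and_test(gated=False))  # 0.5
print(train_and_test(gated=True))   # 1.0
```

In the ungated case the result is 0.5 whatever value the shared weight takes, because the same response is emitted for two contexts that demand opposite responses.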