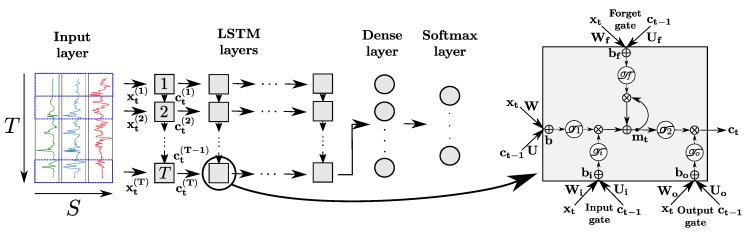

Figure 5.

Architecture of an LSTM network for sensor-based HAR (left). The intermediate and last LSTM layers are organized in a many-to-many and a many-to-one layout, respectively. Each LSTM layer is composed of LSTM cells (right). $x_t$, $c_t$, and $h_t$ represent the cell input, memory, and output at time $t$, respectively. In addition, ⨁ and ⨂ refer to element-wise addition and multiplication, respectively. $W_g$, $b_g$, and $\sigma_g$ designate the internal weight matrix, bias vector, and activation function of gate $g$. $\sigma_x$ and $\sigma_c$ are internal activation functions applied to the input and memory of the cell, respectively.
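The cell update and the layer layouts described in this caption can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions, not the figure's exact formulation: it assumes a standard LSTM cell without peephole connections, logistic-sigmoid gates ($\sigma_g$ for $g \in \{i, f, o\}$), and $\tanh$ for both the input activation $\sigma_x$ and the memory activation $\sigma_c$. The function names (`lstm_cell_step`, `lstm_layer`, `lstm_network`, `init_lstm_params`), the random initialisation, and the layer sizes are hypothetical and chosen only for illustration.

```python
import numpy as np


def sigmoid(z):
    """Logistic sigmoid, assumed here as the gate activation sigma_g."""
    return 1.0 / (1.0 + np.exp(-z))


def init_lstm_params(n_in, n_hidden, rng):
    """Hypothetical random initialisation for one LSTM layer.

    Each weight matrix W_g acts on the concatenation [x_t, h_{t-1}];
    'x' denotes the transform of the cell input.
    """
    params = {}
    for g in ("i", "f", "o", "x"):
        params["W_" + g] = rng.normal(0.0, 0.1, size=(n_hidden, n_in + n_hidden))
        params["b_" + g] = np.zeros(n_hidden)
    return params


def lstm_cell_step(x_t, h_prev, c_prev, p):
    """One time step of an LSTM cell: returns (h_t, c_t)."""
    z = np.concatenate([x_t, h_prev])
    i = sigmoid(p["W_i"] @ z + p["b_i"])         # input gate
    f = sigmoid(p["W_f"] @ z + p["b_f"])         # forget gate
    o = sigmoid(p["W_o"] @ z + p["b_o"])         # output gate
    c_tilde = np.tanh(p["W_x"] @ z + p["b_x"])   # sigma_x applied to the cell input
    c_t = f * c_prev + i * c_tilde               # element-wise products and sum
    h_t = o * np.tanh(c_t)                       # sigma_c applied to the memory
    return h_t, c_t


def lstm_layer(xs, p, return_sequences):
    """Run one LSTM layer over a sequence xs of shape (T, n_in).

    many-to-many (return_sequences=True): returns all outputs, shape (T, n_hidden).
    many-to-one (return_sequences=False): returns only the last output, shape (n_hidden,).
    """
    n_hidden = p["b_i"].shape[0]
    h = np.zeros(n_hidden)
    c = np.zeros(n_hidden)
    outputs = []
    for x_t in xs:
        h, c = lstm_cell_step(x_t, h, c, p)
        outputs.append(h)
    return np.stack(outputs) if return_sequences else h


def lstm_network(xs, layers):
    """Stack LSTM layers: intermediate layers many-to-many, last layer many-to-one."""
    out = xs
    for k, p in enumerate(layers):
        is_last = k == len(layers) - 1
        out = lstm_layer(out, p, return_sequences=not is_last)
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical input: 50 time steps of a 3-channel sensor signal.
    xs = rng.normal(size=(50, 3))
    layers = [init_lstm_params(3, 16, rng), init_lstm_params(16, 8, rng)]
    print(lstm_network(xs, layers).shape)
```

Only the last layer collapses the sequence to a single vector, which mirrors the many-to-one layout in the caption; in a HAR classifier that vector would typically be fed to an output layer, which is omitted here.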