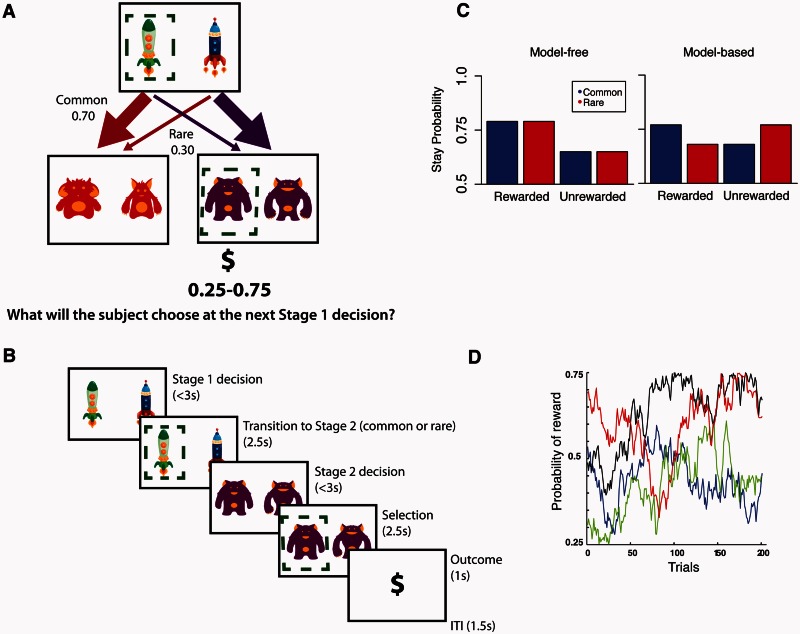

Figure 1.

Trial structure, logic and simulated data for the task. (A) Task design. At Stage 1, participants chose between two spaceships. This choice determined the transition to the next stage according to a fixed probability scheme: each spaceship was predominantly associated with one of the two Stage 2 states (i.e. planets) and led there 70% of the time. At Stage 2, participants chose one of two aliens to discover whether they would be rewarded. Each alien was associated with a reward probability (range: 0.25–0.75) that changed gradually over time as determined by a Gaussian random walk. (B) Timing of stages within a single trial. (C) Simulated data. Model-based and model-free strategies predict different patterns of behaviour. A pure model-free learner (left) shows only a main effect of reward, tending to repeat the same Stage 1 choice after a reward irrespective of the transition type (common versus rare) that preceded that reward on the last trial. Conversely, a pure model-based learner (right) is influenced by the interaction between reward and transition type on the last trial. For instance, after winning on the planet less commonly visited (rare transition), a model-based learner will be less likely to stay with the same spaceship and will instead pick the alternative spaceship in order to maximize the chances of returning to that same planet. Previous studies show that healthy participants use a mixture of both model-free and model-based processes (Daw et al., 2011; Eppinger et al., 2013; Otto et al., 2013b). (D) Example of one of the sets of Gaussian random walks that determined the probability of reward at each of the four Stage 2 options.
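The generative structure described in panels A and D can be illustrated with a minimal sketch. Only the 70% common-transition probability, the 0.25–0.75 bounds and the four Stage 2 options come from the caption. The step size `STEP_SD`, the use of reflecting boundaries, the uniform starting values and all function names are assumptions made for illustration and may differ from the procedure actually used to generate the walks.

```python
import random

COMMON_PROB = 0.7            # from the caption: common transition 70% of the time
LOWER, UPPER = 0.25, 0.75    # from the caption: range of reward probabilities
STEP_SD = 0.025              # ASSUMPTION: step size of the walk is not given in the caption


def reflect(p, lower=LOWER, upper=UPPER):
    """Fold a value back into [lower, upper] (ASSUMPTION: reflecting boundaries)."""
    while p < lower or p > upper:
        if p < lower:
            p = 2 * lower - p
        if p > upper:
            p = 2 * upper - p
    return p


def random_walks(n_trials, n_options=4, step_sd=STEP_SD, rng=None):
    """Return one list of n_options reward probabilities per trial."""
    rng = rng or random.Random()
    # ASSUMPTION: starting values are drawn uniformly within the bounds.
    probs = [rng.uniform(LOWER, UPPER) for _ in range(n_options)]
    walks = [list(probs)]
    for _ in range(n_trials - 1):
        # Each option drifts independently by Gaussian noise, then is reflected.
        probs = [reflect(p + rng.gauss(0.0, step_sd)) for p in probs]
        walks.append(list(probs))
    return walks


def run_trial(spaceship, alien, reward_probs, rng):
    """Simulate one trial for given choices (spaceship and alien are 0 or 1).

    Spaceship k commonly leads to planet k; aliens are indexed 2 * planet + alien.
    Returns (planet, was_common_transition, rewarded).
    """
    common = rng.random() < COMMON_PROB
    planet = spaceship if common else 1 - spaceship
    rewarded = rng.random() < reward_probs[2 * planet + alien]
    return planet, common, rewarded
```

For example, `random_walks(200, rng=random.Random(0))` yields 200 rows of four reward probabilities, and each row can be passed to `run_trial` together with the simulated agent's two choices.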