Significance

Body-centered (egocentric) and world-centered (allocentric) spatial reference frames are both important for spatial navigation. We have previously shown that vestibular heading signals, which are initially coded in a head-centered reference frame, are no longer head-centered in the ventral intraparietal (VIP) area. Instead, they are represented in either a body- or a world-centered frame; these two possibilities could not be distinguished because the two frames were not dissociated. Here, we report flexible switching between egocentric and allocentric reference frames in a subpopulation of VIP neurons, depending on gaze strategy. Other VIP neurons continue to represent heading in a body-centered reference frame despite changes in gaze strategy. These findings suggest that the vestibular representation of heading in VIP is dynamic and may be modulated by task demands.

Keywords: ventral intraparietal area, reference frame, vestibular, body/world-centered, egocentric/allocentric

Abstract

By systematically manipulating head position relative to the body and eye position relative to the head, previous studies have shown that vestibular tuning curves of neurons in the ventral intraparietal (VIP) area remain invariant when expressed in body-/world-centered coordinates. However, body orientation relative to the world was not manipulated; thus, an egocentric, body-centered representation could not be distinguished from an allocentric, world-centered reference frame. We manipulated the orientation of the body relative to the world such that we could distinguish whether vestibular heading signals in VIP are organized in body- or world-centered reference frames. We found a hybrid representation, depending on gaze direction. When gaze remained fixed relative to the body, the vestibular heading tuning of VIP neurons shifted systematically with body orientation, indicating an egocentric, body-centered reference frame. In contrast, when gaze remained fixed relative to the world, this representation changed to be intermediate between body- and world-centered. We conclude that the neural representation of heading in posterior parietal cortex is flexible, depending on gaze and possibly attentional demands.

Spatial navigation is a complex problem requiring information from both visual and vestibular systems. Heading, the instantaneous direction of translation of the observer, represents one of the key variables needed for effective navigation and is represented in a number of cortical and subcortical regions (1–12). Although visual heading information is generally represented in an eye-centered reference frame by neurons in various brain areas (13–18), the vestibular representation of heading appears to be more diverse (8, 15, 19). For example, vestibular heading signals follow a representation that is intermediate between head- and eye-centered in the dorsal medial superior temporal area (MSTd) (15, 19), but intermediate between head- and body-centered in the parietoinsular vestibular cortex (19). Most remarkably, vestibular heading signals in the ventral intraparietal (VIP) area were shown to remain largely stable with respect to the body/world, despite variations in eye or head position, suggesting either a body- or world-centered representation (19).

In general, the role of the parietal cortex in navigation has been linked to egocentric representations. For example, human fMRI studies of visual navigation reported that the parietal cortex codes visual motion information in an egocentric reference frame (20–27) and coactivates with the hippocampus, in which spatial maps are represented in allocentric coordinates (28–31). Furthermore, rodent studies have suggested that the parietal cortex, hippocampus, and retrosplenial cortex are all involved in transformations between egocentrically and allocentrically coded information (32–35). Therefore, one may hypothesize that VIP represents vestibular heading signals in an egocentric, or body-centered, reference frame.

Chen et al. (19) showed that the heading tuning of macaque area VIP neurons remains stable with respect to the body/world when eye or head position is varied, but body- and world-centered reference frames were not dissociated in those experiments. Here, we test whether the heading representation in VIP is allocentric (i.e., world-centered) or egocentric (i.e., body-centered) by constructing an experimental setup that allows effective dissociation between body- and world-centered reference frames. This was done by installing a servo-controlled yaw rotator beneath the primate chair, such that it could be used to vary body orientation relative to the world, while keeping eye-in-head and head-on-body orientations fixed (body-fixed gaze condition; Fig. 1A). We found that vestibular heading tuning of VIP neurons shifted with body orientation, consistent with a body-centered reference frame. However, when the same body orientation changes were accompanied by rotations of eye-in-head, such that gaze direction remained fixed in the world (world-fixed gaze condition; Fig. 1B), some VIP neurons had tuning curves that became invariant to changes in body orientation. This suggests a flexible transformation of the heading representation toward a world-centered reference frame. We discuss how these findings relate to other discoveries regarding flexible and intermediate reference frame representations in a variety of systems.

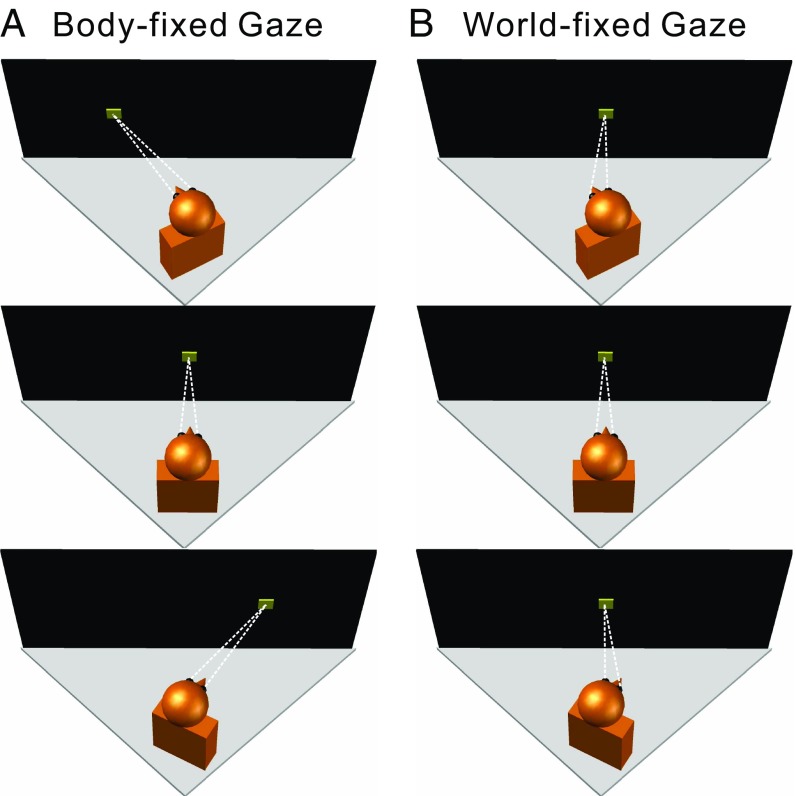

Fig. 1.

Experimental design. Schematic illustration of the various combinations of body/eye orientations relative to the motion platform (gray triangles) and display screen (black rectangle). The monkey’s head/body orientation was varied relative to the motion platform and screen (Top, 20° left; Middle, straight ahead, 0°; Bottom, 20° right) by using a yaw rotator that was mounted on top of the motion platform, below the monkey. The monkey’s head was fixed relative to the body, but the eye-in-head position could be manipulated by fixating one of three targets (yellow squares) on the screen. (A) In the body-fixed gaze condition, the monkey fixated a visual target that was at a fixed location (straight ahead) relative to the head/body, such that eye-in-head position was constant. (B) In the world-fixed gaze condition, the animal was required to maintain fixation on a world-fixed target, such that eye-in-head position covaried with head/body orientation.

Results

Animals were passively translated along 1 of 10 different heading directions in the horizontal plane, to measure heading tuning curves (Methods and ref. 19). To dissociate body- and world-centered reference frames, body orientation relative to the world was varied by passively rotating the monkey’s body around the axis of the trunk to one of three body orientations: 20° left, center, or 20° right (Fig. 1). Note that, in our apparatus (see SI Methods for details), body orientation relative to the world was the same as body orientation relative to the visual frame defined by the motion platform and coil frame (which were themselves translated).

We have previously shown that vestibular heading tuning in VIP does not shift with changes in eye-in-head or head-on-body positions (19). Here, we investigated the possibility that the reference frame of vestibular heading tuning might depend on whether gaze remained fixed relative to the body (body-fixed gaze condition; Fig. 1A) or fixed relative to the world (world-fixed gaze condition; Fig. 1B). Thus, for each of the three body orientations, a fixation target controlled gaze to be either straight forward relative to the body (and head, since head position relative to the body was fixed in these experiments) or straight forward relative to the world (i.e., facing the front of the coil frame, which housed the projection screen). Because the two gaze conditions coincide at the central body orientation, this yielded five rather than six distinct body/eye position combinations (three body orientations × two gaze conditions, with the [0°, 0°] combination shared by both).
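The counting of conditions can be made explicit with a short sketch. It assumes the sign convention implied by the bracket notation of Fig. 2 ([body-in-world, eye-in-head]), in which the world-fixed target requires an eye-in-head offset equal and opposite to the body rotation; the name `position_combinations` is hypothetical.

```python
BODY_ORIENTATIONS = (-20, 0, 20)  # body-in-world, degrees (sign convention as in Fig. 2)


def position_combinations(body_orientations=BODY_ORIENTATIONS):
    """Return the distinct [body-in-world, eye-in-head] pairs (degrees)."""
    combos = set()
    for body in body_orientations:
        combos.add((body, 0))      # body-fixed gaze: eye-in-head stays at 0
        combos.add((body, -body))  # world-fixed gaze: eye-in-head cancels the body rotation
    # (0, 0) is produced by both rules, so the set keeps only one copy of it
    return sorted(combos)


for body, eye in position_combinations():
    print(f"[{body} deg, {eye} deg] -> eye-in-world = {body + eye} deg")
```

In the world-fixed gaze rows, eye-in-world (body + eye) is always 0°, which is what keeps gaze fixed on the same target.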

Quantification of Shifts of Vestibular Heading Tuning.

Responses of VIP neurons were measured for 10 translation directions and 5 body/eye position combinations, as illustrated for a typical neuron in Fig. 2A. For each combination of body orientation and eye position, a heading tuning curve was constructed from mean firing rates computed in a 400-ms time window centered on the “peak time” (see SI Methods for details). The five tuning curves were sorted into two conditions: body-fixed gaze (Fig. 2B) and world-fixed gaze (Fig. 2C; note that the tuning curve for [body-in-world, eye-in-head] = [0°, 0°] was common to both conditions). Because heading is expressed relative to the world in these plots, a lack of tuning curve shifts would reflect a world-centered reference frame. In contrast, if the three tuning curves were systematically displaced from one another by an amount equal to the change in body orientation, this would indicate a body-centered reference frame. For the example neuron of Fig. 2, the three tuning curves showed systematic shifts in the body-fixed gaze condition (Fig. 2B), suggesting a body-centered reference frame. However, tuning shifts were much smaller in the world-fixed gaze condition (Fig. 2C).
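The tuning-curve step can be sketched as follows. This is a minimal illustration, assuming spike times are already aligned to motion onset (in seconds) and that the peak time has been determined beforehand; the procedure for finding the peak time (SI Methods) is not reproduced here, and the function name `heading_tuning_curve` is hypothetical.

```python
import numpy as np


def heading_tuning_curve(spike_times_by_trial, heading_by_trial, peak_time, window=0.4):
    """Mean firing rate (spikes/s) and SEM per heading, in a window centered on peak_time.

    spike_times_by_trial: list of spike-time sequences (s), one per trial.
    heading_by_trial: heading (deg) of each trial.
    """
    t0 = peak_time - window / 2.0
    t1 = peak_time + window / 2.0
    # Spike count inside [t0, t1) divided by window length gives a rate per trial
    rates = np.array([
        np.count_nonzero((np.asarray(st, float) >= t0) & (np.asarray(st, float) < t1)) / window
        for st in spike_times_by_trial
    ])
    heading = np.asarray(heading_by_trial, float)
    levels = np.unique(heading)
    mean = np.array([rates[heading == h].mean() for h in levels])
    # SEM across repetitions (requires at least two trials per heading)
    sem = np.array([rates[heading == h].std(ddof=1) / np.sqrt(np.sum(heading == h)) for h in levels])
    return levels, mean, sem
```

One such curve would be computed for each of the five body/eye position combinations.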

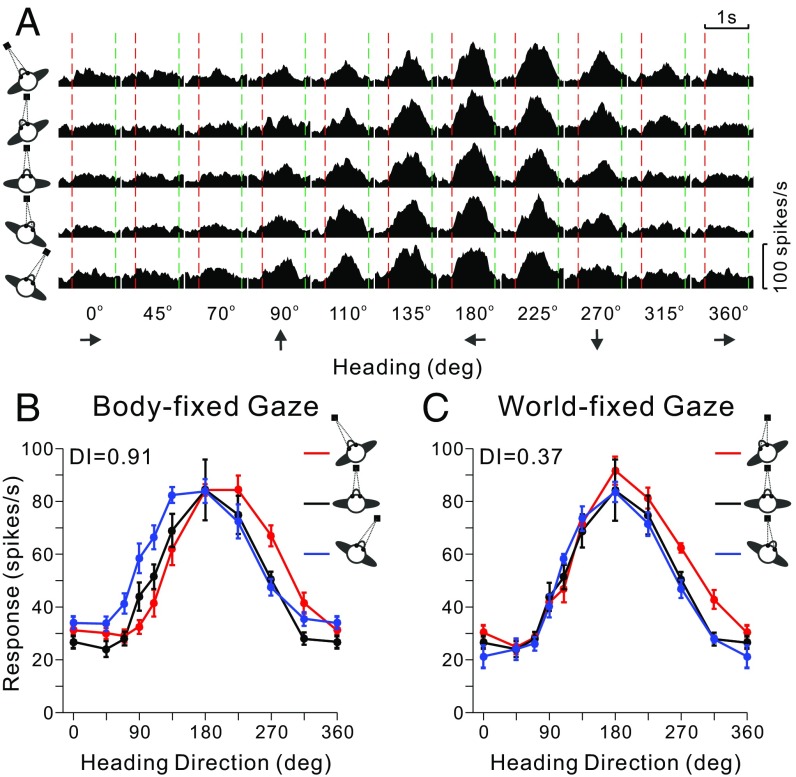

Fig. 2.

Data from an example VIP neuron. (A) Response peristimulus time histograms are shown for the 10 headings (x axis) and each of the five combinations of [body-in-world, eye-in-head] positions: [−20°, 0°], [−20°, 20°], [0°, 0°], [20°, −20°], [20°, 0°] (rows, top to bottom). The red and green vertical dashed lines represent the start and end of platform motion (which followed a straight trajectory for each different heading with a Gaussian velocity profile). (B) Tuning curves for the body-fixed gaze condition. The three tuning curves show mean firing rate (±SEM) as a function of heading for the three combinations of [body-in-world, eye-in-head] position ([−20°, 0°], [0°, 0°], [20°, 0°]) that have constant eye-in-head position, as indicated by the red, black, and blue curves, respectively. (C) Tuning curves for the world-fixed gaze condition for the three combinations of [body-in-world, eye-in-head] position ([−20°, 20°], [0°, 0°], [20°, −20°]) that involve fixation on the same world-fixed target.

To quantify the shift of each pair of tuning curves relative to the change in body orientation, a displacement index (DI) was computed by using an established cross-covariance method (13–15, 17, 19), which took into account the entire tuning function rather than just one parameter such as the peak or the vector sum direction. Thus, the DI is robust to changes in the gain or width of the tuning curves and can tolerate a variety of tuning shapes. For the typical VIP neuron of Fig. 2, the mean DI (averaged across all three pairs of body orientations) for the body-fixed gaze condition was close to 1 (0.91), consistent with a body-centered representation of heading. In contrast, the mean DI for the world-fixed gaze condition was 0.37, indicating a representation intermediate between body- and world-centered, but closer to world-centered.
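The logic of the cross-covariance DI can be illustrated with the sketch below; it is not the authors' implementation. It assumes each tuning curve has already been interpolated onto a 1° grid of world-referenced headings (0° to 359°) and uses circular shifts. For each pair of curves, the shift k that maximizes cov(R_i(θ), R_j(θ + k)) is divided by the change in body orientation, so DI ≈ 1 for body-centered and DI ≈ 0 for world-centered tuning. The helper names `displacement_index` and `mean_di` are hypothetical.

```python
import numpy as np
from itertools import combinations


def displacement_index(r_i, r_j, delta_body_deg):
    """DI for one pair of tuning curves sampled at 1-degree steps (world-referenced heading).

    delta_body_deg: body orientation for r_j minus body orientation for r_i.
    """
    a = np.asarray(r_i, float) - np.mean(r_i)
    b = np.asarray(r_j, float) - np.mean(r_j)
    n = a.size
    shifts = np.arange(-(n // 2), n - n // 2)  # -180 ... 179 for n = 360
    # Circular cross-covariance cov(R_i(theta), R_j(theta + k)) for every shift k;
    # mean subtraction and the argmax make the result insensitive to overall gain
    cov = np.array([np.mean(a * np.roll(b, -k)) for k in shifts])
    k_best = shifts[np.argmax(cov)]
    return k_best / delta_body_deg


def mean_di(curves, body_orientations):
    """Average DI over all pairs of body orientations (three pairs for three orientations)."""
    pairs = combinations(range(len(curves)), 2)
    return np.mean([
        displacement_index(curves[i], curves[j], body_orientations[j] - body_orientations[i])
        for i, j in pairs
    ])
```

With three curves whose peaks move by exactly the body rotation (for example, peaks at 25°, 45°, and 65° for body orientations of −20°, 0°, and 20°), `mean_di` returns 1.0 even if the curves differ in gain; identical curves give 0.0.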

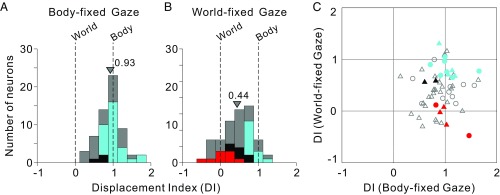

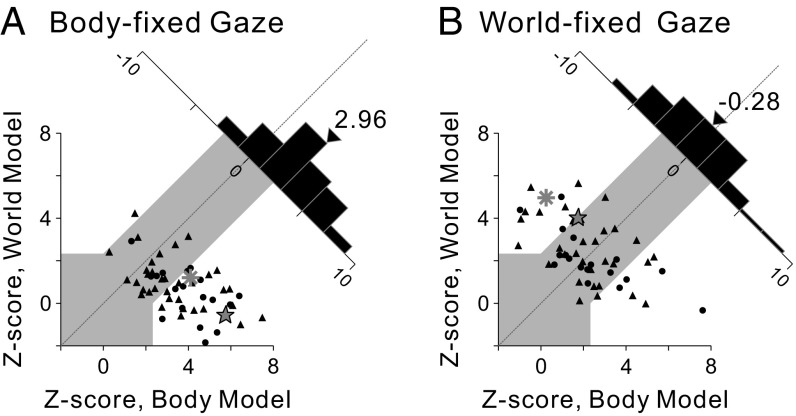

Average DI values are summarized in Fig. 3, separately for the body- and world-fixed gaze conditions (Figs. S1 and S2 summarize DI values separately for left and right body orientations). The central tendencies were computed as mean values, as the distributions did not differ significantly from normality (P = 0.32, Lilliefors test). DI values clustered around 1 in the body-fixed gaze condition (Fig. 3A), with a mean value of 0.93 ± 0.07 SE that was not significantly different from 1 (P = 0.09, t test). Thus, at the population level, the mean DI was not significantly shifted away from the expectation for a purely body-centered reference frame in the body-fixed gaze condition. Based on confidence intervals (CIs) computed by bootstrap for each neuron, we further classified each VIP neuron as body-centered, world-centered, or “intermediate” (see SI Methods for details). As color-coded in Fig. 3A, for the body-fixed gaze condition, 31 of 60 neurons were classified as body-centered (cyan), none were classified as world-centered (red), and 3 of 60 were classified as intermediate (black). The remainder could not be classified unambiguously (gray; SI Methods).
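A classification based on bootstrap confidence intervals might look like the sketch below. The exact rule is given in the SI Methods and is not reproduced here. This sketch assumes a percentile CI and a hypothetical decision rule: a CI containing 1 but not 0 is body-centered, a CI containing 0 but not 1 is world-centered, a CI lying strictly between 0 and 1 is intermediate, and anything else is unclassified. The bootstrap samples `di_boot` are assumed to come from resampling trials and recomputing the DI.

```python
import numpy as np


def classify_reference_frame(di_boot, alpha=0.05):
    """Classify one neuron from bootstrap DI samples (hypothetical rule, see lead-in)."""
    lo, hi = np.percentile(di_boot, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    has_one = lo <= 1.0 <= hi   # compatible with body-centered (DI = 1)
    has_zero = lo <= 0.0 <= hi  # compatible with world-centered (DI = 0)
    if has_one and not has_zero:
        return "body-centered"
    if has_zero and not has_one:
        return "world-centered"
    if 0.0 < lo and hi < 1.0:
        return "intermediate"
    return "unclassified"       # CI too wide (or outside [0, 1]) to decide
```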

Fig. 3.

Summary of spatial reference frames for vestibular heading tuning in VIP, as quantified by the DI. (A and B) DI values of 1 and 0 indicate body- and world-centered tuning, respectively. Data are shown for both body-fixed gaze (A; n = 60) and world-fixed gaze (B; n = 61) conditions. Cyan and red bars in A and B represent neurons that are statistically classified as body- and world-centered, respectively (see Methods for details). Black bars represent neurons that are classified as intermediate, whereas gray bars represent neurons that are unclassified. Arrowheads indicate mean DI values for each distribution. Mean values of the DI distributions for monkeys F and X were not significantly different (body-fixed gaze: P = 0.06; world-fixed gaze: P = 0.28, t tests); thus, data for A and B were pooled across monkeys. (C) Scatter plot comparing DI values for the body- and world-fixed gaze conditions. Circles and triangles denote data from monkeys F and X, respectively. Colors indicate cells classified as body-centered in both conditions (cyan), intermediate in both conditions (black), or body-centered in the body-fixed gaze condition but world-centered in the world-fixed gaze condition (red). Open symbols denote unclassified neurons (Table S1).

In the world-fixed gaze condition (Fig. 3B), DI values clustered between 0 and 1, with a mean value of 0.44 ± 0.10 (SE), which was significantly different from both 0 and 1 (P = 1.62 × 10⁻⁷ and P = 1.04 × 10⁻¹¹, respectively, t tests). Thus, at the population level, results from the world-fixed gaze condition suggested a reference frame that was, on average, intermediate between body- and world-centered. However, the reference frames of individual neurons were more variable (color code in Fig. 3B): 13 of 61 neurons were classified as body-centered (cyan), 9 of 61 as world-centered (red), 7 of 61 as intermediate (black), and the remainder were unclassified (gray). Notably, among the nine world-centered neurons (red) in the world-fixed gaze condition, five were categorized as body-centered, one as intermediate, and three as unclassified in the body-fixed gaze condition (Fig. 3C; see also Table S1). Thus, the reference frames of individual neurons can change with gaze strategy, highlighting the flexibility of reference frames of neural representations in parietal cortex.
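The population tests reported above compare the mean DI against the two reference values, 0 (world-centered) and 1 (body-centered). A minimal sketch of that comparison, assuming one-sample two-tailed t tests on the per-neuron mean DI values (the helper name `summarize_di` is hypothetical):

```python
import numpy as np
from scipy import stats


def summarize_di(di_values):
    """Mean, SEM, and one-sample t-test P values of DI against 0 and against 1."""
    di = np.asarray(di_values, float)
    mean = di.mean()
    sem = di.std(ddof=1) / np.sqrt(di.size)
    p_vs_world = stats.ttest_1samp(di, 0.0).pvalue  # H0: purely world-centered
    p_vs_body = stats.ttest_1samp(di, 1.0).pvalue   # H0: purely body-centered
    return mean, sem, p_vs_world, p_vs_body
```

A population mean that differs significantly from both 0 and 1, as in the world-fixed gaze condition, is what the text describes as an intermediate representation on average.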

Gain Effects.

Gain fields driven by a gaze signal (e.g., eye or head position) have been proposed to support neuronal computations in different reference frames (36–38). Using fits of von Mises functions, which generally provide an excellent description of heading tuning curves, we computed the ratio of response amplitudes for left and center body orientations (AL20/A0), as well as for right and center body orientations (AR20/A0). We found significant gain fields for a number of neurons (Fig. S3; see also SI Methods for details), which were similar for the two gaze conditions (P = 0.07, Wilcoxon signed rank test; Fig. S4). Notably, gaze direction (eye-in-world) varied with body orientation in the body-fixed gaze condition, whereas it was fixed in the world-fixed gaze condition. The significant correlation in gain fields between the two gaze conditions (Fig. S4; type II regression, leftward body orientation: R = 0.37, P = 8.61 × 10⁻³, slope = 2.0, 95% CI = [0.43, 6.83]; rightward body orientation: R = 0.45, P = 1.16 × 10⁻³, slope = 0.85, 95% CI = [0.47, 2.79]) suggests that the gain fields did not derive solely from gaze signals, but possibly from body-in-world orientation signals, which were the same in both conditions. There was no correlation between tuning curve shifts and gain fields on a cell-by-cell basis in either the body-fixed (P = 0.97) or the world-fixed (P = 0.55) gaze condition (Pearson correlation).
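The amplitude-ratio computation can be sketched with a four-parameter von Mises fit. This is an illustration under stated assumptions, not the SI Methods procedure. The parameterization (baseline + amplitude × exp(κ(cos(θ − θ_pref) − 1))), the heading set in `HEADINGS`, the parameter bounds, and the use of a reduced major axis (geometric mean) slope as the "type II" regression are all assumptions. Other type II variants exist, and the CIs quoted above are not reproduced here.

```python
import numpy as np
from scipy.optimize import least_squares

# Assumed set of 10 horizontal-plane headings (deg); illustrative only
HEADINGS = np.array([0, 22.5, 45, 90, 135, 180, 225, 270, 315, 337.5])


def von_mises(theta_deg, amp, kappa, pref_deg, base):
    d = np.deg2rad(theta_deg - pref_deg)
    return base + amp * np.exp(kappa * (np.cos(d) - 1.0))


def fit_von_mises(theta_deg, rates):
    """Least-squares fit; returns (amp, kappa, pref_deg, base)."""
    rates = np.asarray(rates, float)
    x0 = [rates.max() - rates.min(), 1.0, theta_deg[np.argmax(rates)], rates.min()]
    lower = [0.0, 1e-3, -np.inf, -np.inf]   # non-negative amplitude, positive concentration
    upper = [np.inf, 50.0, np.inf, np.inf]
    res = least_squares(lambda p: von_mises(theta_deg, *p) - rates, x0, bounds=(lower, upper))
    return res.x


def gain_ratio(rates_test, rates_center, theta_deg=HEADINGS):
    """Amplitude ratio, e.g. A_L20 / A_0, from separate von Mises fits."""
    return fit_von_mises(theta_deg, rates_test)[0] / fit_von_mises(theta_deg, rates_center)[0]


def rma_slope(x, y):
    """Reduced major axis slope: sign(r) * SD(y) / SD(x)."""
    r = np.corrcoef(x, y)[0, 1]
    return np.sign(r) * np.std(y, ddof=1) / np.std(x, ddof=1)
```

Applying `gain_ratio` to each neuron in both gaze conditions, and then `rma_slope` across neurons, mirrors the structure of the comparison in Fig. S4.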

Model-Based Analysis of Reference Frames.

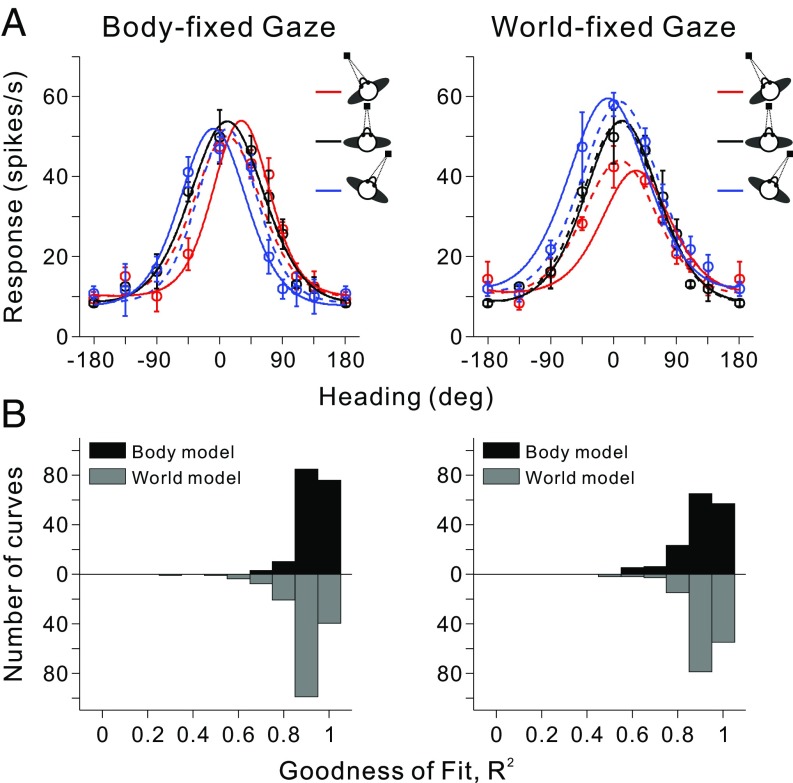

The DI metric quantifies shifts in tuning across changes in body orientation, but it may not account for other sources of variance in tuning that accompany a change in body orientation. To quantify how consistent the full dataset from each neuron was with each of the two reference frame hypotheses, heading tuning curves from either the body- or world-fixed gaze condition were fit simultaneously with body- and world-centered models by using a set of von Mises functions (Fig. 4; see SI Methods for details). In the body-centered model, the von Mises functions were shifted by the amount of body rotation; in the world-centered model, all of the von Mises functions peaked at the same heading. Both models allowed the amplitude and width of tuning curves to vary with body orientation. The goodness-of-fit of each model was measured by computing the correlation coefficient between the fitted function and the data; the corresponding R² values are shown in Fig. 4B. The median R² for the body-centered model was significantly greater (P = 1.86 × 10⁻⁸, Wilcoxon rank sum test) than that for the world-centered model in the body-fixed gaze condition (Fig. 4 B, Left). In contrast, median R² values for the two models were not significantly different (P = 0.67) in the world-fixed gaze condition (Fig. 4 B, Right), which is compatible with the finding that average DI values are intermediate between 0 and 1 in that condition. To enable meaningful comparisons between the two models, independent of the number of data points, correlation coefficients were also converted into partial correlation coefficients and normalized by using Fisher's r-to-Z transform (39, 40).
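The simultaneous fit of the two models can be sketched as below; it follows the description in the text but is not the authors' code. Assumptions: a shared preferred heading plus per-curve amplitude, concentration (width), and baseline. The body-centered model shifts each curve's peak by its body orientation, while the world-centered model does not. The function names, starting values, and bounds are hypothetical.

```python
import numpy as np
from scipy.optimize import least_squares


def predict_curves(params, theta_deg, body_deg, frame):
    """Stacked von Mises curves; the body model shifts each peak by the body orientation."""
    n = len(body_deg)
    pref = params[0]
    amp, kappa, base = params[1:1 + n], params[1 + n:1 + 2 * n], params[1 + 2 * n:1 + 3 * n]
    out = []
    for c, body in enumerate(body_deg):
        shift = body if frame == "body" else 0.0  # world model: same peak for every curve
        d = np.deg2rad(theta_deg - pref - shift)
        out.append(base[c] + amp[c] * np.exp(kappa[c] * (np.cos(d) - 1.0)))
    return np.array(out)


def fit_frame_model(theta_deg, curves, body_deg, frame):
    """Fit one model to all curves at once; returns (r, params), with R^2 = r**2."""
    theta_deg = np.asarray(theta_deg, float)
    curves = np.asarray(curves, float)
    n = len(body_deg)
    ref = int(np.argmin(np.abs(body_deg)))  # start from the curve nearest 0 deg body orientation
    pref0 = theta_deg[np.argmax(curves[ref])] - (body_deg[ref] if frame == "body" else 0.0)
    x0 = np.concatenate([[pref0], curves.max(1) - curves.min(1), np.ones(n), curves.min(1)])
    lower = np.concatenate([[-np.inf], np.zeros(n), np.full(n, 1e-3), np.full(n, -np.inf)])
    upper = np.concatenate([[np.inf], np.full(n, np.inf), np.full(n, 50.0), np.full(n, np.inf)])
    resid = lambda p: (predict_curves(p, theta_deg, body_deg, frame) - curves).ravel()
    res = least_squares(resid, x0, bounds=(lower, upper))
    fitted = predict_curves(res.x, theta_deg, body_deg, frame)
    r = np.corrcoef(fitted.ravel(), curves.ravel())[0, 1]
    return r, res.x
```

For synthetic, purely body-centered curves, the body model fits almost perfectly, whereas the world model, which is forced to use a common peak, leaves a residual.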

Fig. 4.

Model fits to vestibular heading tuning curves. (A) Heading tuning curves for an example VIP neuron (mean firing rate ± SEM) are shown for both the body-fixed (Left) and world-fixed (Right) gaze conditions. For each condition, the three tuning curves were fit simultaneously with body-centered (solid curves) and world-centered (dashed curves) models that are based on von Mises functions (see Methods for details). (B) Distributions of R² values, which measure goodness-of-fit. For each condition, black bars represent fits with the body-centered model, whereas gray bars denote fits with the world-centered model (data for the world-centered model are plotted in the downward direction for ease of comparison). Note that only neurons with significant tuning for all three body orientations have been included in this comparison (body-fixed gaze, n = 57; world-fixed gaze, n = 52). The median R² values for monkeys F and X were not significantly different for either the body-fixed gaze condition (body-centered model, P = 0.57; world-centered model, P = 0.41; Wilcoxon rank sum test) or the world-fixed gaze condition (body-centered model, P = 0.67; world-centered model, P = 0.48); thus, data were pooled across animals in these distributions.

Z scores for the two models are compared in Fig. 5 for the populations of neurons tested in the body-fixed (Fig. 5A) and world-fixed (Fig. 5B) gaze conditions. Data points falling within the gray region could not be classified statistically, but data points falling into the areas above or below the gray region were classified as being significantly better fit (P < 0.01) by the model represented on the ordinate or abscissa, respectively. In the body-fixed gaze condition (Fig. 5A), 61.4% (35 of 57) of VIP neurons were classified as body-centered, 1.8% (1 of 57) as world-centered, and 36.8% (21 of 57) were unclassified. The mean Z-score difference was 2.96, which is significantly greater than 0 (P = 1.13 × 10⁻⁶, t test). In the world-fixed gaze condition (Fig. 5B), 15.4% (8 of 52) of the neurons were classified as body-centered, 23.1% (12 of 52) as world-centered, and 61.5% (32 of 52) were unclassified (Table S2). The mean Z-score difference was −0.28, which is not significantly different from 0 (P = 0.49, t test). Thus, in line with the DI results, the model-fitting analysis indicated that vestibular heading signals in VIP are represented in a body-centered reference frame for the body-fixed gaze condition and in a range of reference frames between body- and world-centered in the world-fixed gaze condition.
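The partial-correlation and Fisher Z steps can be written out explicitly. This sketch uses the standard formulas. The partial correlation of the data with model A, controlling for model B, is (r_A − r_B·r_AB) / √((1 − r_B²)(1 − r_AB²)), and Z = atanh(r)·√(n − 3). Two elements are assumptions: the number of data points `n_points` (for example, 3 curves × 10 headings) and the decision rule, here a one-tailed criterion of about 2.33 on the Z difference for P < 0.01. The exact boundary of the gray region in Fig. 5 is defined in the SI Methods.

```python
import numpy as np
from scipy.stats import norm


def partial_corr(r_a, r_b, r_ab):
    """Partial correlation of data with model A, controlling for model B."""
    return (r_a - r_b * r_ab) / np.sqrt((1 - r_b ** 2) * (1 - r_ab ** 2))


def fisher_z(r, n):
    """Fisher r-to-Z transform scaled by its standard error, sqrt(1 / (n - 3))."""
    return np.arctanh(r) * np.sqrt(n - 3)


def classify_models(r_body, r_world, r_models, n_points, alpha=0.01):
    """r_models: correlation between the two model fits themselves (hypothetical rule below)."""
    z_body = fisher_z(partial_corr(r_body, r_world, r_models), n_points)
    z_world = fisher_z(partial_corr(r_world, r_body, r_models), n_points)
    crit = norm.ppf(1 - alpha)  # assumed one-tailed criterion on the Z difference
    diff = z_body - z_world
    if diff > crit:
        label = "body-centered"
    elif diff < -crit:
        label = "world-centered"
    else:
        label = "unclassified"
    return z_body, z_world, label
```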

Fig. 5.

Model-based classification of spatial reference frames for vestibular heading tuning. Z-scored partial correlation coefficients between the data and the two models (body- and world-centered) are shown for the body-fixed gaze condition (A; n = 57) and the world-fixed gaze condition (B; n = 52). The gray region marks the boundaries of CIs that distinguish the models statistically. Circles and triangles denote data from monkeys F and X, respectively. The star and asterisk represent the example neurons of Figs. 2 and 4, respectively. Diagonal histograms represent distributions of differences between Z scores for the two models. Arrowheads indicate the mean values for each distribution. Median values of Z-scored partial correlation coefficients were not significantly different for monkeys F and X in either the body-fixed gaze condition (body-centered model, P = 0.11; world-centered model, P = 0.19; Wilcoxon rank-sum test) or in the world-fixed gaze condition (body-centered model, P = 0.86; world-centered model, P = 0.14); thus, data were pooled across animals.

Discussion

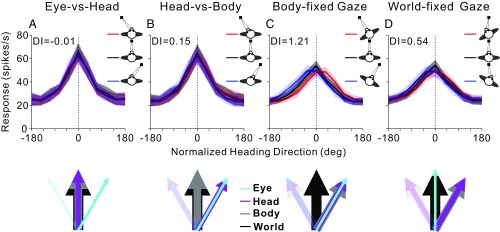

The present results, together with our previous findings that distinguished between eye-, head-, and body-centered reference frames in VIP (19), are summarized in Fig. 6 as normalized average heading tuning curves. When the monkey's body is fixed in the world (such that body and world reference frames overlap), the average heading tuning curves remain invariant (do not shift) with changes in eye-in-head or head-on-body orientation, as shown in Fig. 6 A and B (data from ref. 19). Thus, we concluded previously that vestibular heading tuning is represented in either a body- or world-centered reference frame (19). Here, we have extended these findings by dissociating body- and world-centered reference frames. When eye-in-head and head-on-body positions did not change (body-fixed gaze condition), the average heading tuning curves shifted by an amount roughly equal to the change in body orientation (Fig. 6C), confirming a body-centered representation. Strikingly, when eye-in-head position changed such that gaze remained fixed in the world (world-fixed gaze condition; Fig. 6D), the heading tuning shifted from body-centered toward world-centered. Together, these results show that vestibular heading signals in VIP are flexibly represented, ranging from body-centered to intermediate or world-centered coordinates depending on gaze strategy. During real-world navigation, it may be advantageous for heading signals to be flexibly represented in either egocentric or allocentric reference frames.

Fig. 6.

Summary of vestibular heading representation in VIP. Average normalized heading tuning curves and DI values for data from Chen et al. (19) (A and B) and for the current data (C and D). Colored lines and error bands indicate the mean firing rate ± SEM. Vertical dotted lines show the direction around which tuning curves are aligned (see SI Methods for details). The colored arrows below each graph indicate the direction of reference frames in each condition. The direction of the world reference (thick black arrow) is the same in all schematics. In A (eye vs. head), eye position (cyan arrows) was varied, while head and body positions (magenta and gray arrows) were kept constant. In B (head vs. body), eye and head positions changed together, while body orientation was fixed. In C (body-fixed gaze), eye, head, and body all varied together. In D (world-fixed gaze), head and body changed, while eye position was fixed in the world.

Gaze Modulation and Gain Field Effects.

A recent study by Clemens et al. (41) showed that self-motion perception is affected by world- or body-fixed fixation, such that perceived translations are shorter with a body-fixed than a world-fixed fixation point. Assuming that neurons of human VIP (hVIP) have properties similar to those in macaque VIP, translation would be coded relative to the body by hVIP neurons in the body-fixed fixation condition, but relative to the world in the world-fixed fixation condition of Clemens et al. (41). It is thus possible that translation distance (a world-centered variable) is underestimated in their body-fixed fixation condition. However, there is no evidence that VIP is causally involved in self-motion perception (42); thus, the contribution of gaze signals to perceived translation may arise from other cortical areas, including frontal eye fields (4), parieto-insular vestibular cortex (43), or MSTd (42).

Gain fields driven by a gaze signal have been proposed to support neuronal computations in different reference frames (36–38). In our previous study, we also found that vestibular heading tuning in VIP could be scaled by a gaze signal (19), as gain fields for the eye-vs.-head and head-vs.-body conditions were positively correlated when gaze direction was manipulated similarly in both conditions. In the current study, however, gaze direction (eye-in-world) varied with body orientation in the body-fixed gaze condition, whereas it was fixed in the world-fixed gaze condition. Nevertheless, we found a significant correlation between gain-field effects for the two task conditions (Fig. S4), suggesting that the observed gain fields do not derive solely from gaze signals, but possibly depend on signals regarding body-in-world orientation, which varied identically in our two task conditions.

Flexible and Diverse Representations of Heading.

A coding scheme in VIP that involves flexible reference frames may be dynamically updated by attended cues in the world. Indeed, in monkeys performing a visual motion detection task, attention not only modulated behavioral performance, but also neural responses in VIP (44). Furthermore, our previous results (14) and those of others (18) have suggested that, when measuring receptive fields (RFs), the spatial reference frames of visual responses in VIP could depend on how attention is allocated during stimulus presentation. If attention is directed to the fixation point, the reference frame could be eye-centered; if attention is attracted away from the fixation point toward the stimulus itself, the reference frame could shift from eye-centered to head-centered. Therefore, the vestibular representation of heading signals in VIP might also be modulated by attention. Notably, however, such a gaze (or attention)-based signal does not seem to modulate neural responses in all conditions; for example, the vestibular heading tuning did not shift with gaze direction when egocentric and allocentric reference frames overlapped (Fig. 6 A and B). Thus, the respective roles of attention and other signals (e.g., efference copy signals, visual landmarks, or spatial memory traces) in driving the flexible representation that we have observed remain unclear.

Such flexible representations of spatial information have also been reported in other systems. For example, in fMRI studies, the human middle temporal (MT) complex was described as retinotopic when attention was focused on the fixation point and spatiotopic when attention was directed to the motion stimuli themselves (45). Also, BOLD responses evoked by moving stimuli in human visual areas (including MT, MST, lateral occipital cortex, and V6r) were reported to be retinotopic when subjects performed a demanding attentive task, but spatiotopic under more unconstrained conditions (46). In a reach planning task to targets defined by visual or somatosensory stimuli, fMRI activation reflecting the motor goal in reach-coding areas was encoded in gaze-centered coordinates when targets were defined visually, but in body-centered coordinates when targets were defined by unseen proprioceptive cues (47). Furthermore, when monkeys performed a similar reaching task, area 5d neurons coded the position of the hand relative to gaze before presentation of the reach target, but switched to coding target location relative to hand position soon after target presentation (48). This result suggests that area 5d may represent postural and spatial information in the reference frame that is most pertinent at each stage of the task.

It is important to note that VIP is a multimodal area, and different sensory signals are coded in diverse spatial reference frames. Specifically, facial tactile RFs are coded in a head-centered reference frame (17), whereas auditory RFs are organized in a continuum between eye- and head-centered coordinates (49). Visual RFs and heading tuning (optic flow) are represented mainly in an eye-centered reference frame (13, 14, 49). In contrast, the present and previous (19) results suggest that vestibular heading tuning in VIP is coded in a body-centered reference frame, which can shift toward a world-centered frame depending on gaze strategy.

Flexibility and diversity in spatial reference frame representations have also been reported for a number of other sensory signals and brain areas (13–17, 19, 37, 47–57). Spatial reference frame representations show not only flexibility and diversity but also dynamic properties. For example, Lee and Groh (58) reported that the reference frame of auditory signals in primate superior colliculus neurons changes dynamically from an intermediate eye- and head-centered frame to a predominantly eye-centered frame around the time of the eye movement. An important feature of the present and previous studies is the prevalence of intermediate reference frame representations (16, 51, 52, 59–61). Although it is debated whether this diversity represents meaningless noise or a purposeful strategy, theory and computational models have proposed that broadly distributed and/or intermediate reference frames may arise naturally when the posterior parietal cortex is considered as an intermediate layer that uses basis functions to perform multidirectional coordinate transformations (62), and that they may be consistent with optimal population coding (63–65).

Potential Connections with the Hippocampal Formation.

Whereas multiple types of egocentric representations (e.g., eye-, head-, body-, or limb-centered) are commonly found in the brain, allocentric coding has typically been confined to spatial neurons in the hippocampal formation and related limbic areas. In particular, place cells (66), head direction cells (67), and grid cells (68), found in rodents (69–71), bats (72–74), and even nonhuman primates (75–77) and humans (20, 25, 78–81), are critical to space representation and navigation. In fact, during visually guided navigation in virtual reality environments, both fMRI (20, 25, 81–85) and neural recording studies (78, 86) have shown that the parietal cortex is generally coactivated with the hippocampus and/or entorhinal cortex.

Notably, there appears to be no evidence for a direct anatomical connection between the hippocampal–entorhinal circuit and VIP. In macaques, area 7a and the lateral intraparietal (LIP) area are directly anatomically linked to the hippocampal formation (87–97), but it is not clear whether VIP is directly connected to area 7a. However, VIP and LIP are interconnected (98), as are area 7a and LIP (98). Thus, VIP may connect to the hippocampal–entorhinal circuit via LIP and/or area 7a.

Therefore, outputs from the hippocampus and entorhinal cortex could be passed to posterior parietal cortex and downstream motor and premotor cortices by several pathways (99). As vestibular signals are important for spatial representations and navigation (100, 101), as well as for head orientation (71) and hippocampal spatial representations (102, 103), the body-centered representation of vestibular signals in VIP might be transformed and modulated during the world-fixed gaze condition by integrating world-centered information represented in the hippocampal–entorhinal circuit. Future studies will be necessary to address these possibilities further.

Methods

Each animal was seated with its head fixed in a primate chair, which was located inside a coil frame used to measure eye movements. The chair was secured on top of a computer-controlled yaw motor that could passively rotate the animal in the horizontal plane. The yaw motor, a rear-projection screen, and a video projector were all secured onto a six-degree-of-freedom motion platform that allowed physical translation (1). Body orientation relative to the motion platform apparatus was varied with the yaw motor to dissociate body- and world-centered reference frames. In addition, the location of the eye fixation target was manipulated to control gaze direction. In the body-fixed gaze condition, gaze direction was always aligned with body orientation; in the world-fixed gaze condition, body orientation was varied, but gaze was fixed on a world-stationary target. The sides of the coil frame were covered with a black, nonreflective material, so that the monkey could see only the local environment within the coil frame. Note, however, that background illumination from the projector allowed the animals to register multiple allocentric cues within the local environment on top of the motion platform. Thus, each animal had cues to judge its body orientation relative to the allocentric reference frame of the motion platform and coil frame. All procedures were approved by the Institutional Animal Care and Use Committee at Baylor College of Medicine and were in accordance with National Institutes of Health guidelines.
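To make the geometry of the manipulation concrete, the sketch below computes, for a single translation, the heading expressed relative to the body and relative to the eye in each gaze condition. It assumes that all angles are yaw angles in degrees measured in the same rotational direction, that the head is fixed relative to the body (so head- and body-centered coordinates coincide in this setup), and that the world-stationary target lies at 0°. The class, function names, and the ±20° body orientations are hypothetical and are not the values or code used in the study.

```python
from dataclasses import dataclass


def wrap_deg(angle):
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


@dataclass
class Trial:
    heading_world: float       # translation direction re the motion platform (deg)
    body_orient: float         # yaw of body (and chair-fixed head) re platform (deg)
    gaze: str                  # "body_fixed" or "world_fixed"
    target_world: float = 0.0  # hypothetical direction of the world-stationary target (deg)

    def gaze_world(self) -> float:
        # Body-fixed gaze follows the body; world-fixed gaze stays on the target.
        if self.gaze == "body_fixed":
            return self.body_orient
        if self.gaze == "world_fixed":
            return self.target_world
        raise ValueError(f"unknown gaze condition: {self.gaze}")

    def eye_in_head(self) -> float:
        # Eye position relative to the head (= body here).
        return wrap_deg(self.gaze_world() - self.body_orient)

    def heading_body(self) -> float:
        # Heading expressed in body-centered coordinates.
        return wrap_deg(self.heading_world - self.body_orient)

    def heading_eye(self) -> float:
        # Heading expressed in eye-centered coordinates.
        return wrap_deg(self.heading_world - self.gaze_world())


for gaze in ("body_fixed", "world_fixed"):
    for body in (-20.0, 0.0, 20.0):  # hypothetical body orientations (deg)
        t = Trial(heading_world=0.0, body_orient=body, gaze=gaze)
        print(gaze, body, t.eye_in_head(), t.heading_body(), t.heading_eye())
```

In this toy geometry, heading relative to the body changes with body orientation in both gaze conditions, whereas eye-centered heading equals world-centered heading (with the target at 0°) only in the world-fixed condition.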

We computed an average displacement index (DI) to quantify the shift of heading tuning curves relative to the change in body orientation for each condition (body- and world-fixed gaze). For each condition, tuning curves were also fitted simultaneously with body- and world-centered models. The reference frames of vestibular heading signals in VIP are summarized in Fig. 6, including data from our previous study (19) as well as the present study. Full methods and analyses can be found in SI Methods.
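The exact DI definition is given in SI Methods; the sketch below assumes a cross-correlation convention like that of earlier heading reference-frame studies (e.g., ref. 15): the circular shift that maximizes the cross-correlation between two tuning curves, divided by the change in body orientation. It further assumes that tuning curves are plotted against heading in platform (world) coordinates, so that under this convention a DI near 1 indicates a body-centered and a DI near 0 a world-centered representation. The helper names, the pairwise averaging scheme, the 1° sampling, and the simulated neurons are all hypothetical.

```python
import numpy as np


def circular_shift_deg(curve_ref, curve_new, step_deg=1.0):
    """Shift (deg) that best aligns curve_new with curve_ref by circular cross-correlation.

    Both curves must be sampled at the same evenly spaced headings spanning 360 deg.
    A positive value means curve_new is curve_ref displaced toward larger headings.
    """
    a = np.asarray(curve_ref, float) - np.mean(curve_ref)
    b = np.asarray(curve_new, float) - np.mean(curve_new)
    # np.roll(a, k)[i] == a[i - k]: a displaced by k samples toward larger headings.
    cc = np.array([np.dot(np.roll(a, k), b) for k in range(len(a))])
    shift = int(np.argmax(cc)) * step_deg
    return (shift + 180.0) % 360.0 - 180.0  # wrap to [-180, 180)


def displacement_index(curve_ref, curve_new, delta_orient_deg, step_deg=1.0):
    """Tuning shift divided by the change in body orientation (hypothetical helper)."""
    return circular_shift_deg(curve_ref, curve_new, step_deg) / delta_orient_deg


def average_di(curves_by_orient, step_deg=1.0):
    """Mean DI over all pairs of body orientations (one possible averaging scheme)."""
    orients = sorted(curves_by_orient)
    dis = [
        displacement_index(curves_by_orient[o1], curves_by_orient[o2], o2 - o1, step_deg)
        for i, o1 in enumerate(orients)
        for o2 in orients[i + 1:]
    ]
    return float(np.mean(dis))


# Simulated tuning curves vs. heading in platform (world) coordinates, 1-deg resolution.
headings = np.arange(0.0, 360.0, 1.0)


def tuning(pref_deg):
    """Hypothetical von Mises-shaped heading tuning curve (spikes/s)."""
    return 10.0 + 40.0 * np.exp(2.0 * (np.cos(np.radians(headings - pref_deg)) - 1.0))


body_orients = (-20.0, 0.0, 20.0)                              # hypothetical (deg)
body_cell = {o: tuning(90.0 + o) for o in body_orients}        # shifts fully with the body
world_cell = {o: tuning(90.0) for o in body_orients}           # does not shift
mixed_cell = {o: tuning(90.0 + 0.5 * o) for o in body_orients} # shifts half as much

for name, cell in [("body", body_cell), ("world", world_cell), ("mixed", mixed_cell)]:
    print(name, round(average_di(cell), 3))
```

Real tuning curves are sampled more coarsely and are noisy; this sketch omits interpolation, the model fits, and any statistical test.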

Acknowledgments

We thank Amanda Turner and Jing Lin for excellent technical assistance. This study was supported by National Natural Science Foundation of China Grant 31471047 and Fundamental Research Funds for the Central Universities Grant 2015QN81006 (to X.C.); and by National Institutes of Health Grants R01-DC014678 (to D.E.A.) and R01-EY016178 (to G.C.D.).

Footnotes

The authors declare no conflict of interest.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1715625115/-/DCSupplemental.

References

- 1. Gu Y, Watkins PV, Angelaki DE, DeAngelis GC. Visual and nonvisual contributions to three-dimensional heading selectivity in the medial superior temporal area. J Neurosci. 2006;26:73–85. doi: 10.1523/JNEUROSCI.2356-05.2006.

- 2. Chen A, DeAngelis GC, Angelaki DE. Representation of vestibular and visual cues to self-motion in ventral intraparietal cortex. J Neurosci. 2011;31:12036–12052. doi: 10.1523/JNEUROSCI.0395-11.2011.

- 3. Chen A, DeAngelis GC, Angelaki DE. Macaque parieto-insular vestibular cortex: Responses to self-motion and optic flow. J Neurosci. 2010;30:3022–3042. doi: 10.1523/JNEUROSCI.4029-09.2010.

- 4. Gu Y, Cheng Z, Yang L, DeAngelis GC, Angelaki DE. Multisensory convergence of visual and vestibular heading cues in the pursuit area of the frontal eye field. Cereb Cortex. 2016;26:3785–3801. doi: 10.1093/cercor/bhv183.

- 5. Fan RH, Liu S, DeAngelis GC, Angelaki DE. Heading tuning in macaque area V6. J Neurosci. 2015;35:16303–16314. doi: 10.1523/JNEUROSCI.2903-15.2015.

- 6. Liu S, Yakusheva T, DeAngelis GC, Angelaki DE. Direction discrimination thresholds of vestibular and cerebellar nuclei neurons. J Neurosci. 2010;30:439–448. doi: 10.1523/JNEUROSCI.3192-09.2010.

- 7. Bryan AS, Angelaki DE. Optokinetic and vestibular responsiveness in the macaque rostral vestibular and fastigial nuclei. J Neurophysiol. 2009;101:714–720. doi: 10.1152/jn.90612.2008.

- 8. Shaikh AG, Meng H, Angelaki DE. Multiple reference frames for motion in the primate cerebellum. J Neurosci. 2004;24:4491–4497. doi: 10.1523/JNEUROSCI.0109-04.2004.

- 9. Yakusheva TA, et al. Purkinje cells in posterior cerebellar vermis encode motion in an inertial reference frame. Neuron. 2007;54:973–985. doi: 10.1016/j.neuron.2007.06.003.

- 10. Shaikh AG, Ghasia FF, Dickman JD, Angelaki DE. Properties of cerebellar fastigial neurons during translation, rotation, and eye movements. J Neurophysiol. 2005;93:853–863. doi: 10.1152/jn.00879.2004.

- 11. Meng H, May PJ, Dickman JD, Angelaki DE. Vestibular signals in primate thalamus: Properties and origins. J Neurosci. 2007;27:13590–13602. doi: 10.1523/JNEUROSCI.3931-07.2007.

- 12. Morrone MC, et al. A cortical area that responds specifically to optic flow, revealed by fMRI. Nat Neurosci. 2000;3:1322–1328. doi: 10.1038/81860.

- 13. Chen X, DeAngelis GC, Angelaki DE. Eye-centered representation of optic flow tuning in the ventral intraparietal area. J Neurosci. 2013;33:18574–18582. doi: 10.1523/JNEUROSCI.2837-13.2013.

- 14. Chen X, DeAngelis GC, Angelaki DE. Eye-centered visual receptive fields in the ventral intraparietal area. J Neurophysiol. 2014;112:353–361. doi: 10.1152/jn.00057.2014.

- 15. Fetsch CR, Wang S, Gu Y, DeAngelis GC, Angelaki DE. Spatial reference frames of visual, vestibular, and multimodal heading signals in the dorsal subdivision of the medial superior temporal area. J Neurosci. 2007;27:700–712. doi: 10.1523/JNEUROSCI.3553-06.2007.

- 16. Mullette-Gillman OA, Cohen YE, Groh JM. Eye-centered, head-centered, and complex coding of visual and auditory targets in the intraparietal sulcus. J Neurophysiol. 2005;94:2331–2352. doi: 10.1152/jn.00021.2005.

- 17. Avillac M, Denève S, Olivier E, Pouget A, Duhamel JR. Reference frames for representing visual and tactile locations in parietal cortex. Nat Neurosci. 2005;8:941–949. doi: 10.1038/nn1480.

- 18. Duhamel JR, Bremmer F, Ben Hamed S, Graf W. Spatial invariance of visual receptive fields in parietal cortex neurons. Nature. 1997;389:845–848. doi: 10.1038/39865.

- 19. Chen X, DeAngelis GC, Angelaki DE. Diverse spatial reference frames of vestibular signals in parietal cortex. Neuron. 2013;80:1310–1321. doi: 10.1016/j.neuron.2013.09.006.

- 20. Maguire EA, et al. Knowing where and getting there: A human navigation network. Science. 1998;280:921–924. doi: 10.1126/science.280.5365.921.

- 21. Gauthier B, van Wassenhove V. Time is not space: Core computations and domain-specific networks for mental travels. J Neurosci. 2016;36:11891–11903. doi: 10.1523/JNEUROSCI.1400-16.2016.

- 22. Sherrill KR, et al. Functional connections between optic flow areas and navigationally responsive brain regions during goal-directed navigation. Neuroimage. 2015;118:386–396. doi: 10.1016/j.neuroimage.2015.06.009.

- 23. Rosenbaum RS, Ziegler M, Winocur G, Grady CL, Moscovitch M. “I have often walked down this street before”: fMRI studies on the hippocampus and other structures during mental navigation of an old environment. Hippocampus. 2004;14:826–835. doi: 10.1002/hipo.10218.

- 24. Byrne P, Becker S. Modeling mental navigation in scenes with multiple objects. Neural Comput. 2004;16:1851–1872. doi: 10.1162/0899766041336468.

- 25. Spiers HJ, Maguire EA. A navigational guidance system in the human brain. Hippocampus. 2007;17:618–626. doi: 10.1002/hipo.20298.

- 26. Gramann K, et al. Human brain dynamics accompanying use of egocentric and allocentric reference frames during navigation. J Cogn Neurosci. 2010;22:2836–2849. doi: 10.1162/jocn.2009.21369.

- 27. Wolbers T, Wiener JM, Mallot HA, Büchel C. Differential recruitment of the hippocampus, medial prefrontal cortex, and the human motion complex during path integration in humans. J Neurosci. 2007;27:9408–9416. doi: 10.1523/JNEUROSCI.2146-07.2007.

- 28. Fidalgo C, Martin CB. The hippocampus contributes to allocentric spatial memory through coherent scene representations. J Neurosci. 2016;36:2555–2557. doi: 10.1523/JNEUROSCI.4548-15.2016.

- 29. Guderian S, et al. Hippocampal volume reduction in humans predicts impaired allocentric spatial memory in virtual-reality navigation. J Neurosci. 2015;35:14123–14131. doi: 10.1523/JNEUROSCI.0801-15.2015.

- 30. Gomez A, Cerles M, Rousset S, Rémy C, Baciu M. Differential hippocampal and retrosplenial involvement in egocentric-updating, rotation, and allocentric processing during online spatial encoding: An fMRI study. Front Hum Neurosci. 2014;8:150. doi: 10.3389/fnhum.2014.00150.

- 31. Suthana NA, Ekstrom AD, Moshirvaziri S, Knowlton B, Bookheimer SY. Human hippocampal CA1 involvement during allocentric encoding of spatial information. J Neurosci. 2009;29:10512–10519. doi: 10.1523/JNEUROSCI.0621-09.2009.

- 32. Wilber AA, Clark BJ, Forster TC, Tatsuno M, McNaughton BL. Interaction of egocentric and world-centered reference frames in the rat posterior parietal cortex. J Neurosci. 2014;34:5431–5446. doi: 10.1523/JNEUROSCI.0511-14.2014.

- 33. Whitlock JR, Sutherland RJ, Witter MP, Moser MB, Moser EI. Navigating from hippocampus to parietal cortex. Proc Natl Acad Sci USA. 2008;105:14755–14762. doi: 10.1073/pnas.0804216105.

- 34. Save E, Poucet B. Hippocampal-parietal cortical interactions in spatial cognition. Hippocampus. 2000;10:491–499. doi: 10.1002/1098-1063(2000)10:4<491::AID-HIPO16>3.0.CO;2-0.

- 35. Oess T, Krichmar JL, Röhrbein F. A computational model for spatial navigation based on reference frames in the hippocampus, retrosplenial cortex, and posterior parietal cortex. Front Neurorobot. 2017;11:4. doi: 10.3389/fnbot.2017.00004.

- 36. Brotchie PR, Andersen RA, Snyder LH, Goodman SJ. Head position signals used by parietal neurons to encode locations of visual stimuli. Nature. 1995;375:232–235. doi: 10.1038/375232a0.

- 37. Snyder LH, Grieve KL, Brotchie P, Andersen RA. Separate body- and world-referenced representations of visual space in parietal cortex. Nature. 1998;394:887–891. doi: 10.1038/29777.

- 38. Cohen YE, Andersen RA. A common reference frame for movement plans in the posterior parietal cortex. Nat Rev Neurosci. 2002;3:553–562. doi: 10.1038/nrn873.

- 39. Angelaki DE, Shaikh AG, Green AM, Dickman JD. Neurons compute internal models of the physical laws of motion. Nature. 2004;430:560–564. doi: 10.1038/nature02754.

- 40. Smith MA, Majaj NJ, Movshon JA. Dynamics of motion signaling by neurons in macaque area MT. Nat Neurosci. 2005;8:220–228. doi: 10.1038/nn1382.

- 41. Clemens IA, Selen LP, Pomante A, MacNeilage PR, Medendorp WP. Eye movements in darkness modulate self-motion perception. eNeuro. 2017;4:ENEURO.0211-16.2016. doi: 10.1523/ENEURO.0211-16.2016.

- 42. Chen A, Gu Y, Liu S, DeAngelis GC, Angelaki DE. Evidence for a causal contribution of macaque vestibular, but not intraparietal, cortex to heading perception. J Neurosci. 2016;36:3789–3798. doi: 10.1523/JNEUROSCI.2485-15.2016.

- 43. Gu Y, DeAngelis GC, Angelaki DE. Causal links between dorsal medial superior temporal area neurons and multisensory heading perception. J Neurosci. 2012;32:2299–2313. doi: 10.1523/JNEUROSCI.5154-11.2012.

- 44. Cook EP, Maunsell JH. Attentional modulation of behavioral performance and neuronal responses in middle temporal and ventral intraparietal areas of macaque monkey. J Neurosci. 2002;22:1994–2004. doi: 10.1523/JNEUROSCI.22-05-01994.2002.

- 45. Burr DC, Morrone MC. Spatiotopic coding and remapping in humans. Philos Trans R Soc Lond B Biol Sci. 2011;366:504–515. doi: 10.1098/rstb.2010.0244.

- 46. Crespi S, et al. Spatiotopic coding of BOLD signal in human visual cortex depends on spatial attention. PLoS One. 2011;6:e21661. doi: 10.1371/journal.pone.0021661.

- 47. Bernier PM, Grafton ST. Human posterior parietal cortex flexibly determines reference frames for reaching based on sensory context. Neuron. 2010;68:776–788. doi: 10.1016/j.neuron.2010.11.002.

- 48. Bremner LR, Andersen RA. Temporal analysis of reference frames in parietal cortex area 5d during reach planning. J Neurosci. 2014;34:5273–5284. doi: 10.1523/JNEUROSCI.2068-13.2014.

- 49. Schlack A, Sterbing-D’Angelo SJ, Hartung K, Hoffmann KP, Bremmer F. Multisensory space representations in the macaque ventral intraparietal area. J Neurosci. 2005;25:4616–4625. doi: 10.1523/JNEUROSCI.0455-05.2005.

- 50. Crowe DA, Averbeck BB, Chafee MV. Neural ensemble decoding reveals a correlate of viewer- to object-centered spatial transformation in monkey parietal cortex. J Neurosci. 2008;28:5218–5228. doi: 10.1523/JNEUROSCI.5105-07.2008.

- 51. Rosenberg A, Angelaki DE. Gravity influences the visual representation of object tilt in parietal cortex. J Neurosci. 2014;34:14170–14180. doi: 10.1523/JNEUROSCI.2030-14.2014.

- 52. Metzger RR, Mullette-Gillman OA, Underhill AM, Cohen YE, Groh JM. Auditory saccades from different eye positions in the monkey: Implications for coordinate transformations. J Neurophysiol. 2004;92:2622–2627. doi: 10.1152/jn.00326.2004.

- 53. Mullette-Gillman OA, Cohen YE, Groh JM. Motor-related signals in the intraparietal cortex encode locations in a hybrid, rather than eye-centered reference frame. Cereb Cortex. 2009;19:1761–1775. doi: 10.1093/cercor/bhn207.

- 54. Pesaran B, Nelson MJ, Andersen RA. Dorsal premotor neurons encode the relative position of the hand, eye, and goal during reach planning. Neuron. 2006;51:125–134. doi: 10.1016/j.neuron.2006.05.025.

- 55. McGuire LM, Sabes PN. Heterogeneous representations in the superior parietal lobule are common across reaches to visual and proprioceptive targets. J Neurosci. 2011;31:6661–6673. doi: 10.1523/JNEUROSCI.2921-10.2011.

- 56. Sereno MI, Huang RS. A human parietal face area contains aligned head-centered visual and tactile maps. Nat Neurosci. 2006;9:1337–1343. doi: 10.1038/nn1777.

- 57. Leoné FT, Monaco S, Henriques DY, Toni I, Medendorp WP. Flexible reference frames for grasp planning in human parietofrontal cortex. eNeuro. 2015;2:ENEURO.0008-15.2015. doi: 10.1523/ENEURO.0008-15.2015.

- 58. Lee J, Groh JM. Auditory signals evolve from hybrid- to eye-centered coordinates in the primate superior colliculus. J Neurophysiol. 2012;108:227–242. doi: 10.1152/jn.00706.2011.

- 59. Maier JX, Groh JM. Multisensory guidance of orienting behavior. Hear Res. 2009;258:106–112. doi: 10.1016/j.heares.2009.05.008.

- 60. Batista AP, et al. Reference frames for reach planning in macaque dorsal premotor cortex. J Neurophysiol. 2007;98:966–983. doi: 10.1152/jn.00421.2006.

- 61. Chang SW, Snyder LH. Idiosyncratic and systematic aspects of spatial representations in the macaque parietal cortex. Proc Natl Acad Sci USA. 2010;107:7951–7956. doi: 10.1073/pnas.0913209107.

- 62. Pouget A, Snyder LH. Computational approaches to sensorimotor transformations. Nat Neurosci. 2000;3:1192–1198. doi: 10.1038/81469.

- 63. Deneve S, Latham PE, Pouget A. Efficient computation and cue integration with noisy population codes. Nat Neurosci. 2001;4:826–831. doi: 10.1038/90541.

- 64. Deneve S, Pouget A. Bayesian multisensory integration and cross-modal spatial links. J Physiol Paris. 2004;98:249–258. doi: 10.1016/j.jphysparis.2004.03.011.

- 65. Pouget A, Deneve S, Duhamel JR. A computational perspective on the neural basis of multisensory spatial representations. Nat Rev Neurosci. 2002;3:741–747. doi: 10.1038/nrn914.

- 66. O’Keefe J, Dostrovsky J. The hippocampus as a spatial map. Preliminary evidence from unit activity in the freely-moving rat. Brain Res. 1971;34:171–175. doi: 10.1016/0006-8993(71)90358-1.

- 67. Taube JS, Muller RU, Ranck JB Jr. Head-direction cells recorded from the postsubiculum in freely moving rats. I. Description and quantitative analysis. J Neurosci. 1990;10:420–435. doi: 10.1523/JNEUROSCI.10-02-00420.1990.

- 68. Hafting T, Fyhn M, Molden S, Moser MB, Moser EI. Microstructure of a spatial map in the entorhinal cortex. Nature. 2005;436:801–806. doi: 10.1038/nature03721.

- 69. Rowland DC, Roudi Y, Moser MB, Moser EI. Ten years of grid cells. Annu Rev Neurosci. 2016;39:19–40. doi: 10.1146/annurev-neuro-070815-013824.

- 70. Barry C, et al. The boundary vector cell model of place cell firing and spatial memory. Rev Neurosci. 2006;17:71–97. doi: 10.1515/revneuro.2006.17.1-2.71.

- 71. Taube JS. The head direction signal: Origins and sensory-motor integration. Annu Rev Neurosci. 2007;30:181–207. doi: 10.1146/annurev.neuro.29.051605.112854.

- 72. Rubin A, Yartsev MM, Ulanovsky N. Encoding of head direction by hippocampal place cells in bats. J Neurosci. 2014;34:1067–1080. doi: 10.1523/JNEUROSCI.5393-12.2014.

- 73. Yartsev MM, Ulanovsky N. Representation of three-dimensional space in the hippocampus of flying bats. Science. 2013;340:367–372. doi: 10.1126/science.1235338.

- 74. Yartsev MM, Witter MP, Ulanovsky N. Grid cells without theta oscillations in the entorhinal cortex of bats. Nature. 2011;479:103–107. doi: 10.1038/nature10583.

- 75. Killian NJ, Jutras MJ, Buffalo EA. A map of visual space in the primate entorhinal cortex. Nature. 2012;491:761–764. doi: 10.1038/nature11587.

- 76. Matsumura N, et al. Spatial- and task-dependent neuronal responses during real and virtual translocation in the monkey hippocampal formation. J Neurosci. 1999;19:2381–2393. doi: 10.1523/JNEUROSCI.19-06-02381.1999.

- 77. Robertson RG, Rolls ET, Georges-François P, Panzeri S. Head direction cells in the primate pre-subiculum. Hippocampus. 1999;9:206–219. doi: 10.1002/(SICI)1098-1063(1999)9:3<206::AID-HIPO2>3.0.CO;2-H.

- 78. Jacobs J, et al. Direct recordings of grid-like neuronal activity in human spatial navigation. Nat Neurosci. 2013;16:1188–1190. doi: 10.1038/nn.3466.

- 79. Navarro Schröder T, Haak KV, Zaragoza Jimenez NI, Beckmann CF, Doeller CF. Functional topography of the human entorhinal cortex. eLife. 2015;4:e06738. doi: 10.7554/eLife.06738.

- 80. Maass A, Berron D, Libby LA, Ranganath C, Düzel E. Functional subregions of the human entorhinal cortex. eLife. 2015;4:e06426. doi: 10.7554/eLife.06426.

- 81. Brown TI, et al. Prospective representation of navigational goals in the human hippocampus. Science. 2016;352:1323–1326. doi: 10.1126/science.aaf0784.

- 82. Baumann O, Mattingley JB. Medial parietal cortex encodes perceived heading direction in humans. J Neurosci. 2010;30:12897–12901. doi: 10.1523/JNEUROSCI.3077-10.2010.

- 83. Doeller CF, Barry C, Burgess N. Evidence for grid cells in a human memory network. Nature. 2010;463:657–661. doi: 10.1038/nature08704.

- 84. Grön G, Wunderlich AP, Spitzer M, Tomczak R, Riepe MW. Brain activation during human navigation: Gender-different neural networks as substrate of performance. Nat Neurosci. 2000;3:404–408. doi: 10.1038/73980.

- 85. Sherrill KR, et al. Hippocampus and retrosplenial cortex combine path integration signals for successful navigation. J Neurosci. 2013;33:19304–19313. doi: 10.1523/JNEUROSCI.1825-13.2013.

- 86. Sato N, Sakata H, Tanaka YL, Taira M. Navigation-associated medial parietal neurons in monkeys. Proc Natl Acad Sci USA. 2006;103:17001–17006. doi: 10.1073/pnas.0604277103.

- 87. Lavenex P, Suzuki WA, Amaral DG. Perirhinal and parahippocampal cortices of the macaque monkey: Projections to the neocortex. J Comp Neurol. 2002;447:394–420. doi: 10.1002/cne.10243.

- 88. Suzuki WA, Amaral DG. Perirhinal and parahippocampal cortices of the macaque monkey: Cortical afferents. J Comp Neurol. 1994;350:497–533. doi: 10.1002/cne.903500402.

- 89. Andersen RA, Asanuma C, Essick G, Siegel RM. Corticocortical connections of anatomically and physiologically defined subdivisions within the inferior parietal lobule. J Comp Neurol. 1990;296:65–113. doi: 10.1002/cne.902960106.

- 90. Insausti R, Amaral DG. Entorhinal cortex of the monkey: IV. Topographical and laminar organization of cortical afferents. J Comp Neurol. 2008;509:608–641. doi: 10.1002/cne.21753.

- 91. Muñoz M, Insausti R. Cortical efferents of the entorhinal cortex and the adjacent parahippocampal region in the monkey (Macaca fascicularis). Eur J Neurosci. 2005;22:1368–1388. doi: 10.1111/j.1460-9568.2005.04299.x.

- 92. Insausti R, Muñoz M. Cortical projections of the non-entorhinal hippocampal formation in the cynomolgus monkey (Macaca fascicularis). Eur J Neurosci. 2001;14:435–451. doi: 10.1046/j.0953-816x.2001.01662.x.

- 93. Ding SL, Van Hoesen G, Rockland KS. Inferior parietal lobule projections to the presubiculum and neighboring ventromedial temporal cortical areas. J Comp Neurol. 2000;425:510–530. doi: 10.1002/1096-9861(20001002)425:4<510::aid-cne4>3.0.co;2-r.

- 94. Rockland KS, Van Hoesen GW. Some temporal and parietal cortical connections converge in CA1 of the primate hippocampus. Cereb Cortex. 1999;9:232–237. doi: 10.1093/cercor/9.3.232.

- 95. Cavada C, Goldman-Rakic PS. Posterior parietal cortex in rhesus monkey: I. Parcellation of areas based on distinctive limbic and sensory corticocortical connections. J Comp Neurol. 1989;287:393–421. doi: 10.1002/cne.902870402.

- 96. Seltzer B, Pandya DN. Further observations on parieto-temporal connections in the rhesus monkey. Exp Brain Res. 1984;55:301–312. doi: 10.1007/BF00237280.

- 97. Blatt GJ, Andersen RA, Stoner GR. Visual receptive field organization and cortico-cortical connections of the lateral intraparietal area (area LIP) in the macaque. J Comp Neurol. 1990;299:421–445. doi: 10.1002/cne.902990404.

- 98. Lewis JW, Van Essen DC. Corticocortical connections of visual, sensorimotor, and multimodal processing areas in the parietal lobe of the macaque monkey. J Comp Neurol. 2000;428:112–137. doi: 10.1002/1096-9861(20001204)428:1<112::aid-cne8>3.0.co;2-9.

- 99. Kobayashi Y, Amaral DG. Macaque monkey retrosplenial cortex: III. Cortical efferents. J Comp Neurol. 2007;502:810–833. doi: 10.1002/cne.21346.

- 100. Andersen RA, Shenoy KV, Snyder LH, Bradley DC, Crowell JA. The contributions of vestibular signals to the representations of space in the posterior parietal cortex. Ann N Y Acad Sci. 1999;871:282–292. doi: 10.1111/j.1749-6632.1999.tb09192.x.

- 101. Angelaki DE, Cullen KE. Vestibular system: The many facets of a multimodal sense. Annu Rev Neurosci. 2008;31:125–150. doi: 10.1146/annurev.neuro.31.060407.125555.

- 102. Stackman RW, Clark AS, Taube JS. Hippocampal spatial representations require vestibular input. Hippocampus. 2002;12:291–303. doi: 10.1002/hipo.1112.

- 103. Rancz EA, et al. Widespread vestibular activation of the rodent cortex. J Neurosci. 2015;35:5926–5934. doi: 10.1523/JNEUROSCI.1869-14.2015.
