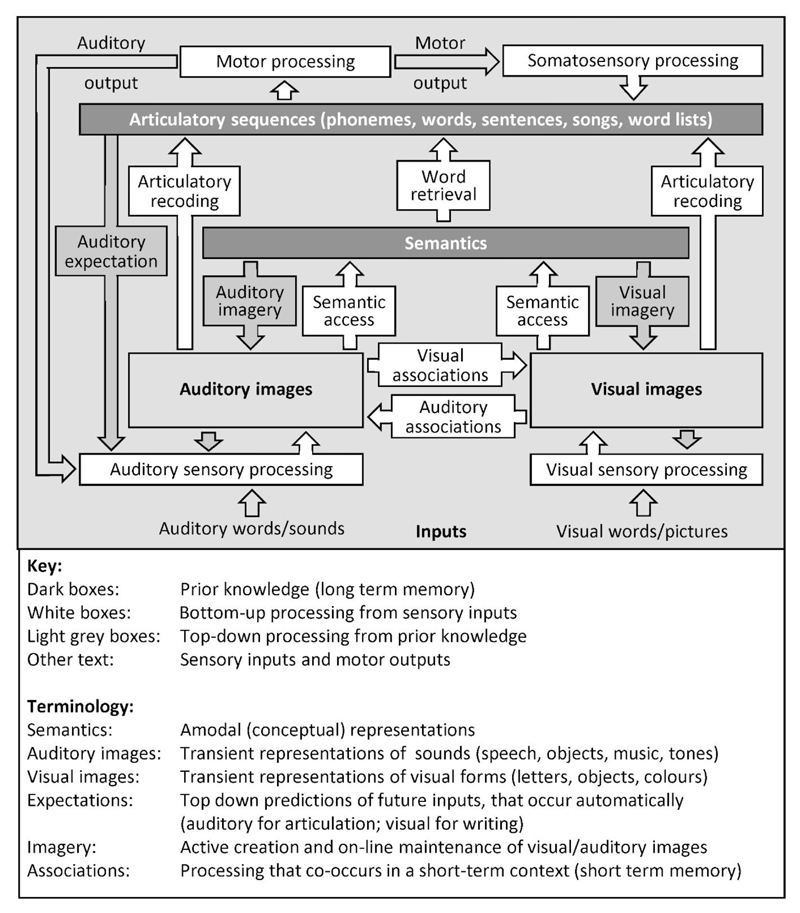

Figure 6. A physiologically constrained model of word processing.

The model describes processing that is required for speech recognition but is not specific to it. Incoming visual or auditory stimuli (e.g. a written or spoken word) are first processed in the primary sensory areas of the brain. By integrating these sensory features with prior knowledge, we form a visual or auditory mental image of the presented stimulus, of which the subject may or may not be aware. Auditory images of speech are equivalent to phonological (input) representations, but the model uses generic terms to emphasize that the same brain regions are also involved in auditory images of non-speech sounds (Dick et al., 2007; Leech et al., 2009; Price et al., 2005; Saygin et al., 2003). If the sensory inputs carry semantic cues (e.g. familiar words, pictures of familiar objects or sounds of familiar objects), semantic associations can be retrieved and linked to the articulatory patterns associated with the word or object name (word retrieval stage). If no semantic cues are available, articulatory plans can be retrieved only from the non-semantic components of speech stimuli, e.g. the sublexical parts of an unfamiliar pseudoword (a pronounceable nonword). Finally, when the task involves a speech response, the articulatory plans drive motor activity in the face, mouth and larynx. This in turn generates auditory and somatosensory processing (i.e. we hear the speech we produce and feel the movement of the speech articulators). This model was adapted from that in Price (2012) and updated with Philipp Ludersdorfer and Marion Oberhuber.