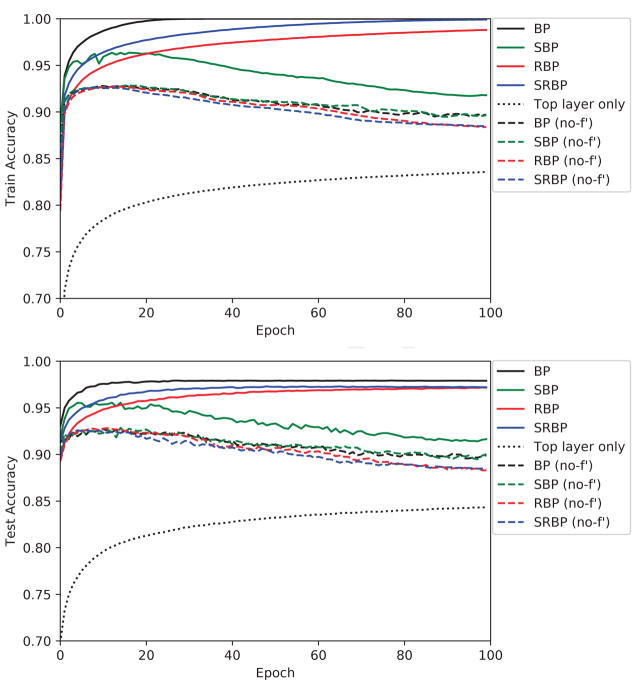

Figure 1.

MNIST training (upper) and test (lower) accuracy as a function of epoch for nine learning algorithms: backpropagation (BP), skip BP (SBP), random BP (RBP), skip random BP (SRBP), the variant of each of these four in which the error signal is not multiplied by the derivative of the post-synaptic transfer function (no-f′), and the case where only the top layer is trained while the lower-layer weights are held fixed (Top Layer Only). These algorithms differ only in how they backpropagate error signals to the lower layers; the top layer is always updated by the standard gradient descent rule. Each model is trained five times from different weight initializations, and the curves show the mean trajectory over the five runs.
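The caption says the algorithms differ only in the backward pass. The pure-Python sketch below makes that concrete for BP, RBP, SRBP, their no-f′ variants, and Top Layer Only. It is illustrative and not the authors' code. Several choices are assumptions made for the example: tanh hidden units, a linear output with squared error, a toy network with two hidden layers, and the feedback-matrix shapes. The helper names (`hidden_deltas`, `update`, `B_rbp`, `C_srbp`) are also hypothetical. In RBP, a fixed random matrix replaces each transposed forward matrix. In SRBP, a fixed random matrix sends the output error straight to each hidden layer. SBP is left out because the caption does not specify its backward matrices.

```python
import math
import random


def matvec(M, v):
    """Multiply a matrix M (list of rows) by a vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def transpose(M):
    """Return the transpose of M as a list of rows."""
    return [list(col) for col in zip(*M)]


def rand_matrix(rows, cols, rng):
    """Random matrix with Gaussian entries (scale 0.5 chosen arbitrarily)."""
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


def forward(W, x):
    """Return the pre-activations S of every layer (tanh hidden units, linear output)."""
    S, h = [], x
    for k, Wk in enumerate(W):
        s = matvec(Wk, h)
        S.append(s)
        h = s if k == len(W) - 1 else [math.tanh(v) for v in s]
    return S


def hidden_deltas(rule, W, B, S, d_out, no_fprime=False):
    """Error signals for each hidden layer under 'BP', 'RBP' or 'SRBP'.

    B[h] is a fixed random feedback matrix for hidden layer h (assumed layout):
      RBP:  (size of layer h) x (size of layer h + 1), used in place of W[h + 1]^T
      SRBP: (size of layer h) x (output size), carries d_out directly to layer h
    no_fprime=True drops the f'(S) factor (the "no-f'" variants).
    """
    deltas = [None] * (len(W) - 1)
    upper = d_out  # error signal of the layer above
    for h in reversed(range(len(W) - 1)):
        if rule == "BP":
            back = matvec(transpose(W[h + 1]), upper)
        elif rule == "RBP":
            back = matvec(B[h], upper)
        elif rule == "SRBP":
            back = matvec(B[h], d_out)
        else:
            raise ValueError(f"unknown rule: {rule}")
        if no_fprime:
            fprime = [1.0] * len(back)
        else:
            fprime = [1.0 - math.tanh(s) ** 2 for s in S[h]]  # tanh'(S)
        deltas[h] = [g * b for g, b in zip(fprime, back)]
        upper = deltas[h]
    return deltas


def update(W, x, S, d_out, deltas, lr=0.1, top_only=False):
    """In-place step dW[k] = -lr * outer(delta_k, input_k).

    The top layer always uses the true output error d_out; with top_only=True
    the lower layers are left untouched ("Top Layer Only").
    """
    inputs = [x] + [[math.tanh(v) for v in s] for s in S[:-1]]
    layer_deltas = deltas + [d_out]
    for k, Wk in enumerate(W):
        if top_only and k < len(W) - 1:
            continue
        for i, row in enumerate(Wk):
            for j in range(len(row)):
                row[j] -= lr * layer_deltas[k][i] * inputs[k][j]


# Hypothetical toy network: 4 inputs, hidden layers of 5 and 3 units, 2 outputs.
rng = random.Random(0)
sizes = [4, 5, 3, 2]
W = [rand_matrix(sizes[k + 1], sizes[k], rng) for k in range(3)]
B_rbp = [rand_matrix(sizes[h + 1], sizes[h + 2], rng) for h in range(2)]
C_srbp = [rand_matrix(sizes[h + 1], sizes[-1], rng) for h in range(2)]

x, target = [0.5, -1.0, 0.25, 0.0], [1.0, 0.0]
S = forward(W, x)
d_out = [o - t for o, t in zip(S[-1], target)]  # squared-error gradient, linear output

for rule, B in [("BP", None), ("RBP", B_rbp), ("SRBP", C_srbp)]:
    for no_fp in (False, True):
        d = hidden_deltas(rule, W, B, S, d_out, no_fprime=no_fp)
        print(rule + (" no-f'" if no_fp else ""), [round(v, 3) for v in d[0]])
```

If the feedback matrices in RBP are set to the transposes of the forward weights, the rule reduces exactly to BP. At the topmost hidden layer, RBP and SRBP coincide, because both receive the output error directly. The two rules differ only at deeper layers.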