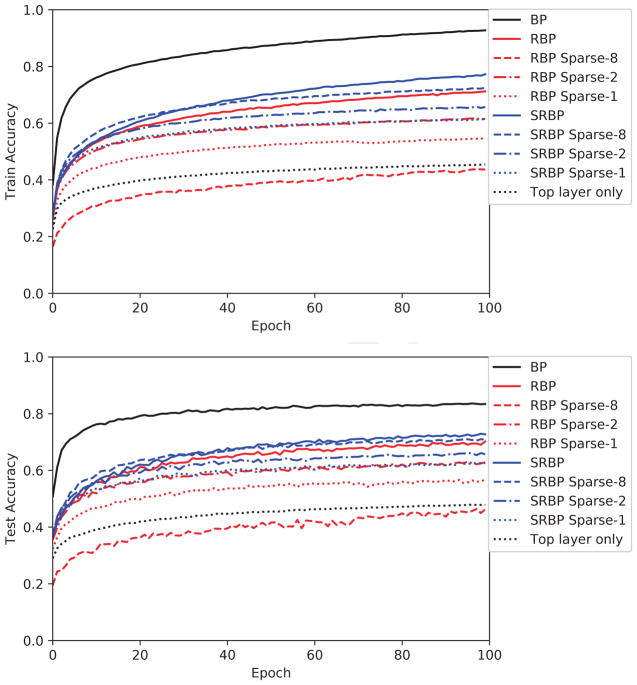

Figure 6.

CIFAR-10 training (upper) and test (lower) accuracy for the sparse versions of the RBP and SRBP algorithms. Experiments are run at different levels of sparsity by controlling n, the expected number of non-zero connections that one neuron sends to each layer it is connected to in the backward learning channel. The random backpropagation matrix connecting any two layers is created by sampling each entry from a Bernoulli distribution over {0, 1}, where each entry is 1 with probability p = n / fan-in and 0 otherwise. We compare to the (Normal) versions of RBP and SRBP, where the entries of these matrices are initialized from a standard Normal distribution scaled in the same way as the forward weight matrices [11]. Each model is trained five times with different weight initializations; the mean trajectory is shown.
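The Bernoulli sampling described in the caption can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `sparse_feedback_matrix`, its parameters, and the choice to treat fan-in as the number of columns of the feedback matrix are assumptions made here for clarity.

```python
import random


def sparse_feedback_matrix(n_rows, fan_in, n, seed=None):
    """Sample a 0/1 feedback matrix with entries drawn from Bernoulli(p).

    Assumption: fan_in is the number of columns, so with p = n / fan_in
    each row holds n non-zero entries in expectation.
    """
    if not 0 <= n <= fan_in:
        raise ValueError("n must lie between 0 and fan_in")
    rng = random.Random(seed)
    p = n / fan_in  # probability that any single entry equals 1
    return [
        [1 if rng.random() < p else 0 for _ in range(fan_in)]
        for _ in range(n_rows)
    ]


if __name__ == "__main__":
    # Hypothetical layer sizes, chosen only for illustration.
    matrix = sparse_feedback_matrix(n_rows=200, fan_in=100, n=5, seed=0)
    mean_nonzero = sum(sum(row) for row in matrix) / len(matrix)
    print(f"mean non-zero entries per row: {mean_nonzero:.2f}")
```

Smaller values of n give sparser matrices; setting n equal to fan-in makes every entry 1.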