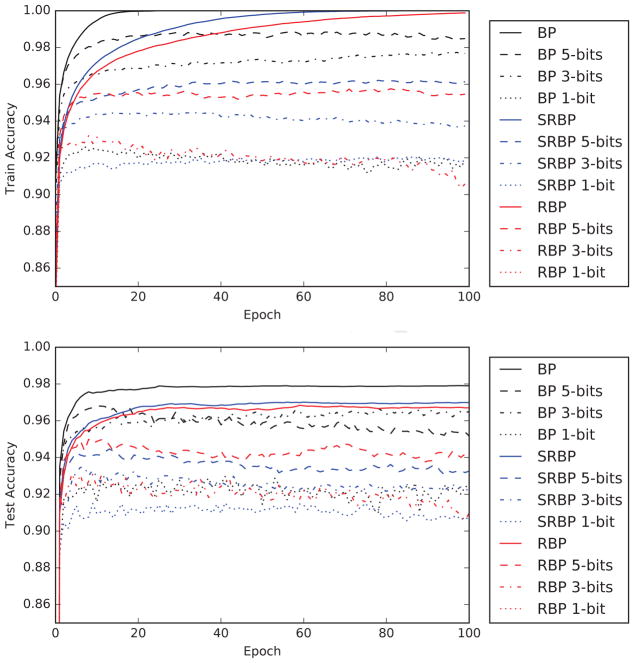

Figure 8.

MNIST training (upper) and test (lower) accuracy as a function of training epoch for the sparse versions of the RBP and SRBP algorithms. Experiments are carried out with different levels of quantization of the weight updates, controlled by the bitwidth parameter bits according to the formula given in the text (Equation 19). Quantization is applied to each example-specific update before the updates within a minibatch are summed.
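The order of operations in the caption (quantize each per-example update, then sum over the minibatch) can be sketched as follows. Equation 19 is not reproduced here, so this minimal sketch assumes a generic symmetric uniform quantizer with `2**(bits - 1) - 1` positive levels on a hypothetical clipping range `[-max_abs, max_abs]`; the helper names `quantize` and `minibatch_update` are illustrative and not taken from the paper.

```python
from typing import List


def quantize(x: float, bits: int, max_abs: float) -> float:
    """Hypothetical symmetric uniform quantizer (assumption; not necessarily Equation 19)."""
    levels = 2 ** (bits - 1) - 1  # number of positive levels, e.g. bits=3 -> 3
    if levels < 1:
        raise ValueError("bits must be >= 2")
    step = max_abs / levels
    clipped = max(-max_abs, min(max_abs, x))  # clip to the assumed range
    return round(clipped / step) * step  # snap to the nearest level


def minibatch_update(
    per_example_updates: List[List[float]], bits: int, max_abs: float
) -> List[float]:
    """Quantize each example-specific update first, then sum within the minibatch."""
    n_weights = len(per_example_updates[0])
    total = [0.0] * n_weights
    for update in per_example_updates:
        for i, u in enumerate(update):
            total[i] += quantize(u, bits, max_abs)
    return total
```

Because quantization happens before summation, small per-example updates can round to zero individually even when their sum would not: with `bits=3` and `max_abs=1.0`, two updates of `0.1` each quantize to `0.0`, so `minibatch_update([[0.1], [0.1]], 3, 1.0)` returns `[0.0]`, whereas quantizing the summed value `0.2` would give one nonzero level.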