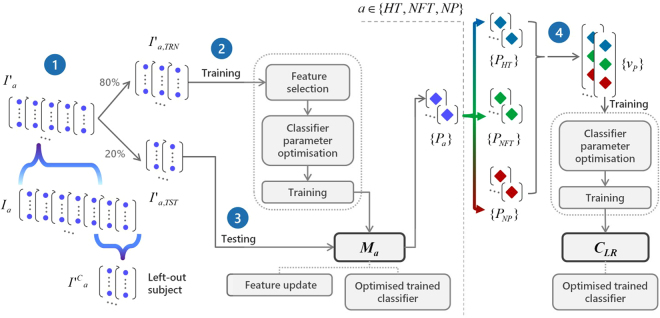

Figure 5.

Training procedure of each leave-one-subject-out loop. (1) The computed Ia feature vector sets, a∈{HT,NFT,NP}, in which each feature vector represents a typing session, are split into two parts: I′a and the left-out subject's feature vector sets. The I′a sets are further split into two subsets, I′a,TRN (80%) and I′a,TST (20%). (2) The I′a,TRN subsets are used for feature selection, hyperparameter optimisation with an inner 4-fold cross-validation, and training of three classifiers, resulting in three independent models Ma, each dedicated to a specific keystroke dynamics variable (HT, NFT or NP). (3) The I′a,TST subsets are then used to test the three Ma models, resulting in three sets of classification probabilities {Pa}, a∈{HT,NFT,NP}. (4) The sets {PHT}, {PNFT} and {PNP} are fused into single feature vectors, forming a set {vP} that serves to optimise (as in (2)) and train a Logistic Regression classifier CLR. The end product of the training procedure is a configuration of three optimised and trained models (first stage), each of which independently classifies every typing session according to one keystroke dynamics variable, and an optimised and trained Logistic Regression classifier (second stage) that aggregates those decisions to reach a final verdict on whether the typing session belongs to a PD patient. This two-stage configuration is tested on the left-out subject's sets (see also Fig. 1(b)).
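
The data flow of steps (1) to (4) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the session record layout, the function names (`loso_split`, `fuse`, `train_two_stage`, `predict_two_stage`) and the plain random 80/20 split over sessions are all hypothetical, and feature selection and hyperparameter optimisation are assumed to happen inside the caller-supplied `fit_first_stage` and `fit_second_stage` functions, each of which returns a callable that maps a feature vector to a probability.

```python
import random

VARIABLES = ("HT", "NFT", "NP")  # keystroke dynamics variables named in the caption


def loso_split(sessions, left_out_subject, train_fraction=0.8, seed=0):
    """Step (1): hold out one subject, then split the remaining sessions 80/20.

    `sessions` is a list of dicts with keys "subject", "label" and "features"
    (a dict mapping each variable to that session's feature vector).
    Assumption: the 80/20 split is a plain random split over sessions.
    """
    left_out = [s for s in sessions if s["subject"] == left_out_subject]
    rest = [s for s in sessions if s["subject"] != left_out_subject]
    random.Random(seed).shuffle(rest)
    cut = round(train_fraction * len(rest))
    return rest[:cut], rest[cut:], left_out


def fuse(models, session):
    """Step (4): collect the three first-stage probabilities into one vector vP."""
    return [models[a](session["features"][a]) for a in VARIABLES]


def train_two_stage(trn, tst, fit_first_stage, fit_second_stage):
    """Steps (2)-(4): fit one model per variable, then the aggregating classifier."""
    # Step (2): each first-stage model sees only the TRN subset of its own variable.
    trn_labels = [s["label"] for s in trn]
    models = {
        a: fit_first_stage([s["features"][a] for s in trn], trn_labels)
        for a in VARIABLES
    }
    # Steps (3)-(4): probabilities on the TST subset become second-stage inputs.
    fused = [fuse(models, s) for s in tst]
    c_lr = fit_second_stage(fused, [s["label"] for s in tst])
    return models, c_lr


def predict_two_stage(models, c_lr, session):
    """Apply the trained two-stage configuration to one typing session."""
    return c_lr(fuse(models, session))
```

In use, `loso_split` would be called once per subject, and `predict_two_stage` would be applied only to that subject's left-out sessions. One possible realisation of `fit_first_stage`, again an assumption since the caption does not name a library, is a wrapper around scikit-learn's `GridSearchCV` with `cv=4`; the second stage could likewise wrap `LogisticRegression` and return its positive-class `predict_proba` output.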