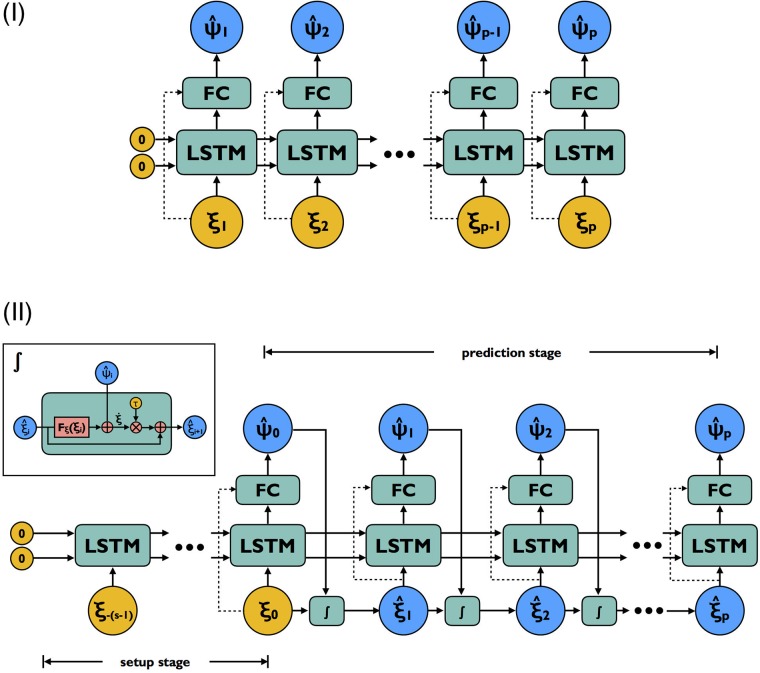

Fig 2. Computational graph for model architectures I and II.

Yellow nodes are inputs provided to the network, corresponding to a sequence of states, and blue nodes are prediction targets, corresponding to the complementary dynamics (plus states for architecture II). Blocks labeled ‘FC’ are fully connected layers with ReLU activations. Dashed arrows represent optional connections, which depend on the capacity of the LSTM relative to the dimension of ξ. Both architectures share the same set of trainable weights. In architecture I, predictions are made as the input is read; the input is always accurate, regardless of any prediction errors made in previous steps. This architecture is used only for training. Architecture II makes predictions in a sequence-to-sequence fashion (setup sequence to prediction sequence), so errors made early affect all predictions that follow. This architecture is used for fine-tuning the weights and for multi-step-ahead prediction.
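The difference between the two architectures can be illustrated with a minimal sketch. It does not reproduce the model in the figure: a toy scalar recurrent cell stands in for the LSTM and FC blocks, and the names `WEIGHTS`, `cell`, `advance`, `run_architecture_one`, and `run_architecture_two` are hypothetical. In particular, the Euler-style update in `advance` is only an assumption about how a predicted state might be formed and fed back in architecture II.

```python
import math

# Hypothetical shared weights: a toy scalar recurrent cell standing in for
# the LSTM and FC blocks in the figure (not the actual model).
WEIGHTS = {"w_in": 0.5, "w_h": 0.3, "w_out": 0.8}


def cell(weights, hidden, state):
    """One recurrent step: read a state, update hidden, emit a prediction."""
    hidden = math.tanh(weights["w_in"] * state + weights["w_h"] * hidden)
    prediction = weights["w_out"] * hidden
    return hidden, prediction


def advance(state, prediction, dt=0.1):
    """Assumed state update: an Euler step driven by the predicted dynamics."""
    return state + dt * prediction


def run_architecture_one(weights, true_states):
    """Predict as input is read; every input is a true state."""
    hidden, predictions = 0.0, []
    for state in true_states:
        hidden, prediction = cell(weights, hidden, state)
        predictions.append(prediction)
    return predictions


def run_architecture_two(weights, setup_states, n_predict):
    """Read a setup sequence, then roll forward on the model's own outputs."""
    hidden = 0.0
    # Warm up the hidden state on all but the last setup state.
    for state in setup_states[:-1]:
        hidden, _ = cell(weights, hidden, state)
    state, predictions, states = setup_states[-1], [], []
    for _ in range(n_predict):
        hidden, prediction = cell(weights, hidden, state)
        state = advance(state, prediction)  # predicted state is fed back
        predictions.append(prediction)
        states.append(state)
    return predictions, states
```

Both functions call the same `cell` with the same `weights`, mirroring the shared trainable weights. With identical true inputs, the first prediction of `run_architecture_two` matches the corresponding prediction of `run_architecture_one`; later predictions differ because the rollout consumes its own predicted states, which is how early errors propagate.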