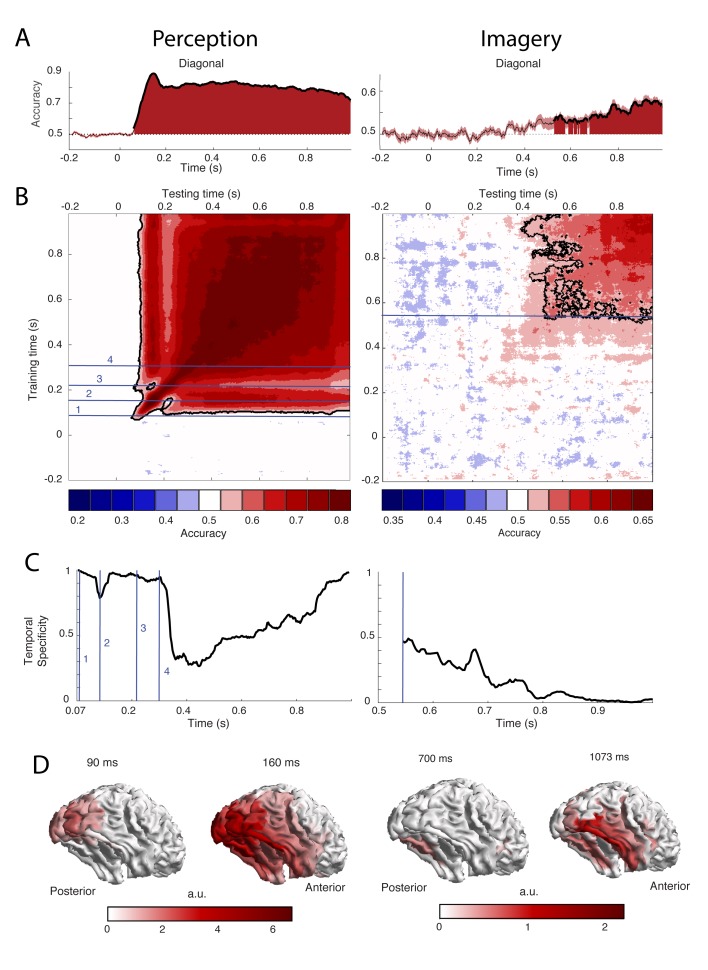

Figure 2. Decoding performance of perception and imagery over time.

(A) Decoding accuracy from a classifier that was trained and tested on the same time points. Filled areas and thick lines indicate significantly above-chance decoding (cluster corrected, p<0.05). The shaded area represents the standard error of the mean. The dotted line indicates chance level. For perception, zero signifies the onset of the stimulus; for imagery, zero signifies the onset of the retro-cue. (B) Temporal generalization matrix with discretized accuracy. Training time is shown on the vertical axis and testing time on the horizontal axis. Significant clusters are indicated by black contours. Numbers indicate time points of interest that are discussed in the text. (C) Proportion of time points within the significant time window that had significantly lower accuracy than the diagonal, that is, the specificity of the neural representation at each time point during above-chance diagonal decoding. (D) Source-level contribution to the classifiers at selected training times. Source data for the analyses reported here are available in Figure 2—source data 1.
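
To make the relationship between panels A and B concrete, the following is a minimal sketch of how a temporal generalization matrix can be computed. It is not the pipeline used for this figure: the nearest-centroid classifier, the function names `make_data` and `temporal_generalization`, and the synthetic data (two successive activity patterns on different sensors) are all assumptions chosen for illustration. A classifier is trained at each time point and tested at every time point; the diagonal of the resulting matrix corresponds to the same-time decoding shown in panel A.

```python
import numpy as np

def make_data(rng, n_per_class=100, n_sensors=10, n_times=8):
    """Synthetic two-class data (hypothetical), shape (trials, sensors, times).

    Class 1 carries a signal on sensors 0-4 at times 2-4 and on
    sensors 5-9 at times 5-7; times 0-1 contain noise only.
    """
    y = np.repeat([0, 1], n_per_class)
    X = rng.normal(size=(2 * n_per_class, n_sensors, n_times))
    X[y == 1, :5, 2:5] += 2.0
    X[y == 1, 5:, 5:8] += 2.0
    return X, y

def temporal_generalization(X_train, y_train, X_test, y_test):
    """Return acc[train_time, test_time] for a nearest-centroid classifier.

    The nearest-centroid rule is a stand-in assumption, not the
    classifier used in the paper.
    """
    n_times = X_train.shape[2]
    acc = np.zeros((n_times, n_times))
    for t_train in range(n_times):
        X_t = X_train[:, :, t_train]
        mu0 = X_t[y_train == 0].mean(axis=0)
        mu1 = X_t[y_train == 1].mean(axis=0)
        # Linear decision rule: assign class 1 if the trial is closer to mu1.
        w = mu1 - mu0
        b = -0.5 * w @ (mu0 + mu1)
        for t_test in range(n_times):
            pred = (X_test[:, :, t_test] @ w + b > 0).astype(int)
            acc[t_train, t_test] = (pred == y_test).mean()
    return acc

rng = np.random.default_rng(0)
X_train, y_train = make_data(rng)
X_test, y_test = make_data(rng)  # independent noise for the test set

acc = temporal_generalization(X_train, y_train, X_test, y_test)
diagonal = np.diag(acc)  # same-time decoding, as in panel A
```

In this toy example, a classifier trained at time 3 generalizes to time 4 (same pattern) but falls to roughly chance at time 6 (different pattern), which is the kind of off-diagonal drop that panel C summarizes as temporal specificity.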