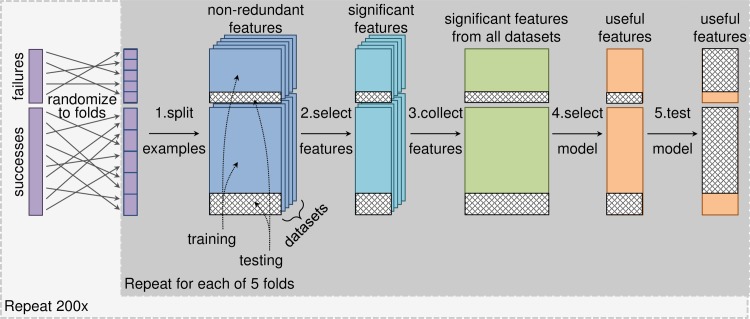

Fig 2. Modeling pipeline.

We trained a classifier to predict phase III clinical trial outcomes, using 5-fold cross-validation repeated 200 times to assess the stability of the classifier and estimate its generalization performance. For each fold of cross-validation, modeling began with the non-redundant features for each dataset. Step 1: We split the targets with phase III outcomes into training and testing sets. Step 2: We performed univariate feature selection on the training examples, using permutation tests to quantify the significance of the difference in each feature's mean between successful and failed targets. We controlled for target class as a confounding factor by shuffling outcomes only within target classes. We accepted features with adjusted p-values less than 0.05 after correcting for multiple hypothesis testing using the Benjamini-Yekutieli method. Step 3: We aggregated significant features from all datasets into a single feature matrix. Step 4: We performed incremental feature elimination with an inner 5-fold cross-validation loop repeated 20 times to select the type of classifier (Random Forest or logistic regression) and the smallest subset of features whose cross-validation area under the receiver operating characteristic curve (AUROC) and area under the precision-recall curve (AUPR) were both within 95% of their maximum values. Step 5: We refit the selected model using all the training examples and evaluated its performance on the test examples.
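
To make Step 2 concrete, the following is a minimal sketch of a permutation test that shuffles outcomes only within target classes, followed by a Benjamini-Yekutieli adjustment. It is an illustration under stated assumptions, not the code used for the analysis: the function names, the number of permutations (`n_perm`), the two-sided test statistic, and the add-one p-value estimate are all hypothetical choices that the caption does not specify.

```python
import random
from collections import defaultdict


def stratified_permutation_pvalue(values, outcomes, classes, n_perm=2000, seed=0):
    """Permutation p-value for the difference in a feature's mean between
    successful (1) and failed (0) targets, shuffling outcomes only within
    each target class. Two-sided statistic and n_perm are assumptions."""
    rng = random.Random(seed)

    def mean_diff(labels):
        pos = [v for v, y in zip(values, labels) if y == 1]
        neg = [v for v, y in zip(values, labels) if y == 0]
        return sum(pos) / len(pos) - sum(neg) / len(neg)

    observed = abs(mean_diff(outcomes))

    # Group example indices by target class so labels never cross classes.
    groups = defaultdict(list)
    for i, c in enumerate(classes):
        groups[c].append(i)

    hits = 0
    for _ in range(n_perm):
        permuted = list(outcomes)
        for idx in groups.values():
            shuffled = [outcomes[i] for i in idx]
            rng.shuffle(shuffled)
            for i, y in zip(idx, shuffled):
                permuted[i] = y
        # Small tolerance guards against floating-point ties.
        if abs(mean_diff(permuted)) >= observed - 1e-12:
            hits += 1

    # Add-one estimate keeps the p-value strictly positive (an assumption).
    return (hits + 1) / (n_perm + 1)


def benjamini_yekutieli(pvalues):
    """Benjamini-Yekutieli adjusted p-values, returned in the input order."""
    m = len(pvalues)
    c_m = sum(1.0 / k for k in range(1, m + 1))  # harmonic correction factor
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Step down from the largest p-value, enforcing monotonicity.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m * c_m / rank)
        adjusted[i] = running_min
    return adjusted
```

In this sketch, a feature would be retained when its adjusted p-value falls below 0.05, matching the threshold stated in the caption. Because shuffling is restricted to each target class, the number of successes and failures per class is preserved in every permutation, which is what removes target class as a confounder of the null distribution.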