Summary

Deep learning technology is rapidly advancing and is now used to solve complex problems. Here, we used deep learning in convolutional neural networks to establish an automated method to identify endothelial cells derived from induced pluripotent stem cells (iPSCs), without the need for immunostaining or lineage tracing. Networks were trained to predict whether phase-contrast images contain endothelial cells based on morphology only. Predictions were validated by comparison to immunofluorescence staining for CD31, a marker of endothelial cells. Method parameters were then automatically and iteratively optimized to increase prediction accuracy. We found that prediction accuracy was correlated with network depth and pixel size of images to be analyzed. Finally, K-fold cross-validation confirmed that optimized convolutional neural networks can identify endothelial cells with high performance, based only on morphology.

Keywords: deep learning, induced pluripotent stem cell, endothelial cell, artificial intelligence, machine learning

Graphical Abstract

Highlights

- Neural networks were trained to spot endothelial cells on phase-contrast images

- Performance was correlated with network depth and pixel size of training images

- Optimized networks identify endothelial cells with high accuracy

Kusumoto et al. developed an automated system to identify endothelial cells derived from induced pluripotent stem cells, based only on morphology. Performance, as assessed by F1 score and accuracy, was correlated with network depth and pixel size of training images. K-fold validation confirmed that endothelial cells are identified automatically with high accuracy using only generalized morphological features.

Introduction

Machine learning consists of automated algorithms that enable learning from large datasets to resolve complex problems, including those encountered in medical science (Gorodeski et al., 2011, Heylman et al., 2015, Hsich et al., 2011). In deep learning, a form of machine learning, patterns from several types of data are automatically extracted (Lecun et al., 2015) to accomplish complex tasks such as image classification, which in conventional machine learning requires feature extraction by a human expert. Deep learning eliminates this requirement by identifying the most informative features using multiple layers in neural networks, i.e., deep neural networks (Hatipoglu and Bilgin, 2014), which were first conceived in the 1940s to mimic human neural circuits (McCulloch and Pitts, 1943). In such neural networks, each neuron receives weighted data from upstream neurons, processes them, and transmits the result to downstream neurons. Ultimately, terminal neurons calculate a predicted value based on the processed data, and weights are then iteratively optimized to increase the agreement between predicted and observed values. This technique is rapidly advancing due to innovative algorithms and improved computing power (Bengio et al., 2006, Hinton et al., 2006). For example, convolutional neural networks have now achieved almost the same accuracy as a clinical specialist in diagnosing diabetic retinopathy and skin cancer (Esteva et al., 2017, Gulshan et al., 2016). Convolutional neural networks have also proved useful in cell biology, for example in the morphological classification of hematopoietic cells, C2C12 myoblasts, and induced pluripotent stem cells (iPSCs) (Buggenthin et al., 2017, Niioka et al., 2018, Yuan-Hsiang et al., 2017).

iPSCs, which can be established from somatic cells by expression of defined genes (Takahashi and Yamanaka, 2006), hold great promise in regenerative medicine (Yuasa and Fukuda, 2008), disease modeling (Tanaka et al., 2014), drug screening (Avior et al., 2016), and precision medicine (Chen et al., 2016). iPSCs can differentiate into numerous cell types, although differentiation efficiencies vary among cell lines and are sensitive to experimental conditions (Hu et al., 2010, Osafune et al., 2008). In addition, differentiated cell types are difficult to identify without molecular techniques such as immunostaining and lineage tracing. We hypothesized that phase-contrast images contain discriminative morphological information that can be used by a convolutional neural network to identify endothelial cells. Accordingly, we investigated whether deep learning techniques can be used to identify iPSC-derived endothelial cells automatically based only on morphology.

Results

Development of an Automated System to Identify Endothelial Cells

We differentiated iPSCs as previously described (Patsch et al., 2015), obtaining mesodermal cells at around day 3 and specialized endothelial cells at around day 5 (Figure S1A). At day 6, structures that resemble vascular tubes were formed (Figure S1B). CD31 staining confirmed that endothelial cells were obtained at an efficiency of 20%–35%, as assessed by flow cytometry. Differentiation efficiency was highly variable (Figure S1C), highlighting the need for an automated cell identification system to assess iPSC differentiation or to identify and quantify the cell types formed.

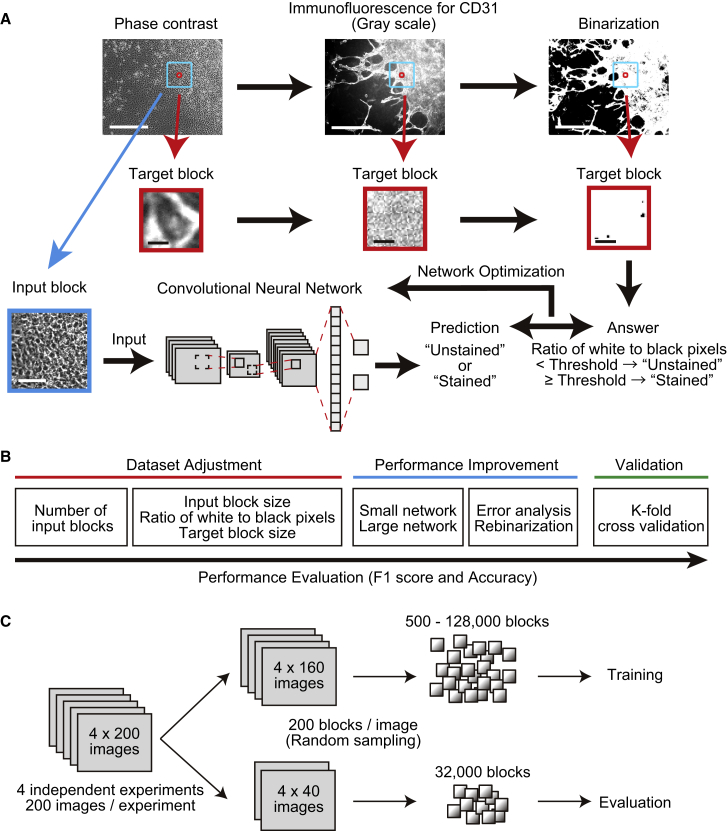

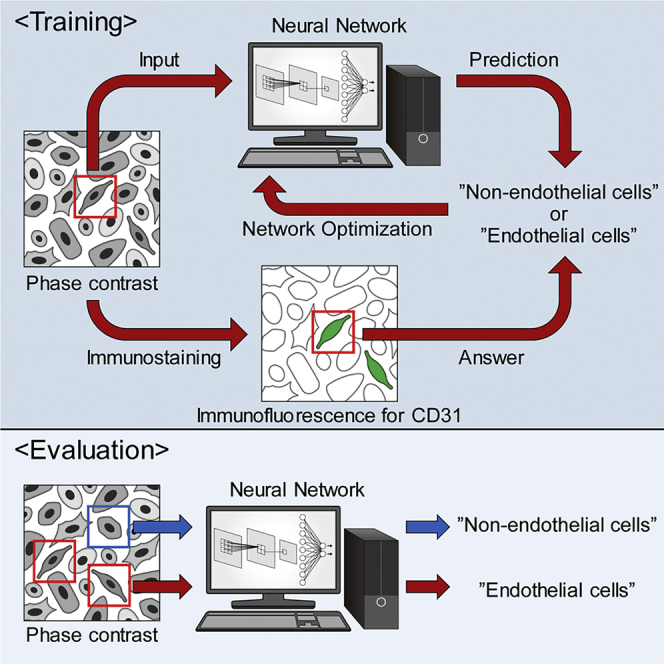

The basic strategy to identify endothelial cells by convolutional neural networks is shown in Figure 1A. In brief, differentiated iPSCs were imaged by phase contrast and by immunofluorescence staining for CD31, a marker of endothelial cells. The latter were then binarized into white and black pixels corresponding to raw pixels above and below a threshold value, respectively. Subsequently, input blocks were extracted randomly from phase-contrast images, and matching target blocks equivalent to or within input blocks were extracted from both phase-contrast and binarized immunofluorescence images. Binarized target blocks were then classified as unstained (0) or stained (1) depending on the ratio of white pixels to black, to generate answers. Finally, input blocks were analyzed in LeNet, a small network (Lecun et al., 1998), and AlexNet, a large network (Krizhevsky et al., 2012), to predict phase-contrast target blocks as unstained or stained. Predictions were compared with answers obtained from binarized target blocks, and weights were automatically and iteratively optimized to train the neural networks and thereby increase accuracy (Figure 1A).
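The labeling step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the staining threshold is interpreted as the fraction of white pixels in the target block, and the target block is assumed to be centered within the input block (the text only states that it is equivalent to or within the input block). The 4 × 4 array is a toy example, not real data.

```python
import random

def binarize(image, threshold):
    """Return a 0/1 mask: 1 (white) where the raw pixel value exceeds the threshold."""
    return [[1 if px > threshold else 0 for px in row] for row in image]

def random_block_origin(height, width, input_size, rng):
    """Pick the top-left corner of a random input block that fits inside the image."""
    top = rng.randrange(height - input_size + 1)
    left = rng.randrange(width - input_size + 1)
    return top, left

def centered_target_origin(top, left, input_size, target_size):
    """Top-left corner of a target block centered in the input block (assumption)."""
    offset = (input_size - target_size) // 2
    return top + offset, left + offset

def white_fraction(mask, top, left, size):
    """Fraction of white pixels in a size x size block of the binarized mask."""
    white = sum(sum(row[left:left + size]) for row in mask[top:top + size])
    return white / (size * size)

def label_block(mask, top, left, size, staining_threshold):
    """Answer for one target block: 1 (stained) or 0 (unstained)."""
    return 1 if white_fraction(mask, top, left, size) >= staining_threshold else 0

# Toy 4 x 4 "immunofluorescence image" (made-up values, for illustration only).
FLUO = [
    [10, 200, 220, 15],
    [12, 210, 230, 20],
    [8, 30, 25, 10],
    [5, 12, 18, 9],
]
mask = binarize(FLUO, threshold=128)
t_top, t_left = centered_target_origin(0, 0, input_size=4, target_size=2)
fraction = white_fraction(mask, t_top, t_left, size=2)
answer = label_block(mask, t_top, t_left, size=2, staining_threshold=0.3)
```

In this toy case the centered 2 × 2 target block is half white, so it is labeled as stained at a staining threshold of 0.3.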

Figure 1.

Analysis of Induced Pluripotent Stem Cell-Derived Endothelial Cells Using Convolutional Neural Networks

(A) Training protocol. Input blocks were extracted from phase-contrast images and predicted by networks to be unstained (0) or stained (1) for CD31. Target blocks containing single cells were extracted from immunofluorescent images of the same field, binarized based on CD31 staining, and classified as stained or unstained based on the ratio of white pixels to black. Network weights were then automatically and iteratively adjusted to maximize agreement between predicted and observed classification. Scale bars, 400 μm (upper panels), 5 μm (middle panels), and 80 μm (bottom panels).

(B) Optimization of experimental parameters to maximize F1 score and accuracy.

(C) Two hundred images were obtained from each of four independent experiments. Images were randomized at 80:20 ratio into training and evaluation sets, and 200 blocks were randomly extracted from each image.

Networks were then optimized according to Figure 1B. Number of blocks, input block size, and target block size were first optimized using the small network, along with staining threshold, the ratio of white pixels to black for a target block to be classified as stained. To improve performance, as assessed by F1 score and accuracy, the small network was compared with the large network, observed errors were analyzed, and binarized target blocks were rebinarized by visual comparison of raw fluorescent images with phase-contrast images. Finally, the optimized network was validated by K-fold cross-validation (Figure 1B). To this end, we obtained 200 images from each of four independent experiments, of which 640 were used for training and 160 for validation to collect data shown in Figures 2 and 3. From each image, 200 blocks were randomly extracted, and 500–128,000 of the blocks were used for training while 32,000 blocks were used for validation (Figure 1C).
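The dataset sizes quoted above follow directly from the image counts. The sketch below reproduces the arithmetic with a random 80:20 split; the function name, the image identifiers, and the seed are hypothetical and chosen only for illustration.

```python
import random

BLOCKS_PER_IMAGE = 200  # blocks randomly extracted from each image

def split_images(image_ids, train_fraction, seed):
    """Shuffle image identifiers and split them into training and evaluation sets."""
    rng = random.Random(seed)
    shuffled = list(image_ids)
    rng.shuffle(shuffled)
    n_train = round(len(shuffled) * train_fraction)
    return shuffled[:n_train], shuffled[n_train:]

# 200 images from each of four independent experiments, identified here by
# hypothetical (experiment, index) pairs.
image_ids = [(exp, idx) for exp in range(4) for idx in range(200)]
train_images, eval_images = split_images(image_ids, train_fraction=0.8, seed=0)

max_train_blocks = len(train_images) * BLOCKS_PER_IMAGE  # upper end of 500-128,000
eval_blocks = len(eval_images) * BLOCKS_PER_IMAGE        # blocks used for validation
```

With 640 training images and 160 validation images, 200 blocks per image give at most 128,000 training blocks and 32,000 validation blocks, matching the figures in the text.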

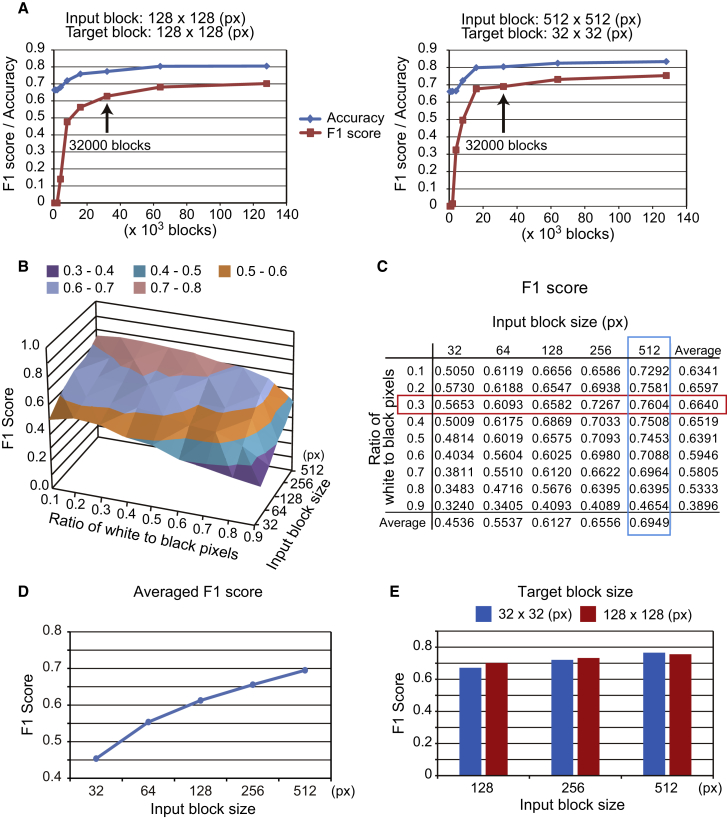

Figure 2.

Dataset Adjustment

(A) F1 score and accuracy as a function of number of input blocks. Left: network performance using 128 × 128-pixel (px) input blocks and 128 × 128-px target blocks. Right: performance using 512 × 512-px input blocks and 32 × 32-px target blocks.

(B and C) F1 score as a function of input block size and staining threshold. The optimal threshold is boxed in red and the optimal input block size is boxed in blue.

(D) Average F1 score for different input block sizes.

(E) F1 score for different target block sizes.

See also Figure S2 and Tables S1–S3.

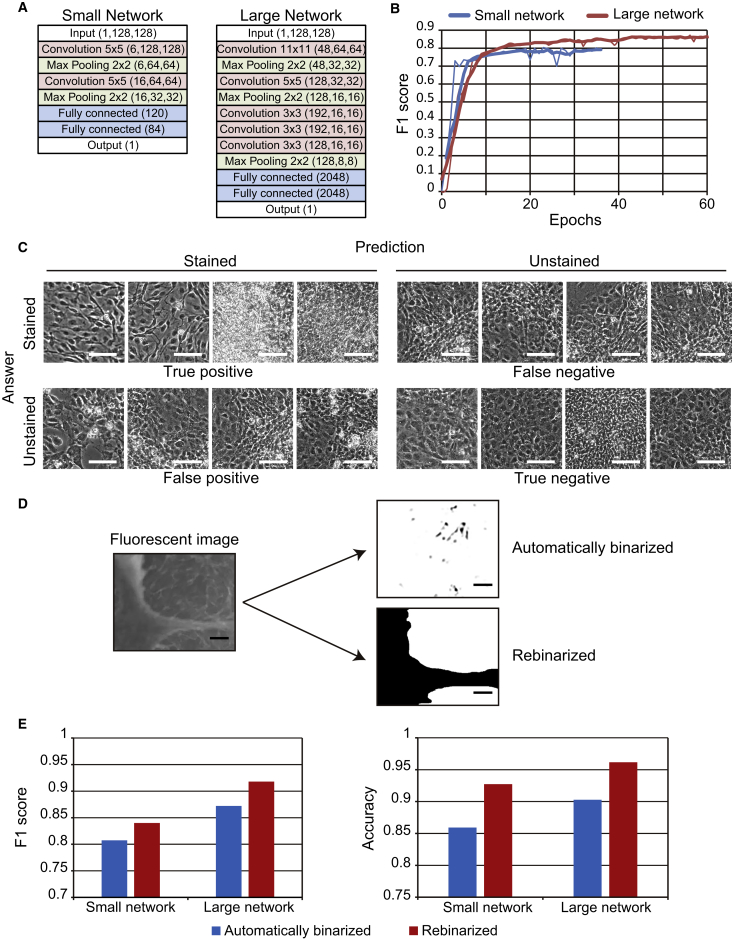

Figure 3.

Network Optimization

(A) Comparison of LeNet and AlexNet, a small and a large deep neural network, respectively.

(B) F1 score learning curves from the small and large network.

(C) Representative true positive, false positive, true negative, and false negative images. Scale bars, 80 μm.

(D) Immunofluorescent images were binarized automatically, or rebinarized by manual comparison of raw fluorescent images to phase-contrast images. Scale bars, 100 μm.

(E) F1 score and accuracy were compared following training of the small and large network on automatically binarized or rebinarized target blocks.

See also Figures S3 and S4; Table S4.

Improvement of F1 Score and Accuracy by Optimization

To train the networks we optimized several experimental conditions, including number of input blocks, target block size, and input block size. Performance was evaluated based on the F1 score, which aggregates recall and precision, and on accuracy, which is the fraction of correct predictions. As noted, we first used 500–128,000 blocks for training (Figure 1C) to determine the number of blocks required to achieve convergence (Table S1). Inflection points in F1 scores and accuracy were observed at 16,000 blocks, and convergence was achieved at 32,000 blocks for an input and target block size of 128 × 128 pixels, as well as for an input block size of 512 × 512 pixels and a target block size of 32 × 32 pixels (Figure 2A). Hence, 32,000 blocks were used for training in subsequent experiments. Next, the optimal combination of block size and staining threshold was determined using input blocks of 32 × 32, 64 × 64, 128 × 128, 256 × 256, and 512 × 512 pixels. We note that 32 × 32-pixel blocks contained only single cells, while 512 × 512-pixel blocks contained entire colonies and surrounding areas (Figure S2A). Based on F1 scores, performance was best with an input block size of 512 × 512 pixels combined with a staining threshold of 0.3 (Figures 2B and 2C; Table S2). Both F1 score and accuracy increased with input block size (Figures 2D, S2B, and S2C), indicating that areas surrounding cells should be included to increase accuracy. In contrast, target block size did not affect predictive power (Figure 2E) or the correlation between input block size and F1 scores and accuracy (Figure S2D and Table S3).

Effect of Network Size on Predictive Power

As network architecture is critical to performance, we compared the predictive power of the small network LeNet (Lecun et al., 1998) after training on 128,000 blocks with that of the large network AlexNet (Krizhevsky et al., 2012) (Figure 3A). F1 scores and accuracy from the latter were higher (Figures 3B and S3A), suggesting that extraction of complex features by a large network improves cell identification by morphology. Performance was further enhanced by analyzing true positives, true negatives, false positives, and false negatives (Figures 3C and S3B). We found that true positives and true negatives were typically obtained in areas with uniformly distributed cells. In contrast, areas with heterogeneous appearance, such as at the border between abundantly and sparsely colonized surfaces, often led to false positives or false negatives. To examine whether F1 scores are influenced by heterogeneous appearance (Figure S4A), we scored the complexity of all 32,000 512 × 512-pixel validation blocks as the average difference between adjacent pixels, normalized to the dynamic range (Saha and Vemuri, 2000). Blocks with complexity of <0.04 were considered sparsely colonized, while blocks with complexity of 0.04 to 0.08 typically contained uniformly distributed cells with clear boundaries. All other images had complexity >0.08 and contained dense colonies with indistinct cell borders. In both the small and large networks (Figures S4B, S4C, and S4D), F1 scores were highest for blocks with complexity of 0.04 to 0.08 (typically 0.06), implying that variations in cell density and morphology affect network performance, in line with incorrect predictions as shown in Figures 3C and S3B. In light of this result, we speculated that weak staining, non-specific fluorescence, and autofluorescence in dense colonies may also degrade performance. Accordingly, we rebinarized target blocks by visual comparison with raw fluorescent images (Figure 3D). Following this step, 26,861 of 128,000 blocks (21%) were classified as stained, while fully automated binarization scored 40,852 of 128,000 blocks (32%) as stained (Table S4A). Notably, the F1 score and accuracy rose above 0.9 and 0.95, respectively, in the large network (Figure 3E and Table S4A).
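The complexity score used above can be illustrated with a short sketch. The exact formulation is not given in the text, so the following is an assumption: the mean absolute difference between horizontally and vertically adjacent pixels, divided by an 8-bit dynamic range of 255. The function names and category labels are hypothetical; the cut-offs of 0.04 and 0.08 are taken from the text.

```python
def image_complexity(block, dynamic_range=255):
    """Mean absolute difference between adjacent pixels, normalized to the dynamic range.

    Assumes horizontal and vertical neighbors and 8-bit grayscale values.
    """
    height, width = len(block), len(block[0])
    total = 0
    count = 0
    for y in range(height):
        for x in range(width):
            if x + 1 < width:   # right-hand neighbor
                total += abs(block[y][x] - block[y][x + 1])
                count += 1
            if y + 1 < height:  # neighbor below
                total += abs(block[y][x] - block[y + 1][x])
                count += 1
    return total / (count * dynamic_range) if count else 0.0

def density_category(complexity):
    """Map a complexity score to the three ranges described in the text."""
    if complexity < 0.04:
        return "sparse"
    if complexity <= 0.08:
        return "uniform"
    return "dense"

# Toy blocks (made-up values): a flat block, a mildly textured block,
# and a maximal-contrast checkerboard.
flat = [[120, 120], [120, 120]]
textured = [[100, 115], [115, 100]]
checkerboard = [[0, 255], [255, 0]]
categories = [density_category(image_complexity(b)) for b in (flat, textured, checkerboard)]
```

Here the flat block scores 0, the textured block scores 15/255 (about 0.059), and the checkerboard scores 1, so they fall into the sparse, uniform, and dense ranges, respectively.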

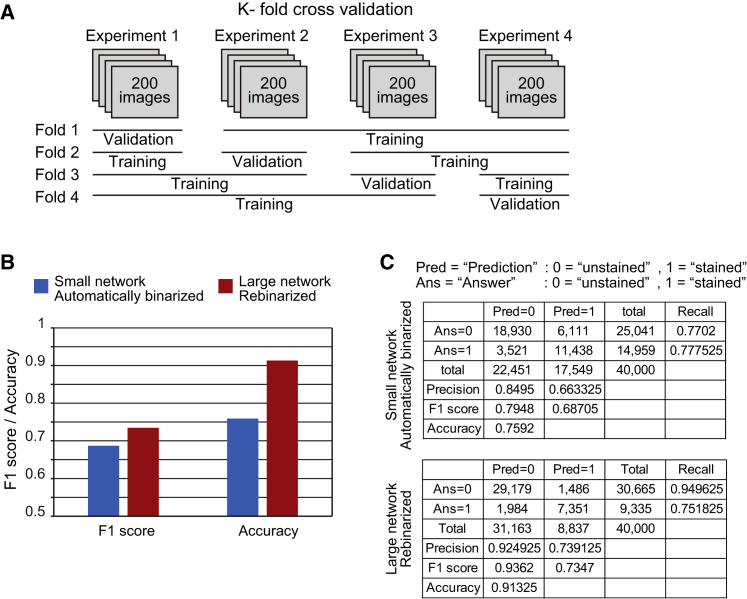

K-Fold Cross-Validation

Finally, we assessed network performance and generalization by K-fold cross-validation, in which the data are divided into k subsets, of which k − 1 are used for training and one is used for validation. Training and validation are then performed k times using different combinations of training and validation datasets. In our case, 800 images were collected in four independent experiments; in each round, 600 images from three experiments were used for training and 200 images from the remaining experiment were used for validation (Figure 4A). For the small network with automatically binarized target blocks, the F1 score was approximately 0.7 and accuracy was higher than 0.7; for the large network with rebinarized target blocks, the F1 score was over 0.75 and accuracy was over 0.9 (Figures 4B and 4C; Table S4B).
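The fold structure described above, with each independent experiment held out once, can be sketched as follows. The function name and the experiment labels are hypothetical; the sketch only enumerates the splits and does not train any network.

```python
IMAGES_PER_EXPERIMENT = 200

def leave_one_experiment_out(experiment_ids):
    """Return (training_experiments, validation_experiment) for every fold."""
    folds = []
    for held_out in experiment_ids:
        training = [e for e in experiment_ids if e != held_out]
        folds.append((training, held_out))
    return folds

experiments = ["exp1", "exp2", "exp3", "exp4"]  # hypothetical labels
folds = leave_one_experiment_out(experiments)

for training, held_out in folds:
    n_train = len(training) * IMAGES_PER_EXPERIMENT
    n_val = IMAGES_PER_EXPERIMENT
    print(f"train on {training} ({n_train} images), validate on {held_out} ({n_val} images)")
```

Splitting by experiment rather than by image keeps every validation image from a batch the network has not seen, which is what makes the result a test of generalization across experiments.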

Figure 4.

K-Fold Cross-Validation

(A) K-fold cross-validation based on four independent datasets, of which three were used for training and one was used for validation, in all possible combinations.

(B) K-fold cross-validation was performed using the small network trained on automatically binarized target blocks, and using the large network trained on rebinarized target blocks. Data are macro averaged F1 score and accuracy.

(C) Detailed K-fold cross-validation data.

See also Table S4.

Discussion

In this study, we demonstrated that deep learning techniques are effective in identifying iPSC-derived endothelial cells. Following optimization of parameters such as number of input blocks, target block size, input block size, staining threshold, and network size, we achieved satisfactory F1 scores and accuracy. Notably, we found that a larger input block increases prediction accuracy, indicating that the environment surrounding cells is an essential feature, as was also observed for differentiated C2C12 myoblasts (Niioka et al., 2018). We note that the immediate microenvironment is also an essential determinant of differentiation (Adams and Alitalo, 2007, Lindblom et al., 2003, Takakura et al., 2000), and that the positive correlation between input block size and F1 score or accuracy may prove helpful in future strategies to identify differentiated cells by morphology.

In comparison with other machine learning techniques, deep learning is straightforward and achieves high accuracies. Indeed, deep learning algorithms have won the ImageNet Large-Scale Visual Recognition Challenge since 2012 (He et al., 2015, Krizhevsky et al., 2012, Szegedy et al., 2014, Zeng et al., 2016), and have also proved useful in cell biology (Buggenthin et al., 2017, Niioka et al., 2018, Van Valen et al., 2016, Yuan-Hsiang et al., 2017). Although we used the older-generation networks LeNet and AlexNet, newer networks achieve even better accuracy in image classification (Esteva et al., 2017, Gulshan et al., 2016). Several techniques, such as increasing network depth (Simonyan and Zisserman, 2014), residual learning (He et al., 2015), and batch normalization (Ioffe and Szegedy, 2015), may also enhance performance, although these were not implemented in this study, since results were already satisfactory.

Inspection revealed some issues in binarizing heterogeneous areas in images with weak staining, non-specific fluorescence, and autofluorescence. To lower the number of false positives and improve performance, we rebinarized these images by comparing raw fluorescent images with phase-contrast images. In addition, cell density significantly affected F1 scores, implying that cells should be cultured carefully to a suitable density, or that networks should be trained to distinguish between true and false positives, especially when images are heterogeneous. Finally, K-fold cross-validation showed that iPSC-derived endothelial cells were identified with an accuracy of approximately 0.9 and an F1 score of approximately 0.75, in line with similar attempts (Buggenthin et al., 2017, Niioka et al., 2018, Yuan-Hsiang et al., 2017).

Importantly, the data show that iPSC-derived endothelial cells can be identified based on morphology alone, requiring only 100 μs per block in a small network and 275 μs per block in a large network (Figure S4E). As morphology-based identification does not depend on labeling, genetic manipulation, or immunostaining, it can be used for various applications requiring native, living cells. Thus, this system may enable analysis of large datasets and advance cardiovascular research and medicine.

Experimental Procedures

iPSC Culture

iPSCs were maintained in mTeSR with 0.5% penicillin/streptomycin on culture dishes coated with growth factor-reduced Matrigel, and routinely passaged every week. Media were changed every other day. Detailed protocols are described in Supplemental Experimental Procedures.

Endothelial Cell Differentiation

iPSCs cultured on Matrigel-coated 6-well plates were enzymatically detached on day 7, and differentiated into endothelial cells as described in Supplemental Experimental Procedures.

Flow Cytometry

At day 6 of differentiation, cells were dissociated, stained with APC-conjugated anti-CD31, and sorted on BD FACSAria III. As a negative control, we used unstained cells. Detailed protocols are described in Supplemental Experimental Procedures.

Immunocytochemistry

At day 6 of differentiation, cells were fixed with 4% paraformaldehyde, blocked with ImmunoBlock, probed with primary antibodies to CD31, and labeled with secondary antibodies as described in Supplemental Experimental Procedures.

Preparation of Datasets

All phase-contrast and immunofluorescent images were acquired at day 6 of differentiation. Two hundred images were automatically obtained from each of four independent experiments. Of these, 640 were used for training and 160 were used for validation in Figures 2 and 3. For K-fold validation in Figure 4, 600 images from three experiments were used for training and 200 images from one experiment were used for validation, in all possible combinations. Datasets were constructed by randomly extracting 200 input blocks from each phase-contrast image. Target blocks were extracted from the corresponding binarized immunofluorescent images. Detailed procedures are described in Supplemental Experimental Procedures.

Deep Neural Networks

We used LeNet, a small network that contains two convolution layers, two max pooling layers, and two fully connected layers, as well as AlexNet, a large network that contains five convolution layers, three max pooling layers, and three fully connected layers. Network structures are shown in Figure 3A and Supplemental Experimental Procedures.
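To give a sense of how input block size propagates through the small network, the sketch below traces the spatial size of the feature maps through two convolution and two max pooling layers. The kernel sizes and strides are assumptions (5 × 5 convolutions without padding and 2 × 2 pooling with stride 2, as in the classic LeNet design); the configuration actually used is described in the Supplemental Experimental Procedures and may differ. All names are hypothetical.

```python
# (layer type, kernel size, stride); assumed LeNet-style values, not from the article.
LENET_LIKE_LAYERS = [
    ("conv", 5, 1),
    ("pool", 2, 2),
    ("conv", 5, 1),
    ("pool", 2, 2),
]

def output_size(size, kernel, stride):
    """Spatial output size of a convolution or pooling layer without padding."""
    return (size - kernel) // stride + 1

def trace_spatial_size(input_size, layers):
    """Return the feature-map width after the input and after each layer."""
    sizes = [input_size]
    for _name, kernel, stride in layers:
        sizes.append(output_size(sizes[-1], kernel, stride))
    return sizes

sizes_128 = trace_spatial_size(128, LENET_LIKE_LAYERS)
sizes_512 = trace_spatial_size(512, LENET_LIKE_LAYERS)
```

Under these assumptions a 128 × 128 input block is reduced to 29 × 29 feature maps before the fully connected layers, and a 512 × 512 block to 125 × 125, so larger input blocks substantially increase the number of inputs to the first fully connected layer.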

Performance Evaluation

Performance was evaluated based on F1 scores, an aggregate of recall and precision, and on accuracy, the fraction of correct predictions. Detailed information is provided in Supplemental Experimental Procedures.
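As a minimal sketch, both metrics can be computed from the counts of true positives, false positives, true negatives, and false negatives, using the standard definition of the F1 score as the harmonic mean of precision and recall. The function names are hypothetical and the label lists are toy values, not data from this study.

```python
def confusion_counts(answers, predictions):
    """Count true/false positives and negatives for binary labels (1 = stained)."""
    tp = fp = tn = fn = 0
    for answer, predicted in zip(answers, predictions):
        if predicted == 1 and answer == 1:
            tp += 1
        elif predicted == 1 and answer == 0:
            fp += 1
        elif predicted == 0 and answer == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn

def f1_and_accuracy(answers, predictions):
    """Return (F1 score, accuracy) for binary answers and predictions."""
    tp, fp, tn, fn = confusion_counts(answers, predictions)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(answers) if answers else 0.0
    return f1, accuracy

# Toy example: 3 stained and 5 unstained blocks, with one miss and one false alarm.
answers = [1, 1, 1, 0, 0, 0, 0, 0]
predictions = [1, 1, 0, 1, 0, 0, 0, 0]
f1, accuracy = f1_and_accuracy(answers, predictions)
```

Because stained blocks are the minority class in this kind of dataset, accuracy alone can look high even when many stained blocks are missed, which is why the F1 score is reported alongside it.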

Author Contributions

D.K., T. Kunihiro, and S.Y. designed experiments. D.K., M.L., T. Kunihiro, S.Y., Y.K., M.K., T. Katsuki, S.I., T.S., and K.F. collected data. D.K., M.L., and T. Kunihiro analyzed data. K.F. supervised the research. D.K. and S.Y. wrote the article.

Acknowledgments

We thank all of our laboratory members for assistance. This research was supported by Grants-in-Aid for Scientific Research (JSPS KAKENHI grant numbers 16H05304 and 16K15415), by SENSHIN Medical Research Foundation, by Suzuken Memorial Foundation, and by Keio University Medical Science Fund. K.F. is a co-founder of and has equity in Heartseed. T.K. is an employee of Sony Imaging Products & Solutions.

Published: May 10, 2018

Footnotes

Supplemental Information includes Supplemental Experimental Procedures, four figures, and four tables and can be found with this article online at https://doi.org/10.1016/j.stemcr.2018.04.007.

References

- Adams R.H., Alitalo K. Molecular regulation of angiogenesis and lymphangiogenesis. Nat. Rev. Mol. Cell Biol. 2007;8:464–478. doi: 10.1038/nrm2183.

- Avior Y., Sagi I., Benvenisty N. Pluripotent stem cells in disease modelling and drug discovery. Nat. Rev. Mol. Cell Biol. 2016;17:170–182. doi: 10.1038/nrm.2015.27.

- Bengio Y., Lamblin P., Popovici D., Larochelle H. Greedy layer-wise training of deep networks. In: Schölkopf B., Platt J.C., Hoffman T., editors. Proceedings of the 19th International Conference on Neural Information Processing Systems. MIT Press; 2006. pp. 153–160.

- Buggenthin F., Buettner F., Hoppe P.S., Endele M., Kroiss M., Strasser M., Schwarzfischer M., Loeffler D., Kokkaliaris K.D., Hilsenbeck O. Prospective identification of hematopoietic lineage choice by deep learning. Nat. Methods. 2017;14:403–406. doi: 10.1038/nmeth.4182.

- Chen I.Y., Matsa E., Wu J.C. Induced pluripotent stem cells: at the heart of cardiovascular precision medicine. Nat. Rev. Cardiol. 2016;13:333–349. doi: 10.1038/nrcardio.2016.36.

- Esteva A., Kuprel B., Novoa R.A., Ko J., Swetter S.M., Blau H.M., Thrun S. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–118. doi: 10.1038/nature21056.

- Gorodeski E.Z., Ishwaran H., Kogalur U.B., Blackstone E.H., Hsich E., Zhang Z.M., Vitolins M.Z., Manson J.E., Curb J.D., Martin L.W. Use of hundreds of electrocardiographic biomarkers for prediction of mortality in postmenopausal women: the Women's Health Initiative. Circ. Cardiovasc. Qual. Outcomes. 2011;4:521–532. doi: 10.1161/CIRCOUTCOMES.110.959023.

- Gulshan V., Peng L., Coram M., Stumpe M.C., Wu D., Narayanaswamy A., Venugopalan S., Widner K., Madams T., Cuadros J. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316:2402–2410. doi: 10.1001/jama.2016.17216.

- Hatipoglu N., Bilgin G. Classification of histopathological images using convolutional neural network. Paper presented at: 2014 4th International Conference on Image Processing Theory, Tools and Applications (IPTA). 2014.

- He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. 2015. https://doi.org/10.1109/CVPR.2016.90

- Heylman C., Datta R., Sobrino A., George S., Gratton E. Supervised machine learning for classification of the electrophysiological effects of chronotropic drugs on human induced pluripotent stem cell-derived cardiomyocytes. PLoS One. 2015;10:e0144572. doi: 10.1371/journal.pone.0144572.

- Hinton G.E., Osindero S., Teh Y.W. A fast learning algorithm for deep belief nets. Neural Comput. 2006;18:1527–1554. doi: 10.1162/neco.2006.18.7.1527.

- Hsich E., Gorodeski E.Z., Blackstone E.H., Ishwaran H., Lauer M.S. Identifying important risk factors for survival in patient with systolic heart failure using random survival forests. Circ. Cardiovasc. Qual. Outcomes. 2011;4:39–45. doi: 10.1161/CIRCOUTCOMES.110.939371.

- Hu B.Y., Weick J.P., Yu J., Ma L.X., Zhang X.Q., Thomson J.A., Zhang S.C. Neural differentiation of human induced pluripotent stem cells follows developmental principles but with variable potency. Proc. Natl. Acad. Sci. USA. 2010;107:4335–4340. doi: 10.1073/pnas.0910012107.

- Ioffe S., Szegedy C. Batch normalization: accelerating deep network training by reducing internal covariate shift. ArXiv. 2015 https://arxiv.org/pdf/1502.03167.pdf

- Krizhevsky A., Sutskever I., Hinton G.E. ImageNet classification with deep convolutional neural networks. In: Pereira F., Burges C.J.C., Bottou L., Weinberger K.Q., editors. Proceedings of the 25th International Conference on Neural Information Processing Systems. Curran Associates Inc.; 2012. pp. 1097–1105.

- Lecun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- Lecun Y., Bottou L., Bengio Y., Haffner P. Gradient-based learning applied to document recognition. Proc. IEEE. 1998;86:2278–2324.

- Lindblom P., Gerhardt H., Liebner S., Abramsson A., Enge M., Hellstrom M., Backstrom G., Fredriksson S., Landegren U., Nystrom H.C. Endothelial PDGF-B retention is required for proper investment of pericytes in the microvessel wall. Genes Dev. 2003;17:1835–1840. doi: 10.1101/gad.266803.

- McCulloch W.S., Pitts W. A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biol. 1943;5:115–133.

- Niioka H., Asatani S., Yoshimura A., Ohigashi H., Tagawa S., Miyake J. Classification of C2C12 cells at differentiation by convolutional neural network of deep learning using phase contrast images. Hum. Cell. 2018;31:87–93. doi: 10.1007/s13577-017-0191-9.

- Osafune K., Caron L., Borowiak M., Martinez R.J., Fitz-Gerald C.S., Sato Y., Cowan C.A., Chien K.R., Melton D.A. Marked differences in differentiation propensity among human embryonic stem cell lines. Nat. Biotechnol. 2008;26:313–315. doi: 10.1038/nbt1383.

- Patsch C., Challet-Meylan L., Thoma E.C., Urich E., Heckel T., O'Sullivan J.F., Grainger S.J., Kapp F.G., Sun L., Christensen K. Generation of vascular endothelial and smooth muscle cells from human pluripotent stem cells. Nat. Cell Biol. 2015;17:994–1003. doi: 10.1038/ncb3205.

- Saha S., Vemuri R. An analysis on the effect of image activity on lossy coding performance. Paper presented at: 2000 IEEE International Symposium on Circuits and Systems Emerging Technologies for the 21st Century Proceedings (IEEE Cat No 00CH36353). 2000.

- Simonyan K., Zisserman A. Very deep convolutional networks for large-scale image recognition. ArXiv. 2014 https://arxiv.org/pdf/1409.1556.pdf

- Szegedy C., Liu W., Jia Y., Sermanet P., Reed S., Anguelov D., Erhan D., Vanhoucke V., Rabinovich A. Going deeper with convolutions. ArXiv. 2014 https://arxiv.org/pdf/1409.4842.pdf

- Takahashi K., Yamanaka S. Induction of pluripotent stem cells from mouse embryonic and adult fibroblast cultures by defined factors. Cell. 2006;126:663–676. doi: 10.1016/j.cell.2006.07.024.

- Takakura N., Watanabe T., Suenobu S., Yamada Y., Noda T., Ito Y., Satake M., Suda T. A role for hematopoietic stem cells in promoting angiogenesis. Cell. 2000;102:199–209. doi: 10.1016/s0092-8674(00)00025-8.

- Tanaka A., Yuasa S., Mearini G., Egashira T., Seki T., Kodaira M., Kusumoto D., Kuroda Y., Okata S., Suzuki T. Endothelin-1 induces myofibrillar disarray and contractile vector variability in hypertrophic cardiomyopathy-induced pluripotent stem cell-derived cardiomyocytes. J. Am. Heart Assoc. 2014;3:e001263. doi: 10.1161/JAHA.114.001263.

- Van Valen D.A., Kudo T., Lane K.M., Macklin D.N., Quach N.T., DeFelice M.M., Maayan I., Tanouchi Y., Ashley E.A., Covert M.W. Deep learning automates the quantitative analysis of individual cells in live-cell imaging experiments. PLoS Comput. Biol. 2016;12:e1005177. doi: 10.1371/journal.pcbi.1005177.

- Yuan-Hsiang C., Abe K., Yokota H., Sudo K., Nakamura Y., Cheng-Yu L., Ming-Dar T. Human induced pluripotent stem cell region recognition in microscopy images using convolutional neural networks. Annual International Conference of the IEEE Engineering in Medicine and Biology Society. 2017:4058–4061.

- Yuasa S., Fukuda K. Cardiac regenerative medicine. Circ. J. 2008;72(Suppl A):A49–A55. doi: 10.1253/circj.cj-08-0378.

- Zeng X., Ouyang W., Yan J., Li H., Xiao T., Wang K., Liu Y., Zhou Y., Yang B., Wang Z. Crafting GBD-Net for object detection. ArXiv. 2016 doi: 10.1109/TPAMI.2017.2745563. https://arxiv.org/pdf/1610.02579.pdf
