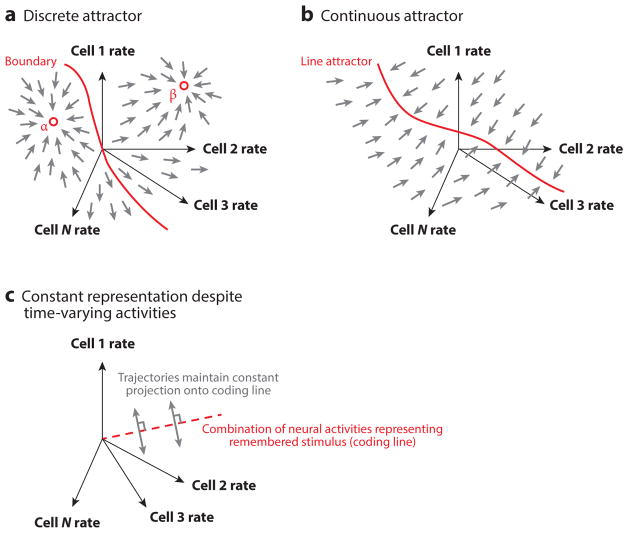

Figure 2.

Network models capable of generating persistent representations. (a) In discrete attractor models, a population of neurons is described by their firing rates (axes of the diagram). The network dynamics cause movement within this space: At each point, the small arrows indicate the direction in which the population activities move. (This is known as a direction field in mathematics, and one can visualize trajectories of the population by connecting neighboring arrows in a head-to-tail fashion.) Here, all direction arrows point toward either point α or point β, and so the network activity patterns will evolve toward one of these two activity patterns. Which of these patterns is generated depends on whether the inputs push the network to the left or to the right of the marked boundary. This boundary is known as a separatrix. (b) Continuous attractor models are similar to the model in panel a, but now the direction arrows all point toward a continuous line. The network dynamics cause the activity patterns to evolve toward points (patterns) on the marked line. (c) In models displaying continuous representations despite time-varying neural activities, the remembered stimulus value is assumed to be encoded by a weighted combination of neural firing rates, that is, by the projection of the population firing rate vector onto a line (coding line) in the space of neural activities. Here, the dynamical evolution of the neural activities is orthogonal to that coding line, and so the changes in neural firing rates do not change the projection of the firing rate vector onto that line. Consequently, the representation is stably maintained.
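The three panels can be made concrete with a minimal Python sketch, assuming toy two- and three-neuron systems chosen purely for illustration. None of the equations, the coding vector `W_CODE`, or the helper names (`euler`, `bistable`, `line_attractor`, `rotate_about_code`, `project`) come from the figure; activities are treated as deviations from a baseline (so they may be negative), and trajectories are traced with forward Euler integration, the numerical analogue of following the arrows head to tail.

```python
import math


def euler(f, r0, dt=0.01, steps=5000):
    """Integrate dr/dt = f(r) with forward Euler and return the final state."""
    r = list(r0)
    for _ in range(steps):
        dr = f(r)
        r = [ri + dt * dri for ri, dri in zip(r, dr)]
    return r


# (a) Discrete attractor (toy example): stable fixed points at
# alpha = (-1, 0) and beta = (1, 0), a saddle at the origin, and the
# separatrix x = 0 between the two basins of attraction.
def bistable(r):
    x, y = r
    return [x - x**3, -y]


# (b) Line attractor (toy example): dr/dt = (W - I) r with W = u u^T and
# u = (1, 1)/sqrt(2). The component along u has eigenvalue 0 and is kept;
# the component along (1, -1)/sqrt(2) has eigenvalue -1 and decays, so
# every state relaxes onto the line r1 = r2.
def line_attractor(r):
    r1, r2 = r
    return [-0.5 * (r1 - r2), 0.5 * (r1 - r2)]


# (c) Time-varying activity with a stable readout (toy example): the
# hypothetical coding line is W_CODE, and dr/dt = W_CODE x r rotates the
# activity vector about that line, so every velocity is orthogonal to it.
W_CODE = [1 / math.sqrt(3)] * 3


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def rotate_about_code(r):
    return cross(W_CODE, r)


def project(r, w=W_CODE):
    """Readout: projection of the activity vector onto the coding line."""
    return sum(ri * wi for ri, wi in zip(r, w))


if __name__ == "__main__":
    # Inputs on either side of the separatrix select different attractors.
    print(euler(bistable, [0.2, 0.5]))    # close to [1.0, 0.0] (beta)
    print(euler(bistable, [-0.3, -0.4]))  # close to [-1.0, 0.0] (alpha)
    # Any starting point ends on the line; where it lands depends on the input.
    print(euler(line_attractor, [1.0, 0.2]))  # close to [0.6, 0.6]
    # Firing rates change substantially, but the readout does not.
    r0 = [1.0, 0.0, 0.0]
    r1 = euler(rotate_about_code, r0, steps=300)
    print(r0, r1)
    print(project(r0), project(r1))  # both about 0.577
```

In this toy bistable system, a state started exactly on the separatrix (x = 0) stays on it and settles at the unstable saddle at the origin, which is why even a small input to either side determines whether α or β is reached. In panel c's analogue, each Euler increment is orthogonal to `W_CODE`, so the readout is unchanged even though the individual rates, and slightly the vector's length, drift over time.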