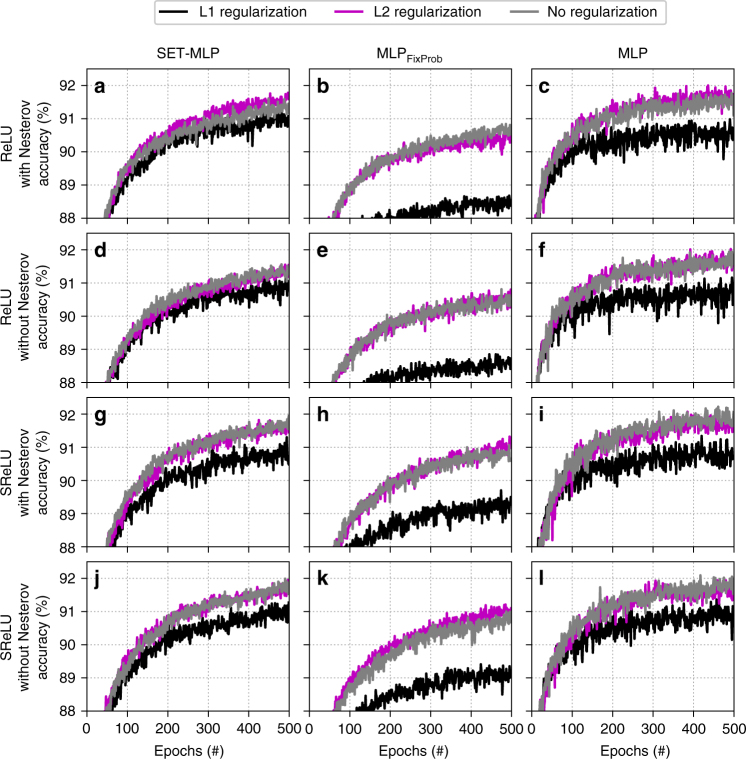

Fig. 6.

Model accuracy using three weight regularization techniques on the Fashion-MNIST dataset. All models were trained with stochastic gradient descent using the same hyper-parameters, the same number of hidden layers (three), and the same number of hidden neurons per layer (1000). Panels a–c use the ReLU activation function for the hidden neurons with Nesterov momentum; d–f use ReLU without Nesterov momentum; g–i use the SReLU activation function with Nesterov momentum; and j–l use SReLU without Nesterov momentum. Panels a, d, g, j show experiments with SET-MLP; b, e, h, k with MLPFixProb; and c, f, i, l with MLP.