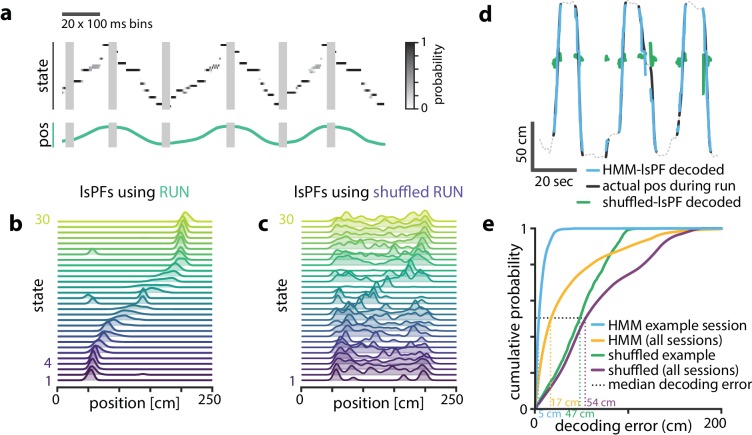

Figure 3. Latent states capture positional code.

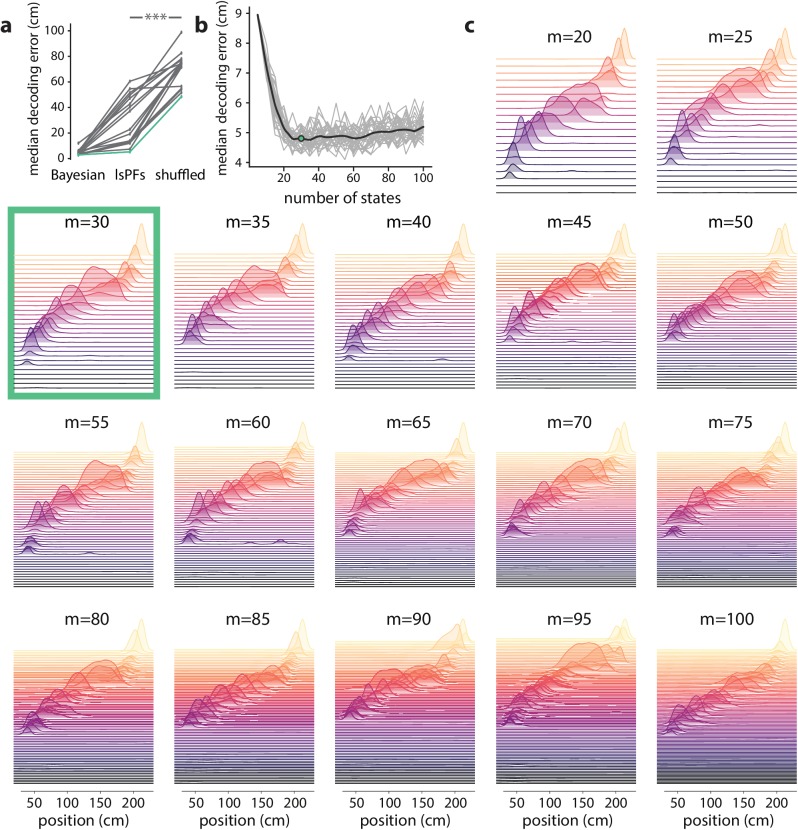

(a) Using the model parameters estimated from PBEs, we decoded latent state probabilities from neural activity during periods when the animal was running. An example shows the trajectory of the decoded latent state probabilities during six runs across the track. (b) Mapping latent state probabilities to the associated animal positions yields latent-state place fields (lsPFs), which describe the probability of each state at positions along the track. (c) Shuffling the position associations yields uninformative state mappings. (d) For an example session, decoding position during run periods through the latent space is significantly more accurate than decoding with the shuffled tuning curves. The dotted line shows the animal’s position during intervening non-run periods. (e) The distribution of position decoding accuracy over all sessions was significantly greater than chance.
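The mapping in panels (b) to (d) can be illustrated with a minimal sketch. It assumes the decoded latent state probabilities are available as an array of shape (time bins, states) alongside the animal's position in each time bin. The function names, the binning scheme, the per-state normalization, and the posterior-mean position estimate are all illustrative assumptions, not the authors' implementation, and the cross-validation a real analysis would need is left out.

```python
import numpy as np


def latent_state_place_fields(state_probs, positions, n_bins, track_length):
    """Accumulate state probability by position bin (hypothetical helper).

    state_probs: (T, S) latent state probabilities per time bin.
    positions:   (T,) animal position per time bin, in [0, track_length].
    Returns (lspf, edges), where lspf has shape (S, n_bins) and each row
    is normalized to sum to 1 over position bins (an assumed convention).
    """
    edges = np.linspace(0.0, track_length, n_bins + 1)
    # Assign each time bin to a position bin; clip so the track end is included.
    bin_idx = np.clip(np.digitize(positions, edges) - 1, 0, n_bins - 1)
    acc = np.zeros((n_bins, state_probs.shape[1]))
    # Unbuffered add, so repeated visits to a position bin all accumulate.
    np.add.at(acc, bin_idx, state_probs)
    lspf = acc.T
    lspf = lspf / np.maximum(lspf.sum(axis=1, keepdims=True), 1e-12)
    return lspf, edges


def decode_position(state_probs, lspf, edges):
    """Decode position through the latent space (hypothetical helper).

    Mixes the lsPFs with the state probabilities to get a distribution over
    position bins, then returns its mean as the position estimate.
    """
    centers = 0.5 * (edges[:-1] + edges[1:])
    pos_post = state_probs @ lspf  # (T, n_bins)
    pos_post = pos_post / np.maximum(pos_post.sum(axis=1, keepdims=True), 1e-12)
    return pos_post @ centers


def shuffled_place_fields(state_probs, positions, n_bins, track_length, rng):
    """Control in the spirit of panel (c): break the state-position pairing."""
    shuffled = rng.permutation(positions)
    return latent_state_place_fields(state_probs, shuffled, n_bins, track_length)
```

Comparing the decoding error obtained with the lsPFs against the error obtained with the shuffled fields gives the kind of contrast shown in panel (d). In this sketch the shuffled fields come out nearly flat across the track, so the decoded position carries little information about where the animal actually is.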