Fig. 1.

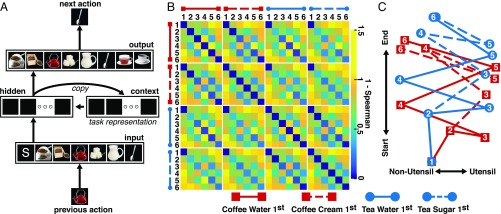

Computational model. (A) The recurrent neural network is composed of 7 input units, 15 hidden units, and 8 output units with fully connected feed-forward projections. A fourth context layer with 15 units receives an exact copy of the hidden unit activation values on each sequence step and returns those values to the hidden layer on the subsequent step. The model is trained to predict each action in a sequence, given the previous action as input. For example, the model predicts a “stir” action (spoon unit) given an “add water” action (teapot unit) as input. Arrows indicate connections; squares represent individual units. Midcingulate cortex representations are derived from the activation values of the context layer units (“task representation”) (SI Appendix). (B) Representational dissimilarity matrix derived from the context unit activation values. The 24 states along each axis correspond to the six actions associated with each of the four task sequences given in Fig. 2. Blue vs. yellow squares indicate greater vs. lesser similarity between representations, respectively. (C) Multidimensional scaling of the context unit activation values (28, 41) across the 24 states for a representative simulation, revealing dimensions that are sensitive to sequence progression (“start” vs. “end”) and action type (“non-utensil” vs. “utensil”).
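The architecture in (A) and the dissimilarity matrix in (B) can be illustrated with a minimal sketch. Only the layer sizes (7 input, 15 hidden, 8 output, 15 context units) and the copy-back of hidden activations to the context layer come from the caption. The logistic activation function, the zero initial context, the random untrained weights, the Euclidean distance measure, and the four placeholder sequences are assumptions made for illustration and are not taken from the paper.

```python
import math
import random

N_IN, N_HID, N_OUT = 7, 15, 8  # layer sizes stated in the caption


def make_weights(rows, cols, rng, scale=0.5):
    # Random, untrained weights (assumption; the paper's model is trained).
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def sigmoid(x):
    # Logistic activation (assumption; the caption does not name the function).
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def one_hot(i, n=N_IN):
    return [1.0 if j == i else 0.0 for j in range(n)]


class ElmanNetwork:
    """Simple recurrent network with a copy-back context layer."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        self.W_ih = make_weights(N_HID, N_IN, rng)   # input -> hidden
        self.W_ch = make_weights(N_HID, N_HID, rng)  # context -> hidden
        self.W_ho = make_weights(N_OUT, N_HID, rng)  # hidden -> output
        self.reset()

    def reset(self):
        # Zero context at the start of a sequence (assumption).
        self.context = [0.0] * N_HID

    def step(self, x):
        net_in = [a + b for a, b in zip(matvec(self.W_ih, x),
                                        matvec(self.W_ch, self.context))]
        hidden = [sigmoid(n) for n in net_in]
        output = [sigmoid(n) for n in matvec(self.W_ho, hidden)]
        # Context receives an exact copy of the hidden activations,
        # which is fed back to the hidden layer on the next step.
        self.context = list(hidden)
        return output


def collect_context_states(net, sequences):
    # One context vector per step: 4 sequences x 6 actions = 24 states.
    states = []
    for seq in sequences:
        net.reset()
        for idx in seq:
            net.step(one_hot(idx))
            states.append(list(net.context))
    return states


def euclidean_rdm(patterns):
    # Pairwise Euclidean distances (assumption; other measures are possible).
    return [[math.dist(p, q) for q in patterns] for p in patterns]


# Placeholder input indices, NOT the actual task sequences of Fig. 2.
sequences = [
    [0, 1, 2, 3, 4, 5],
    [0, 1, 6, 3, 4, 5],
    [0, 2, 1, 3, 4, 6],
    [0, 2, 6, 3, 5, 4],
]

net = ElmanNetwork(seed=0)
states = collect_context_states(net, sequences)
rdm = euclidean_rdm(states)
print(len(rdm), len(rdm[0]))  # 24 24
```

Because the weights here are untrained, the resulting matrix only demonstrates the shape of the computation (24 context vectors of 15 values each, compared pairwise); it does not reproduce the structure shown in (B) or (C).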