Abstract

A pixel-by-pixel tissue classification framework using multiple contrasts obtained by Jones matrix optical coherence tomography (JM-OCT) is demonstrated. The JM-OCT is an extension of OCT that provides OCT, OCT angiography, birefringence tomography, degree-of-polarization uniformity tomography, and attenuation coefficient tomography, simultaneously. The classification framework consists of feature engineering, k-means clustering that generates a training dataset, training of a tissue classifier using the generated training dataset, and tissue classification by the trained classifier. The feature engineering process generates synthetic features from the primary optical contrasts obtained by JM-OCT. The tissue classification is performed in the feature space of the engineered features. We applied this framework to the in vivo analysis of optic nerve heads of posterior eyes. This classified each JM-OCT pixel into prelamina, lamina cribrosa (lamina beam), and retrolamina tissues. The lamina beam segmentation results were further utilized for birefringence and attenuation coefficient analysis of lamina beam.

OCIS codes: (170.4500) Optical coherence tomography, (170.4460) Ophthalmic optics and devices, (110.5405) Polarimetric imaging, (170.4470) Ophthalmology, (110.4500) Optical coherence tomography

1. Introduction

Optic nerve head (ONH) morphology and its biomechanics are of interest in the ophthalmic community because this knowledge is important in monitoring the progression of myopia and glaucoma [1, 2]. However, most current methods used for the investigation of ONH morphology and biomechanics are ex vivo. They require sectioning, which is highly invasive, or involve the use of animal models. There is a demand for a tool for the three-dimensional (3-D) non-invasive investigation of the morphological and biomechanical properties of the ONH. Such a tool would potentially enable regular follow-ups to aid in clinical investigation.

Optical coherence tomography (OCT) is a 3-D non-invasive imaging technique that is widely clinically accepted in ophthalmology. However, there are two main limitations with respect to ONH morphology and biomechanical imaging in vivo in clinics. First, OCT cannot be used for the direct measurement of biomechanical properties. Although optical coherence elastography [3, 4], which is a functional extension of OCT to image biomechanical properties, is an established tool, it involves active pressure and hence is not the most preferable tool for clinical posterior eye investigation. Second, to examine the properties of ONH tissues, methods to automatically delineate fine structures of the tissues, such as the lamina cribrosa beam and lamina pores, are required. The importance of 3-D automated segmentation was highlighted previously using OCT [5–7]. However, segmentation has not been fully automated in a true 3-D sense.

Jones matrix OCT (JM-OCT) has been shown to provide more contrasts than conventional OCT imaging in a single imaging acquisition [8–10]. These contrasts include scattering (OCT intensity), attenuation coefficient, birefringence [11], OCT angiography [12], and degree of polarization uniformity [13]. Among them, tissue birefringence mainly originates from collagen and hence is associated with tissue biomechanics. This birefringence-biomechanics relation has been observed in several tissues including skin [14], sclera [15–17], and lamina cribrosa [18–20]. Although it is indirect, JM-OCT may provide us with relevant biomechanical information in vivo [14–17].

In addition to the biomechanical assessment capability of JM-OCT, the multiple contrasts of JM-OCT enable robust methods for tissue delineation and classification. JM-OCT has been shown to be successful in, for example, anterior eye segment tissue discrimination [21], retinal pigment epithelium (RPE) segmentation [22–25], choroid and scleral segmentation [24–27], multi-contrast superpixel-based segmentation [28], and fibrotic tissue segmentation in bleb evaluation [29, 30]. Additionally, further signal processing methods to enhance the segmentation capability of JM-OCT, such as superpixels [28], were demonstrated. Hence, using JM-OCT for ONH tissue delineation is a rational approach.

In this paper, we develop a framework for tissue classification by exploiting multiple contrasts of JM-OCT. This method involves pixel-wise tissue classification, unlike the conventional boundary delineation technique for tissue segmentation [31, 32]. Using this method, we segmented the prelamina, lamina cribrosa (beam) and retrolamina regions. Additionally, the method also enables segmentation of lamina beam tissue. The method is based on a combination of unsupervised and supervised machine learning methods. The training dataset for supervised tissue (pixel) classifier is generated using an unsupervised method. Thus, this combination enables tissue classification using multi-contrast JM-OCT data. The quantitative analyses of lamina beam birefringence and attenuation coefficient are also demonstrated by using the segmentation result of lamina beam.

2. Theory and methods

The multi-contrast OCT data used in this research were acquired by a custom-made JM-OCT system. The details of the JM-OCT system are discussed in Section 2.1. The tissue classification framework used in this study involved the semi-automatic generation of a dataset, referred to as the “training dataset,” and training a tissue classifier with the training dataset. The classifier was then used to classify previously unseen JM-OCT data (JM-OCT image pixels), referred to as the “test data,” into particular tissue classes. The details of the classification framework are described in Section 2.2. ONH analysis based on the segmented ONH tissues is then described in Section 2.3.

2.1. JM-OCT system

A 1-µm JM-OCT system specifically designed for posterior eye segment imaging was used to scan human ONHs. JM-OCT is a polarization- and flow-sensitive OCT, and the details are described elsewhere [10, 11]. The system has a lateral resolution of 21 µm and a depth resolution of 6.2 µm in tissue. The scan rate is 100,000 A-lines/s. The probe power on the cornea was 1.4 mW, which is below the limit of the American National Standards Institute (ANSI) safety standard [33].

The JM-OCT system multiplexes images that correspond to two incident polarization states at two depths, and simultaneously measures two spectral interferograms using a polarization diversity detector. Thus, the JM-OCT data consist of four OCT images that could be combined to obtain functional contrasts, including scattering intensity (OCT), OCT angiography (OCTA), degree of polarization uniformity (DOPU), local birefringence (BR) and attenuation coefficient (AC). In addition, frame (B-scan) acquisition is repeated four times at the same position on the retina.

The scattering intensity OCT was computed from the set of four OCT images of a Jones matrix as follows. First, the two images of the same detection channel were combined coherently by a coherent composition method [10]. This gives complex OCT images corresponding to the two detection polarization channels, and four repeated frames were obtained for each channel. The images were converted into squared intensity, the four repeated frames were averaged in intensity, and the averaged intensity images of the two detection polarization channels were summed. This process finally gives polarization-artifact-free OCT intensity tomograms.
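The intensity computation described above can be sketched as follows. This is a minimal illustration, not the system's actual processing code: the array layout `(n_repeats, n_channels, n_depth, n_lateral)` and the function name `oct_intensity` are assumptions, and the coherent composition step [10] is assumed to have been applied already.

```python
import numpy as np

def oct_intensity(frames):
    """Polarization-insensitive OCT intensity from repeated complex frames.

    frames: complex array of shape (n_repeats, n_channels, n_depth, n_lateral).
    This layout is a hypothetical choice; each channel is assumed to be the
    output of coherent composition for one detection polarization.
    """
    power = np.abs(frames) ** 2        # squared intensity of every frame
    mean_power = power.mean(axis=0)    # average the repeated frames in intensity
    return mean_power.sum(axis=0)      # sum the two detection channels

# Synthetic demo data: 4 repeats, 2 detection channels, 8 x 16 pixels.
rng = np.random.default_rng(0)
demo_frames = rng.normal(size=(4, 2, 8, 16)) + 1j * rng.normal(size=(4, 2, 8, 16))
intensity = oct_intensity(demo_frames)
```

Averaging is done on the squared magnitude rather than on the complex signal, so inter-frame phase changes do not reduce the averaged intensity.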

OCTA was obtained using complex correlation analysis with Makita’s noise-correction [12] among the four repeated frames.

BR was computed using a local Jones matrix analysis method [34] and a maximum a posteriori (MAP) BR estimator [35]. Before applying the MAP BR estimator, the four repeated frames, i.e., four Jones matrices, were combined by an adaptive Jones matrix averaging method [9, 10]. The local Jones matrix analysis was applied with a 6-pixel depth separation (24 µm), and the MAP estimation was performed with a spatial kernel of 2 pixels (42 µm, lateral) × 2 pixels (8 µm, depth).

DOPU was computed over a spatial kernel of 3 × 3 pixels over four repeated frames and two input polarizations with noise correction [13].

AC was computed using a model-based reconstruction method presented by Vermeer et al. [36] with modification for polarization diversity detection [37]. Specifically, we used Eq. (14) in Ref. [37] for the AC computation where OCT intensity, I(zi), was the above mentioned polarization artifact-free OCT intensity (Eq. (9) in Ref. [37]). We omitted the signal roll off correction for this AC computation because the signal roll off appeared as a nearly constant bias of the AC and did not affect the subsequent tissue classification.
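For orientation, the sketch below implements the original depth-resolved estimator of Vermeer et al. [36], μ[i] ≈ log(1 + I[i] / Σ_{j>i} I[j]) / (2Δ), where Δ is the depth pixel size. It is not the polarization-diversity variant of Ref. [37] that was used in this study, and it omits roll-off correction. The function name and demo values are illustrative assumptions.

```python
import numpy as np

def attenuation_coefficient(intensity, dz):
    """Depth-resolved attenuation coefficient (original Vermeer-type estimator).

    intensity: linear-scale OCT intensity with depth along axis 0.
    dz: depth pixel size; the result is in units of 1/dz.
    """
    intensity = np.asarray(intensity, dtype=float)
    # Sum of all pixels strictly deeper than each pixel.
    tail = np.cumsum(intensity[::-1], axis=0)[::-1] - intensity
    mu = np.full_like(intensity, np.nan)
    valid = tail > 0                   # the deepest pixel has no tail
    mu[valid] = np.log1p(intensity[valid] / tail[valid]) / (2.0 * dz)
    return mu

# Demo: a single-exponential A-line with a known attenuation coefficient.
mu_true, dz_mm = 2.0, 0.004            # hypothetical values (1/mm, mm)
a_line = np.exp(-2.0 * mu_true * dz_mm * np.arange(4000))
mu_est = attenuation_coefficient(a_line, dz_mm)
```

For a purely exponential decay the estimator recovers the true coefficient, except near the end of the A-line, where the truncated tail sum biases the estimate.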

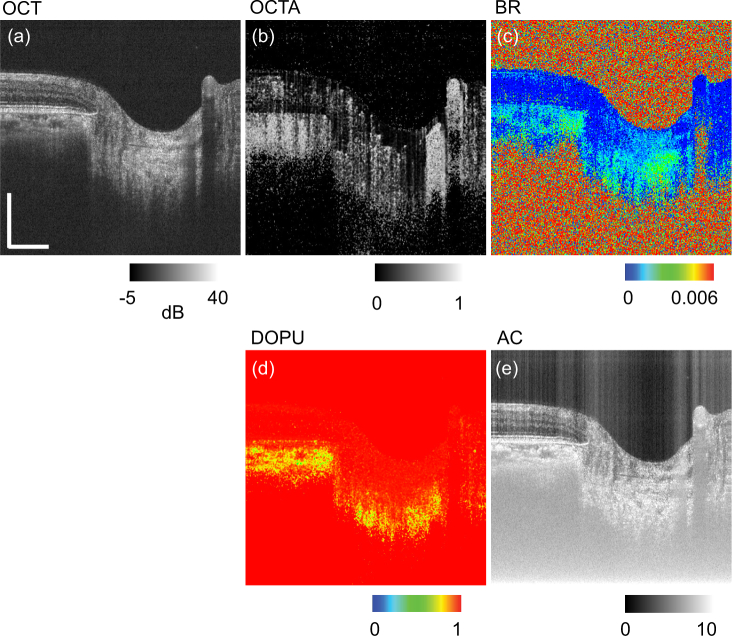

More details of JM-OCT-related signal processing are summarized in Refs. [10, 11]. An example set of cross-sectional ONH images of the above five contrasts is shown in Fig. 1: (a) OCT, (b) OCTA, (c) BR, (d) DOPU, and (e) AC.

Fig. 1.

Example of multiple contrasts of an ONH cross-section obtained by JM-OCT: (a) OCT intensity (OCT), (b) OCT angiography (OCTA), (c) birefringence (BR), (d) degree of polarization uniformity (DOPU), and (e) attenuation coefficient (AC). Scale bars indicate 0.5 mm × 0.5 mm.

2.2. The tissue classification framework

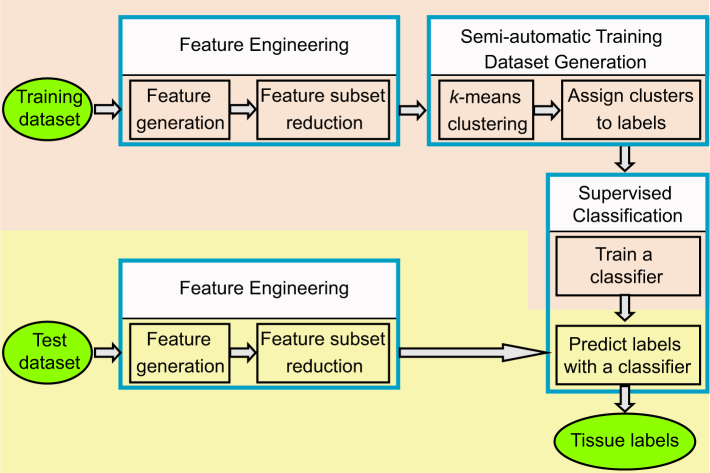

The tissue classification framework used in this study consists of four phases: feature engineering, semi-automatic generation of the training dataset, supervised training of the tissue classifier, and tissue classification by the trained classifier. This framework is summarized in Fig. 2. The entire framework was run on two disjoint subsets of data: one for training and the other for classification testing. Each of these phases is discussed in detail in the following.

Fig. 2.

The framework of our tissue classification method. It consists of training set generation (pink region) and classification process (yellow region). The circle and rectangle objects represent the data and the operations, respectively.

2.2.1. Feature engineering

This section describes the steps to create a new feature set, which is used for tissue classification, from the raw JM-OCT contrasts (primary features). Throughout the remainder of the paper, M represents the number of features, i.e., the dimension of the feature space. The subscript of M denotes whether the feature set is primary (p), extended (ext), or reduced (r), as explained later in this section. Each of the features is represented by Xi, where i varies from 1 to M. Feature engineering consists of two steps: the generation of an extended feature set and its subsequent reduction.

In the extended feature generation process, the five primary features (Mp = 5), namely OCT, OCTA, BR, DOPU, and AC, are extended into Mext = 16 features. The extended feature set includes OCT, OCTA, BR, DOPU, AC, OCT × (1-DOPU) × OCTA, AC × (1-DOPU) × OCTA, OCT × BR, AC × BR, AC × DOPU, AC × OCTA, DOPU × OCTA, BR × DOPU, OCTA/AC, OCT/AC, and OCT ⊕ AC. Among the extended features, OCT, OCTA, BR, DOPU, and AC are direct copies of the primary features. OCT × (1-DOPU) × OCTA and AC × (1-DOPU) × OCTA are expected to be sensitive to hyper-scattering, melanin-containing, and vascular-rich tissue. OCT × BR and AC × BR become large for hyper-scattering and highly birefringent tissues, such as collagen-rich tissue. AC × DOPU is expected to be sensitive to hyper-scattering and less-melanin tissues. Because hyper-scattering tissues, such as RPE and choroid, contain melanin, this feature is expected to distinguish less-melanin high-density tissues from melanin-rich high-density tissues. AC × OCTA and DOPU × OCTA are expected to be sensitive to highly vascular tissue. BR × DOPU is included to be sensitive to less-melanin fibrotic tissues, such as nerve fibers and/or collagen. OCTA/AC and OCT/AC are included to separate vessels and their shadows. OCT ⊕ AC is a bit-wise exclusive OR (XOR) operation, where OCT is in dB scale and both OCT and AC are in unsigned 8-bit integer representation. Although the physical interpretation of this feature is not fully clear, it was included in order to highlight the difference between OCT and AC. The rationale for this feature is further discussed in Section 4.1. Each feature is then rescaled using z-score normalization [38] so that it has zero mean and unit variance.
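The construction of the 16 extended features can be sketched as follows. Several details are assumptions made only for illustration: linear-scale OCT intensity is used in the product and ratio features, the 8-bit conversion is a min-max rescaling (`to_uint8`), and a small `eps` guards the divisions and the logarithm. None of these choices are specified in the text above.

```python
import numpy as np

def to_uint8(x):
    """Min-max rescale to 0..255 (assumed 8-bit conversion, hypothetical)."""
    x = np.asarray(x, dtype=float)
    span = x.max() - x.min()
    scaled = (x - x.min()) / span if span > 0 else np.zeros_like(x)
    return np.round(255 * scaled).astype(np.uint8)

def zscore(x):
    """Rescale a feature to zero mean and unit variance."""
    return (x - x.mean()) / x.std()

def extend_features(oct_lin, octa, br, dopu, ac, eps=1e-12):
    """Return (X, names): z-scored feature matrix of shape (n_pixels, 16)."""
    oct_db = 10 * np.log10(oct_lin + eps)   # dB scale, used for the XOR feature
    xor = np.bitwise_xor(to_uint8(oct_db), to_uint8(ac)).astype(float)
    feats = {
        "OCT": oct_lin, "OCTA": octa, "BR": br, "DOPU": dopu, "AC": ac,
        "OCT*(1-DOPU)*OCTA": oct_lin * (1 - dopu) * octa,
        "AC*(1-DOPU)*OCTA": ac * (1 - dopu) * octa,
        "OCT*BR": oct_lin * br, "AC*BR": ac * br, "AC*DOPU": ac * dopu,
        "AC*OCTA": ac * octa, "DOPU*OCTA": dopu * octa, "BR*DOPU": br * dopu,
        "OCTA/AC": octa / (ac + eps), "OCT/AC": oct_lin / (ac + eps),
        "OCT xor AC": xor,
    }
    names = list(feats)
    X = np.column_stack([zscore(np.ravel(v)) for v in feats.values()])
    return X, names

# Synthetic demo contrasts for one 32 x 32 image.
rng = np.random.default_rng(0)
shape = (32, 32)
X_ext, ext_names = extend_features(
    oct_lin=rng.uniform(0.1, 10, shape), octa=rng.uniform(0, 1, shape),
    br=rng.uniform(0, 2, shape), dopu=rng.uniform(0, 1, shape),
    ac=rng.uniform(0.1, 5, shape),
)
```

Each row of `X_ext` is one pixel and each column one feature, which is the layout expected by the clustering and classification steps.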

The extended feature set is then truncated by removing redundant features. The Pearson’s correlation coefficients of all pairs of features are computed. If the correlation coefficient of a particular feature pair exceeds 0.95, then one of the features of the pair is eliminated. If one of the features in the correlated pair is a primary feature, then the other is eliminated. In our particular research on ONH, this process eliminated four features: AC × BR, which correlated with BR (ρ = 0.971); AC × OCTA, which correlated with OCTA (ρ = 0.973); DOPU × OCTA, which correlated with OCTA (ρ = 0.996); and BR × DOPU, which correlated with BR (ρ = 0.997). Thus, the size of the reduced feature set was Mr = 12 and it included OCT, OCTA, BR, DOPU, AC, OCT × (1-DOPU) × OCTA, AC × (1-DOPU) × OCTA, OCT × BR, AC × DOPU, OCTA/AC, OCT/AC, and OCT ⊕ AC. This feature reduction lowers the dimension of the feature space used in the subsequent processes, namely the generation of the training dataset (which is based on k-means clustering), classifier training, and final tissue classification. Thus, it reduces the processing time of these processes.
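A minimal sketch of this correlation-based reduction is given below. The function name and the tie-break rule for pairs in which both or neither feature is primary (the later feature is dropped) are assumptions, since the text specifies only the primary-feature rule.

```python
import numpy as np

def reduce_features(X, names, primary, threshold=0.95):
    """Drop one feature of every pair whose Pearson correlation exceeds threshold.

    X: array of shape (n_pixels, n_features); names: list of feature names;
    primary: set of names that must be kept when paired with a derived feature.
    """
    corr = np.corrcoef(X, rowvar=False)
    dropped = set()
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if names[i] in dropped or names[j] in dropped:
                continue
            if corr[i, j] > threshold:
                if names[j] in primary and names[i] not in primary:
                    dropped.add(names[i])   # keep the primary feature
                else:
                    dropped.add(names[j])   # assumed tie-break: drop the later one
    keep = [k for k, name in enumerate(names) if name not in dropped]
    return X[:, keep], [names[k] for k in keep]

# Demo: a derived feature that is almost a copy of a primary one.
rng = np.random.default_rng(0)
br = rng.normal(size=1000)
octa = rng.normal(size=1000)
ac_br = br + 0.01 * rng.normal(size=1000)
X_demo = np.column_stack([ac_br, br, octa])
X_red, red_names = reduce_features(X_demo, ["AC*BR", "BR", "OCTA"], {"BR", "OCTA"})
```

In the demo, `AC*BR` is removed even though it comes first, because its highly correlated partner `BR` is a primary feature.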

2.2.2. Semi-automatic generation of training dataset

In our segmentation framework, the training dataset used for subsequent training of a random forest classifier is semi-automatically generated. This process consists of two main steps: an unsupervised method for clustering in Mr-dimensional feature space and manual interpretation of the clusters that assign particular tissue labels to each cluster. The details are as follows.

The first step involves pixel-by-pixel clustering in the Mr-dimensional feature space, which is 12-dimensional in our particular case. Clustering is performed by the k-means clustering algorithm. To make clustering meaningful, the number of clusters (k) should be larger than the number of tissue types in the image. By contrast, too many clusters make the subsequent manual interpretation difficult and time-consuming. In our particular implementation, k = 72 was empirically chosen. Clustering was performed over a maximum of 300 iterations for a single trial. Ten trials were performed with different initial distributions of clusters (seeds), and the best result was selected as the final clustering result. The goodness of each trial was assessed by computing a metric called “inertia,” which is the sum of squared distances from each pixel to the centroid of its corresponding cluster in the Mr-dimensional feature space. The k-means clustering algorithm was implemented using the machine learning library scikit-learn 0.18 on Python 2.7.
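The clustering settings above map onto scikit-learn's `KMeans` roughly as in the following sketch. It is written against a recent scikit-learn rather than version 0.18, and the random seed, the default initialization scheme, and the synthetic demo data are assumptions.

```python
import numpy as np
from sklearn.cluster import KMeans

def cluster_pixels(X, k=72, n_trials=10, max_iter=300, seed=0):
    """k-means with several restarts; the run with the lowest inertia is kept."""
    km = KMeans(n_clusters=k, n_init=n_trials, max_iter=max_iter,
                random_state=seed)
    labels = km.fit_predict(X)
    return labels, km.cluster_centers_, km.inertia_

def inertia(X, centers, labels):
    """Sum of squared distances from each sample to its cluster centroid."""
    return float(np.sum((X - centers[labels]) ** 2))

# Synthetic demo: 2000 pixels in a 12-dimensional feature space.
rng = np.random.default_rng(0)
X_pixels = rng.normal(size=(2000, 12))
labels, centers, best_inertia = cluster_pixels(X_pixels)
manual_inertia = inertia(X_pixels, centers, labels)
```

`n_init` controls the number of restarts, and `inertia_` is the criterion scikit-learn uses to select among them, which matches the selection rule described above.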

In the second step, a particular tissue label was manually assigned to each cluster. For this manual tissue label assignment, the pixels of each cluster were overlaid on the OCT image, and an expert grader (DK) reviewed this OCT image and assigned a proper tissue label based on anatomical knowledge [39, 40]. This two-step process dramatically reduced the effort of the training dataset generation process in comparison to a standard method, which involves fully manual segmentation of multiple tissue types, as discussed in Section 4.5.

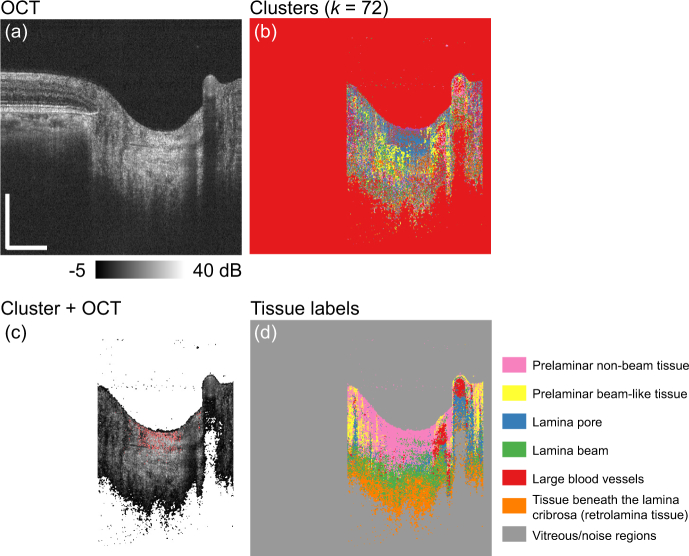

Figure 3 exemplifies the training dataset generation process. Figure 3(a) is an example of the OCT image. In our particular implementation for ONH analysis, only the region within the optic disc was used (see Section 2.3.2 for details). Fig. 3(b) shows all 72 clusters, where each cluster is displayed in a randomly selected color. Fig. 3(c) shows the manual tissue label assignment process, where pixels that belong to one of the clusters are highlighted in red and overlaid on the OCT image. The expert grader selected the most suitable tissue label (class) for the cluster. In our particular ONH implementation, there were seven tissue labels (classes) (see Section 2.3 for details). This process was performed for all k = 72 clusters. Fig. 3(d) shows the distribution of the tissue labels, where each label is displayed in a randomly selected color.

Fig. 3.

Example of manual tissue label assignment: (a) an OCT image, (b) clusters, each displayed in a different color, (c) example of a cluster (red) overlaid on the OCT, (d) tissue labels, each displayed in a different color. Scale bars indicate 0.5 mm × 0.5 mm.

2.2.3. Supervised classification

The third step is training of the multi-class classifier using the generated training dataset. In our particular implementation, the classifier was a random forest classifier. The random forest consisted of 10 decision trees. The quality of the tree-node split was defined using the Gini impurity. The maximum number of features, based on which the split was chosen, was , and the maximum depth of a tree was 20. Each tree was trained using a randomly selected subset of pixels by following the standard training method of a random forest classifier. The random forest classifier was implemented using the same library as k-means clustering (scikit-learn 0.18 on Python 2.7).

In the final step, each pixel in the test dataset was classified into one of the tissue classes using the trained classifier.
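The training and classification steps can be sketched with scikit-learn's `RandomForestClassifier` as follows. The sketch targets a recent scikit-learn rather than version 0.18. The value `max_features="sqrt"` is an assumption, because the value is not legible in the text above, and the seed and the synthetic data are likewise illustrative.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

def train_tissue_classifier(X_train, y_train, seed=0):
    """Random forest with 10 trees, Gini impurity, and a maximum depth of 20."""
    clf = RandomForestClassifier(
        n_estimators=10,
        criterion="gini",
        max_depth=20,
        max_features="sqrt",   # assumption: not stated legibly in the text
        random_state=seed,
    )
    return clf.fit(X_train, y_train)

# Synthetic demo: 7 well-separated classes in a 12-dimensional feature space.
rng = np.random.default_rng(0)
n_classes, n_features = 7, 12
y_train = rng.integers(0, n_classes, size=2000)
X_train = rng.normal(size=(2000, n_features)) + 4.0 * y_train[:, None]
y_test = rng.integers(0, n_classes, size=500)
X_test = rng.normal(size=(500, n_features)) + 4.0 * y_test[:, None]

clf = train_tissue_classifier(X_train, y_train)
predicted = clf.predict(X_test)           # one tissue class per test pixel
accuracy = float(np.mean(predicted == y_test))
```

In the actual framework, `y_train` would hold the tissue labels assigned to the k-means clusters, and `X_test` the reduced features of unseen pixels.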

2.3. ONH analysis

The tissue classification framework discussed in the previous section is a general framework and it could be customized to several types of JM-OCT images, such as anterior eye [29,30], posterior eye [11, 41, 42], heart [43], and skin [44]. In this study, we specialized the algorithm for the classification of lamina cribrosa and its surrounding tissues in the ONH region. Particularly, the primary focus was to segment the lamina beam for 3-D morphological and functional assessment.

2.3.1. Measurement protocol and the subjects

Six eyes of three Asian subjects, including normal and myopic cases, were imaged for the study. A 3 mm × 3 mm area around the ONH was scanned using a horizontal fast raster scan protocol, which consisted of 512 A-lines/B-scan × 256 B-scans × 4 repeats at each B-scan location. Each volume acquisition took approximately 6.6 seconds.

The biometric parameters including refractive error, axial eye length (AL), and intraocular pressure (IOP) were measured using RT-7000 (Tomey Corp.), IOL Master (Carl Zeiss), and Goldmann applanation tonometer, respectively. The biometric measurement was performed within an hour of the OCT imaging session by experienced ophthalmologists at the University of Tsukuba.

The mean age of the subjects was 42.5 ± 11.5 years (mean ± standard deviation; range: 30.4 to 56 years old). A JM-OCT volume was obtained for each eye, so six volumes were obtained from the three subjects in total. The right eyes were used for the training dataset and the left eyes were used for the test dataset. The details of the subjects used in the study are summarized in Table 1.

Table 1.

Subject details and the biometrics of the subjects. SE, AL, and IOP are for spherical equivalent refractive error, axial eye length, and intraocular pressure, respectively. Subjects-1 and 2 are regarded as myopic in this study, while Subject-3 is regarded as emmetropic.

| Subject ID | Age (y/o) | Sex | Eye | SE (D) | AL (mm) | IOP (mmHg) | Usage |
|---|---|---|---|---|---|---|---|
| Subject-1 | 41.2 | M | R | −7.50 | 27.12 | 17 | Training |
| | | | L | −7.25 | 27.0 | 16 | Test |
| Subject-2 | 56.0 | M | R | −8.00 | 27.38 | 17 | Training |
| | | | L | −7.75 | 26.74 | 18 | Test |
| Subject-3 | 30.4 | F | R | −0.50 | 23.72 | 14 | Training |
| | | | L | −0.25 | 23.62 | 14 | Test |

The study protocol adhered to the tenets of the Declaration of Helsinki, and was approved by the Institutional Review Board of the University of Tsukuba.

2.3.2. Preprocessing, tissue classification, and post-processing for the ONH dataset

The following preprocessing was performed to reduce the number of pixels in the training dataset and hence reduce the training time. First, an OCT-intensity-based threshold was applied to remove very low intensity (noise) pixels from the training dataset. The threshold was applied after two-dimensional median filtering (3 pixels (lateral) × 3 pixels (axial) kernel over the B-scan). Second, for each of the training dataset volumes, only three B-scans were randomly selected to reduce the size of the training dataset. Thus, nine B-scans of three right-eye volumes from three subjects were jointly used as the input to k-means clustering, and hence used as the training dataset.

Third, a manual delineation of the optic disc was performed to use only the optic disc region for training. The manual delineation was performed by an expert (DK) by detecting the limit of the Bruch’s membrane in the OCT images. The OCT-intensity-based threshold was applied to both training and test data, whereas the delineation of the optic disc was performed only for training data. Thus, tissue classification was performed not only on the pixels within the optic disc, but also on the pixels outside the optic disc. Although the classification was performed for the entire volume of the test dataset, only the tissue labels obtained within the optic disc were considered meaningful and worth further interpretation.
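The preprocessing steps above can be sketched as follows. The threshold value, the use of dB-scale intensity, the random seed, and the rectangular stand-in for the manually delineated optic disc are all assumptions for illustration, since they are not specified in the text.

```python
import numpy as np
from scipy.ndimage import median_filter

def signal_mask(oct_bscan_db, threshold_db):
    """True where the 3 x 3 median-filtered intensity exceeds the threshold."""
    smoothed = median_filter(oct_bscan_db, size=(3, 3))
    return smoothed > threshold_db

def pick_bscans(n_bscans, n_pick=3, seed=0):
    """Randomly choose distinct B-scan indices from one volume."""
    rng = np.random.default_rng(seed)
    return np.sort(rng.choice(n_bscans, size=n_pick, replace=False))

# Demo B-scan: a tissue-like block plus one isolated noise spike.
bscan = np.zeros((32, 32))
bscan[10:21, 5:25] = 20.0
bscan[2, 2] = 20.0
mask = signal_mask(bscan, threshold_db=10.0)   # hypothetical threshold

# Hypothetical optic disc mask; in the study it was drawn manually.
disc_mask = np.zeros_like(mask)
disc_mask[:, 8:20] = True
training_mask = mask & disc_mask               # pixels used for training

picked = pick_bscans(256)                      # three B-scans per volume
```

The median filter removes the isolated spike before thresholding, so single noisy pixels do not enter the training dataset.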

k-means clustering for the training dataset generation and the random forest classifier were implemented using the Python-based machine learning library scikit-learn 0.18 on Python 2.7. Python libraries for numerical computation, Numpy 1.11 and Scipy 0.19, were additionally used. Processing was performed on a CPU (non-GPU) environment on Windows 10. The classifier was trained to classify each pixel into one of seven tissue classes: prelaminar beam-like tissue, prelaminar non-beam tissue, lamina beam, lamina pore, tissue beneath the lamina cribrosa (retrolamina tissue), large blood vessels, and vitreous/noise region. Three meta-labels are then obtained from the classified tissue labels. The meta-label of prelamina tissue is the combination of prelamina beam-like and prelamina non-beam tissue labels. The meta-labels of the lamina beam and retrolamina are identical to the classification labels of lamina beam and retrolamina tissues, respectively. In this particular study, the tissue of main focus was the lamina beam.
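The mapping from the seven tissue classes to the three meta-labels can be expressed as a lookup table, as in this sketch. The integer codes and the extra "none" meta-label for classes without a meta-label are hypothetical conventions.

```python
import numpy as np

# Hypothetical integer coding of the seven tissue classes.
TISSUE_CLASSES = [
    "prelaminar beam-like tissue",   # 0
    "prelaminar non-beam tissue",    # 1
    "lamina beam",                   # 2
    "lamina pore",                   # 3
    "retrolamina tissue",            # 4
    "large blood vessels",           # 5
    "vitreous/noise",                # 6
]
META_LABELS = ["none", "prelamina", "lamina beam", "retrolamina"]

# Class index -> meta-label index (0 means no meta-label is assigned).
CLASS_TO_META = np.array([1, 1, 2, 0, 3, 0, 0])

def to_meta_labels(label_image):
    """Convert an integer tissue-label image into a meta-label image."""
    return CLASS_TO_META[np.asarray(label_image)]

demo_labels = np.array([[0, 1, 2], [3, 4, 5]])
demo_meta = to_meta_labels(demo_labels)
```

Indexing the lookup array with the label image converts every pixel at once, without an explicit loop.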

Before further analyzing the lamina properties using the classification result, inter-frame motion was corrected using the Register Virtual Stack Slices plugin and the Transform Virtual Stack Slices plugin on the open-source image processing platform Fiji [45]. The motion was detected from the OCT intensity volume, and all types of volumes were corrected using the detected motion. For en face visualizations in Section 3, the optic disc was manually defined by looking at the OCT projection image over the entire depth, and its best-fit ellipse was drawn as a region-of-interest (ROI). The myopic conus region was excluded from the ROI.

We used the segmented lamina beam as a 3-D mask to obtain the lamina beam birefringence and attenuation coefficient maps, where the birefringence and attenuation coefficient were the BR and AC data of the same JM-OCT volume. The lamina beam maps were further sectorized. The sectors were defined within the elliptic ROI. The ellipse was first split into two concentric ellipses. The long and short diameters of the smaller ellipse were half the respective diameters of the larger ellipse. Each ellipse was further split into eight equi-angle radial pieces; thus, we finally had 16 sectors, as later shown in Figs. 9 and 10. The mean birefringence was computed for each sector within a thin depth region of 5 pixels. For comparison, a sectorized mean birefringence map of bulk ONH was also created. To exclude blood vessels, a 3-D binary mask was created by applying the moments binarization method [46] (Fiji standard implementation) to the full-depth OCT volume. The mean attenuation was computed for each sector within the depth region of 5 pixels. The averaging of attenuation was performed on a linear scale; however, color representation is on a logarithmic scale. Bulk ONH attenuation coefficient maps were also computed. For bulk ONH maps, blood vessels were excluded using the same mask applied to the bulk ONH birefringence maps.
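The 16-sector layout and the per-sector averaging can be sketched as follows. The ellipse is assumed to be axis-aligned, angles are measured in ellipse-normalized coordinates starting from the positive x direction, and the function names are hypothetical. The text does not specify these conventions.

```python
import numpy as np

def sector_map(shape, center, semi_axes, n_angles=8):
    """Label en face pixels with a sector index 0..15, or -1 outside the ROI.

    center and semi_axes are (row, column) pairs. Sectors 0..7 belong to the
    inner ellipse (half the diameters) and 8..15 to the outer ring.
    """
    yy, xx = np.indices(shape)
    u = (xx - center[1]) / semi_axes[1]
    v = (yy - center[0]) / semi_axes[0]
    r = np.hypot(u, v)                          # normalized elliptical radius
    theta = np.arctan2(v, u) % (2 * np.pi)
    angle_idx = np.minimum((theta / (2 * np.pi) * n_angles).astype(int),
                           n_angles - 1)
    ring = np.where(r <= 0.5, 0, 1)
    sectors = ring * n_angles + angle_idx
    sectors[r > 1.0] = -1
    return sectors

def sector_means(values, sectors, mask, n_sectors=16):
    """Linear-scale mean of values within each sector, restricted to mask."""
    means = np.full(n_sectors, np.nan)
    for s in range(n_sectors):
        sel = (sectors == s) & mask & np.isfinite(values)
        if sel.any():
            means[s] = values[sel].mean()
    return means

sectors = sector_map((101, 101), center=(50, 50), semi_axes=(40, 30))
demo_means = sector_means(np.ones((101, 101)), sectors,
                          np.ones((101, 101), dtype=bool))
```

Passing the segmented lamina beam as `mask` gives lamina beam sector means, whereas a vessel-excluding mask gives the bulk ONH counterparts.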

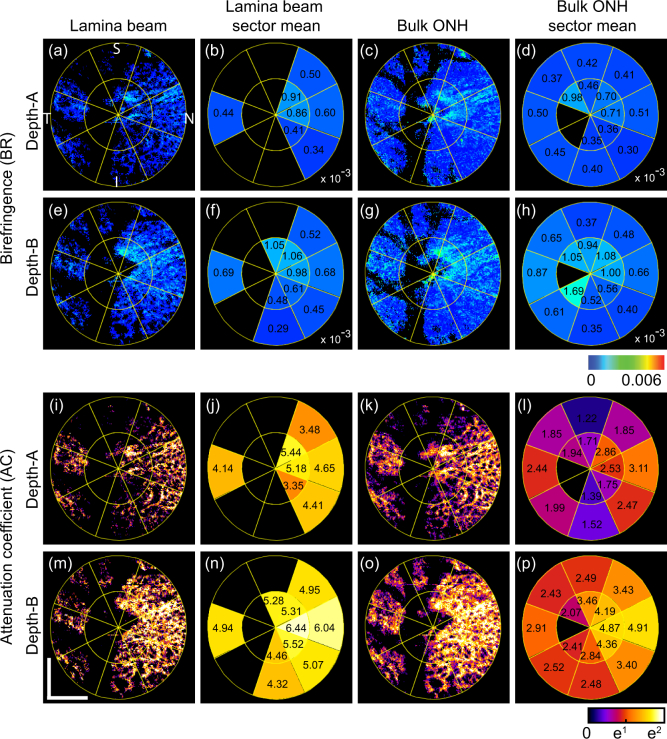

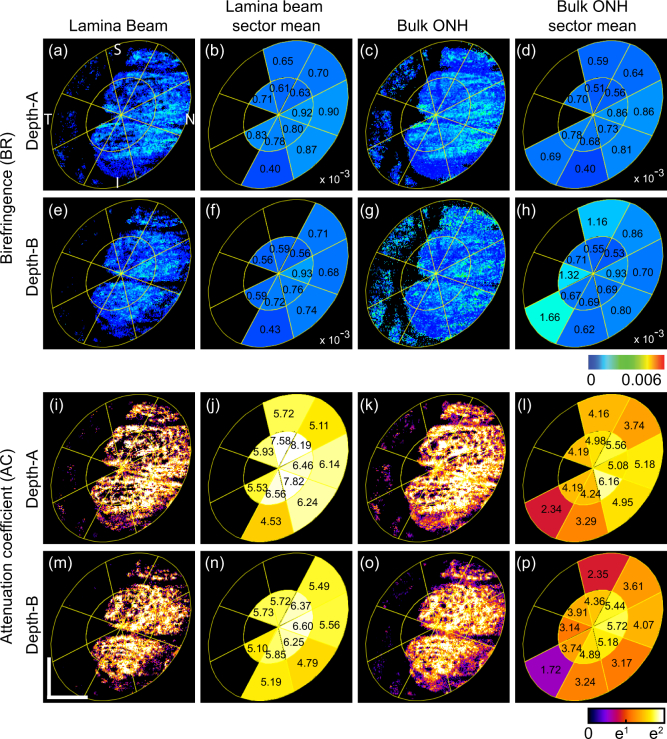

Fig. 9.

En face birefringence maps (first and second rows) and attenuation coefficient maps (third and fourth rows) for the emmetropic case (Subject-3) that corresponds to Fig. 5. The first and the third rows correspond to depth-A, while the second and the fourth rows correspond to depth-B as shown in Fig. 5(a). (a) and (e) are en face lamina beam birefringence, where the non-lamina beam tissues were masked out by using the segmented lamina beam. (b) and (f) are sectorized birefringence maps of the lamina beam. (c) and (g) are bulk ONH birefringence, while (d) and (h) are sectorized birefringence maps of the bulk ONH. (i) and (m) are en face lamina beam attenuation coefficient. (j) and (n) are sectorized attenuation coefficient maps of the lamina beam. (k) and (o) are bulk ONH attenuation coefficient, while (l) and (p) are sectorized attenuation coefficient maps of the bulk ONH. Scale bars indicate 0.5 mm × 0.5 mm.

Fig. 10.

En face birefringence maps (first and second rows) and attenuation coefficient maps (third and fourth rows) for the myopic case (Subject-1) that corresponds to Fig. 6. Subfigure configuration is identical to Fig. 9. Scale bars indicate 0.5 mm × 0.5 mm.

For comparison, the sectorized mean birefringence and attenuation coefficient maps of lamina pore were obtained. The lamina pore region is defined as non-lamina-beam and non-blood vessel pixels within the bulk lamina region. It should be noted that this lamina pore is not identical to the tissue label of the lamina pore described in Section 2.3.2. Quadrant sectors (temporal, superior, nasal, and inferior) were used for simplicity of comparison rather than the 16 sectors.

3. Results

3.1. Tissue segmentations

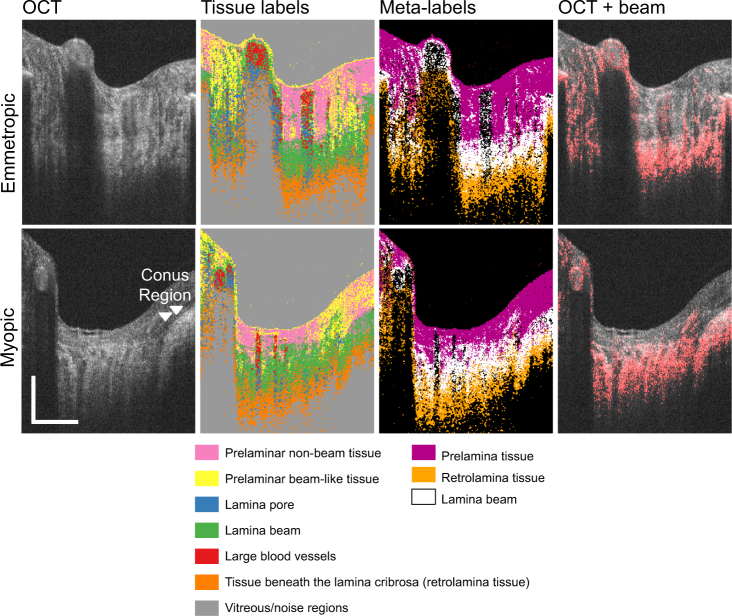

Figure 4 shows examples of ONH tissue classification results as cross-sectional images, where only the regions within the optic disc are shown. The first and the second rows show the cases of emmetropia (left eye of Subject-3) and myopia (left eye of Subject-1), respectively. The first column shows the OCT images. The second column shows the output of the classifier, that is, the tissue label images, where each tissue type (label) is shown in a different color. The third column shows the “meta-label” images, where each meta-label is a combination of classified tissue labels. In the tissue label and meta-label images, the boundary of lamina cribrosa and retrolamina tissues is relatively poorly delineated, whereas the junction between the prelamina tissue and lamina cribrosa shows relatively sharp appearance. This agrees with histological knowledge, that is, lamina-cribrosa-to-retrolamina transition is moderate, whereas that between the prelamina and lamina cribrosa is sharp [47]. The fourth column shows the OCT cross-section, where the lamina beam pixels are highlighted in red. The segmented lamina beam pixels are well co-registered with the lamina beam appearances in the OCT intensity images. This agreement between the segmented lamina beam and OCT intensity is more clearly shown in the en face images in Figs. 5 and 6.

Fig. 4.

Examples of tissue classification results. First and second rows are for an emmetropic case (left eye of Subject-3) and a myopic case (left eye of Subject-1), respectively. Each column, from left to right, represents the images: OCT, tissue labels, meta-labels, and lamina beam pixels (red) overlaid on the OCT. Meta-labels are for prelamina tissue, lamina beam, and retrolamina tissue. Scale bars indicate 0.5 mm × 0.5 mm.

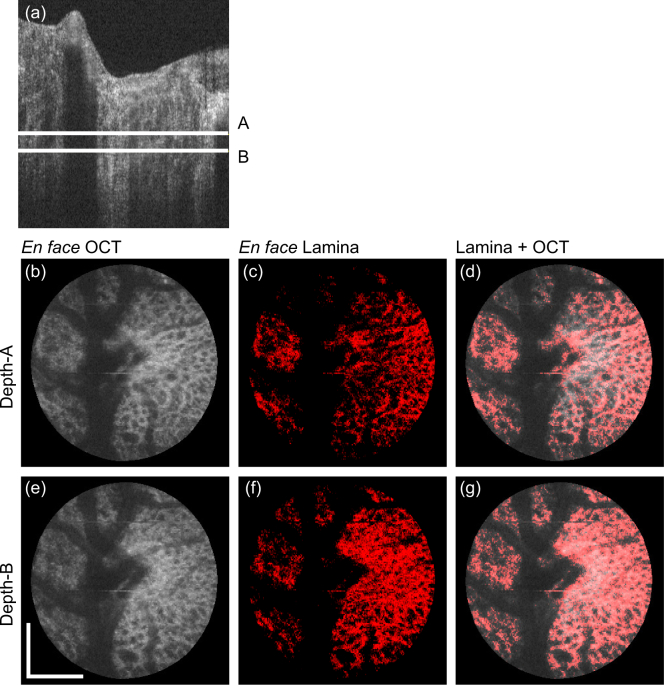

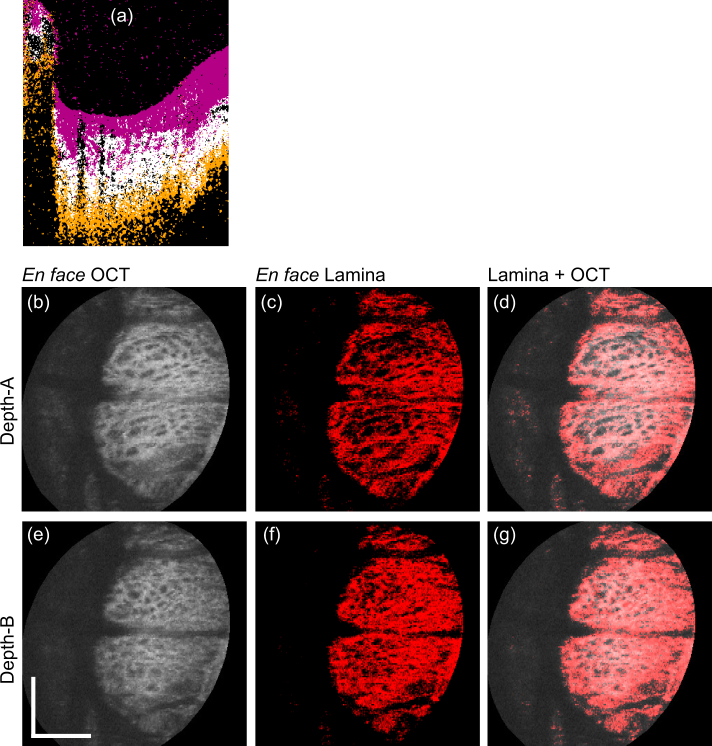

Fig. 5.

En face projection images of the emmetropic case that corresponds to the first row of Fig. 4. (a) Representative B-scan, where two depth positions (A and B) are indicated. (b)–(d) and (e)–(g) are en face slices obtained at the depth-A and depth-B, respectively. (b) and (e) are the OCT intensity, (c) and (f) are the segmented lamina beam, and (d) and (g) are the OCT intensity on which the lamina beam pixels are overlaid in red. Scale bars indicate 0.5 mm × 0.5 mm.

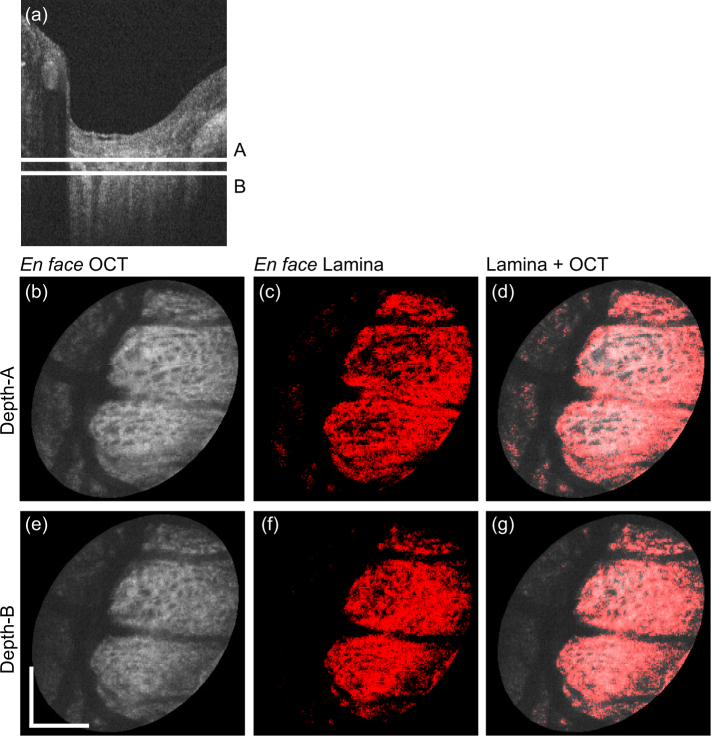

Fig. 6.

En face projection images of the myopic case that corresponds to the second row of Fig. 4. Subfigure configuration is identical to Fig. 5. Scale bars indicate 0.5 mm × 0.5 mm.

All B-scans of the same volumes as in Fig. 4 were processed by the same trained classifier, and en face lamina beam images were created. Figures 5 and 6 show the emmetropic (left eye of Subject-3) and myopic (left eye of Subject-1) cases that correspond to the first and second rows of Fig. 4, respectively. Figures 5(a) and 6(a) are representative cross-sectional OCT images, where two depth positions (A and B) are indicated by white horizontal lines. Figures 5(b)–(d) and 6(b)–(d) are en face slices at depth-A, whereas Figs. 5(e)–(g) and 6(e)–(g) are at depth-B. Figures 5(b), 5(e), 6(b), and 6(e) are the OCT intensity slices, which are depth averages of 5-pixel-depth slabs centered at depths A and B, respectively. Figures 5(c), 5(f), 6(c), and 6(f) are the en face segmented lamina beam images at depths A and B, respectively. These en face images are also depth averages over 5 pixels, where the averaging was performed with lamina beam pixels set to 1.0 and other pixels set to 0.0. Figures 5(d), 5(g), 6(d), and 6(g) are the segmented lamina beam (red) overlaid on the OCT intensity slice. The en face images show a clearer contrast of the meshwork structure of the lamina beam than the cross-sectional images. Particularly in the emmetropic case (Fig. 5), the depth-wise difference of the lamina beam pattern is clearly shown.
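The 5-pixel slab averaging used for the en face images can be sketched as follows. The volume layout `(n_depth, n_y, n_x)`, the integer code for the lamina beam class, and the clipping of the slab at the volume edges are assumptions.

```python
import numpy as np

def en_face_slab(volume, depth_index, thickness=5):
    """Mean over a slab of the given thickness centered at depth_index.

    volume has shape (n_depth, n_y, n_x); the slab is clipped at the edges.
    """
    half = thickness // 2
    start = max(depth_index - half, 0)
    stop = min(depth_index + half + 1, volume.shape[0])
    return volume[start:stop].astype(float).mean(axis=0)

LAMINA_BEAM = 2                                   # hypothetical class code

# Demo label volume with a short lamina beam column at one lateral position.
label_volume = np.zeros((20, 4, 4), dtype=int)
label_volume[8:11, 1, 1] = LAMINA_BEAM

beam_mask = label_volume == LAMINA_BEAM           # lamina beam as 1.0, others 0.0
beam_en_face = en_face_slab(beam_mask, depth_index=9)
```

Each pixel of `beam_en_face` is the fraction of the slab depth classified as lamina beam, so values lie between 0 and 1.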

3.2. Comparison with manual segmentation

The accuracy of the segmentation results was examined by comparing them with the results of manual segmentation. Manual segmentation was performed for a cross-sectional image and two en face sections per subject. The cross-section and en face slices were the same as those shown in Figs. 4, 5, and 6. For the cross-sectional image, an expert manually traced the boundaries among the prelamina, lamina cribrosa, and retrolamina regions by looking at the OCT intensity image. For the en face slices, the lamina pore and noise regions were manually highlighted and then the segmented region was inverted to highlight the lamina beam; that is, the lamina beam region was segmented as non-lamina-pore and non-noise regions. The agreement between manual and automatic segmentation was evaluated using Dice coefficients.
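For reference, the Dice coefficient of two binary masks A and B is 2|A ∩ B| / (|A| + |B|). The sketch below computes it and also illustrates the inversion used for the en face manual segmentation. The masks are toy data, and returning 1.0 for two empty masks is an assumed convention.

```python
import numpy as np

def dice_coefficient(auto_mask, manual_mask):
    """Dice coefficient 2|A and B| / (|A| + |B|) of two binary masks."""
    a = np.asarray(auto_mask, dtype=bool)
    m = np.asarray(manual_mask, dtype=bool)
    total = a.sum() + m.sum()
    if total == 0:
        return 1.0   # assumption: two empty masks agree perfectly
    return 2.0 * np.logical_and(a, m).sum() / total

# Toy en face example: the manual lamina beam is the inverse of pore and noise.
manual_pore = np.array([[1, 0, 0, 0]], dtype=bool)
manual_noise = np.array([[0, 0, 0, 1]], dtype=bool)
manual_beam = ~(manual_pore | manual_noise)       # [[0, 1, 1, 0]]
auto_beam = np.array([[1, 1, 0, 0]], dtype=bool)

dice = dice_coefficient(auto_beam, manual_beam)
```

A value of 1 indicates identical masks and 0 indicates no overlap.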

The computed Dice coefficients of the pre-lamina tissue in the cross-section were 0.55, 0.53, and 0.50 for Subjects-1, 2, and 3, respectively. Those for the retrolamina tissue were 0.45, 0.13, and 0.31 for Subjects-1, 2, and 3, respectively. The Dice coefficients of the lamina beam were 0.79 and 0.77 for Subject-1, 0.78 and 0.79 for Subject-2, and 0.55 and 0.69 for Subject-3. The two Dice coefficients of each subject correspond to Depths A and B, respectively.

For lamina beam tissue, moderate to high Dice coefficients, that is, moderate to high agreement between manual and automatic segmentation, were found. By contrast, the Dice coefficients of pre-lamina and retrolamina tissues were not high. The relatively low Dice coefficients can be partially accounted for by imperfect manual segmentation and a difference in the definition of the tissue regions between manual and automatic segmentation. For example, the lamina pore and lamina beam in the cross-sectional scattering OCT were difficult to delineate manually. Hence, we delineated the lamina-to-pre-lamina and lamina-to-retrolamina interfaces rather than segmenting each lamina beam. This resulted in an evident shortcoming such that the lamina pore regions were not classified as pre-lamina or retrolamina regions using manual segmentation, whereas they were classified as either pre-lamina or retrolamina regions using automatic segmentation. In addition, the boundary between lamina cribrosa and retrolamina tissues is anatomically not sharp [47]. Thus, manual delineation of the lamina-retrolamina boundary is inevitably imperfect. Manual segmentation of the lamina beam in an en face slice is also not fully reliable because of its low intensity contrast and the limited transversal resolution of OCT.

3.3. Effect of correlation between the test and training datasets

In our main result in Section 3.1, we used the contralateral eyes of the same subjects: the right eyes to train the classifier and the left eyes to validate it. Although the training and validation were performed using different eyes, the two eyes of the same subject might be correlated. To evaluate the effect of this correlation, we trained the classifier using the right eyes of two of the three subjects, and tested it on the left and right eyes of the remaining subject. This is thus a leave-one-out validation.
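The splitting scheme described above can be sketched as follows. This only enumerates which eyes would be used for training and testing in each trial; the function name `leave_one_subject_out` and the tuple representation of an eye are hypothetical and are not taken from the study's code.

```python
SUBJECTS = ["Subject-1", "Subject-2", "Subject-3"]
EYES = ["left", "right"]

def leave_one_subject_out(subjects, eyes, train_eye="right"):
    """Yield (training eyes, test eye) pairs.

    Training uses `train_eye` of every subject except the held-out one;
    each eye of the held-out subject forms one test trial.
    """
    for held_out in subjects:
        train = [(s, train_eye) for s in subjects if s != held_out]
        for eye in eyes:
            yield train, (held_out, eye)

trials = list(leave_one_subject_out(SUBJECTS, EYES))
for train, test in trials:
    print("train:", train, "-> test:", test)
```

With three subjects and two eyes per subject, this enumeration gives six trials, and the held-out subject never contributes to the training set of its own trial.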

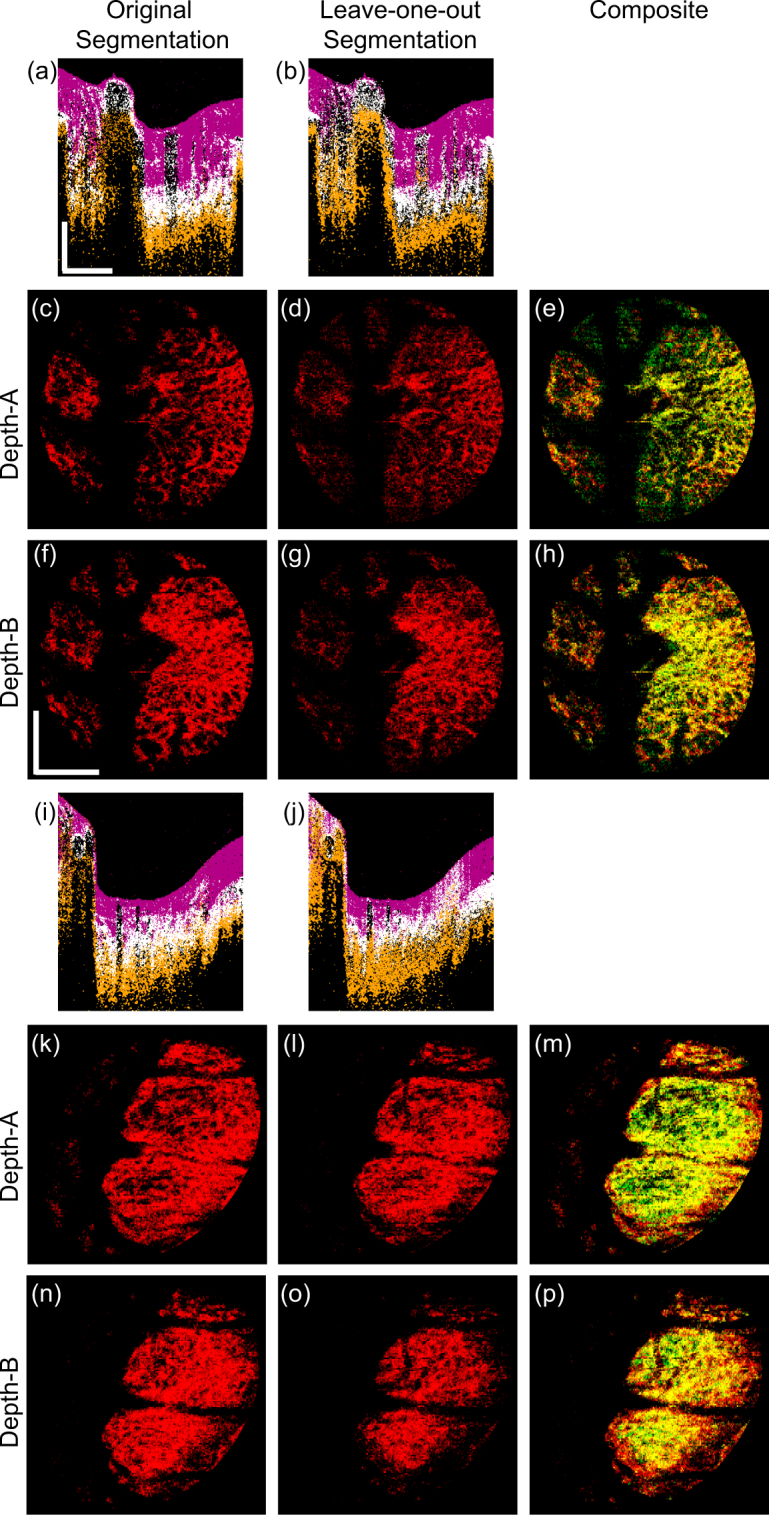

Because all six trials demonstrated similar results, two representatives are shown in Fig. 7. The upper half of the figure shows images of the left eye of Subject-3 (emmetropia), whereas the lower half shows the left eye of Subject-1 (myopia). The first column shows the original segmentation results, which are identical to Figs. 4, 5(c), 5(f), 6(c), and 6(f). The second column shows the results that correspond to the first column but were obtained using leave-one-out validation. The third column shows the red-green composite of the en face segmentation results, where the original and leave-one-out segmentation results are in red and green, respectively. Pixels where the two segmentations overlap appear in yellow. The first and fourth rows show cross-sectional meta-label images, the second and fifth rows show the en face lamina beam at Depth-A, and the third and sixth rows show that at Depth-B. The depths are indicated in Figs. 5 and 6.

Fig. 7.

A comparison of the original and leave-one-out segmentations. The upper half of the figure shows the images of the left eye of Subject-3 (emmetropia), and the lower half shows the left eye of Subject-1 (myopia). The first and second columns show the original and leave-one-out segmentations. The third column shows the red-green composite of the first and second column images, where the original and leave-one-out segmentation results are in red and green, respectively. The first and fourth rows show the cross-sectional meta-label images, the second and fifth rows show the en face lamina beam at Depth-A, and the third and sixth rows show that at Depth-B. Scale bars indicate 0.5 mm × 0.5 mm.

Although some minor discrepancies were found in the low intensity region and periphery, the original and leave-one-out segmentations of the lamina beam agreed reasonably well.
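A red-green composite of two binary segmentations, as used for the comparison above, can be sketched as follows. The function name `red_green_composite` is hypothetical; the sketch only assumes that both segmentations are available as binary masks of the same shape.

```python
import numpy as np

def red_green_composite(original, leave_one_out):
    """Return an RGB image with `original` in red and `leave_one_out` in green.

    Pixels present in both masks have red and green set, so they appear yellow.
    """
    a = np.asarray(original, dtype=bool)
    b = np.asarray(leave_one_out, dtype=bool)
    if a.shape != b.shape:
        raise ValueError("masks must have the same shape")
    rgb = np.zeros(a.shape + (3,), dtype=np.uint8)
    rgb[a, 0] = 255  # red channel: original segmentation
    rgb[b, 1] = 255  # green channel: leave-one-out segmentation
    return rgb

# Toy 2 x 2 masks (hypothetical data).
original_mask = np.array([[1, 1], [0, 0]])
loo_mask = np.array([[1, 0], [1, 0]])
composite = red_green_composite(original_mask, loo_mask)
print(composite[0, 0], composite[0, 1], composite[1, 0], composite[1, 1])
```

Red-only and green-only pixels then mark the discrepancies between the two segmentations.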

3.4. Long-term robustness of the classifier

To test the long-term robustness of the trained classifier, the classifier trained in Section 3.1 for Figs. 5 and 6 was applied to another dataset of Subject-1 that was acquired 6 months after the acquisition of the training dataset.

The results are summarized in Fig. 8. Figure 8(a) shows the cross-sectional meta-label image, and the alignment of the en face images [Figs. 8(b)–(g)] is the same as that of the corresponding original segmentation results at the baseline time point [Fig. 6]. Comparing the 6-month result with the baseline result shows a high consistency between the segmentation results. This demonstrates the reasonable long-term robustness of our method.

Fig. 8.

Segmentation results of the myopic eye (Subject-1). Segmentation was performed using the same trained classifier as in Fig. 6; however, the data were acquired 6 months after the training dataset was acquired. (a) represents the cross-sectional image of the meta-labels. The alignment of (b)–(g) is identical to that of Fig. 6. Scale bars indicate 0.5 mm × 0.5 mm.

3.5. Lamina beam birefringence and attenuation coefficient analysis

The lamina beam and lamina pore are two distinct tissues. The lamina beam consists of collagen and its ultrastructure supports the mechanical characteristic of the lamina cribrosa. Hence, the birefringence and scattering characteristic analysis of only lamina beam regions can be more sensitive to pathologic tissue alteration than the analysis of the entire lamina cribrosa. The lamina beam segmentation described in this paper allows us to specifically assess the optical properties of the lamina beam.

Figures 9 and 10 show the lamina beam birefringence and attenuation coefficient maps of emmetropic (left eye of Subject-3) and myopic (left eye of Subject-1) cases, respectively. The first two rows are birefringence maps, while the third and fourth rows are attenuation coefficient maps. The columns of the figures show, from first to last, en face slices of lamina beam birefringence/attenuation coefficient (first column), sectorized mean birefringence/attenuation coefficient of lamina beam (second column), bulk lamina birefringence/attenuation coefficient (third column), and the sectorized mean birefringence/attenuation coefficient of bulk ONH (fourth column). The first and third rows of both figures correspond to Depth-A of Figs. 5 and 6, whereas the second and fourth rows correspond to Depth-B. The sector borders are overlaid on the maps.

In the mean lamina beam birefringence maps [Figs. 9(b), 9(f), 10(b), and 10(f)], the inter-sector difference is clear. At Depth-A in both cases, the beam birefringence is generally higher than the bulk ONH birefringence. At Depth-B, the mean birefringence values of the beam and bulk ONH are more similar than at Depth-A. This could be explained by the higher density of the beam at Depth-B than at Depth-A.

In the attenuation maps, the sectorized mean attenuation is markedly higher in the lamina beam attenuation maps than in the bulk ONH attenuation maps at both depths and in both cases. This can also be attributed to the contribution of low-scattering non-beam tissue to the bulk ONH attenuation maps.

Because the beam birefringence and attenuation coefficient maps reflect only the beam property, they would be more sensitive to tissue alteration of the lamina cribrosa. Thus, they could be useful for the detection of early pathologic alteration.
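The sectorized mean of a contrast restricted to a segmentation mask can be sketched as follows. This is an illustrative sketch only: the function name `sector_means`, the choice of four 90-degree sectors centred on the image axes, and the generic sector labels are assumptions. The mapping of image directions to temporal, superior, nasal, and inferior depends on the eye laterality and scan orientation and is not specified here.

```python
import numpy as np

def sector_means(contrast, mask, center, labels=("right", "up", "left", "down")):
    """Mean of `contrast` over masked pixels in four 90-degree sectors.

    `center` is the (row, column) of the sector origin. Sectors are centred on
    the rightward, upward, leftward and downward image directions.
    """
    contrast = np.asarray(contrast, dtype=float)
    mask = np.asarray(mask, dtype=bool)
    rows, cols = np.indices(contrast.shape)
    dy = center[0] - rows  # positive values point up in the image
    dx = cols - center[1]
    angle = np.degrees(np.arctan2(dy, dx)) % 360.0
    sector = ((angle + 45.0) // 90.0).astype(int) % 4
    means = {}
    for k, name in enumerate(labels):
        selected = mask & (sector == k)
        means[name] = float(contrast[selected].mean()) if selected.any() else float("nan")
    return means

# Toy 5 x 5 example (hypothetical values): one masked pixel per sector.
toy_contrast = np.zeros((5, 5))
toy_mask = np.zeros((5, 5), dtype=bool)
for (r, c), value in {(2, 4): 4.0, (0, 2): 2.0, (2, 0): 6.0, (4, 2): 8.0}.items():
    toy_contrast[r, c] = value
    toy_mask[r, c] = True

toy_means = sector_means(toy_contrast, toy_mask, center=(2, 2))
print(toy_means)
```

Passing the lamina beam mask gives beam-specific sector means, whereas passing a mask of the whole ONH region gives the bulk values; only the mask differs between the two analyses.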

The mean birefringence and attenuation coefficients of the lamina beam and lamina pore regions in the quadrant sectors are summarized in Table 2, where the upper values are for the lamina beam and the lower values in brackets are for the lamina pore. For all sectors at both depths, the mean attenuation coefficients of the lamina beam were found to be higher than those of the lamina pore. By contrast, the mean birefringence of the lamina beam was found to be higher than that of the lamina pore for only six out of 16 combinations of sectors and depths. This contradicts our initial expectation that the lamina beam would generally have higher birefringence than the lamina pore. However, it is consistent with the fact that birefringence had relatively low feature importance for the classification, as discussed later in Section 4.2.

Table 2.

Sector-wise mean values of birefringence (BR, × 10−3) and the attenuation coefficient (AC, mm−1). The values outside and inside the brackets represent the lamina beam and non-lamina beam, respectively. Values in bold indicate the sectors in which the lamina beam value is higher than the non-lamina-beam value.

| Subject | Depth | BR Temporal | BR Superior | BR Nasal | BR Inferior | AC Temporal | AC Superior | AC Nasal | AC Inferior |
|---|---|---|---|---|---|---|---|---|---|
| Subject-3 (emmetropia) | Depth-A | 0.40 | 0.38 | **0.63** | 0.33 | **4.00** | **3.29** | **4.36** | **3.98** |
| | | (0.55) | (0.46) | (0.47) | (0.35) | (1.51) | (1.29) | (1.43) | (1.28) |
| | Depth-B | 0.63 | 0.56 | **0.71** | **0.45** | **4.89** | **4.56** | **5.66** | **4.95** |
| | | (0.87) | (0.59) | (0.63) | (0.40) | (1.95) | (1.86) | (2.16) | (1.89) |
| Subject-1 (myopia) | Depth-A | 0.73 | **0.66** | **0.87** | **0.61** | **5.14** | **5.97** | **6.52** | **5.14** |
| | | (0.95) | (0.53) | (0.71) | (0.55) | (2.05) | (2.55) | (2.62) | (2.03) |
| | Depth-B | 0.63 | 0.68 | 0.77 | 0.57 | **6.00** | **5.62** | **5.68** | **5.51** |
| | | (1.88) | (0.94) | (0.78) | (0.99) | (1.72) | (2.08) | (1.98) | (1.78) |

4. Discussions

4.1. Rationale for the XOR feature

The bit-wise XOR of OCT and AC (OCT-⊕-AC) was used as a feature in the present tissue classification method. This feature was introduced as one of the extended features and was retained in the reduced feature set; it was therefore used for tissue classification by the random forest classifier.
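As an illustration of what a bit-wise XOR of two contrast images looks like in code, the following sketch rescales each image to 8-bit integers and applies `numpy.bitwise_xor`. The 8-bit linear rescaling and the helper names `to_uint8` and `xor_feature` are assumptions made for this sketch only; the quantization actually applied to the OCT and AC images is not restated in this section.

```python
import numpy as np

def to_uint8(img):
    """Linearly rescale an image to the 0-255 range (assumed quantization)."""
    img = np.asarray(img, dtype=float)
    lo, hi = img.min(), img.max()
    if hi == lo:
        return np.zeros(img.shape, dtype=np.uint8)
    return np.round(255.0 * (img - lo) / (hi - lo)).astype(np.uint8)

def xor_feature(oct_img, ac_img):
    """Bit-wise XOR of the two quantized contrast images."""
    return np.bitwise_xor(to_uint8(oct_img), to_uint8(ac_img))

# Toy 2 x 2 images (hypothetical values).
toy_oct = np.array([[0.0, 1.0], [2.0, 3.0]])
toy_ac = np.array([[3.0, 2.0], [1.0, 0.0]])
toy_xor = xor_feature(toy_oct, toy_ac)
print(toy_xor)
```

In this toy case the two quantized images are bit-wise complements of each other, so every output pixel is 255, whereas the XOR of an image with itself is zero everywhere.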

Although the physical interpretation of this feature is still an open issue, it was found that this feature positively affects the lamina beam segmentation. This positive effect was shown by the following two findings.

The first finding is that OCT-⊕-AC showed the fifth-highest feature importance among the twelve reduced features. Here, the feature importance was the Gini importance [48], obtained as a built-in attribute of the scikit-learn implementation of the random forest classifier.

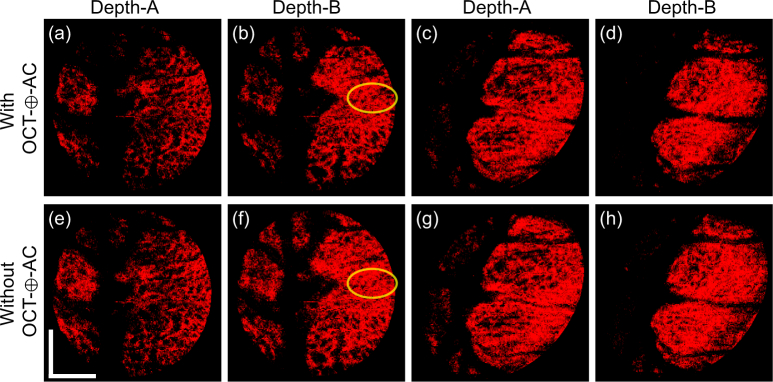

The second finding comes from the comparison of the lamina beam segmentation results obtained with and without the OCT-⊕-AC feature (Fig. 11). The upper row [Figs. 11(a)–(d)] shows the lamina beam segmented with the OCT-⊕-AC feature; these images are identical to Figs. 5(c), 5(f), 6(c), and 6(f), respectively. The lower row [Figs. 11(e)–(h)] shows the corresponding segmentation results obtained without the OCT-⊕-AC feature. The images were obtained at two depths [Depths A and B indicated in Figs. 5(a) and 6(a)]. The left four and right four images were obtained from the emmetropic subject (Subject-3) and the myopic subject (Subject-1), respectively. The segmentation results with the OCT-⊕-AC feature show a clearer structure of lamina pores than those without it, as exemplified by the yellow circles. These two findings support the inclusion of this feature.

Fig. 11.

The comparison of lamina beam segmentation results with and without the OCT-⊕-AC feature. (a)–(d) show the lamina beam segmented with the OCT-⊕-AC feature, while (e)–(h) show that segmented without it. The images were obtained at two depths as indicated in the figure. The left four images (a), (b), (e), and (f) are from the emmetropic subject (Subject-3) and the right four images (c), (d), (g), and (h) are from the myopic subject (Subject-1). (a)–(d) are identical to Figs. 5(c), 5(f), 6(c), and 6(f), respectively.

There are likely to be other synthesized features that lack a straightforward physical interpretation but are still useful for tissue classification. Standard machine learning techniques would enable the use of such features. For example, a neural-network-based auto-encoder can generate new synthesized features without regard to their physical interpretation (see also Section 4.4). A support vector machine classifier also implicitly generates high-order features through its kernel method.

4.2. Features and classification

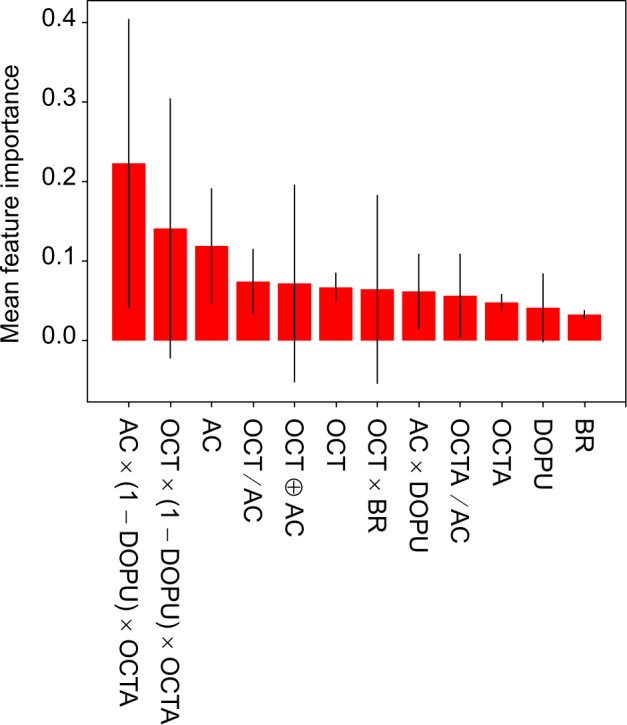

The trained random forest algorithm provided the importance metric (Gini importance [48]) for each feature. Figure 12 shows the mean feature importance and its standard deviation over the 10 decision trees used in our trained random forest classifier. AC × (1-DOPU) × OCTA and OCT × (1-DOPU) × OCTA were the two features with the highest mean feature importance. It can also be seen that AC and the features associated with AC seem to play a major role in the classification, followed by OCT, OCTA, DOPU and BR, in that order. This is consistent with the results shown in Section 3.5, where the mean AC values showed an evident difference between lamina and non-lamina tissues, while the difference was not clear in BR.

Fig. 12.

The mean and standard deviation of the Gini importance for each feature. The mean was computed over the 10 decision trees in the trained random forest classifier. The heights of the red bars indicate the mean importance of each feature, and the black lines indicate the standard deviation.
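The per-feature mean and standard deviation of the Gini importance over the trees of a forest can be obtained from scikit-learn as sketched below. The data are synthetic and the variable names are hypothetical; only the use of 10 trees follows the text. `estimators_` and `feature_importances_` are attributes of the fitted scikit-learn `RandomForestClassifier` and of its individual trees.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in data: features 0 and 1 carry the label, 2 and 3 are noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 4))
y = (X[:, 0] + 0.5 * X[:, 1] > 0).astype(int)

forest = RandomForestClassifier(n_estimators=10, random_state=0).fit(X, y)

# Gini importance of every feature in every tree: shape (n_trees, n_features).
per_tree = np.array([tree.feature_importances_ for tree in forest.estimators_])
mean_importance = per_tree.mean(axis=0)
std_importance = per_tree.std(axis=0)

for j, (m, s) in enumerate(zip(mean_importance, std_importance)):
    print(f"feature {j}: mean = {m:.3f}, std = {s:.3f}")
```

The mean over the trees coincides with the forest-level `feature_importances_` attribute, so the standard deviation is the only quantity that needs the per-tree values.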

Azuma et al. recently demonstrated the generation of features by combining multiple contrasts of JM-OCT [25]; these features are sensitive to specific tissues. The feature specific to RPE was AC × (1 − DOPU) × (1 − OCTAb), where OCTAb is a binarized OCTA. It is noteworthy that this RPE feature is similar to our most important feature and can be generated by combining our 1st, 3rd and 8th most important features, although the OCTA used in our study was not binarized.

4.3. Potential clinical applications

Glaucoma and myopia are known to be associated with ONH alterations [49–51]. Particularly, glaucoma is believed to show ultrastructural alterations in its very early stage, even before the thinning of retinal nerve fiber layer [52].

Our method enables the specific investigation of only the lamina beam, as demonstrated in Section 3.5. The lamina beam segmentation result can be used not only for birefringence analysis but also to analyze other optical properties. For example, the attenuation coefficient is also expected to be an early biomarker of glaucoma [36,53]. The attenuation coefficient assessment specific to the lamina beam may therefore also be useful for glaucoma detection.

4.4. Potential usage of other machine learning algorithms

In the current method, we first expanded the feature space from Mp (= 5) to Mext (= 16) by combining the original optical features. Then, it was reduced by checking the correlation among the extended features. Although this empirical feature engineering was satisfactory for our specific purpose, a non-empirical feature engineering process would be preferable for generalizing our tissue classification framework to other types of tissues. Neural network-based auto-encoders can potentially be used for such non-empirical feature engineering.
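One simple way to reduce a feature set by checking correlation is sketched below: features are scanned in order, and a feature is dropped when its absolute Pearson correlation with an already retained feature exceeds a threshold. This greedy rule, the threshold of 0.9, and the function name `drop_correlated` are assumptions made for illustration; the exact criterion used for the reduction is not restated here.

```python
import numpy as np

def drop_correlated(features, names, threshold=0.9):
    """Greedily keep features whose |Pearson r| with all kept features
    does not exceed `threshold` (assumed rule, for illustration only)."""
    features = np.asarray(features, dtype=float)
    corr = np.abs(np.corrcoef(features, rowvar=False))
    kept = []
    for j in range(features.shape[1]):
        if all(corr[j, k] <= threshold for k in kept):
            kept.append(j)
    return [names[j] for j in kept]

# Synthetic example: "b" is almost a scaled copy of "a"; "c" is independent.
rng = np.random.default_rng(0)
a = rng.normal(size=200)
b = 2.0 * a + 0.01 * rng.normal(size=200)
c = rng.normal(size=200)
toy_features = np.column_stack([a, b, c])

kept_names = drop_correlated(toy_features, ["a", "b", "c"])
print(kept_names)
```

Because the result depends on the scan order and on the threshold, such a rule remains empirical, which is the limitation noted above.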

In the current method, we used a random forest classifier. However, other classifiers, such as support vector machines, can also be used. Although the current random forest classifier was satisfactory for our specific purpose, there is still scope for optimization, particularly for tailoring the method to other tissue types.

4.5. Processing time

The processing time was evaluated as the total time of the entire processing, including the training and testing phases. Broadly, it can be divided into three stages: processing of the JM-OCT contrasts for feature engineering, generation of the training dataset, and prediction of tissue labels for the test data.

The first stage was data preparation, including JM-OCT image construction, general post-processing, and feature engineering. The generation of five primary contrasts and feature engineering took approximately 35 minutes for a single volume.

The second stage was the training stage, which involved the unsupervised learning stage of k-means clustering and the manual process of assigning the clusters to pre-assigned tissue labels. k-means clustering over three B-scans of 512 × 500 pixels for three volumes took approximately 11.4 minutes. The manual cluster assignment took another 10 minutes, and training the random forest classifier with this dataset took 17.4 seconds. In total, approximately 22 minutes was required for the generation of the training dataset and the training of the random forest classifier.

The third stage was the supervised classification of each volume dataset. It took approximately 25 seconds to predict the tissue labels over the entire volume of test dataset of 512 × 500 × 256 pixels.
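The second and third stages can be sketched with scikit-learn as follows. This is a toy illustration on synthetic two-dimensional features, not the study's implementation: the number of clusters, the blob data, and the automatic nearest-centre rule that stands in for the manual cluster assignment are all assumptions.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(0)

# Synthetic stand-in for per-pixel feature vectors: three separated blobs.
centers = np.array([[0.0, 0.0], [5.0, 5.0], [0.0, 5.0]])
train_pixels = np.vstack([c + 0.3 * rng.normal(size=(200, 2)) for c in centers])

# Stage 2a: unsupervised k-means clustering of the training pixels.
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0).fit(train_pixels)

# Stage 2b: assignment of clusters to tissue labels. This step is manual in
# the text; here it is mimicked by matching each centroid to a known centre.
tissue_names = ["prelamina", "lamina beam", "retrolamina"]
cluster_to_tissue = {}
for cluster_id, centroid in enumerate(kmeans.cluster_centers_):
    nearest = int(np.argmin(np.linalg.norm(centers - centroid, axis=1)))
    cluster_to_tissue[cluster_id] = tissue_names[nearest]
train_labels = np.array([cluster_to_tissue[c] for c in kmeans.labels_])

# Stage 2c: supervised training on the cluster-derived labels.
classifier = RandomForestClassifier(n_estimators=10, random_state=0)
classifier.fit(train_pixels, train_labels)

# Stage 3: pixel-by-pixel prediction on unseen feature vectors.
test_pixels = np.array([[0.1, -0.1], [4.9, 5.2], [0.2, 4.8]])
predicted = classifier.predict(test_pixels)
print(predicted)
```

Once trained, the classifier labels each feature vector independently, so prediction over a whole volume amounts to one call on all pixel feature vectors of that volume.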

The implementation was run on a Windows 10 PC with Intel (R) Core (TM) i9-7900X CPU (3.30GHz) and 128 GB RAM.

4.6. Limitations of the present method

Although reasonable segmentation results were demonstrated for a single myopic case and a single emmetropic case, the present method still has some limitations.

Although the method was described as pixel-by-pixel classification, some of the JM-OCT contrasts were computed using spatial kernels. For example, OCTA and DOPU were computed using a spatial kernel of 3 × 3 pixels (lateral × axial; 63 µm × 12 µm). BR was computed from two pixels with 6-pixel (24-µm) depth separation, and then a MAP birefringence estimator [35] was applied with a spatial kernel of 2 × 2 pixels (lateral × axial; 42 µm × 12 µm). Thus, the resolution of the segmentation was limited by the sizes of the kernels, and the kernels may also have reduced the contrasts of the OCTA, DOPU, and BR images.

In the present study, the size and variability of the training and test datasets were limited. The versatility of the segmentation method should be evaluated with larger and more varied datasets.

The current algorithm still exhibits some evident misclassification. For example, as seen in the meta-labels of Fig. 4, the walls of some large blood vessels are misclassified as lamina beam. In addition, most of the pixels in the shadows of the large blood vessels are misclassified as retrolamina pixels. The segmentation results around the large blood vessels should therefore be interpreted with care. Although our current algorithm was designed not to use morphological information, such information could additionally be used in the future to mitigate this misclassification.

The advantage of the present method is that it does not require a manually generated training dataset. However, the absence of manual segmentation results as a reference standard made accurate validation of the automatic segmentation difficult. As discussed in Section 3.2, the manual segmentation of some tissues cannot be perfect. Thus, a proper strategy to accurately evaluate the segmentation results is needed.

The segmented lamina beam was used to analyze the birefringence and scattering (attenuation coefficient) property of the lamina beam in Section 3.5. It should be noted that the obtained birefringence and attenuation coefficient can be affected by the segmentation results, whereas the segmentation is affected by the birefringence and attenuation coefficient. This circular dependency should be carefully considered when interpreting the results.

5. Conclusion

A general framework for nearly unsupervised pixel-by-pixel tissue classification using multiple contrasts obtained from JM-OCT was presented. The framework was specifically customized to ONH tissue segmentation. The segmentation of prelamina, lamina cribrosa (lamina beam), and retrolamina tissues was successfully demonstrated. As a potential clinical application, lamina beam birefringence maps and attenuation coefficient maps were generated using the segmentation results and the corresponding JM-OCT contrasts. This may enable the fine assessment of lamina properties and may be used for the detection of early pathologic alterations. This JM-OCT-based tissue classification framework is versatile and could be used for other types of tissues.

It should be noted that the present algorithm is a proof of principle of semi-supervised segmentation of optic nerve head tissues using multiple contrasts of JM-OCT. Although reasonable segmentation results were demonstrated, they were not perfect. Future work must include the improvement of both feature engineering and the classification method, introduction of sophisticated pre- and post-processing steps, and a more quantitative evaluation of the sensitivity and specificity of the segmentation.

Acknowledgment

We gratefully acknowledge technical support from Yuta Ueno from the University of Tsukuba and research administrative work of Tomomi Nagasaka from the University of Tsukuba.

Funding

Japan Society for the Promotion of Science (JSPS, 15K13371); Japanese Ministry of Education, Culture, Sports, Science and Technology (MEXT) through a contract of the Regional Innovation Ecosystem Development Program.

Disclosures

DK, YJH: Tomey Corp. (F), Nidek (F), Kao (F); YY, SM: Tomey Corp. (F, P), Nidek (F), Kao (F). YJH is currently employed by Koh Young Technology.

References and links

- 1. Sigal I. A., Wang B., Strouthidis N. G., Akagi T., Girard M. J. A., “Recent advances in OCT imaging of the lamina cribrosa,” Br. J. Ophthalmol. 98, ii34–ii39 (2014). 10.1136/bjophthalmol-2013-304751

- 2. Mari J.-M., Aung T., Cheng C.-Y., Strouthidis N. G., Girard M. J. A., “A digital staining algorithm for optical coherence tomography images of the optic nerve head,” Transl. Vis. Sci. Technol. 6, 1–12 (2017). 10.1167/tvst.6.1.8

- 3. Kennedy B. F., Kennedy K. M., Sampson D. D., “A Review of Optical Coherence Elastography: Fundamentals, Techniques and Prospects,” IEEE J. Sel. Topics Quantum Electron. 20, 272–288 (2014). 10.1109/JSTQE.2013.2291445

- 4. Larin K. V., Sampson D. D., “Optical coherence elastography - OCT at work in tissue biomechanics [Invited],” Biomed. Opt. Express 8, 1172–1202 (2017). 10.1364/BOE.8.001172

- 5. Nadler Z., Wang B., Wollstein G., Nevins J. E., Ishikawa H., Kagemann L., Sigal I. A., Ferguson R. D., Hammer D. X., Grulkowski I., Liu J. J., Kraus M. F., Lu C. D., Hornegger J., Fujimoto J. G., Schuman J. S., “Automated lamina cribrosa microstructural segmentation in optical coherence tomography scans of healthy and glaucomatous eyes,” Biomed. Opt. Express 4, 2596–2608 (2013). 10.1364/BOE.4.002596

- 6. Nadler Z., Wang B., Wollstein G., Nevins J. E., Ishikawa H., Bilonick R., Kagemann L., Sigal I. A., Ferguson R. D., Patel A., Hammer D. X., Schuman J. S., “Repeatability of in vivo 3d lamina cribrosa microarchitecture using adaptive optics spectral domain optical coherence tomography,” Biomed. Opt. Express 5, 1114–1123 (2014). 10.1364/BOE.5.001114

- 7. Wang B., Nevins J. E., Nadler Z., Wollstein G., Ishikawa H., Bilonick R. A., Kagemann L., Sigal I. A., Grulkowski I., Liu J. J., Kraus M., Lu C. D., Hornegger J., Fujimoto J. G., Schuman J. S., “Reproducibility of In-Vivo OCT Measured Three-Dimensional Human Lamina Cribrosa Microarchitecture,” PLoS ONE 9, e95526 (2014). 10.1371/journal.pone.0095526

- 8. Baumann B., Choi W., Potsaid B., Huang D., Duker J. S., Fujimoto J. G., “Swept source / Fourier domain polarization sensitive optical coherence tomography with a passive polarization delay unit,” Opt. Express 20, 10218–10230 (2012). 10.1364/OE.20.010229

- 9. Lim Y., Hong Y.-J., Duan L., Yamanari M., Yasuno Y., “Passive component based multifunctional Jones matrix swept source optical coherence tomography for Doppler and polarization imaging,” Opt. Lett. 37, 1958–1960 (2012). 10.1364/OL.37.001958

- 10. Ju M. J., Hong Y.-J., Makita S., Lim Y., Kurokawa K., Duan L., Miura M., Tang S., Yasuno Y., “Advanced multi-contrast Jones matrix optical coherence tomography for Doppler and polarization sensitive imaging,” Opt. Express 21, 19412–19436 (2013). 10.1364/OE.21.019412

- 11. Sugiyama S., Hong Y.-J., Kasaragod D., Makita S., Uematsu S., Ikuno Y., Miura M., Yasuno Y., “Birefringence imaging of posterior eye by multi-functional Jones matrix optical coherence tomography,” Biomed. Opt. Express 6, 4951–4974 (2015). 10.1364/BOE.6.004951

- 12. Makita S., Kurokawa K., Hong Y.-J., Miura M., Yasuno Y., “Noise-immune complex correlation for optical coherence angiography based on standard and Jones matrix optical coherence tomography,” Biomed. Opt. Express 7, 1525–1548 (2016). 10.1364/BOE.7.001525

- 13. Makita S., Hong Y.-J., Miura M., Yasuno Y., “Degree of polarization uniformity with high noise immunity using polarization-sensitive optical coherence tomography,” Opt. Lett. 39, 6783–6786 (2014). 10.1364/OL.39.006783

- 14. Sakai S., Yamanari M., Lim Y., Nakagawa N., Yasuno Y., “In vivo evaluation of human skin anisotropy by polarization-sensitive optical coherence tomography,” Biomed. Opt. Express 2, 2623–2631 (2011). 10.1364/BOE.2.002623

- 15. Yamanari M., Ishii K., Fukuda S., Lim Y., Duan L., Makita S., Miura M., Oshika T., Yasuno Y., “Optical rheology of porcine sclera by birefringence imaging,” PLoS ONE 7, e44026 (2012). 10.1371/journal.pone.0044026

- 16. Nagase S., Yamanari M., Tanaka R., Yasui T., Miura M., Iwasaki T., Goto H., Yasuno Y., “Anisotropic alteration of scleral birefringence to uniaxial mechanical strain,” PLoS ONE 8, e58716 (2013). 10.1371/journal.pone.0058716

- 17. Yamanari M., Nagase S., Fukuda S., Ishii K., Tanaka R., Yasui T., Oshika T., Miura M., Yasuno Y., “Scleral birefringence as measured by polarization-sensitive optical coherence tomography and ocular biometric parameters of human eyes in vivo,” Biomed. Opt. Express 5, 1391–1402 (2014). 10.1364/BOE.5.001391

- 18. Jan N.-J., Grimm J. L., Tran H., Lathrop K. L., Wollstein G., Bilonick R. A., Ishikawa H., Kagemann L., Schuman J. S., Sigal I. A., “Polarization microscopy for characterizing fiber orientation of ocular tissues,” Biomed. Opt. Express 6, 4705–4718 (2015). 10.1364/BOE.6.004705

- 19. Sigal I. A., Flanagan J. G., Tertinegg I., Ethier C. R., “Modeling individual-specific human optic nerve head biomechanics. Part I: IOP-induced deformations and influence of geometry,” Biomech. Model. Mechanobiol. 8, 85–98 (2009). 10.1007/s10237-008-0120-7

- 20. Voorhees A. P., Jan N.-J., Sigal I. A., “Effects of collagen microstructure and material properties on the deformation of the neural tissues of the lamina cribrosa,” Acta Biomater. 58, 278–290 (2017). 10.1016/j.actbio.2017.05.042

- 21. Miyazawa A., Yamanari M., Makita S., Miura M., Kawana K., Iwaya K., Goto H., Yasuno Y., “Tissue discrimination in anterior eye using three optical parameters obtained by polarization sensitive optical coherence tomography,” Opt. Express 17, 17426–17440 (2009). 10.1364/OE.17.017426

- 22. Götzinger E., Pircher M., Geitzenauer W., Ahlers C., Baumann B., Michels S., Schmidt-Erfurth U., Hitzenberger C. K., “Retinal pigment epithelium segmentation by polarization sensitive optical coherence tomography,” Opt. Express 16, 16410–16422 (2008). 10.1364/OE.16.016410

- 23. Baumann B., Götzinger E., Pircher M., Sattmann H., Schütze C., Schlanitz F., Ahlers C., Schmidt-Erfurth U., Hitzenberger C. K., “Segmentation and quantification of retinal lesions in age-related macular degeneration using polarization-sensitive optical coherence tomography,” J. Biomed. Opt. 15, 061704 (2010). 10.1117/1.3499420

- 24. Sugiyama S., Hong Y.-J., Kasaragod D., Makita S., Miura M., Ikuno Y., Yasuno Y., “Quantitative polarization and flow evaluation of choroid and sclera by multifunctional Jones matrix optical coherence tomography,” Proc. SPIE 9693, 96930M (2016).

- 25. Azuma S., Makita S., Miyazawa A., Ikuno Y., Miura M., Yasuno Y., “Pixel-wise segmentation of severely pathologic retinal pigment epithelium and choroidal stroma using multi-contrast Jones matrix optical coherence tomography,” Biomed. Opt. Express 9, 2955–2973 (2018). 10.1364/BOE.9.002955

- 26. Duan L., Yamanari M., Yasuno Y., “Automated phase retardation oriented segmentation of chorio-scleral interface by polarization sensitive optical coherence tomography,” Opt. Express 20, 3353–3366 (2012). 10.1364/OE.20.003353

- 27. Torzicky T., Pircher M., Zotter S., Bonesi M., Götzinger E., Hitzenberger C. K., “Automated measurement of choroidal thickness in the human eye by polarization sensitive optical coherence tomography,” Opt. Express 20, 7564–7574 (2012). 10.1364/OE.20.007564

- 28. Miyazawa A., Hong Y.-J., Makita S., Kasaragod D., Yasuno Y., “Generation and optimization of superpixels as image processing kernels for Jones matrix optical coherence tomography,” Biomed. Opt. Express 8, 4396–4418 (2017). 10.1364/BOE.8.004396

- 29. Yamanari M., Tsuda S., Kokubun T., Shiga Y., Omodaka K., Yokoyama Y., Himori N., Ryu M., Kunimatsu-Sanuki S., Takahashi H., Maruyama K., Kunikata H., Nakazawa T., “Fiber-based polarization-sensitive OCT for birefringence imaging of the anterior eye segment,” Biomed. Opt. Express 6, 369–389 (2015). 10.1364/BOE.6.000369

- 30. Kasaragod D., Fukuda S., Ueno Y., Hoshi S., Oshika T., Yasuno Y., “Objective evaluation of functionality of filtering bleb based on polarization-sensitive optical coherence tomography,” Invest. Ophthalmol. Vis. Sci. 57, 2305–2310 (2016). 10.1167/iovs.15-18178

- 31. Garvin M. K., Abramoff M. D., Kardon R., Russell S. R., Wu X., Sonka M., “Intraretinal Layer Segmentation of Macular Optical Coherence Tomography Images Using Optimal 3-D Graph Search,” IEEE Transactions on Medical Imaging 27, 1495–1505 (2008). 10.1109/TMI.2008.923966

- 32. Mishra A., Wong A., Bizheva K., Clausi D. A., “Intra-retinal layer segmentation in optical coherence tomography images,” Opt. Express 17, 23719–23728 (2009). 10.1364/OE.17.023719

- 33. American National Standards Institute, ANSI Z136.1-2014 American National Standard for Safe Use of Lasers (Laser Institute of America, Orlando, FL, 2014).

- 34. Makita S., Yamanari M., Yasuno Y., “Generalized Jones matrix optical coherence tomography: performance and local birefringence imaging,” Opt. Express 18, 854–876 (2010). 10.1364/OE.18.000854

- 35. Kasaragod D., Makita S., Hong Y.-J., Yasuno Y., “Noise stochastic corrected maximum a posteriori estimator for birefringence imaging using polarization-sensitive optical coherence tomography,” Biomed. Opt. Express 8, 653–669 (2017). 10.1364/BOE.8.000653

- 36. Vermeer K. A., Mo J., Weda J. J. A., Lemij H. G., de Boer J. F., “Depth-resolved model-based reconstruction of attenuation coefficients in optical coherence tomography,” Biomed. Opt. Express 5, 322–337 (2013). 10.1364/BOE.5.000322

- 37. Chan A. C., Hong Y.-J., Makita S., Miura M., Yasuno Y., “Noise-bias and polarization-artifact corrected optical coherence tomography by maximum a-posteriori intensity estimation,” Biomed. Opt. Express 8, 2069–2087 (2017). 10.1364/BOE.8.002069

- 38. Acharya U. R., Dua S., Du X., S V. S., Chua C. K., “Automated diagnosis of glaucoma using texture and higher order spectra features,” IEEE Trans. Inf. Technol. Biomed. 15, 449–455 (2011). 10.1109/TITB.2011.2119322

- 39. Yang H., Qi J., Hardin C., Gardiner S. K., Strouthidis N. G., Fortune B., Burgoyne C. F., “Spectral-domain optical coherence tomography enhanced depth imaging of the normal and glaucomatous nonhuman primate optic nerve head,” Invest. Ophthalmol. Vis. Sci. 53, 394–405 (2012). 10.1167/iovs.11-8244

- 40. Strouthidis N. G., Grimm J., Williams G. A., Cull G. A., Wilson D. J., Burgoyne C. F., “A comparison of optic nerve head morphology viewed by spectral domain optical coherence tomography and by serial histology,” Invest. Ophthalmol. Vis. Sci. 51, 1464–1474 (2010). 10.1167/iovs.09-3984

- 41.Miura M., Makita S., Yasuno Y., Tsukahara R., Usui Y., Rao N. A., Ikuno Y., Uematsu S., Agawa T., Iwasaki T., Goto H., “Polarization-sensitive optical coherence tomographic documentation of choroidal melanin loss in chronic vogt-koyanagi-harada disease,” Invest. Ophthalmo. Vis. Sci. 58, 4467–4476 (2017). 10.1167/iovs.17-22117 [DOI] [PubMed] [Google Scholar]

- 42. Miura M., Makita S., Sugiyama S., Hong Y.-J., Yasuno Y., Elsner A. E., Tamiya S., Tsukahara R., Iwasaki T., Goto H., “Evaluation of intraretinal migration of retinal pigment epithelial cells in age-related macular degeneration using polarimetric imaging,” Sci. Rep. 7, 3150 (2017). 10.1038/s41598-017-03529-8

- 43. Fan C., Yao G., “Imaging myocardial fiber orientation using polarization sensitive optical coherence tomography,” Biomed. Opt. Express 4, 460–465 (2013). 10.1364/BOE.4.000460

- 44. Li E., Makita S., Hong Y.-J., Kasaragod D., Yasuno Y., “Three-dimensional multi-contrast imaging of in vivo human skin by Jones matrix optical coherence tomography,” Biomed. Opt. Express 8, 1290–1305 (2017). 10.1364/BOE.8.001290

- 45. Schindelin J., Arganda-Carreras I., Frise E., Kaynig V., Longair M., Pietzsch T., Preibisch S., Rueden C., Saalfeld S., Schmid B., Tinevez J.-Y., White D. J., Hartenstein V., Eliceiri K., Tomancak P., Cardona A., “Fiji: an open-source platform for biological-image analysis,” Nature Methods 9, 676–682 (2012). 10.1038/nmeth.2019

- 46. Tsai W.-H., “Moment-preserving thresholding: A new approach,” Graphical Models and Image Processing 19, 377–393 (1984).

- 47. Oyama T., Abe H., Ushiki T., “The connective tissue and glial framework in the optic nerve head of the normal human eye: light and scanning electron microscopic studies,” Arch. Histol. Cytol. 69, 341–356 (2006). 10.1679/aohc.69.341

- 48. Breiman L., “Random forests,” Machine Learning 45, 5–32 (2001). 10.1023/A:1010933404324

- 49. Han J. C., Cho S. H., Sohn D. Y., Kee C., “The characteristics of lamina cribrosa defects in myopic eyes with and without open-angle glaucoma,” Invest. Ophthalmol. Vis. Sci. 57, 486–494 (2016). 10.1167/iovs.15-17722

- 50. Reynaud J., Lockwood H., Gardiner S. K., Williams G., Yang H., Burgoyne C. F., “Lamina cribrosa microarchitecture in monkey early experimental glaucoma: Global change,” Invest. Ophthalmol. Vis. Sci. 57, 3451–3469 (2016). 10.1167/iovs.16-19474

- 51. Burgoyne C., “Myopic eyes and glaucoma,” J. Glaucoma 13, 85 (2004). 10.1097/00061198-200402000-00017

- 52. Kim J.-A., Kim T.-W., Weinreb R. N., Lee E. J., Girard M. J. A., Mari J. M., “Lamina cribrosa morphology predicts progressive retinal nerve fiber layer loss in eyes with suspected glaucoma,” Sci. Rep. 8, 738 (2018). 10.1038/s41598-017-17843-8

- 53. Thepass G., Lemij H. G., Vermeer K. A., “Attenuation coefficients from SD-OCT data: Structural information beyond morphology on RNFL integrity in glaucoma,” J. Glaucoma 26, 1001–1009 (2017). 10.1097/IJG.0000000000000764