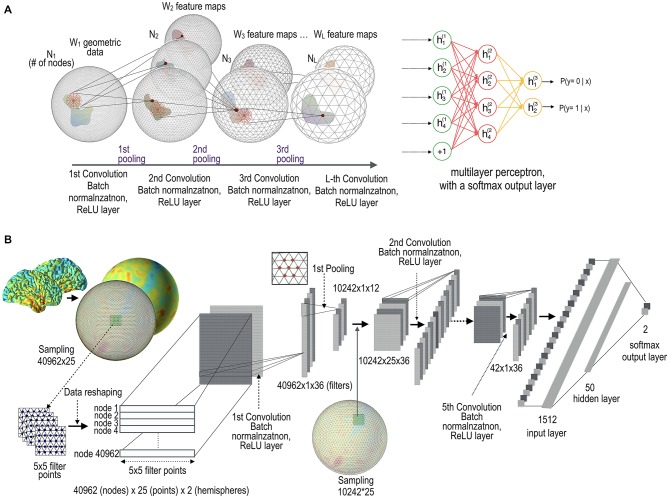

Figure 1.

Architecture of the geometric convolutional neural network (gCNN). (A) Conceptual architecture of the gCNN. Data on the cortical surface pass through a convolution layer followed by batch normalization and a rectified linear unit (ReLU) activation, generating W feature maps, where W is the number of filters in that convolution layer. The number of nodes decreases from N1 to NL as the data pass through the pooling layers. The gCNN ends with a multilayer classifier. (B) Implementation-level architecture of the gCNN. The input data, 40,962 (nodes) × 25 (filter sample points) × 2 (hemispheres), are convolved with 36 filters and reduced to 42 (nodes) × 36 (filtered outputs, i.e., features) after five convolution and pooling steps. As the data pass through the layers, the number of features increases while the number of nodes decreases. Finally, the convolved and pooled data enter a fully connected multilayer perceptron comprising a hidden layer with 50 nodes and a softmax output layer with two nodes.
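The node counts in panel B (40,962 at the input, 42 after five pooling steps) are consistent with a subdivided icosahedral mesh, which has 10 · 4^k + 2 vertices at subdivision level k. The following minimal sketch assumes such a mesh and assumes that each pooling step moves down one subdivision level; the caption states neither, and the function names `icosphere_nodes` and `pooling_schedule` are hypothetical, introduced only for illustration.

```python
def icosphere_nodes(level: int) -> int:
    """Vertex count of an icosahedron subdivided `level` times (assumed mesh type)."""
    return 10 * 4 ** level + 2


def pooling_schedule(start_level: int = 6, n_pool: int = 5) -> list[int]:
    """Node counts before and after each pooling step.

    Assumption: every pooling step reduces the mesh by one subdivision level.
    """
    return [icosphere_nodes(start_level - i) for i in range(n_pool + 1)]


schedule = pooling_schedule()
print(schedule)  # [40962, 10242, 2562, 642, 162, 42]
```

Under these assumptions, five pooling steps take the 40,962-node input to the 42-node output reported in the caption; the intermediate counts are implied by the formula rather than stated in the figure.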