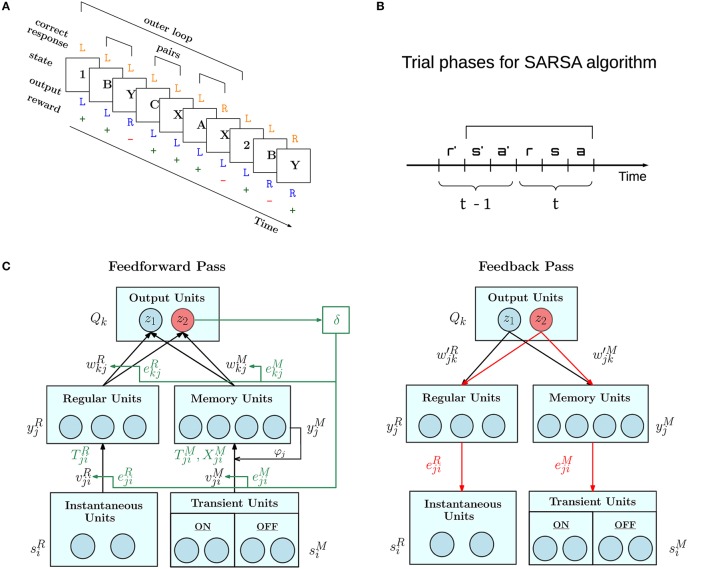

Figure 1.

Overview of AuGMEnT network operation. (A) Example of trials in the 12AX task. Task symbols appear sequentially on a screen, organized in outer loops that each start with a digit, either 1 or 2, followed by a random number of letter pairs (e.g., B-Y, C-X and A-X). On the presentation of each symbol, the agent must choose a Target (R) or Non-Target (L) response. If the chosen response matches the correct one, the agent receives a positive reward (+); otherwise it receives a negative reward (−). Figure adapted from Figure 1 of O'Reilly and Frank (2006). (B) AuGMEnT operates in discrete time steps, each comprising the reception of a reward (r), the input of a state or stimulus (s) and the selection of an action (a). It implements the State-Action-Reward-State-Action reinforcement learning algorithm (SARSA; shown in the figure as s'a'rsa) (Sutton and Barto, 2018). In time step t, reward r is obtained for the previous action a' taken in time step t − 1. The network weights are updated once the next action a is chosen. (C) The AuGMEnT network is structured in three layers with different types of units. Each iteration of the learning process consists of a feedforward pass (left) and a feedback pass (right). In the feedforward pass (black lines and text), sensory information about the current stimulus in the bottom layer is fed to regular units without memory (left branch) and to units with memory (right branch) in the middle layer, whose activities are in turn weighted to compute the Q-values in the top layer. Based on the Q-values, the current action is selected (e.g., red z2). The reward obtained for the previous action is used to compute the TD error δ (green), which modifies the connection weights that contributed to the selection of the previous action in proportion to their eligibility traces (green lines and text). After this, the temporal eligibility traces, synaptic traces and tags (in green) on the connections are updated to reflect the correlations between the current pre- and postsynaptic activities. Then, in the feedback pass, the spatial eligibility traces (in red) are updated via feedback weights, attention-gated by the current action (e.g., red z2).
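
The ordering in panels B and C (reward r arrives for the previous action a', the current action a is chosen, then the weights change in proportion to their eligibility traces) can be illustrated with a minimal sketch. This is not the AuGMEnT implementation: it uses the standard SARSA TD error, and the discount factor `gamma`, the learning rate `beta`, the function names and all numerical values are assumptions made for illustration only.

```python
import numpy as np

def sarsa_td_error(reward, q_prev, q_curr, gamma=0.9):
    """Standard SARSA TD error for the previous action a'.

    reward : r, received in the current step for the previous action a'
    q_prev : Q-value of the previous state-action pair (s', a')
    q_curr : Q-value of the current state-action pair (s, a)
    gamma  : discount factor (hypothetical value)
    """
    return reward + gamma * q_curr - q_prev

def update_weights(weights, traces, delta, beta=0.1):
    """Change each weight in proportion to its eligibility trace.

    beta is a hypothetical learning rate.
    """
    return weights + beta * delta * traces

# Toy values, chosen only to make the arithmetic easy to follow.
weights = np.array([0.5, -0.2, 0.0])
traces = np.array([1.0, 0.0, 0.5])   # a zero trace leaves its weight unchanged

delta = sarsa_td_error(reward=1.0, q_prev=0.4, q_curr=0.5, gamma=0.9)
new_weights = update_weights(weights, traces, delta, beta=0.1)
```

Because the TD error depends on the Q-value of the current action, it can only be evaluated after that action has been selected, which is why the caption places the weight update after the choice of a.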