Abstract

In anaesthesia, the use of comparative performance reports, their impact on patient care and their acceptability have yet to be fully clarified. Since April 2010, postoperative data on theatre cases in our trust have been analysed and individual comparative performance reports distributed to anaesthetists. Our primary aim was to investigate whether this process was associated with improvement in overall patient care. A short survey was used to assess our secondary aim, the usefulness and acceptability of the process. There were significant yearly improvements in the odds of all outcomes other than vomiting: 39% improvement in hypothermia (p<0.001); 9.9% improvement in severe pain (p<0.001); 9.6% improvement in moderate pain (p<0.001); 5.3% improvement in percentage pain free (p=0.04); 9.7% improvement in nausea (p=0.02); 30% improvement in unexpected admissions (p=0.001). All consultant respondents agreed that performance reports prompted reflective practice and that this process had the potential to improve patient care. The provision of comparative performance reports was thus associated with an improvement in outcomes while remaining acceptable to the anaesthetists involved.

Keywords: anaesthesia, quality measurement, performance measures, continuous quality improvement

Problem

It is widely accepted that both data collection and reporting are essential for clinical improvement; however, because reported outcome measures are novel within anaesthesia, little is currently known of their impact on the quality of patient care or of their acceptability to the individual anaesthetist. Further, there are inherent practical difficulties in collecting postoperative data for the anaesthetists involved, which have historically contributed to such evaluation not being routinely undertaken. There is thus no consensus regarding the definition of ‘quality’ within anaesthesia, nor on how any such comparative data should be collected, used and distributed.

Background

The importance of healthcare service quality measurement, at both individual and organisational level, has been highlighted recently by the process of revalidation and the introduction of government policy documents.1 2 Indeed, the failure of these processes has been highlighted as a contributing factor to instances of well-publicised healthcare service failures.3 4 In anaesthesia, there is currently a lack of consensus as to how we should describe quality and provide clinical outcome data.5–7 The National Institute for Academic Anaesthesia is currently reviewing the use of quality measures within anaesthesia with the aim of providing validated quantifying criteria focused on safety, effectiveness and patient experience,6 8 and some work has already been done to measure the quality of anaesthesia from a patient’s perspective.9

Aims and objectives

We aimed to explore whether, over a 3-year period, postoperative patient care would be affected by the use of comparative ‘performance reports’ in anaesthetics. Secondarily, we aimed to assess whether this process led to increased ‘reflective practice’ and if anaesthetists considered this an acceptable process. This study is unique in that it does not use or assess the impact of a specific, interventional tool implemented over subsequent improvement cycles. Rather, its aim is to assess whether the simple process of receiving feedback may prompt informal, reflective practice, resulting in subsequent modification of the individual anaesthetist’s performance and, ultimately, an improvement in patient care.

Methods

Permission to analyse our database of outcome measures was received from our Research and Development Committee. In accordance with governance concerning previously recorded, non-identifiable data, the committee decided that Research Ethics Committee approval was not required.

Creating performance reports

Since April 2010, patient outcome data have been recorded by the recovery nurses in our post anaesthetic care unit (PACU) and entered into a bespoke PACU module within our electronic theatre management system,10 collating it with the patients’ theatre data, including the operation type, names of surgeons and anaesthetists, key theatre time points and type of anaesthetic. Outcome data recorded include lowest temperature, worst pain score (verbal scale of 0–10), incidence of nausea, incidence of vomiting and unexpected admissions. The data were exported from the theatre management system to produce customised spreadsheets for analysis.

All theatre cases requiring an anaesthetist, with the exclusion of cataract surgery, paediatric cases and obstetric deliveries, were analysed at 6-monthly intervals and individual comparative performance reports distributed to all permanent staff by trust email. Reports were made available to trainees at their request. Importantly, the reports included the individual’s performance and, for comparison, the mean results for the department and the ranges of performance for the individual’s peer group, for example, consultants.
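The content of each report, as described above, can be illustrated with a minimal sketch. It is not the authors' spreadsheet method: the record fields (`anaesthetist`, `grade`, `nausea`), the sample cases and the function names are hypothetical, and the departmental figure is assumed here to be the pooled rate across all cases.

```python
def outcome_rate(cases, outcome):
    """Proportion of cases in which a binary outcome was recorded as present."""
    return sum(1 for c in cases if c[outcome]) / len(cases)


def comparative_report(cases, anaesthetist, outcome):
    """Compare one anaesthetist's rate with department and peer-group figures."""
    # Group the cases by the anaesthetist they were allocated to.
    by_person = {}
    for c in cases:
        by_person.setdefault(c["anaesthetist"], []).append(c)

    # Peer group = everyone of the same grade (assumed to be constant per person).
    grade = by_person[anaesthetist][0]["grade"]
    peer_rates = [
        outcome_rate(person_cases, outcome)
        for person_cases in by_person.values()
        if person_cases[0]["grade"] == grade
    ]
    return {
        "individual": outcome_rate(by_person[anaesthetist], outcome),
        "department": outcome_rate(cases, outcome),  # assumption: pooled rate
        "peer_min": min(peer_rates),
        "peer_max": max(peer_rates),
    }


# Hypothetical, non-identifiable sample data for illustration only.
SAMPLE_CASES = [
    {"anaesthetist": "A", "grade": "consultant", "nausea": True},
    {"anaesthetist": "A", "grade": "consultant", "nausea": False},
    {"anaesthetist": "A", "grade": "consultant", "nausea": False},
    {"anaesthetist": "A", "grade": "consultant", "nausea": False},
    {"anaesthetist": "B", "grade": "consultant", "nausea": True},
    {"anaesthetist": "B", "grade": "consultant", "nausea": True},
    {"anaesthetist": "C", "grade": "specialty doctor", "nausea": False},
    {"anaesthetist": "C", "grade": "specialty doctor", "nausea": False},
]

report = comparative_report(SAMPLE_CASES, "A", "nausea")
```

Reporting the peer-group range alongside the individual figure lets each recipient see where they sit without any colleague's result being identifiable.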

In keeping with our primary objective, no further advice or particular intervention was included within the email. This allowed us to assess whether the simple process of receiving feedback was alone enough to improve patient care by prompting reflective practice in anaesthetists. We did not record any interventions or changes to anaesthetic practice in response to the data: this, we felt, could have introduced experimental artefact to our study, leading anaesthetists to form a fixed idea of our study’s objective and then unconsciously modify their behaviour in response.

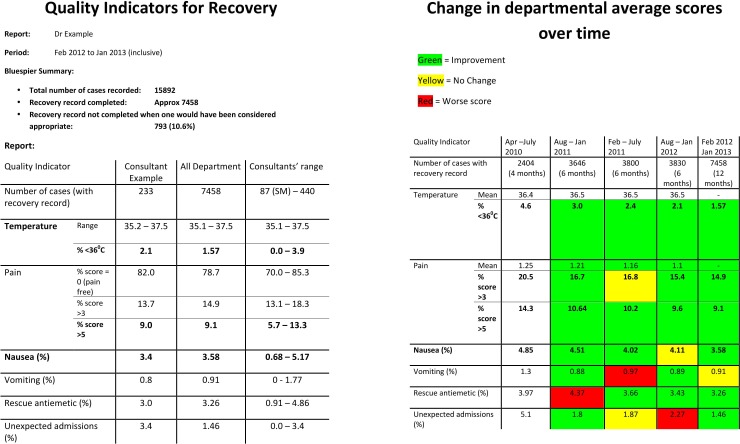

The reports were confidential, with no individual results identifiable to the wider department. Figure 1 shows an exemplar ‘performance report’ and ‘departmental average’ table, provided by email to each anaesthetist at 6-monthly intervals.

Figure 1.

Exemplar ‘performance report’ and ‘departmental averages’ table received by each anaesthetist at 6-monthly intervals.

Analysing impact of performance reports

The database was investigated from April 2010 to February 2013. Variables were analysed as binary outcomes (eg, pain score equal to zero). Each of these outcomes was analysed using logistic-transformed proportions (for monthly time-series) and logistic regression (for individual patient level). The time series data were tested for stationarity, and models were compared with de-trending by differencing or by linear trend subtraction. The trend was tested against a null hypothesis of no trend, using linear regression. A final model was produced using an autoregressive integrated moving average (ARIMA) process. Fitted values and prediction intervals were then back-transformed to proportions to produce the graphs. Analysis was conducted using Stata V.11.2 (StataCorp, College Station, Texas, USA).

To investigate whether any potential change in patient-reported outcome measures could be explained by significant changes in anaesthetic prescribing, trends in monthly pharmacy stock ordering of theatre antiemetic and opioid analgesics, over the same time period, were also analysed using the same process of time-series analysis as above, controlling for the number of cases. In all cases, the best-fit model for the time series data was a simple linear trend of the logistic over time (ie, ARIMA (0, 0, 0) after subtraction of the linear trend).
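The best-fit model described above, a linear trend in the logistic-transformed monthly proportions, can be sketched as follows. This is an illustrative reconstruction rather than the Stata analysis itself: it omits the stationarity tests, ARIMA model comparison and prediction intervals, the function names are hypothetical, and it assumes every monthly proportion lies strictly between 0 and 1.

```python
import math


def logit(p):
    """Log-odds of a proportion p, with 0 < p < 1."""
    return math.log(p / (1 - p))


def inv_logit(x):
    """Back-transform a log-odds value to a proportion."""
    return 1 / (1 + math.exp(-x))


def fit_logit_trend(monthly_props):
    """Ordinary least squares fit of logit(proportion) against month index.

    Returns (intercept, slope), where slope is the change in log-odds per month.
    """
    n = len(monthly_props)
    xs = list(range(n))
    ys = [logit(p) for p in monthly_props]
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


def yearly_odds_change(slope_per_month):
    """Proportional change in odds over 12 months implied by a monthly slope."""
    return math.exp(12 * slope_per_month) - 1


def fitted_proportions(intercept, slope, n_months):
    """Fitted values back-transformed from log-odds to proportions."""
    return [inv_logit(intercept + slope * m) for m in range(n_months)]
```

A negative slope for an adverse outcome such as nausea corresponds to a yearly reduction in its odds; `yearly_odds_change` expresses that slope on the same scale as the percentage improvements reported in the Results.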

Finally, to investigate whether there was any resistance to the performance reports, and to assess their perceived usefulness and acceptability, a survey of the consultant body was conducted using Survey Monkey.11 Consultants were invited to an online questionnaire and asked to respond either ‘Strongly Agree’, ‘Agree’, ‘Neutral’, ‘Disagree’ or ‘Strongly Disagree’ to each of the following statements:

My comparative anaesthetic performance reports have enabled me to reflect on my clinical practice.

My comparative anaesthetic performance reports have provided useful information for my annual appraisal by providing me with a tool to evaluate my clinical practice.

I would be interested to receive more clinical outcome data on the patients I have anaesthetised.

I have concerns that the comparative outcome data may be used for reasons other than reflective practice, such as performance management.

Overall, comparative anaesthetic performance reports have the ability to improve patient care.

Results

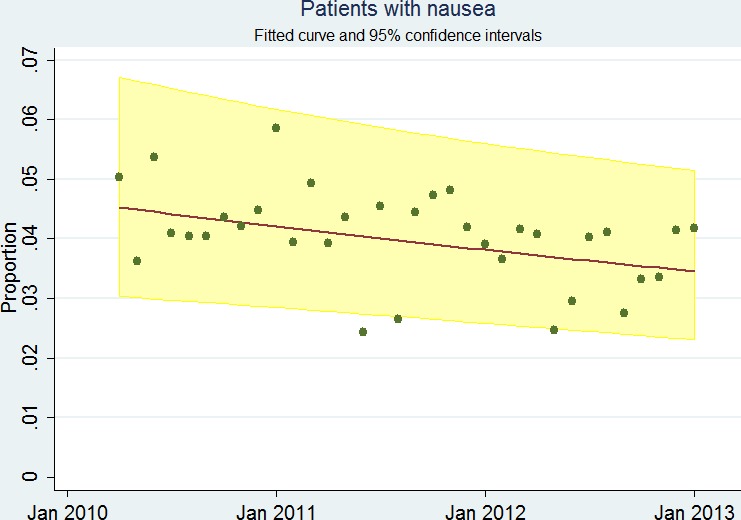

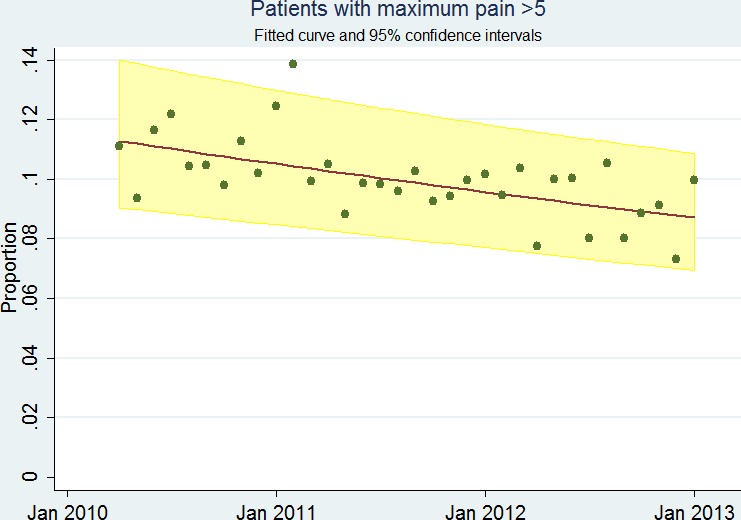

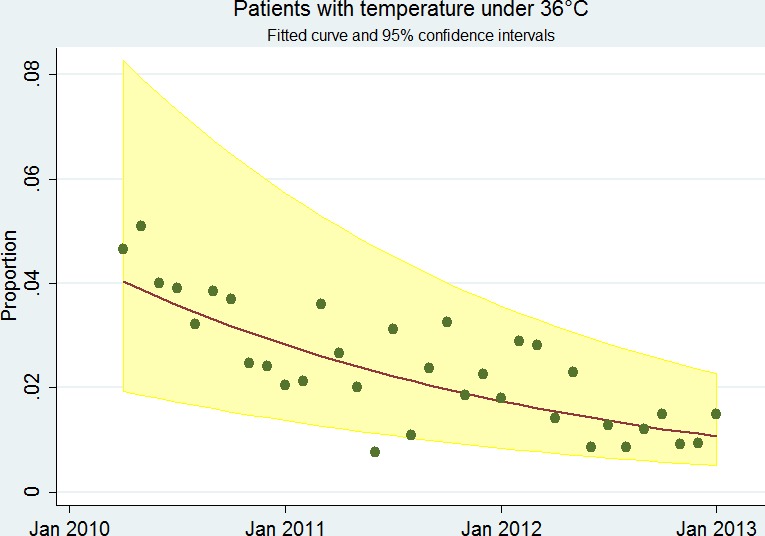

Of the 24 025 cases meeting the inclusion criteria, 21 217 (88.3%) had recovery data recorded. There were statistically significant yearly improvements in the odds of all clinical outcomes other than vomiting: 39% improvement in hypothermia (p<0.001, 95% CI 29% to 48%); 9.9% improvement in severe pain (p<0.001, 95% CI 5.2% to 14.4%); 9.6% improvement in moderate pain (p<0.001, 95% CI 5.2% to 13.7%); 5.3% improvement in percentage pain free (p=0.04, 95% CI 0.2% to 10.3%); 9.7% improvement in nausea (p=0.02, 95% CI 1.6% to 14.6%); 30% improvement in unexpected admissions (p=0.001, 95% CI 13% to 44%). Vomiting showed a non-significant trend towards improvement of 6.8% (p=0.28). Three examples of these changes over time are shown in figures 2–4.
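Because these improvements are expressed as changes in odds rather than in proportions, their effect on incidence depends on the starting rate. The sketch below shows the conversion for an adverse outcome; the function name is hypothetical and the 20% baseline is an assumed figure chosen for illustration, not one taken from our data.

```python
def apply_odds_reduction(baseline_prop, reduction, years=1):
    """Proportion after reducing the odds by `reduction` in each of `years`.

    baseline_prop: starting incidence, strictly between 0 and 1.
    reduction: fractional reduction in odds per year (e.g. 0.39 for 39%).
    """
    odds = baseline_prop / (1 - baseline_prop)
    new_odds = odds * (1 - reduction) ** years
    return new_odds / (1 + new_odds)


# Hypothetical baseline incidence of 20%, with a 39% yearly reduction in odds.
after_one_year = apply_odds_reduction(0.20, 0.39)
```

With that assumed baseline, a 39% reduction in odds takes the incidence from 20% to about 13% after one year, a smaller relative fall than the odds figure alone might suggest. For a favourable outcome such as pain-free recovery, the odds increase rather than decrease, so the multiplier would be greater than one.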

Figure 2.

Proportion of patients reporting nausea in post anaesthetic care unit over time. Shown with fitted curve and 95% CIs.

Figure 3.

Proportion of patients with maximum pain score >5 in post anaesthetic care unit over time. Shown with fitted curve and 95% CIs.

Figure 4.

Proportion of patients with temperature under 36°C in post anaesthetic care unit over time. Shown with fitted curve and 95% CIs.

Analysis of pharmacy stock-ordering for antiemetics showed no evidence of an increase over the same 3-year period, with the exception of ondansetron 4 mg vials, ordering of which increased by 5.1 vials per month from April 2010 to February 2013 (p<0.001, 95% CI 3.9 to 6.2). There was no change in the stock-ordering of opiates over the same period, with the exception of morphine 50 mg syringes, which reduced by 3.3 syringes per month (p<0.001; 95% CI 2.7 to 3.8), and fentanyl 50 µg/mL vials, which increased by 2.4 vials per month (p<0.001; 95% CI 1.0 to 3.9).

Fourteen of fifteen consultants responded to our Survey Monkey questionnaire, giving a response rate of 93%. One hundred per cent of respondents strongly agreed, or agreed, that comparative performance reports enabled personal reflection on their clinical practice, were beneficial in providing useful information for their annual appraisals, had the potential to improve patient care and that they would be interested to receive more outcome data on their cases. Fifty per cent of surveyed anaesthetists had initial concerns that performance reports might be used for reasons other than reflective practice, such as assessment of performance at job planning. However, this barrier was overcome as these respondents ultimately acknowledged that this was not the case.

Discussion

Our retrospective analysis has shown that with the recording of quality outcome measures in PACU, and the subsequent provision of individualised recovery reports, there was an associated improvement in patient-reported outcome measures over time. We demonstrated statistically significant improvements in the incidences of hypothermia, moderate and severe pain, pain-free recovery, nausea and unexpected admissions. A short departmental survey confirmed the acceptability of this feedback process to the individual anaesthetists involved.

The process of revalidation is intended to stimulate clinicians to reflect actively on their clinical performance, and patient-reported outcome data are believed to be central to this reflection. However, within anaesthesia, the definition of quality lacks clarity. Indeed, most anaesthetists remain unaware of the clinical outcome of the patients they anaesthetise. This is in stark contrast to the outcome data provided to individual surgeons working on the same clinical caseload. Given this situation, it is unsurprising that there is little evidence in anaesthesia to show that individual clinical feedback results in a significant improvement in patient care. Recently, Hocking et al used subjectively derived patient feedback to produce comparative reports for anaesthetists and observed an improvement in patient experience over time9: we believe that our study, using objective parameters, supports and indeed strengthens their findings.

Postoperative pain and postoperative nausea and vomiting (PONV) are ranked highly by patients as anaesthetic outcomes to avoid, and their control therefore represents an important quality indicator.9 12 Perioperative hypothermia has consistently been shown to affect surgical outcome adversely and is therefore also an important quality indicator.13 A number of other postoperative quality indicators exist, for example regional anaesthesia block success, and we have since continued to add to the outcome indicators recorded.

This process has the potential to be expanded and refined to include other clinical parameters that may be used to describe ‘quality’, for example, overall patient satisfaction score. Further, this process could easily be used to highlight possible areas for intervention and improvement, both individually and departmentally. While the process of receiving performance reports by individual anaesthetists may prompt increased personal reflective practice, areas requiring collective improvement on a departmental scale may be identified and then be used to inform quality improvement processes. We are currently exploring the possibility of combining our outcome data with other information already recorded and used by our surgical colleagues. Examples include length of stay, returns to theatre, and overall morbidity and mortality. With the increasing recognition of anaesthetists as perioperative physicians playing a crucial role in improving patient outcomes, providing this outcome data to anaesthetists would seem vital to the quality improvement process.

Limitations

As with any quality improvement feedback loop, the process is only as good as the accuracy of the data being entered. Although the issue of observer bias was minimised by our outcome parameters being entirely quantitative in nature, it might be suggested that the recording of recovery scores by nurses may be subject to an element of subjective interpretation. Another potential source of error is in the accuracy of data allocation to individual anaesthetists: for example, when multiple anaesthetists are present in theatre or when a trainee is managing a case with distant supervision. Our solution to this potential problem was the verification of staff identification and presence, incorporated into the WHO team briefing. However, this remains a potential source of error for reports received by individuals.

It is important to note that our study deliberately suggested no specific intervention and simply provided performance reports. This was intentional, as our primary objective was to see whether the creation and dissemination of performance reports alone might be associated with improved clinical care stimulated via reflective practice. Consequently, no causal link can be inferred between the production of performance reports and the observed improvement in clinical care. Indeed, the Hawthorne effect (whereby individuals modify their practice simply because they are aware that they are being monitored) may itself be responsible for a proportion of the changes noted in our department over time. We might also suggest, however, that any such effect experienced in the initial stages of our study might actually have transmuted into a genuine, sustained change in practice over time associated with the informal process of self-reflection. Indeed, although not formally analysed in this study, improved departmental outcomes continued to be sustained 24 months later.

It is highly possible that other external variables may also have impacted on the observed results. For instance, although little caseload change occurred over the study period for the department as a whole, other initiatives such as the introduction of the WHO safety checklist, preoperative cardiopulmonary exercise testing for major colorectal surgery cases and the enhanced recovery programme in orthopaedics may have influenced recovery data. Indeed, the latter is responsible for the significantly reduced ordering of 50 mg morphine patient controlled analgesia system (PCAS) prefilled syringes. However, we also know from the departmental survey that the provision of the comparative feedback reports did indeed result in individuals reflecting on their practice. Additionally, the outcome data allowed identification of areas of practice as a department that benefited from targeted education and training, for example, the provision of perioperative guidance for the management of day-case laparoscopic cholecystectomy, where consistently high recovery pain scores were identified. This highlights clearly the potential use of our system in identifying areas for improvement on a departmental scale and directing the development of specific quality improvement interventions to address these.

Despite being well received by the department, initial concerns were raised regarding the discrepancies in caseloads between anaesthetists, and how such differences would manifest within the context of the departmental average and range, an obvious example of this being the presumed higher incidence of PONV classically observed in gynaecological procedures. Subdividing data based on specialty, or to individual surgeons, is a potential future step and could be achieved relatively easily with our software. However, it must be stressed that for the purposes of reflective practice and revalidation, it is the individual’s performance over time that needs to be demonstrated, not relative performance within a department.

Although our study provides strong evidence to support the creation and dissemination of performance reports in our anaesthetic department over the 3-year period in question, logistical and professional commitments prevented us from exploring the further longevity of the positive impact in clinical outcomes. We continue to collect PACU data on eligible patients, but the spreadsheet analysis and dissemination of reports ended when our lead consultant undertook other managerial responsibilities within the department. It would therefore be helpful to conduct an up-to-date evaluation of departmental PACU data achieved in 2018. This could provide a comparison with our study period, through which the impact of performance reports could be inferred. We acknowledge that long-term sustainability of our system should be assessed by re-initiating the routine recording of PACU data and dissemination of performance reports in our department 4 years on. This would be greatly aided by the generation of automated reports, freeing up clinical time, and is currently being explored.

Footnotes

Contributors: TC: project conception, planning, conduction, distribution of performance reports, initial write-up, revision of manuscript. MR: initial write-up. AR: conducted survey, initial write-up, revision of manuscript. TL: statistical analysis.

Funding: This research received no specific grant from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: None declared.

Patient consent: Not required.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: No additional data are available.

References

1. Department of Health. High Quality Care for All: NHS Next Stage Review Final Report. London: Department of Health, 2008.

2. Department of Health. Equity and Excellence: Liberating the NHS. London: The Stationery Office, 2010.

3. Francis R. Independent Inquiry into Care Provided by Mid Staffordshire NHS Foundation Trust January 2005–March 2009. London: The Stationery Office, 2010.

4. Kennedy I. Learning from Bristol: the Report of the Public Inquiry into children’s heart surgery at the Bristol Royal Infirmary 1984–1995. The Bristol Royal Infirmary Inquiry 2001.

5. Haller G, Stoelwinder J, Myles PS, et al. Quality and safety indicators in anesthesia. Anesthesiology 2009;110:1158–75. 10.1097/ALN.0b013e3181a1093b

6. Murphy PJ. Measuring and recording outcome. Br J Anaesth 2012;109:92–8. 10.1093/bja/aes180

7. Benn J, Arnold G, Wei I, et al. Using quality indicators in anaesthesia: feeding back data to improve care. Br J Anaesth 2012;109:80–91. 10.1093/bja/aes173

8. Grocott M. Quality measures in anaesthesia. Bull Roy Coll Anaesth 2011;69:15. http://www.rcoa.ac.uk/docs/Bulletin69.pdf

9. Hocking G, Weightman WM, Smith C, et al. Measuring the quality of anaesthesia from a patient’s perspective: development, validation, and implementation of a short questionnaire. Br J Anaesth 2013;111:979–89. 10.1093/bja/aet284

10. Bluespier Theatre Manager. Information. http://www.bluespier.com (accessed Nov 2014).

11. Survey Monkey online tool. 2014. https://www.surveymonkey.com/home/ (accessed Nov 2014).

12. Lee A, Gin T, Lau AS, et al. A comparison of patients’ and health care professionals’ preferences for symptoms during immediate postoperative recovery and the management of postoperative nausea and vomiting. Anesth Analg 2005;100:87–93. 10.1213/01.ANE.0000140782.04973.D9

13. National Institute for Health and Care Excellence (NICE) Clinical Guideline. 2008:65. https://www.nice.org.uk/guidance/cg65 (accessed Nov 2014).