Abstract

Classification of ultrasound (US) kidney images for diagnosis of congenital abnormalities of the kidney and urinary tract (CAKUT) in children is a challenging task. It is desirable to improve existing pattern classification models that are built upon conventional image features. In this study, we propose a transfer learning-based method to extract imaging features from US kidney images in order to improve the CAKUT diagnosis in children. Particularly, a pre-trained deep learning model (imagenet-caffe-alex) is adopted for transfer learning-based feature extraction from 3-channel feature maps computed from US images, including original images, gradient features, and distanced transform features. Support vector machine classifiers are then built upon different sets of features, including the transfer learning features, conventional imaging features, and their combination. Experimental results have demonstrated that the combination of transfer learning features and conventional imaging features yielded the best classification performance for distinguishing CAKUT patients from normal controls based on their US kidney images.

Index Terms: Chronic kidney disease, Ultrasound imaging, Transfer learning

1. INTRODUCTION

Children with congenital abnormalities of the kidney and urinary tract (CAKUT) face many challenges and early detection of CAKUT can help prevent the progression of kidney disease to kidney failure [1]. Ultrasound (US) imaging plays a key role in CAKUT diagnosis because anatomic measures derived from US imaging data, such as renal elasticity, renal parenchymal area, maximum renal length, and cortical thickness, are correlated with kidney function [2, 3]. More recently, texture analysis of imaging data has demonstrated improved performance for predicting change in kidney function [4].

Pattern recognition methods have been used to aid kidney disease diagnosis based on US imaging data. Particularly, a decision support system has been developed to classify US images of normal controls and renal disease patients based on second order grey level co-occurrence matrix statistical features [5]; neural networks in conjunction with principal component analysis have been used to classify US kidney images [6], and support vector machine (SVM) classifiers have been built upon texture features extracted from regions of interest of US images to classify kidney images [7, 8]. These studies have demonstrated that pattern classifiers built upon imaging features could obtain promising performance for classifying US imaging data.

The success of deep learning techniques in recent years have witnessed promising performance in learning imaging features for a variety of pattern recognition tasks [9–11]. In these studies, convolutional neural networks (CNNs) are widely adopted to learn informative imaging features by optimizing pattern recognition cost functions. Therefore, it is expected that incorporating the deep learning techniques into US imaging data analysis would improve US image classification and subsequently improve diagnosis of CAKUT in children based on US imaging data.

Since a large dataset is typically needed to build a generalizable deep learning based classification model [12], for applications with small datasets the deep learning tools are often adopted in a transform learning setting, i.e., applying deep learning models trained based on a large dataset for one problem to a different but related problem with a relatively small training dataset [13]. The transfer learning strategy has achieved promising performance in pattern recognition studies of medical image data [14–19]. Furthermore, it has been demonstrated that classifiers built upon combination of transfer learning features and handcrafted features typically achieve better pattern recognition performance than those built upon transfer learning features and hand-crafted features alone [17–19].

Building upon the successful deep learning and transfer learning techniques, we develop a pattern recognition method for distinguishing children with CAKUT from healthy kids based on their US kidney imaging data. Particularly, we adopt a pre-trained model of CNNs, namely imagenet-caffe-alex [20], for extracting imaging features from 2D US kidney images. Since the US kidney images have only one channel of intensity values while the imagenet-caffe-alex model requires color images with 3 channels, two more feature maps are computed from each original US image, including a gradient feature map and a distanced transformation feature map, and they are integrated with the original US image to be used as a 3-channel input to the imagenet-caffe-alex model. Moreover, hand-crafted texture features are also extracted from each US image. Finally, SVM classifiers are built upon the transfer learning features and hand-crafted texture features to distinguish US kidney images of children with CAKUT from those of healthy kids. We have validated our method based on an US imaging dataset obtained from 50 children with CAKUT and 50 healthy kids at the Children’s Hospital of Philadelphia (CHOP). Experiment results have demonstrated that our method could achieve promising pattern classification performance for aiding diagnosis of CAKUT.

2. METHODS

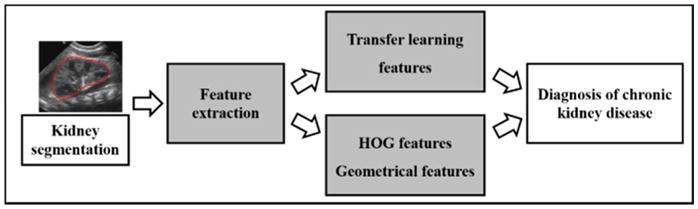

Our method consists of 3 components, including kidney segmentation, feature extraction, and SVM based classification, as illustrated in Fig. 1.

Fig. 1.

Flowchart for CAKUT diagnosis based on US images.

2.1 Kidney Segmentation and image normalization

We adopted a graph-cuts method to segment kidneys in US images [21]. Particularly, the graph-cuts method segments kidneys in US images based on both image intensity information and texture features extracted using Gabor filters. This method has achieved promising segmentation performance for segmenting bilateral kidneys in US images. The average Dice index of the segmentation results compared with manual segmentation results was 0.96.

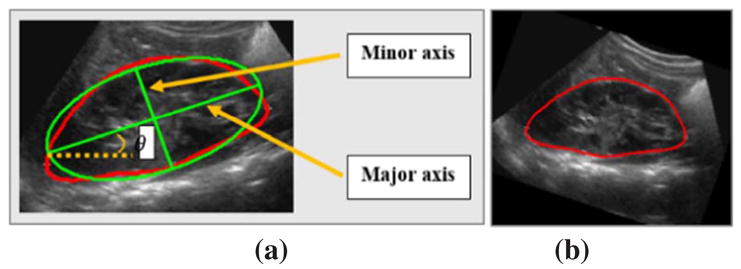

To make US kidney images of different subjects directly comparable, we normalized US kidney images of different subjects. First, the orientation of kidneys in US images is estimated based on ellipse fitting, including the ellipse’s major axis, minor axis, and the orientation θ between the major axis and X-axis, as illustrated in Fig. 2. Second, based on the estimated ellipse information, each US kidney image is reoriented along the major axis and resized to have the same size as the images used in the deep learning model to be described. Particularly, the reoriented image is centered to the ellipse’s center and rescaled according to the ellipse’s major axis and minor axis to have the same size. Finally, image intensity values outside of the kidney are set to zero.

Fig. 2.

Ellipse estimation (a) and image rotation (b).

2.2 Feature Extraction

Two different methods are used to extract image features from the normalized kidney images, including the transfer learning based on a deep CNN model and conventional texture features.

2.2.1 Feature Extraction by Transfer Learning

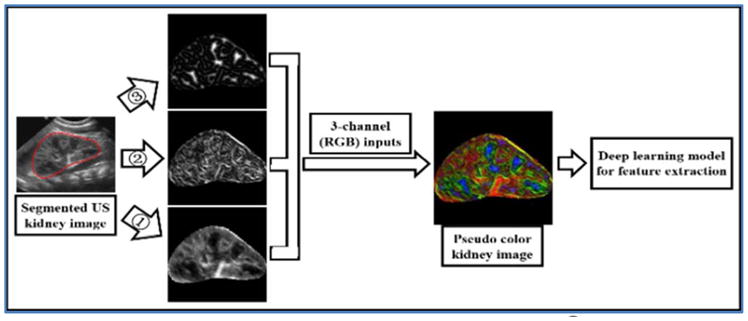

A pre-trained CNN model (imagenet-caffe-alex) is adopted from MatConvNet to extract features from the kidney images [20]. The imagenet-caffe-alex model was trained on 1.2 million 3-channle images of the ImageNet LSVRC-2010 for classifying images into 1000 different classes. Since the kidney US images only have one channel of intensity values, we propose to adopt original kidney image, gradient image, and distanced transform image to build a 3-channel image, as illustrated in Fig. 3.

Fig. 3.

Feature extraction by transfer learning. ➀ original US kidney image; ➁ gradient image; ➂ distanced transform image.

In particular, 3 feature maps are computed from each normalized US kidney image, including original kidney US image fI(x,y), gradient feature map fG(x,y), and distanced transform feature map fD(x,y). First, the original US kidney image intensity values are normalized to [0, 255]. Second, a gradient feature map fG(x,y) is computed as:

| (1) |

where gx(x,y) = (fI(x+1,y) − fI(x−1,y))/2, gy(x,y) = (fI(x,y+1) − fI(x,y−1))/2, x and y are coordination of pixels. Third, a distance transform feature map is computed using the VLfeat toolbox [22] by

| (2) |

Particularly, the distance transform map characterizes the distance of each pixel to its nearest element in an edge map obtained by applying Canny edge detector to the original image [23]. Both the gradient and distance transformation feature maps are normalized to [0, 255].

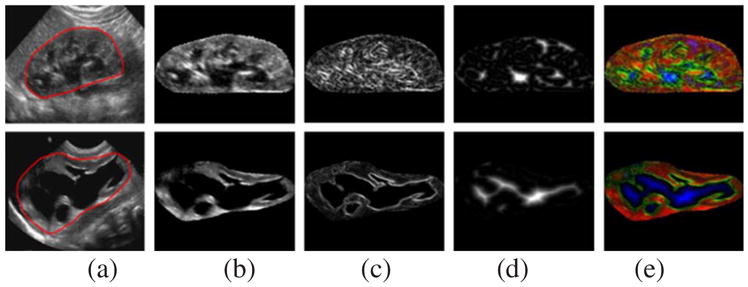

Finally, each kidney image’s original kidney US image fI(x,y), gradient feature map fG(x,y), and distanced transform feature map fD(x,y) are used as R-channel, G-channel, and B-channel respectively to form a 3-channel image so that the imagenet-caffe-alex model could be adopted to extract features. Two randomly selected kidney US images, their RGB channels, and their pseudo color images are shown in Fig. 4.

Fig. 4.

US kidney images and feature maps. (a) US images with kidney contours in red; (b) original image feature fI(x,y); (c) gradient feature fG(x,y); (d) distanced transform feature fD(x,y), and (e) pseudo color images.

2.2.2 Conventional Image Features

Conventional image features are also extracted from the US kidney images, including histogram of oriented gradients (HOG) features and geometrical features of the kidneys.

(1) HOG Features

The HOG decomposes an image into small squared cells, computes the histogram of oriented gradients in each cell, normalizes the result using a block-wise pattern, and returns a descriptor for each cell. Same as the distanced transform, we utilize the VLFeat toolbox [22] to compute the HOG features with default parameters except that the cell size is set to N0/10, where N0 is the size of the input images to the deep learning model.

(2) Geometrical Features

The geometrical features include shape-related measures and block-related measures of the kidneys. Particularly, the shape-related features are defined as , where L1 and L2 are lengths of the major and minor axes of the kidney as defined by the ellipse estimation of the kidney. The block-related features are ratios of areas of black holes inside kidney to the whole kidney region. Because no suitable threshold is available for segmenting holes from all kidney images, we uniformly set 10 thresholds of [3:3:30] to segment black holes, and the resulting ratios constitute the block-related features Vblock = [ratio1, ⋯, ratio10]. Finally, from each kidney image, we obtain a set of geometric features Vgeometric = [Vshape, Vblock].

2.3 Diagnosis of CAKUT

The diagnosis of CAKUT based on US kidney images is modeled as a pattern classification problem based on US image features. Particularly, a L2-regularized L1-loss SVM is utilized to build classifiers based on the extracted image features by optimizing

| (5) |

where f→i is the feature vector of the ith US kidney image, w⃗ is the weighting vector to be learned from training data.

The L2-regularized L1-loss SVM optimization problem can be solved using a dual coordinate descent method. Particularly, a publicly available software package LIBLINEAR with its default parameters is utilized to build the SVM classifiers [24]. Once we get the weighting vector w⃗, the category of the ith kidney image can be estimated by

| (6) |

3. EXPERIMENTAL RESULTS

We have validated our method based on kidney images collected at the Children’s Hospital of Philadelphia. The dataset contains kidney images obtained from 50 normal subjects and 50 patients. Particularly, all the 50 normal subjects had both left and right kidney images, 35 patients had abnormal kidneys in both sides, 39 patients had abnormal kidneys in the left side, and 46 patients had abnormal kidneys in the right side. We built SVM classifiers based on all the available data for the left, right, and bilateral kidney images separately, and 10-fold cross-validation was adopted to evaluate the classification performance. The 10-fold cross-validation was repeated for 100 times to estimate the classification performance.

In order to investigate if the transfer learning features and the conventional image features provide complementary information for distinguishing abnormal and normal kidney images, we compared classifiers built upon different sets of features, including the transfer learning features alone, the conventional features alone, and their combination.

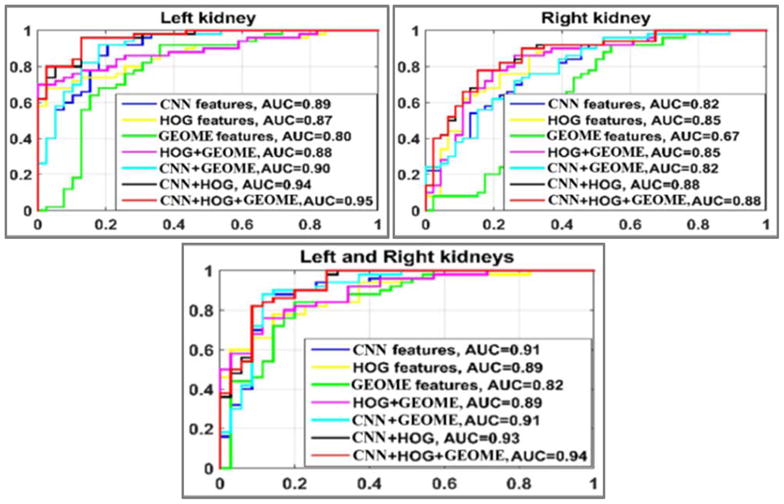

As shown in Fig. 5, the classifiers built upon the combination of the transfer learning features and the conventional features had the largest area under the receiver operating characteristic (ROC) curve (AUC), indicating that integrating the transfer learning features and the conventional imaging features could improve the classification of US kidney images. Table 1 summarizes mean classification accuracy and AUC values of 100 runs of the 10-fold cross-validation experiments. These results further demonstrated the advantages of combination (CNN+HOG+Geometrical features) over others.

Fig. 5.

ROC curves of different classifiers, estimated based one run of 10-fold cross-validation. GEOME: geometrical features.

Table 1.

10-fold cross-validation (mean±standard*e-2).

| CNN | HOG | GEOM | HOG + GEOME | CNN + GEOME | CNN + HOG | CNN +HOG +GEOME | |

|---|---|---|---|---|---|---|---|

| classification accuracy | |||||||

| Left | 0.85±1.8 | 0.77±2.3 | 0.78±1.5 | 0.79±2.7 | 0.87±1.5 | 0.84±1.4 | 0.84±1.6 |

| Right | 0.75±2.0 | 0.74±2.5 | 0.67±1.7 | 0.74±2.4 | 0.74±2.1 | 0.81±1.8 | 0.81±1.8 |

| Both | 0.85±1.2 | 0.78±2.4 | 0.76±1.8 | 0.79±2.3 | 0.85±1.4 | 0.86±2.1 | 0.87±2.1 |

| area under the ROC curve | |||||||

| Left | 0.88±0.8 | 0.84±1.5 | 0.80±0.9 | 0.86±1.5 | 0.88±0.9 | 0.92±0.8 | 0.92±0.7 |

| Right | 0.83±1.3 | 0.83±1.6 | 0.67±1.7 | 0.83±1.6 | 0.83±1.2 | 0.88±1.0 | 0.88±1.1 |

| Both | 0.89±0.8 | 0.87±1.6 | 0.82±1.3 | 0.87±1.5 | 0.89±0.8 | 0.92±0.7 | 0.92±0.7 |

GEOME: Geometrical features.

4. CONCLUSIONS

In this paper, we proposed a transfer learning-based method for CAKUT diagnosis based on US kidney images. The classification experiments for distinguishing CAKUT patients from normal controls based on their kidney US images have demonstrated that integrating the transfer learning features and conventional image features could improve the classification of US kidney images.

Acknowledgments

This work was supported in part by the National Key Basic Research and Development Program of China [2015CB856404]; the National Natural Science Foundation of China [61473296]; the Promotive Research Fund for Excellent Young and Middle-Aged Scientists of Shandong Province [BS2014DX012]; China Postdoctoral Science Foundation [2015M581203]; the International Postdoctoral Exchange Fellowship Program [20160032]; and National Institutes of Health grants [EB022573, MH107703, DK114786, DA039215, and DA039002].

References

- 1.National Institute of Diabetes and Digestive and Kidney Diseases. United States Renal Data System 2010 Annual Data Report. Washington, D.C: 2010. [Google Scholar]

- 2.Meola M, et al. Imaging in Chronic Kidney Disease. Contrib Nephrol. 2016;188:69–80. doi: 10.1159/000445469. [DOI] [PubMed] [Google Scholar]

- 3.Lin HYH, et al. Association of Renal Elasticity and Renal Function Progression in Patients with Chronic Kidney Disease Evaluated by Real-Time Ultrasound Elastography. Scientific Reports. 2017 Feb 27;7 doi: 10.1038/srep43303. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Kline TL, et al. Image texture features predict renal function decline in patients with autosomal dominant polycystic kidney disease. Kidney International. 2017 doi: 10.1016/j.kint.2017.03.026. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Sharma K, Virmani J. A dicision support system for classification of normal and medical renal disease using ultrasound images: A decision support system for medical renal diseases. International Journal of Ambient Computing and Intelligence. 2017;8:52–69. [Google Scholar]

- 6.Abou-Chadi FEZ, Mekky N. Classification of ultrasound kidney images using PCA and neural networks. International Journal of Advanced Computer Science and Applications. 2015;6:53–57. [Google Scholar]

- 7.Bama S, Selvathi D. Discrete tchebichef moment based machine learning method for the classification of disorder with ultrasound kidney images. Biomedical Research. 2016:s223–s229. [Google Scholar]

- 8.Subramanya MB, et al. SVM-based CAC system for B-mode kidney ultrasound images. Journal of Digital Imaging. 2015;28:448–458. doi: 10.1007/s10278-014-9754-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Esteva A, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–118. doi: 10.1038/nature21056. 02/02/print. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Gulshan V, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316:2402–2410. doi: 10.1001/jama.2016.17216. [DOI] [PubMed] [Google Scholar]

- 11.Li H, et al. Deep Ordinal Ranking for Multi-Category Diagnosis of Alzheimer’s Disease using Hippocampal MRI data. 2017 Sep 05;2017 arXiv:1709.01599. [Google Scholar]

- 12.Goodfellow I, et al. Deep Learning. MIT Press; 2016. [Google Scholar]

- 13.Pratt LY. Discriminability-based transfer between neural networks. NIPS Conference: Advances in Neural Information Processing Systems; 1993. pp. 204–211. [Google Scholar]

- 14.Shin H, et al. Deep convolutional neural networks for computer aided detection-CNN atrchitectures-dataset characteristics and transfer learning. IEEE Transactions on Medical Imaging. 2016;35:1285–1298. doi: 10.1109/TMI.2016.2528162. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Weiss K, et al. A survey of transfer learning. Journal of Big Data. 2016;3(9):1–40. [Google Scholar]

- 16.Pan SJ, Yang Q. A survey on transfer learning. IEEE Transactions on Knowledge and Data Engineering. 2010;22:1345–1359. [Google Scholar]

- 17.Cheng PM, Malhi HS. Transfer learning with convolutional neural networks for classification of abdominal ultrasound images. Journal of Digital Imaging. 2017;30:234–243. doi: 10.1007/s10278-016-9929-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Ciompi F, et al. Automatic classification of pulmonary peri-fissural nodules in computed tomography using an ensemble of 2D views and a convolutional neural network out of the box. Medical Image Analysis. 2015;26:195–202. doi: 10.1016/j.media.2015.08.001. [DOI] [PubMed] [Google Scholar]

- 19.Bar Y, et al. Chest pathology detection using deep learning with non-medical training. presented at the In Processings of IEEE 12th ISBI; New York, NY, USA. 2015. [Google Scholar]

- 20.Krizhevsky A, et al. ImageNet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems 25 (NIPS 2012) 2012:1097–1105. [Google Scholar]

- 21.Zheng Q, et al. A dynamic graph-cuts method with integrated multiple feature maps for segmenting kidneys in ultrasound images. 2017 doi: 10.1016/j.acra.2018.01.004. arXiv:1706.03372. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Vedaldi A, Fulkerson B. VLFeat: An open and portable library of computer vision algorithms. presented at the in Proceedings of the 18th Annual ACM International Conference on Multimedia; Firenze, Italy. 2010. [Google Scholar]

- 23.Felzenszwalb PF, Huttenlocher DP. Techinical Report. Cornell University; 2004. Distance transforms of sampled functions. [Google Scholar]

- 24.Fan RE, et al. LIBLINEAR: A library for large linear classification. Journal of Machine Learning Research. 2008;9:1871–1874. [Google Scholar]