Abstract

In humans and monkeys, face perception activates a distributed cortical network that includes extrastriate, limbic, and prefrontal regions. Within face-responsive regions, emotional faces evoke stronger responses than neutral faces (“valence effect”). We used fMRI and Dynamic Causal Modeling (DCM) to test the hypothesis that emotional faces differentially alter the functional coupling among face-responsive regions. Three monkeys viewed conspecific faces with neutral, threatening, fearful, and appeasing expressions. Using Bayesian model selection, various models of neural interactions between the posterior (TEO) and anterior (TE) portions of inferior temporal (IT) cortex, the amygdala, the orbitofrontal (OFC), and ventrolateral prefrontal cortex (VLPFC) were tested. The valence effect was mediated by feedback connections from the amygdala to TE and TEO, and feedback connections from VLPFC to the amygdala and TE. Emotional faces were associated with differential effective connectivity: Fearful faces evoked stronger modulations in the connections from the amygdala to TE and TEO; threatening faces evoked weaker modulations in the connections from the amygdala and VLPFC to TE; and appeasing faces evoked weaker modulations in the connection from VLPFC to the amygdala. Our results suggest dynamic alterations in neural coupling during the perception of behaviorally relevant facial expressions that are vital for social communication.

Keywords: connectivity, DCM, emotion, faces, primates

Introduction

Face perception is a highly developed visual skill in human and nonhuman primates. In the human brain, face stimuli elicit activation in a distributed neural system of multiple, bilateral regions that includes extrastriate, limbic, and prefrontal regions (Haxby et al. 2000; Ishai et al. 2005). The “core” regions (inferior occipital gyrus [IOG], fusiform gyrus [FG]) process invariant facial features, whereas the superior temporal sulcus (STS) and the “extended” regions (amygdala, inferior frontal gyrus, insula, and orbitofrontal cortex [OFC]) process changeable aspects of faces (Haxby et al. 2000, 2002; Ishai et al. 2005; Kranz and Ishai 2006). Activation in the face network is modulated by cognitive factors such as expertise, attention, imagery, and emotion (Gauthier et al. 1999; Ishai et al. 2000, 2002, 2004; Vuilleumier et al. 2001). Analysis of effective connectivity, using Dynamic Causal Modeling (DCM, Friston et al. 2003), reveals that the major entry node in the face network is the lateral FG and that the functional coupling between the core and the extended systems is content-dependent (Fairhall and Ishai 2007). Specifically, emotional faces increase the coupling between the FG and the amygdala, whereas attractive faces increase the coupling between the FG and the OFC (Fairhall and Ishai 2007). Furthermore, explicit processing of facial affect increases the effective connectivity from the IOG to ventrolateral prefrontal cortex (VLPFC, Dima et al. 2011), whereas the amygdala strongly influences FG function during face perception, and this influence is modulated by experience and stimulus salience (Herrington et al. 2011). Previous DCM studies of face perception in humans have also shown that effective connectivity between regions is task-specific. 
Viewing faces is associated with an increase in bottom-up, feedforward connectivity from extrastriate face-selective regions to prefrontal cortex, whereas the generation of mental images of faces is associated with an increase in top-down, feedback connectivity from prefrontal to extrastriate regions (Mechelli et al. 2004). Moreover, perceptual decisions about faces result in an increase in top-down connectivity from ventromedial frontal cortex to the FG (Summerfield et al. 2006).

Recent functional magnetic resonance imaging (fMRI) studies in behaving monkeys have revealed activation in multiple face-responsive regions in the temporal lobe (Pinsk et al. 2005; Tsao, Moeller, et al. 2008; Bell et al. 2009), as well as in limbic and prefrontal cortices (Hadj-Bouziane et al. 2008; Tsao, Schweers, et al. 2008). Electrical microstimulation combined with simultaneous fMRI suggests that the face-responsive regions form an interconnected hierarchical network (Moeller et al. 2008). Using fMRI, Hadj-Bouziane and colleagues have shown valence effects in the monkey brain: Enhanced activation to emotional facial expressions was observed in the amygdala and IT cortex (Hadj-Bouziane et al. 2008). Interestingly, in monkeys with amygdala lesions, the valence effect was strongly disrupted within IT cortex, suggesting that the feedback projections from the amygdala to IT cortex mediate the valence effect found there (Hadj-Bouziane et al. 2012). Although in monkeys, as in humans, face perception evokes activations in a widely distributed network of regions, it is currently unknown to what extent effective connectivity in the monkey face network is homologous to the human face network, namely, whether it is task- and stimulus-dependent.

We used fMRI and DCM with Bayesian model selection to test the hypothesis that emotional faces differentially alter the functional coupling among face-responsive regions in the monkey brain. To that end, 3 monkeys viewed neutral, threatening, fearful, and appeasing facial expressions. A model was constructed based on known anatomical connections among areas TEO, TE, the amygdala, VLPFC, and OFC (Webster et al. 1991; Amaral et al. 1992; Saleem et al. 2008, 2014), and various models of effective connectivity between these face-responsive regions were compared. Specifically, we tested whether the valence effect in IT cortex was the result of feedback connections not only from the amygdala but also from VLPFC and OFC. We chose these 2 face-responsive regions in prefrontal cortex due to their role in emotion-related cognitive behavior: Previous studies have shown that VLPFC is sensitive to changes in facial features, expressions, and the angle of gaze (Romanski and Diehl 2011), whereas damage to the OFC is associated with deficits in the recognition of facial expression (Willis et al. 2014).

Experimental Procedures

Subjects and General Procedures

Three male macaque monkeys (Monkeys I, P, and T, Macaca mulatta, 7–9 years, 6–8 kg) were used. All procedures followed the Institute of Laboratory Animal Research (part of the National Research Council of the National Academy of Sciences) guidelines and were approved by the NIMH Animal Care and Use Committee. Each monkey was surgically implanted with an MR-compatible headpost under sterile conditions using isoflurane anesthesia. After recovery, subjects were trained to sit in a plastic restraint chair and fixate a central target for long durations with their heads fixed, facing a screen on which visual stimuli were presented (Hadj-Bouziane et al. 2012; Liu et al. 2013).

Data Acquisition

Before each scanning session, an exogenous contrast agent (monocrystalline iron oxide nanocolloid [MION]) was injected into the femoral or external saphenous vein (10–12 mg/kg) to increase the contrast/noise ratio and to optimize the localization of fMRI signals (Vanduffel et al. 2001; Leite et al. 2002). Imaging data from monkeys P and T were collected in a 3T GE scanner with a surface coil array (8 elements). Twenty-seven 1.5-mm coronal slices (no gap) were acquired using single-shot interleaved gradient-recalled Echo Planar Imaging (EPI) with a sensitivity-encoding sequence (SENSE, acceleration factor 2, Pruessmann 2004). Imaging parameters were as follows: voxel size: 1.5 × 1.5625 × 1.5625 mm, field of view (FOV): 100 × 100 mm, matrix size: 64 × 64, echo time (TE): 17.9 ms, repetition time (TR): 2 s, flip angle: 90°. An anatomical scan was also acquired in the same session to serve as an anatomical reference (Fast Spoiled Gradient Recalled [FSPGR] sequence, voxel size: 1.5 × 0.390625 × 0.390625 mm, FOV: 100 × 100 mm, matrix size: 256 × 256, TE: 2.932 ms, TR: 6.24 ms, flip angle: 12°).

Imaging data from Monkey I were collected in a 4.7T Bruker scanner with a surface coil array (8 elements). Twenty-eight 1.5-mm coronal slices (no gap) were acquired using single-shot interleaved gradient-recalled EPI. Imaging parameters were as follows: voxel size: 1.5 mm isotropic, FOV: 96 × 48 mm, matrix size: 64 × 32, TE: 12.85 ms, TR: 2 s, flip angle: 90°. A low-resolution anatomical scan was also acquired in the same session to serve as an anatomical reference (Modified Driven Equilibrium Fourier Transform [MDEFT] sequence, voxel size: 1.5 × 0.5 × 0.5 mm, FOV: 96 × 48 mm, matrix size: 192 × 96, TE: 3.95 ms, TR: 11.25 ms, flip angle: 12°).
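As a consistency check on the acquisition parameters for both scanners, the in-plane voxel sizes follow from dividing the FOV by the matrix size. The short sketch below only illustrates that arithmetic; the function name is hypothetical and not part of any acquisition software.

```python
def in_plane_voxel_size(fov_mm, matrix):
    """Return in-plane voxel dimensions (mm) as FOV divided by matrix size."""
    return tuple(f / m for f, m in zip(fov_mm, matrix))

# FOV and matrix values are those reported in the text.
epi_3t = in_plane_voxel_size((100, 100), (64, 64))        # 3T functional EPI
fspgr_3t = in_plane_voxel_size((100, 100), (256, 256))    # 3T anatomical FSPGR
epi_47t = in_plane_voxel_size((96, 48), (64, 32))         # 4.7T functional EPI
mdeft_47t = in_plane_voxel_size((96, 48), (192, 96))      # 4.7T anatomical MDEFT
```

The results agree with the voxel sizes stated for each sequence (1.5625, 0.390625, 1.5, and 0.5 mm in plane, respectively).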

Although the fMRI signals from Monkey I, who was scanned in the 4.7T, were slightly larger (especially in TE and TEO) than the fMRI signals from Monkeys P and T, who were scanned in the 3T, there were no significant differences in the results (valence effect and DCM winning model) based on data with or without Monkey I. We report the DCM results for 3 monkeys to increase the statistical power.

To facilitate cortical surface alignments, we acquired high-resolution T1-weighted whole-brain anatomical scans in a 4.7T Bruker scanner with an MDEFT sequence. Imaging parameters were as follows: voxel size: 0.5 × 0.5 × 0.5 mm, TE: 4.9 ms, TR: 13.6 ms, flip angle: 14°.

Experimental Design and Task

All stimuli used in this experiment were identical to the ones previously used (Hadj-Bouziane et al. 2008, 2012). The stimuli were color images of facial expressions displayed by 8 unfamiliar male and female macaque monkeys (frontal view): neutral, fearful (fear grin), threatening (aggressive, open-mouth threat), and appeasing (lip smack) (Fig. 1). We presented these 4 different facial expressions to each monkey in a block design using Presentation® software (version 12.2, www.neurobs.com). Stimuli spanned a visual angle of 11° (maximal horizontal and/or vertical extent) and were presented foveally for 700 ms on a uniform gray background, with a fixation square (0.2° in red) superimposed on each image, followed by a 300-ms blank period. The monkeys were required to maintain fixation on the square superimposed on the stimuli in order to receive a liquid reward. In the reward schedule, the frequency of reward increased as the duration of fixation increased (Hadj-Bouziane et al. 2012; Liu et al. 2013). Eye position was monitored with an infrared pupil tracking system (iView, Inc.).

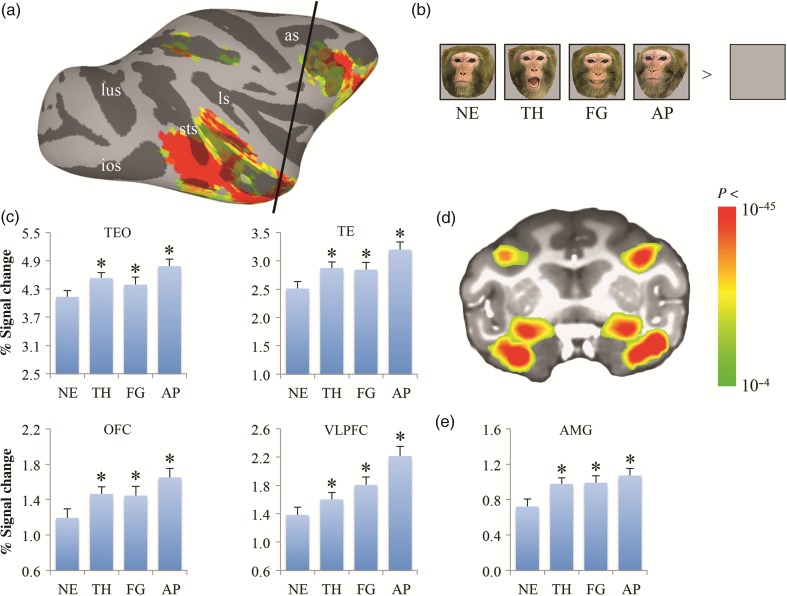

Figure 1.

fMRI responses to neutral and emotional faces. Face-responsive (neutral, threatening, fearful, and appeasing faces > blank screen) activation map is shown on the lateral view of the right hemisphere of the inflated cortex (a) and on one coronal slice through the amygdala (d) of Monkey P (collected on a 3T MR scanner): the location of the coronal slice is marked by the black line on the lateral view. The color bar shows the statistical values of the contrast between faces and a blank screen. Averaged fMRI responses across all 3 subjects to various facial expressions within the selective ROIs are shown in c and e. Examples of monkey facial expression stimuli used in the experiments are shown in b. Asterisks on histograms indicate a significant difference between emotional and neutral faces (*P < 0.001). as, arcuate sulcus; ios, inferior occipital sulcus; ls, lateral sulcus; lus, lunate sulcus; pmts, posterior middle temporal sulcus; STS, superior temporal sulcus; NE: neutral; TH: threat (aggressive); FG: fearful (fear grin); AP: appeasing (lip smack).

Different stimuli from each category of facial expression were presented in blocks of 32 s each, interleaved with 20-s fixation blocks (neutral gray background). Individual runs began and ended with a fixation block, and each categorical block was presented once in each run, in a pseudorandom order. Different pseudorandom sequences were used in each run. The 3 monkeys were scanned in 2 separate sessions with at least 1-month interval, resulting in a total of 42–50 runs per monkey.
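The run structure described above can be sketched as follows. This is a minimal illustration, assuming that a run consists only of the 5 fixation blocks and 4 expression blocks (no additional dummy volumes) and that the order is drawn by a simple shuffle; the text states only that the order was pseudorandom, and the function name is hypothetical.

```python
import random

TR_S = 2.0            # repetition time reported in the text
BLOCK_S = 32.0        # duration of each expression block
FIXATION_S = 20.0     # duration of each fixation block
CONDITIONS = ["neutral", "threatening", "fearful", "appeasing"]

def make_run(seed):
    """Return (label, onset_s, duration_s) tuples for one run.

    Runs start and end with fixation, and each expression block appears
    once. The shuffling scheme is an assumption made for illustration.
    """
    rng = random.Random(seed)
    order = CONDITIONS[:]
    rng.shuffle(order)
    events, t = [], 0.0
    for label in order:
        events.append(("fixation", t, FIXATION_S))
        t += FIXATION_S
        events.append((label, t, BLOCK_S))
        t += BLOCK_S
    events.append(("fixation", t, FIXATION_S))
    return events

run = make_run(seed=0)
run_length_s = run[-1][1] + run[-1][2]   # 5 * 20 s + 4 * 32 s
n_volumes = int(run_length_s / TR_S)     # number of volumes at TR = 2 s
```

Under these assumptions each run lasts 228 s, corresponding to 114 volumes.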

Data Analysis

Preprocessing

Functional data were preprocessed using Analysis of Functional NeuroImages (AFNI) software (Cox 1996). Images were realigned to the mean volume of each session. The data were smoothed with a 2-mm full-width half-maximum Gaussian kernel. Signal intensity was normalized to the mean signal value within each run.

General Linear Model (GLM)

Statistical Parametric Mapping (version SPM8, Wellcome Department of Imaging Neuroscience) was used for GLM analyses.

For each voxel in the present experiment, we performed a single univariate linear model fit to estimate the response amplitude for each condition. The model included a hemodynamic response predictor for each block condition and regressors of no interest (baseline, movement parameters from realignment corrections, and signal drifts [linear as well as quadratic]). A GLM and an MION kernel were used to model the hemodynamic response function.
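The structure of such a voxel-wise model can be sketched in Python with NumPy. This is an illustration rather than the SPM8 implementation: the block onsets and voxel time course are synthetic, movement regressors are left out, and the boxcars are not convolved with the MION kernel because its form is not given here.

```python
import numpy as np

def design_matrix(onsets_s, n_vols, tr=2.0, block_s=32.0):
    """Boxcar regressor per condition plus baseline, linear and quadratic drift."""
    t = np.arange(n_vols) * tr
    cols = [((t >= on) & (t < on + block_s)).astype(float) for on in onsets_s]
    drift = np.linspace(-1.0, 1.0, n_vols)
    cols += [np.ones(n_vols), drift, drift ** 2]
    return np.column_stack(cols)

# Hypothetical block onsets (s) for one run: fixation first, then 4 blocks.
onsets = [20.0, 72.0, 124.0, 176.0]
X = design_matrix(onsets, n_vols=114)

# Synthetic voxel time course with known amplitudes, for illustration only.
rng = np.random.default_rng(0)
true_beta = np.array([1.0, 0.5, 2.0, 1.5, 10.0, 0.3, -0.2])
y = X @ true_beta + 0.01 * rng.standard_normal(114)

# Ordinary least squares estimate of the response amplitude per regressor.
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
```

The first 4 entries of `beta_hat` are the estimated amplitudes for the 4 block conditions; the remaining entries absorb the baseline and drift terms.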

Regions of Interest (ROIs)

For each monkey, face-responsive voxels corresponded to voxels significantly more active for faces (neutral, threatening, fearful, and appeasing) compared with a blank screen (P < 10^−4 uncorrected). In addition, we drew the following anatomical regions from the right hemisphere, as described in the Saleem and Logothetis stereotaxic atlas (Saleem and Logothetis 2012): 1) IT cortex: the posterior portion (area TEO) and the anterior portion (area TE); 2) the amygdala (AMG); 3) the prefrontal cortex (PFC): OFC and VLPFC.

Although there may be regions that do not have significant fluctuations but act as “third region mediators,” including these regions in the DCM analysis is unlikely to affect our connectivity findings, which are predicated on the types of inputs we are interested in assessing, that is, the type of facial expression that the monkeys observed. We therefore followed the standard DCM protocol and included all regions that show significant task modulations under a GLM and mass univariate analysis.

We defined ROIs within these anatomical regions by identifying the peak of the face-responsive activation and then drawing a sphere around the peak. A 3-mm sphere was used for the AMG, OFC, and VLPFC. Due to the larger extent of activation in TEO and TE, a 4-mm sphere was used for these regions. Within these ROIs, we performed an ANOVA across all 3 subjects, testing for the effect of expression (neutral, threatening, fearful, and appeasing), followed by multiple paired t tests. Then, for the DCM analysis, we extracted the signal in response to the different facial expressions from these ROIs using the principal eigenvariate from these voxel collections.
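The principal eigenvariate of an ROI summarizes its voxels by the dominant temporal component of their time courses. A minimal NumPy sketch is given below; the scaling and sign convention loosely follow the one commonly used in SPM, which is an assumption here, and the data are synthetic.

```python
import numpy as np

def principal_eigenvariate(Y):
    """First eigenvariate of a (time x voxels) ROI data matrix.

    Voxel time courses are mean-centred, decomposed by SVD, and the first
    temporal component is scaled by its singular value and sign-aligned
    with the dominant voxel weights.
    """
    Y = Y - Y.mean(axis=0)
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    u, v = U[:, 0], Vt[0]
    sign = np.sign(v.sum()) or 1.0        # resolve the arbitrary SVD sign
    return sign * u * s[0] / np.sqrt(Y.shape[1])

# Synthetic ROI: 20 voxels sharing one signal with different gains plus noise.
rng = np.random.default_rng(0)
signal = np.sin(np.linspace(0, 6 * np.pi, 114))
Y = signal[:, None] * rng.uniform(0.5, 1.5, 20) + 0.1 * rng.standard_normal((114, 20))
eig = principal_eigenvariate(Y)
```

Unlike a plain ROI average, the eigenvariate weights voxels by how strongly they express the shared component, so voxels dominated by noise contribute less.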

Dynamic Causal Modeling

In DCM, connectivity among neuronal states is inferred based on regional hemodynamic responses. This approach, whereby unobservable neuronal interactions are modeled as a system of coupled differential equations, provides a way to test the direction of inputs and their propagation through a brain network. As such, the methodology is a model-based deconstruction of connectivity which relies on expressing different hypotheses through different connectivity architectures (Friston et al. 2003). In animals, there is a growing literature using DCM of electrophysiological signals acquired invasively. Many of these invasive studies have focused on validating this approach using more detailed neural mass modeling. Empirical evidence has been provided in various studies, including an MRI study of vagus nerve stimulation, developmental studies, pharmacological manipulations, attentional paradigms, and issues related to source reconstruction and model comparison (David, Guillemain, et al. 2008; David, Wozniak, et al. 2008; Reyt et al. 2010; Bastos et al. 2015; Moran et al. 2008, 2011, 2015; Papadopoulou et al. 2016). Here, we apply the coarser neural models for hemodynamic outputs, in which neural activity is represented as a single active state, in order to identify the connectivity changes associated with different facial expressions.

Based on fMRI time series extracted from the ROIs described earlier (TEO, TE, AMG, OFC, and VLPFC), DCM analyses were performed using DCM10, as implemented in SPM8 (Friston et al. 2003; Stephan et al. 2004). The regressors from the GLM described earlier were used to mark the onsets of the individual conditions (neutral, threatening, fearful, and appeasing). We further included a separate input vector representing all conditions (ALL). Then, 3 matrices were used to specify the network connectivity for the DCM estimation procedure: 1) Matrix A, which represents the endogenous (fixed) connections among the regions of the model; 2) Matrix B, which represents the modulation of an external input on a fixed connection; and 3) Matrix C, which represents the direct driving input into the system.
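For reference, the roles of Matrices A, B, and C can be read off the standard bilinear neuronal state equation of DCM (Friston et al. 2003). The notation below is the conventional one and is not quoted from the present analysis: z is the vector of neuronal states (one per region), u_j is the j-th experimental input, and B^{(j)} is the modulatory matrix associated with that input.

```latex
\dot{z} = \Bigl( A + \sum_{j} u_j \, B^{(j)} \Bigr) z + C u
```

In this form, A sets the coupling in the absence of input, each B^{(j)} adds an input-dependent change to that coupling, and C determines where inputs drive the network directly.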

Our DCM analysis was performed using a nested procedure. The model specifications were as follows: bilinear modulatory effects, a single state per region, and a deterministic model. First, we investigated condition-dependent connectivity among the selected ROIs to select modulated connections with significant posterior evidence. In other words, all the tested models were manipulated with regard to their modulation (Matrix B) but not endogenous connectivity (Matrix A) or direct inputs (Matrix C). The endogenous connections were specified based on previous findings on anatomical connections among the selected ROIs: area TEO projects to TE, which projects to the amygdala, while feedback projections from the amygdala terminate in area TEO and TE; and bidirectional connections exist between TE and TEO and among TE, the amygdala, OFC, and VLPFC (Webster et al. 1991; Amaral et al. 1992; Saleem et al. 2008, 2014). The regressor for all faces (ALL) provided direct input (C) to TEO (Fig. 2a). The model selection was based on Bayesian model comparison (Penny et al. 2004), where models differed according to where facial expression modulated endogenous connections. Within these particular connection sets, each expression was modeled with separate potential modulatory effects (i.e., distinct B parameters for neutral, fearful, threatening, and appeasing). A fixed-effects model comparison procedure was performed where Bayes factors were used to select the winning model among competing models across all 3 subjects and 2 sessions.
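The logic of a fixed-effects comparison can be sketched as follows: log evidences are summed over data sets (equivalently, Bayes factors are multiplied), and the totals are converted into posterior model probabilities. The sketch assumes a flat prior over models, and the log evidences are made-up numbers, not values from this study.

```python
import math

def fixed_effects_posterior(log_evidence):
    """Posterior model probabilities under a fixed-effects comparison.

    log_evidence[d][m] is the log evidence of model m for data set d
    (for example one subject and session). Log evidences are summed over
    data sets and normalised with a numerically stable softmax.
    """
    n_models = len(log_evidence[0])
    total = [sum(row[m] for row in log_evidence) for m in range(n_models)]
    peak = max(total)
    weights = [math.exp(v - peak) for v in total]
    norm = sum(weights)
    return [w / norm for w in weights]

# Made-up log evidences for 3 models and 2 data sets, for illustration only.
example = [[-1010.0, -1002.0, -1008.0],
           [-980.0, -975.0, -979.0]]
posterior = fixed_effects_posterior(example)
```

Because evidence accumulates across data sets, modest and consistent differences in log evidence quickly yield posterior probabilities close to 1 for the favoured model.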

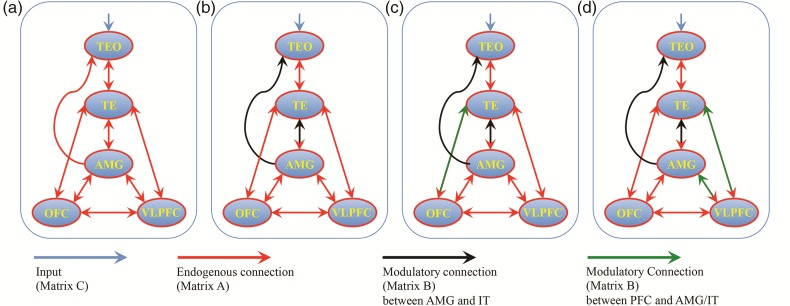

Figure 2.

(a) The endogenous connections among the selected ROIs. (b) Winning model for modulations evoked by facial expressions on the connections between the amygdala and inferior temporal (IT) cortex (TEO and TE). (c) Winning model for modulations evoked by facial expressions on the connections between OFC, the amygdala, and IT cortex. (d) Winning model for modulations evoked by facial expressions on the connections between VLPFC, the amygdala, and IT cortex. Red lines show the endogenous, known anatomical connections (Matrix A). Black lines show the modulatory effects evoked by emotional facial expressions between the amygdala and IT cortex (Matrix B). Green lines show the modulatory effects on connections among OFC, VLPFC, the amygdala, and IT cortex evoked by facial expression. Blue lines show the input into the system (Matrix C).

Our model comparison first focused on testing connection modulations between the amygdala and IT cortex (TEO and TE) by facial expression. This first stage was designed to directly compare the results of the DCM analysis with that of our previous study in monkeys with amygdala lesions, which showed that the feedback projections from the amygdala to IT cortex mediate the valence effect (Hadj-Bouziane et al. 2012). This strategy was also employed for computational expediency. Seven models were calculated and then compared (Supplementary Fig. 1): feedforward models (model #1: TEO → TE, TE → AMG); feedback models (model #2: AMG → TE, TE → TEO; model #3: AMG → TE, AMG → TEO; model #4: AMG → TE, TE → TEO, AMG → TEO); and both feedforward and feedback models (model #5: model #1 + model #2; model #6: model #1 + model #3; model #7: model #1 + model #4).
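The seven first-stage models can be written down as sets of modulated connections, which makes the composition of models #5 to #7 explicit. The representation as (source, target) pairs and the function name are illustrative choices, not part of the DCM software.

```python
FEEDFORWARD = {("TEO", "TE"), ("TE", "AMG")}                      # model 1
FEEDBACK = {
    2: {("AMG", "TE"), ("TE", "TEO")},
    3: {("AMG", "TE"), ("AMG", "TEO")},
    4: {("AMG", "TE"), ("TE", "TEO"), ("AMG", "TEO")},
}

def build_stage_one_models():
    """Return the 7 candidate sets of modulated (source, target) connections."""
    models = {1: set(FEEDFORWARD)}
    models.update({k: set(v) for k, v in FEEDBACK.items()})
    # Models 5-7 combine the feedforward set with feedback models 2-4.
    for k, v in FEEDBACK.items():
        models[k + 3] = FEEDFORWARD | v
    return models

stage_one = build_stage_one_models()
```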

Based on the winning model from the above selection (1a), we allowed for modulations along the connections that were identified there and, in addition, explored further modulatory connections among OFC, the amygdala, and TE induced by facial expression. All the possible models (4 fixed connections [OFC → AMG, AMG → OFC, OFC → TE, TE → OFC], resulting in 2^4 = 16 possible modulations) were calculated and then compared (Supplementary Fig. 2). The same process was conducted for connections among VLPFC, the amygdala, and TE.
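The count of 16 follows from switching modulation on or off independently for each of the 4 connections. A short sketch of that enumeration is given below; the string labels are illustrative, and the enumeration necessarily includes the pattern with no additional modulation.

```python
from itertools import product

CONNECTIONS = ["OFC->AMG", "AMG->OFC", "OFC->TE", "TE->OFC"]

def enumerate_models(connections):
    """Every on/off pattern of modulation over the given connections."""
    return [
        tuple(c for c, on in zip(connections, mask) if on)
        for mask in product([False, True], repeat=len(connections))
    ]

models = enumerate_models(CONNECTIONS)   # 2**4 candidate modulation patterns
```

The same function applies to the VLPFC connection set by substituting the corresponding 4 connection labels.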

Then, based on the above winning models (1b), we finally explored an expanded modulatory connection set between OFC and VLPFC. All the possible models were calculated and then compared (2 fixed connections [OFC → VLPFC, VLPFC → OFC] and 2 models [one among OFC, AMG, and TE; another one among VLPFC, AMG, and TE], resulting in 2^(2 + 2) = 16 possible modulations [see Supplementary Fig. 2]).

Using the final model with optimized modulatory effects, we analyzed the connectivity parameters and their modulation by different facial expressions. We used the Bayesian parameter averages and their cumulative normal distribution to compare posterior expectations to a group prior of zero, thereby assessing significant connection modulations induced by different facial expressions across all subjects and sessions.
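The idea behind Bayesian parameter averaging can be illustrated for a single parameter with Gaussian posteriors. The sketch below is a simplified scalar form that omits the correction for the shared prior used in full implementations, and the input values are made up for illustration.

```python
import math

def bayesian_parameter_average(means, variances):
    """Precision-weighted average of independent Gaussian posteriors.

    Simplified scalar form: the correction for the shared prior is
    deliberately left out.
    """
    precisions = [1.0 / v for v in variances]
    total_precision = sum(precisions)
    mean = sum(p * m for p, m in zip(precisions, means)) / total_precision
    return mean, 1.0 / total_precision

def prob_greater_than_zero(mean, variance):
    """P(parameter > 0) under a Gaussian posterior, via the normal CDF."""
    return 0.5 * (1.0 + math.erf(mean / math.sqrt(2.0 * variance)))

# Made-up posterior estimates of one modulatory parameter from 3 data sets.
mean, var = bayesian_parameter_average([0.30, 0.20, 0.40], [0.04, 0.02, 0.04])
p_positive = prob_greater_than_zero(mean, var)
```

Estimates with smaller posterior variance carry more weight in the average, and the cumulative normal distribution then gives the probability that the averaged modulation differs in sign from a prior expectation of zero.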

Results

Responses to Neutral and Emotional Faces

Using the contrast of all faces versus a blank screen, we found that face-responsive voxels were widely distributed bilaterally across the lower bank of the STS and inferior temporal gyrus posteriorly and anteriorly (areas TEO and TE), within the dorsal portion of the lateral and basal nuclei of the amygdala, and within PFC, including OFC and VLPFC in all 3 subjects (Fig. 1a,d).

We performed an ANOVA with a factor of expression (neutral, threatening, fearful, and appeasing), followed by post hoc analyses. We evaluated responses to various facial expressions by contrasting each category of emotional faces (threatening, fearful, and appeasing) with neutral faces. In all face-responsive ROIs, we found enhanced responses to fearful and appeasing faces (P < 0.001). The response to appeasing faces was stronger than the response to threatening faces (P < 0.005) (Fig. 1c,e).

Model Comparison and Selection

First, we compared the 7 models with various feedback and feedforward modulations of connections between the amygdala and IT cortex (Supplementary Fig. 1). The feedback model (AMG → TE, AMG → TEO) was superior to the other models with a posterior probability (namely the probability that a model provides the best explanation for the measured data across subjects, see Penny et al. 2004) of >99% across all 3 subjects (Fig. 2b). Then, based on this winning model, for connections among OFC, the amygdala and TE, the feedback model (OFC → TE) was superior to the other 15 models with a posterior probability of >99% (Fig. 2c), whereas for connections among VLPFC, the amygdala, and TE, the feedback model (VLPFC → TE, VLPFC → AMG) was superior to the other 15 models with a posterior probability of >99% (Fig. 2d). Finally, based on winning models for connections among OFC/VLPFC, the amygdala, and TE, we compared 16 models for modulations of connections between OFC/VLPFC (Supplementary Fig. 2). We found that there was no significant modulation on connections between OFC and VLPFC. The feedback model (VLPFC → TE, VLPFC → AMG) was superior to the other models with a posterior probability of >99% (Fig. 2d).

Modulation of Connectivity by Different Facial Expressions

Based on the final winning model (Fig. 2d), we compared modulations in these connections (AMG → TEO, AMG → TE, VLPFC → TE, and VLPFC → AMG) by different facial expressions (Fig. 3). All 4 facial expressions evoked significant positive modulations in connections of AMG → TE, VLPFC → TE, and VLPFC → AMG but negative modulations in the connection of AMG → TEO. Positive modulations by all 4 facial expressions in the connections AMG → TE and VLPFC → TE were stronger than those in the connection of VLPFC → AMG (P < 0.001). Fearful faces evoked greater positive modulation in the connection of AMG → TE as compared with the connection of VLPFC → TE (P < 0.001).

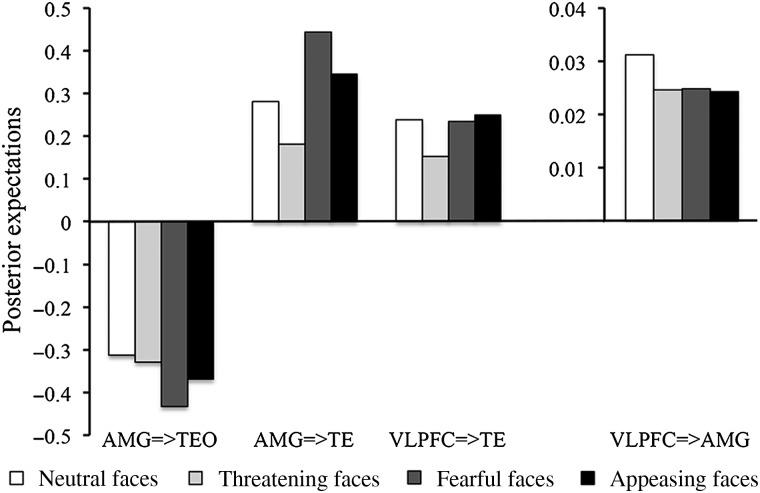

Figure 3.

Posterior expectations of effective connectivity evoked by various facial expressions.

Compared with neutral faces, threatening faces evoked significantly smaller positive modulations in connections of AMG → TE (P < 0.001) and VLPFC → TE (P < 0.001); fearful faces evoked significantly greater positive modulations in the connection of AMG → TE (P < 0.001) and greater negative modulations in the connection of AMG → TEO (P < 0.001); appeasing faces evoked significantly smaller positive modulations in the connection of VLPFC → AMG (P = 0.049). The comparisons of fearful versus neutral and threatening versus neutral were not significant (P = 0.072 and P = 0.062, respectively).

Discussion

In this study, we employed conventional SPM with DCM analyses to investigate the functional connections between regions of the neural network that mediates face perception in the monkey brain. To our knowledge, this is the first use of DCM and Bayesian model selection to explore effective connectivity between extrastriate, limbic, and prefrontal face-responsive regions in the macaque brain.

Consistent with previous findings (Hadj-Bouziane et al. 2008, 2012), we found valence effects, namely enhanced responses to emotional as compared with neutral faces, in all face-responsive ROIs (Fig. 1). Using a model (Fig. 2a) based on known anatomical connections among areas TEO, TE, the amygdala, VLPFC, and OFC (Webster et al. 1991; Amaral et al. 1992; Saleem et al. 2008, 2014), we compared the effect of viewing facial expressions on various feedforward and feedback connections among these regions. We found that the winning model for modulations evoked by facial expressions on the connections between the amygdala and IT cortex was the model with feedback connections from the amygdala to both TE and TEO (Fig. 2b). Moreover, the winning model for modulations evoked by facial expressions on the connections between OFC, VLPFC, the amygdala, and IT cortex was the model with feedback connections from the amygdala to TE and TEO, as well as feedback connections from VLPFC to the amygdala and TE (Fig. 2d).

Lesion studies in both humans and monkeys have demonstrated that the valence effects evoked by emotional faces in temporal cortex reflect feedback signals generated in the amygdala (Vuilleumier et al. 2004; Hadj-Bouziane et al. 2012). In patients with lesions encompassing the amygdala, valence effects in temporal cortex are disrupted (Vuilleumier et al. 2004). In monkeys with amygdala lesions, valence effects within IT cortex are also strongly disrupted, whereas responses to neutral faces are unaffected (Hadj-Bouziane et al. 2012). Consistent with these findings, we found that the neural coupling between the amygdala and IT cortex was strongly modulated by fearful faces, which elicited stronger activations compared with neutral and appeasing faces. Moreover, we found that facial expressions differentially modulated the connections from VLPFC to the amygdala and IT cortex. Previous studies have found anatomical connections between VLPFC and both the amygdala and TE (Webster et al. 1991; Amaral et al. 1992; Saleem et al. 2008, 2014), and context-specific changes in functional connectivity between VLPFC and IT cortex (Liu et al. 2015). Our DCM analysis provides new information about the direction of these changes in connectivity, namely, feedback connections, which indicate top-down modulation of the valence effects from the amygdala and VLPFC.

We also found stimulus-dependent effective connectivity among IT cortex, the amygdala, and VLPFC during the perception of emotional faces. Neutral and emotional faces had a negative modulation on the connection from the amygdala to TEO, but positive modulation on the connections from VLPFC to the amygdala and from the amygdala and VLPFC to TE (Fig. 3). Compared with neutral faces, emotional faces were associated with differential modulations of the effective connectivity in the face network: Fearful faces evoked stronger modulations in the connections from the amygdala to TE and TEO; threatening faces evoked weaker modulations in the connections from the amygdala and VLPFC to TE; and appeasing faces evoked weaker modulations in the connection from VLPFC to the amygdala.

It is of interest that fearful and appeasing faces evoked stronger valence effects (Fig. 1), and stronger modulation of neural coupling (Fig. 3) than neutral and threatening faces. It has been suggested that the neural responses to aggressive faces (open-mouth threat) may depend on the animal's rank in the social hierarchy, whereas enhanced responses to fearful faces, which provide information about the presence of danger but not its source, reflect greater attentional engagement to select the most appropriate behavioral response (Whalen 2007; Hadj-Bouziane et al. 2008). In contrast, a recent study has shown that intranasal administration of oxytocin in monkeys reduced the activity in face-responsive ROIs to fearful and threatening faces, but not to neutral or appeasing faces, suggesting a selective effect of oxytocin on the perception of negative, but not positive, facial expressions (Liu et al. 2015). Our findings, in terms of both the differential valence effects and the differential modulation of the effective connectivity, suggest a modulation in macaques based not on the dichotomy of positive (neutral and appeasing) versus negative (fearful and threatening) facial expressions, but rather on the classification of facial expression along 3 axes: dominance (threatening), avoidance (fearful), and affiliation (appeasing) (Hadj-Bouziane et al. 2008). Thus, our study provides empirical evidence for dynamic alterations in neural coupling during the perception of behaviorally relevant facial expressions that are vital for social communication and interaction.

DCM studies of face perception in the human brain suggest differential modulation of neural coupling by emotion, particularly among the FG, amygdala, and prefrontal cortex (Fairhall and Ishai 2007; Dima et al. 2011; Herrington et al. 2011). Our current results suggest homologies between monkeys and humans in the neural circuits mediating the response to emotional faces. In both species, valence effects, namely enhanced responses to emotional facial expressions, are modulated by feedback connections from the amygdala (Vuilleumier et al. 2004; Hadj-Bouziane et al. 2012), and the neural coupling between extrastriate, amygdala, and prefrontal face-responsive regions is modulated by emotion (Fairhall and Ishai 2007; Dima et al. 2011). The differences in the patterns of neural coupling between the 2 species, particularly in regard to the lack of OFC modulation on the connections to the amygdala and IT cortex in monkeys, can likely be attributed to technical limitations such as the low signal-to-noise ratio in OFC, as well as the face stimuli and tasks used. Alternatively, the lack of OFC involvement could reflect species differences and/or task demands (for the role of OFC in the human face network, see Kranz and Ishai 2006; Ishai 2007). Future studies in which similar experimental conditions will be used in humans and monkeys will determine the extent to which primates share evolutionarily conserved neural circuits for face perception.

In sum, our study provides new information about the modulation of connectivity in the monkey face network as a function of facial expression. We believe this new information will be of interest to both human researchers and monkey researchers, as novel predictions about relevant facial expressions that are vital for social communication can be derived and experimentally tested and compared in humans and monkeys.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/.

Funding

Ning Liu and Leslie G. Ungerleider were supported by the National Institute of Mental Health Intramural Research Program. Alumit Ishai was supported by the Swiss National Center for Competence in Research: Neural Plasticity and Repair, and by the Commission on Gender Equality of Zurich University.

Notes

We thank Karl J. Friston and Will Penny for their guidance in the DCM analyses, David C. Ide for technical assistance, Katalin M. Gothard for providing the original monkey facial expression images, K. Saleem for helpful discussion about anatomical connections in the monkey brain, and Martin Wiener for reading the manuscript. Conflict of Interest: None declared.

References

- Amaral DG, Price JL, Pitkänen A, Carmichael ST. 1992. Anatomical organization of the primate amygdaloid complex. In: Aggleton JP, editor. The Amygdala: Neurobiological Aspects of Emotion, Memory, and Mental Dysfunction. New York: Wiley-Liss; p. 1–66.

- Bastos AM, Litvak V, Moran R, Bosman CA, Fries P, Friston KJ. 2015. A DCM study of spectral asymmetries in feedforward and feedback connections between visual areas V1 and V4 in the monkey. NeuroImage. 108:460–475.

- Bell AH, Hadj-Bouziane F, Frihauf JB, Tootell RB, Ungerleider LG. 2009. Object representations in the temporal cortex of monkeys and humans as revealed by functional magnetic resonance imaging. J Neurophysiol. 101:688–700.

- Cox RW. 1996. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 29:162–173.

- David O, Guillemain I, Saillet S, Reyt S, Deransart C, Segebarth C, Depaulis A. 2008. Identifying neural drivers with functional MRI: an electrophysiological validation. PLoS Biol. 6:2683–2697.

- David O, Wozniak A, Minotti L, Kahane P. 2008. Preictal short-term plasticity induced by intracerebral 1 Hz stimulation. NeuroImage. 39:1633–1646.

- Dima D, Stephan KE, Roiser JP, Friston KJ, Frangou S. 2011. Effective connectivity during processing of facial affect: evidence for multiple parallel pathways. J Neurosci. 31:14378–14385.

- Fairhall SL, Ishai A. 2007. Effective connectivity within the distributed cortical network for face perception. Cereb Cortex. 17:2400–2406.

- Friston KJ, Harrison L, Penny W. 2003. Dynamic causal modelling. NeuroImage. 19:1273–1302.

- Gauthier I, Tarr MJ, Anderson AW, Skudlarski P, Gore JC. 1999. Activation of the middle fusiform ‘face area’ increases with expertise in recognizing novel objects. Nat Neurosci. 2:568–573.

- Hadj-Bouziane F, Bell AH, Knusten TA, Ungerleider LG, Tootell RB. 2008. Perception of emotional expressions is independent of face selectivity in monkey inferior temporal cortex. Proc Natl Acad Sci USA. 105:5591–5596.

- Hadj-Bouziane F, Liu N, Bell AH, Gothard KM, Luh WM, Tootell RB, Murray EA, Ungerleider LG. 2012. Amygdala lesions disrupt modulation of functional MRI activity evoked by facial expression in the monkey inferior temporal cortex. Proc Natl Acad Sci USA. 109:E3640–E3648.

- Haxby JV, Hoffman EA, Gobbini MI. 2000. The distributed human neural system for face perception. Trends Cogn Sci. 4:223–233.

- Haxby JV, Hoffman EA, Gobbini MI. 2002. Human neural systems for face recognition and social communication. Biol Psychiatry. 51:59–67.

- Herrington JD, Taylor JM, Grupe DW, Curby KM, Schultz RT. 2011. Bidirectional communication between amygdala and fusiform gyrus during facial recognition. NeuroImage. 56:2348–2355.

- Ishai A. 2007. Sex, beauty and the orbitofrontal cortex. Int J Psychophysiol. 63:181–185.

- Ishai A, Haxby JV, Ungerleider LG. 2002. Visual imagery of famous faces: effects of memory and attention revealed by fMRI. NeuroImage. 17:1729–1741.

- Ishai A, Pessoa L, Bikle PC, Ungerleider LG. 2004. Repetition suppression of faces is modulated by emotion. Proc Natl Acad Sci USA. 101:9827–9832.

- Ishai A, Schmidt CF, Boesiger P. 2005. Face perception is mediated by a distributed cortical network. Brain Res Bull. 67:87–93.

- Ishai A, Ungerleider LG, Haxby JV. 2000. Distributed neural systems for the generation of visual images. Neuron. 28:979–990.

- Kranz F, Ishai A. 2006. Face perception is modulated by sexual preference. Curr Biol. 16:63–68.

- Leite FP, Tsao D, Vanduffel W, Fize D, Sasaki Y, Wald LL, Dale AM, Kwong KK, Orban GA, Rosen BR, et al. 2002. Repeated fMRI using iron oxide contrast agent in awake, behaving macaques at 3 Tesla. NeuroImage. 16:283–294.

- Liu N, Hadj-Bouziane F, Jones KB, Turchi JN, Averbeck BB, Ungerleider LG. 2015. Oxytocin modulates fMRI responses to facial expression in macaques. Proc Natl Acad Sci USA. 112:E3123–E3130.

- Liu N, Kriegeskorte N, Mur M, Hadj-Bouziane F, Luh WM, Tootell RB, Ungerleider LG. 2013. Intrinsic structure of visual exemplar and category representations in macaque brain. J Neurosci. 33:11346–11360.

- Mechelli A, Price CJ, Friston KJ, Ishai A. 2004. Where bottom-up meets top-down: neuronal interactions during perception and imagery. Cereb Cortex. 14:1256–1265.

- Moeller S, Freiwald WA, Tsao DY. 2008. Patches with links: a unified system for processing faces in the macaque temporal lobe. Science. 320:1355–1359.

- Moran RJ, Jones MW, Blockeel AJ, Adams RA, Stephan KE, Friston KJ. 2015. Losing control under ketamine: suppressed cortico-hippocampal drive following acute ketamine in rats. Neuropsychopharmacol. 40:268–277.

- Moran RJ, Jung F, Kumagai T, Endepols H, Graf R, Dolan RJ, Friston KJ, Stephan KE, Tittgemeyer M. 2011. Dynamic causal models and physiological inference: a validation study using isoflurane anaesthesia in rodents. PLoS One. 6:e22790.

- Moran RJ, Stephan KE, Kiebel SJ, Rombach N, O'Connor WT, Murphy KJ, Reilly RB, Friston KJ. 2008. Bayesian estimation of synaptic physiology from the spectral responses of neural masses. NeuroImage. 42:272–284.

- Papadopoulou M, Friston K, Marinazzo D. 2016. Estimating directed connectivity from cortical recordings and reconstructed sources. Brain Topography. (forthcoming)

- Penny WD, Stephan KE, Mechelli A, Friston KJ. 2004. Comparing dynamic causal models. NeuroImage. 22:1157–1172.

- Pinsk MA, DeSimone K, Moore T, Gross CG, Kastner S. 2005. Representations of faces and body parts in macaque temporal cortex: a functional MRI study. Proc Natl Acad Sci USA. 102:6996–7001.

- Pruessmann KP. 2004. Parallel imaging at high field strength: synergies and joint potential. Top Magn Reson Imaging. 15:237–244.

- Reyt S, Picq C, Sinniger V, Clarencon D, Bonaz B, David O. 2010. Dynamic causal modelling and physiological confounds: a functional MRI study of Vagus nerve stimulation. NeuroImage. 52:1456–1464.

- Romanski LM, Diehl MM. 2011. Neurons responsive to face-view in the primate ventrolateral prefrontal cortex. Neuroscience. 189:223–235.

- Saleem KS, Kondo H, Price JL. 2008. Complementary circuits connecting the orbital and medial prefrontal networks with the temporal, insular, and opercular cortex in the macaque monkey. J Compar Neurol. 506:659–693.

- Saleem KS, Logothetis NK. 2012. A Combined MRI and Histology Atlas of the Rhesus Monkey Brain in Stereotaxic Coordinates. 2nd ed. Academic Press.

- Saleem KS, Miller B, Price JL. 2014. Subdivisions and connectional networks of the lateral prefrontal cortex in the macaque monkey. J Compar Neurol. 522:1641–1690.

- Stephan KE, Harrison LM, Penny WD, Friston KJ. 2004. Biophysical models of fMRI responses. Curr Opin Neurobiol. 14:629–635.

- Summerfield C, Egner T, Greene M, Koechlin E, Mangels J, Hirsch J. 2006. Predictive codes for forthcoming perception in the frontal cortex. Science. 314:1311–1314.

- Tsao DY, Moeller S, Freiwald WA. 2008. Comparing face patch systems in macaques and humans. Proc Natl Acad Sci USA. 105:19514–19519.

- Tsao DY, Schweers N, Moeller S, Freiwald WA. 2008. Patches of face-selective cortex in the macaque frontal lobe. Nat Neurosci. 11:877–879.

- Vanduffel W, Fize D, Mandeville JB, Nelissen K, Van Hecke P, Rosen BR, Tootell RB, Orban GA. 2001. Visual motion processing investigated using contrast agent-enhanced fMRI in awake behaving monkeys. Neuron. 32:565–577.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. 2001. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 30:829–841.

- Vuilleumier P, Richardson MP, Armony JL, Driver J, Dolan RJ. 2004. Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nat Neurosci. 7:1271–1278.

- Webster MJ, Ungerleider LG, Bachevalier J. 1991. Connections of inferior temporal areas TE and TEO with medial temporal-lobe structures in infant and adult monkeys. J Neurosci. 11:1095–1116.

- Whalen PJ. 2007. The uncertainty of it all. Trends Cogn Sci. 11:499–500.

- Willis ML, Palermo R, McGrillen K, Miller L. 2014. The nature of facial expression recognition deficits following orbitofrontal cortex damage. Neuropsychology. 28:613–623.
