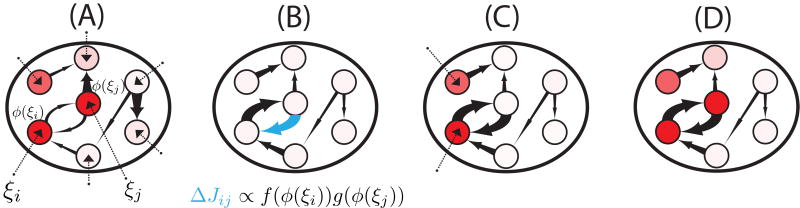

Figure 1.

Learning and retrieval in recurrent neural networks with unsupervised Hebbian learning rules. (A) When a novel pattern is presented to the network, the synaptic inputs to the neurons (ξ_l, for neurons l = 1, …, N) are drawn randomly and independently from a Gaussian distribution. Synaptic inputs elicit firing rates through the static transfer function, i.e., ϕ(ξ_l). Some neurons respond strongly (red circles), others weakly (white circles). (B) The firing rate pattern produced by the synaptic input currents modifies the network connectivity according to an unsupervised Hebbian learning rule. Connection strength is represented by the thickness of the corresponding arrow (the thicker the arrow, the stronger the connection). (C) After learning, a pattern of synaptic inputs that is correlated with, but not identical to, the stored pattern is presented to the network. (D) Following the presentation, the network settles into an attractor state that strongly overlaps with the stored pattern (compare with panel A), indicating retrieval of the corresponding memory.
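The four panels can be illustrated with a minimal numerical sketch. It is not the specific model behind the figure: the sigmoidal transfer function `phi`, the covariance-style Hebbian update built from mean-subtracted rates, the learning strength `A`, the cue correlation `rho`, and the Euler relaxation are all assumptions chosen for illustration, and only a single pattern is stored.

```python
import numpy as np

def phi(x):
    """Static transfer function (assumed sigmoidal): input current -> rate in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))

def overlap(a, b):
    """Pearson correlation between two firing-rate patterns."""
    return float(np.corrcoef(a, b)[0, 1])

rng = np.random.default_rng(0)
N = 1000      # number of neurons
A = 400.0     # learning strength (assumed value)
rho = 0.5     # correlation between cue inputs and stored inputs (assumed value)

# (A) Novel pattern: i.i.d. Gaussian synaptic inputs, mapped to firing rates.
xi = rng.standard_normal(N)
r_stored = phi(xi)

# (B) Hebbian update (assumed form): product of mean-subtracted
#     post- and presynaptic rates, scaled by A / N.
dr = r_stored - r_stored.mean()
J = (A / N) * np.outer(dr, dr)

# (C) Cue: inputs correlated with, but not identical to, the stored inputs.
xi_cue = rho * xi + np.sqrt(1.0 - rho**2) * rng.standard_normal(N)
r = phi(xi_cue)
overlap_cue = overlap(r, r_stored)

# (D) Remove the cue and let the network relax under
#     tau * dr/dt = -r + phi(J r), integrated with Euler steps.
dt_over_tau = 0.1
for _ in range(300):
    r += dt_over_tau * (-r + phi(J @ r))
overlap_retrieved = overlap(r, r_stored)

print(f"overlap with stored pattern: cue {overlap_cue:.2f}, "
      f"after relaxation {overlap_retrieved:.2f}")
```

Under these assumptions the overlap with the stored pattern should rise from roughly the cue correlation to a clearly higher value once the recurrent dynamics settle, which is the qualitative behavior shown in panel D. With a much smaller `A` the recurrent feedback in this sketch becomes too weak to sustain the state and the activity relaxes back to a uniform rate.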