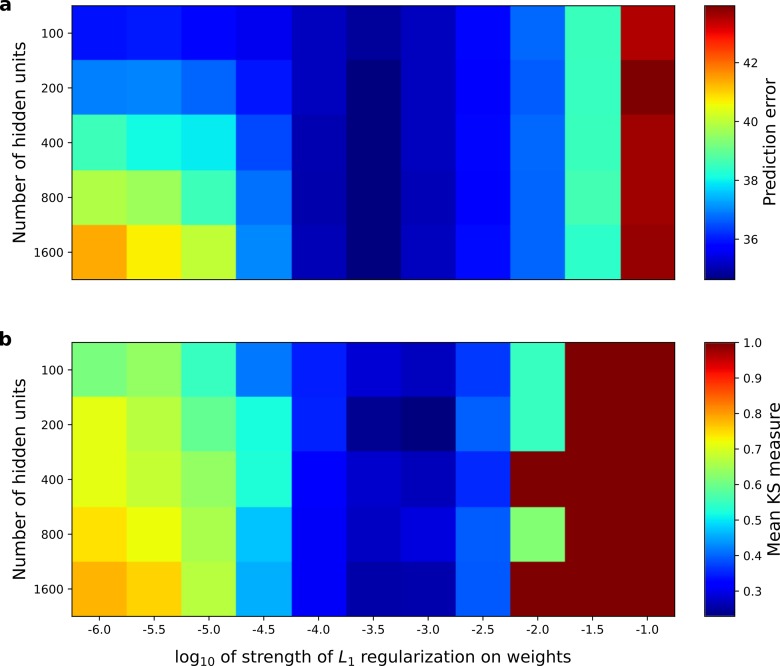

Figure 8. Correspondence between the temporal prediction model’s ability to predict future auditory input and the similarity of its units’ responses to those of real A1 neurons.

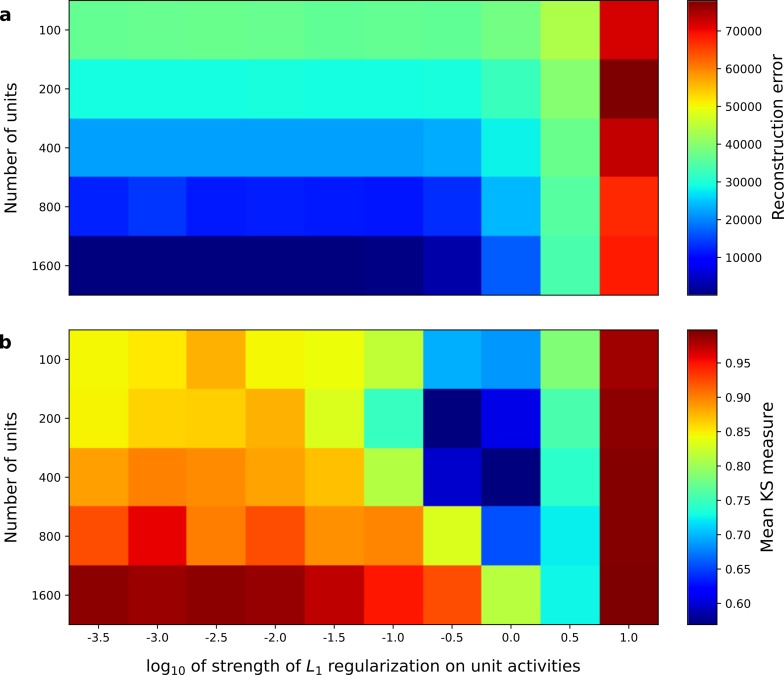

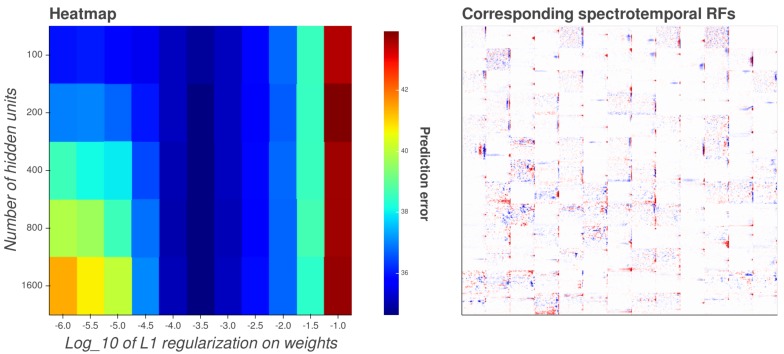

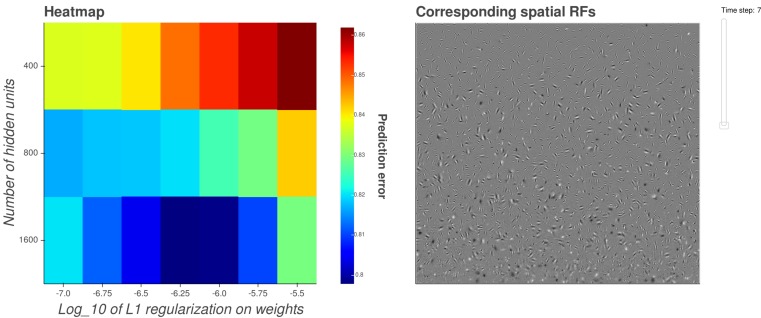

Performance of the model as a function of the number of hidden units and the L1 regularization strength on the weights, as measured by (a) prediction error (mean squared error) on the validation set at the end of training and (b) similarity between model units and real A1 neurons. The similarity between the real and model units is measured by averaging the Kolmogorov-Smirnov distance between the real and model distributions for the span of temporal and frequency tuning of the excitatory and inhibitory RF subfields (e.g. the distributions in Figure 6d–e and Figure 6g–h), so smaller values indicate more similar distributions. Figure 8—figure supplement 1 shows the same analysis performed for the sparse coding model, which does not produce a similar correspondence.
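The similarity measure described in the caption can be illustrated with a minimal sketch. It assumes that each tuning-span measure is available as a list of per-unit values for the real neurons and for the model units. The function names, dictionary keys, and toy numbers are hypothetical and are not taken from the paper. The two-sample Kolmogorov-Smirnov distance is computed here as the largest gap between the two empirical cumulative distributions.

```python
from bisect import bisect_right


def ks_distance(a, b):
    """Two-sample Kolmogorov-Smirnov distance between samples a and b.

    This is the maximum absolute difference between the two empirical
    cumulative distribution functions, evaluated at every observed value.
    """
    a, b = sorted(a), sorted(b)
    return max(
        abs(bisect_right(a, x) / len(a) - bisect_right(b, x) / len(b))
        for x in a + b
    )


def mean_ks_distance(real, model):
    """Average the KS distance over all tuning measures.

    `real` and `model` are dicts mapping a measure name (hypothetical
    keys) to a list of per-unit values; both must share the same keys.
    """
    keys = sorted(real)
    return sum(ks_distance(real[k], model[k]) for k in keys) / len(keys)


# Toy placeholder values (not data from the paper), one list per measure.
real = {
    "temporal_span_excitatory": [1, 2, 2, 3],
    "temporal_span_inhibitory": [2, 3, 3, 4],
    "frequency_span_excitatory": [0.5, 1.0, 1.5],
    "frequency_span_inhibitory": [0.5, 0.5, 1.0],
}
model = {
    "temporal_span_excitatory": [1, 2, 3, 3],
    "temporal_span_inhibitory": [1, 2, 3, 4],
    "frequency_span_excitatory": [0.5, 1.0, 2.0],
    "frequency_span_inhibitory": [1.0, 1.0, 1.5],
}
score = mean_ks_distance(real, model)
```

Under these assumptions, one such score would be computed for each combination of hidden unit number and L1 regularization strength, and a lower score would indicate model units whose tuning distributions lie closer to those of the real neurons.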