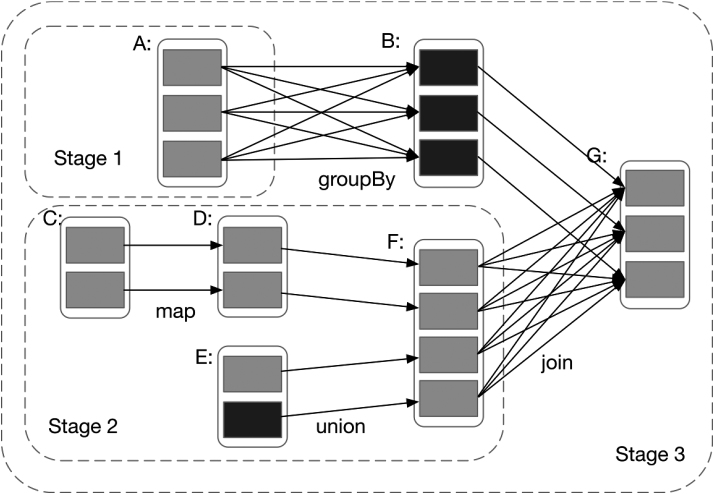

Figure 3: An example of how Spark computes job stages. Boxes with solid outlines are RDDs. Partitions are shaded rectangles, drawn in black if they are already in memory. To run an action on RDD G, we build stages at wide dependencies and pipeline narrow transformations inside each stage. In this case, the output RDD of stage 1 is already in memory, so we run stage 2 and then stage 3.
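The stage-building rule in the caption can be illustrated with a small sketch. This is a toy model in plain Python, not Spark's scheduler: the `RDD` class, the `build_stage` and `stages_to_run` helpers, and the dependency graph (RDDs A through G) are all hypothetical and chosen only to mirror the caption. Narrow dependencies are pipelined into one stage, wide dependencies start a new stage, and any RDD already in memory cuts off the work upstream of it.

```python
from dataclasses import dataclass, field


@dataclass
class RDD:
    """Toy stand-in for an RDD: a name plus its parent dependencies."""
    name: str
    narrow: list = field(default_factory=list)  # parents via narrow dependencies
    wide: list = field(default_factory=list)    # parents via wide (shuffle) dependencies
    cached: bool = False                        # True if already in memory


def build_stage(output):
    """Return the RDDs pipelined into the stage ending at `output`,
    plus the parents reached across wide dependencies (stage boundaries)."""
    members, boundary_parents = set(), []
    stack = [output]
    while stack:
        rdd = stack.pop()
        if rdd.name in members:
            continue
        members.add(rdd.name)
        boundary_parents.extend(rdd.wide)
        for parent in rdd.narrow:
            # A cached narrow parent is read from memory, so we stop there.
            if not parent.cached:
                stack.append(parent)
    return sorted(members), boundary_parents


def stages_to_run(target):
    """List the stages to execute, parents first, skipping cached outputs."""
    order, visited = [], set()

    def visit(output):
        if output.name in visited or output.cached:
            return
        visited.add(output.name)
        members, boundary_parents = build_stage(output)
        for parent in boundary_parents:
            visit(parent)
        order.append(members)

    visit(target)
    return order


# Assumed graph: B is built from A by a wide dependency and is already cached;
# C, D, E, F are linked by narrow dependencies; G reads B narrowly and F widely.
a = RDD("A")
b = RDD("B", wide=[a], cached=True)
c = RDD("C")
d = RDD("D", narrow=[c])
e = RDD("E")
f = RDD("F", narrow=[d, e])
g = RDD("G", narrow=[b], wide=[f])

plan = stages_to_run(g)
print(plan)  # [['C', 'D', 'E', 'F'], ['G']]
```

Under these assumptions, the stage that would produce B is never scheduled because B is already in memory, so only the stage containing C through F and the final stage containing G are run, matching the "stage 2 and then stage 3" order described in the caption.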