Abstract

Sensorimotor learning requires knowledge of the relation between movements and their sensory effects: a sensorimotor map. Generally, these maps are not innate but have to be learned. During learning, the challenge is to build a continuous map from a set of discrete observations, i.e. to predict the locations of novel targets. One hypothesis is that the learner linearly interpolates between discrete observations that are already in the map. Here this hypothesis is tested by exposing human subjects to a novel mapping between arm movements and sounds. Participants were passively moved to the edges of the workspace, where they heard the corresponding sounds. They were then presented with intermediate sounds and asked to move to the locations they thought corresponded to those sounds. On average, movements roughly matched a linear interpolation of the space. However, the actual distribution of participants’ movements is best described by a bimodal reaching strategy in which they move to one of two locations near the workspace edges, where they had prior exposure to the sound-movement pairing. These results suggest that interpolation happens only to a limited extent and that the acquisition of sensorimotor maps may be driven not by interpolation but instead by a flexible recombination of instance-based learning.

Keywords: motor learning, sensorimotor mapping, generalisation, music, reaching movements, speech

Introduction

When first learning to talk or to play a musical instrument, an important challenge is to learn which movement produces which sound, i.e. a sensorimotor map. There is substantial knowledge about how maps, once learned, are adjusted, for example when adapting to a visuomotor rotation or to novel force dynamics. In these settings, the subjects studied typically already have fully formed sensorimotor maps, and experimental paradigms involve perturbations to these maps that result in adaptation. Less is known about the earliest phases of learning, in which these maps still need to be acquired [1, 2, 3]. The learning of novel sensorimotor maps can be studied by having human subjects make arm movements to locations in space that each correspond to a particular sound [4]. This constitutes a challenging but feasible learning problem.

The nature of the map acquisition process remains elusive. The learner is exposed to only a discrete set of observations about the sensorimotor workspace, namely previous movements and their sensory results, but then often needs to move to targets they have not heard before. That is, the learner needs to make predictions about unknown parts of the sensorimotor workspace. One hypothesis is that the learner stores a history of movements and sounds and, when novel sounds are presented, interpolates between these known instances to reach to intermediate locations. In principle, a mapping could then be learned reasonably well even if only parts of the sensorimotor workspace are known.

Instances of an interpolation process have been studied previously in the context of sensorimotor mappings that are largely known at the outset. Sensorimotor learning appears to generalise to novel movements or locations within the workspace [5, 6] but this generalisation falls off steeply with distance from the training direction [7, 8, 9, 10]. Generalisation appears to be encoded in intrinsic coordinates [10]. Some studies suggest that generalisation is tied to a particular context [11, 12]. Generalisation also depends on the nature of the sensorimotor transformation being learned [6]. In these cases, generalisation was typically studied in situations where sensorimotor maps were already learned and underwent small modifications or perturbations. However, it remains unclear how generalisation occurs when one learns sensorimotor maps from scratch.

The hypothesis that generalisation is based on interpolation is tested here by limiting participants’ exposure to specific instances of the relation between movements and sounds and then testing them across the whole of the sensory space. Specifically, participants received what will be referred to as anchor trials, in which their arm was moved to positions at the edges of the workspace and they were then presented with the corresponding sounds (Fig. 1). On probe trials, one of a set of intermediate sounds was presented and participants were invited to move to the location they believed corresponded to the sound. On these trials they did not receive feedback, so the only information they received about the pairing between movements and sounds came from the anchor trials at the edges. In principle, participants had no way of knowing which location should correspond to these probe sounds, because infinitely many mappings are consistent with the sound-movement pairing at the anchor locations. The central question addressed here is how participants map such sounds onto unknown locations in the workspace, and in particular whether they do so by interpolation.

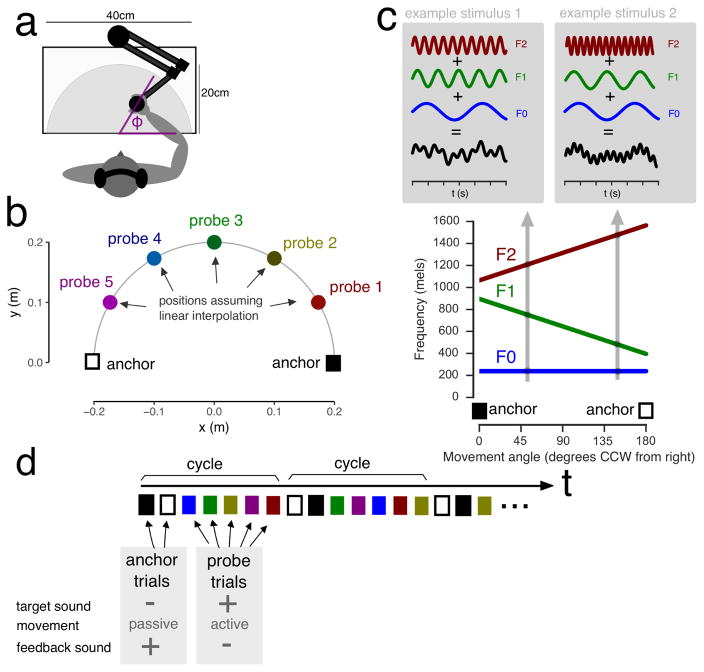

Figure 1. Participants make movements to auditory targets.

Prior to each trial, a target sound is presented. Participants then make a reaching movement to the presumed location of the sound. a, Top view of the workspace. Participants made reaching movements from the center of the workspace to points on a semi-circle (not visible to participants). b, Overview of the anchor locations and of the locations that would correspond to the probe sounds assuming a linear mapping. c, The sounds played to participants at the end of each movement consisted of three oscillators whose frequencies depended linearly on the angle at movement end ϕ (see grey inlays for a decomposition of two example stimuli that correspond to the movement angles indicated by the arrows). d, In every set of trials (cycle), the two anchors were presented first, in random order; on these trials the subject was moved passively and received auditory feedback. The five probes were then presented in random order, and subjects were invited to make active movements but did not receive auditory feedback.

Experimental Procedures

Subjects and experimental tasks

A total of 27 participants were recruited. All participants were right-handed (handedness quotients of 80–100% on the Edinburgh Handedness Inventory) except for two, who were 88% and 20% left-handed. Participants reported no neurological or hearing impairments. Each session lasted approximately 1.5 hours. Participants provided written consent, and all procedures were approved by the McGill University Institutional Review Board, in accordance with the ethical principles stated in the Declaration of Helsinki.

Participants filled out a questionnaire listing their musical training. Twelve participants listed no musical training of any kind. Three participants listed only vocal training (choir or singing lessons), lasting 1–2 years and taking place between 8 and 20 years ago. Of the remaining participants, most listed piano-only training (5 participants), followed by violin (2 participants), and the others indicated various instruments. Instrumental training lasted between 1 and 12 years, and in all cases the last training was more than 5 years ago, except for one participant who was still practising at the time.

Participants made reaching movements while holding the handle of a 2-degree-of-freedom planar robotic arm (InMotion2; Interactive Motion Technologies; Fig. 1a), which sampled the position of the handle at 400 Hz. The target circle was defined as a half-circle around a subject-calibrated starting point, and during the experiment participants made movements from this center point to points on the circle (Fig. 1a). Vision of the circle and of the arm was blocked for the duration of the experiment.

Auditory stimuli

The sounds consisted of three sine-wave oscillators: one had a fixed frequency (F0, 165 Hz), and the frequencies of the other two (F1, F2) decreased or increased with the angle of the movement endpoint (Fig. 1c). To normalise the space-to-frequency mapping, angles were mapped linearly to frequencies in mel space [13]. To correct for differences in perceived loudness, the amplitude of each oscillator was adjusted using equal-loudness contours [14] to 75 phon, so that each sound would be perceived to be as loud as a 1 kHz tone at 75 dB SPL. In this way, equally spaced locations on the target circle were associated with perceptually equal changes in sound frequency and little or no change in perceived loudness. Sounds were presented over Beyerdynamic DT770M headphones. Since the mapping is one-to-one, the sounds will be referred to by the angle they were mapped to on the interval [0, 180] degrees. The sounds themselves were not localised in space: the same sound was presented to both ears, so there were no acoustic location cues, and only their frequency content carried information about the position to which they were mapped.
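To make the stimulus construction concrete, the following Python sketch maps an endpoint angle on [0, 180] degrees linearly onto mel space and back to Hz for the two moving oscillators. It illustrates the idea under stated assumptions and is not the original stimulus code: the mel formula 2595·log10(1 + f/700) is one common convention and [13] may use another, the F1 and F2 frequency ranges are hypothetical because they are not given in this section, and the equal-loudness amplitude correction is omitted.

```python
import math

F0_HZ = 165.0                   # fixed oscillator (from the text)
F1_RANGE_HZ = (300.0, 900.0)    # hypothetical: F1 rises from 0 to 180 degrees
F2_RANGE_HZ = (2400.0, 1200.0)  # hypothetical: F2 falls from 0 to 180 degrees


def hz_to_mel(f_hz: float) -> float:
    """Convert Hz to mel with the common 2595*log10(1 + f/700) formula (assumed)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(m: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def angle_to_hz(angle_deg: float, f_range: tuple) -> float:
    """Interpolate linearly in mel space between the range endpoints, return Hz."""
    if not 0.0 <= angle_deg <= 180.0:
        raise ValueError("angle must lie in [0, 180] degrees")
    m_start, m_end = hz_to_mel(f_range[0]), hz_to_mel(f_range[1])
    return mel_to_hz(m_start + (m_end - m_start) * angle_deg / 180.0)


def stimulus_frequencies(angle_deg: float) -> tuple:
    """(F0, F1, F2) in Hz for a movement endpoint at angle_deg."""
    return (F0_HZ,
            angle_to_hz(angle_deg, F1_RANGE_HZ),
            angle_to_hz(angle_deg, F2_RANGE_HZ))


for angle in (0, 45, 90, 135, 180):
    f0, f1, f2 = stimulus_frequencies(angle)
    print(f"{angle:3d} deg: F0={f0:.0f} Hz, F1={f1:.1f} Hz, F2={f2:.1f} Hz")
```

Because the interpolation happens in mel space, equal angular steps give equal mel steps, while the corresponding steps in Hz grow with frequency.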

Audiomotor testing

On anchor trials, indicated by a red colour cue, the robot passively moved the participant’s arm to one of the two anchor locations (+90 or −90 degrees from straight ahead; Fig. 1b) along a smooth minimum-jerk trajectory of 20 cm length and 900 ms duration. The robot then held the arm at the end position and presented the corresponding sound for 1000 ms.
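The minimum-jerk profile used for passive anchor movements has a standard closed form, s(τ) = 10τ³ − 15τ⁴ + 6τ⁵ with τ = t/T, which starts and ends with zero velocity and acceleration. A minimal sketch, assuming a straight 20 cm path, T = 0.9 s, sampling at the 400 Hz robot rate, and a hypothetical angle convention in which positive angles lie to the right of straight ahead:

```python
import numpy as np


def minimum_jerk(start, end, duration_s=0.9, rate_hz=400.0):
    """Return sample times and positions of a minimum-jerk path from start to end.

    Uses the fifth-order profile s(tau) = 10 tau^3 - 15 tau^4 + 6 tau^5.
    """
    start = np.asarray(start, dtype=float)
    end = np.asarray(end, dtype=float)
    n_samples = int(round(duration_s * rate_hz)) + 1
    t = np.linspace(0.0, duration_s, n_samples)
    tau = t / duration_s
    s = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
    return t, start + np.outer(s, end - start)


def anchor_endpoint(angle_deg, length_m=0.20):
    """Endpoint (x, y) for an angle from straight ahead; +angle to the right (assumed)."""
    a = np.deg2rad(angle_deg)
    return np.array([length_m * np.sin(a), length_m * np.cos(a)])


t, path = minimum_jerk([0.0, 0.0], anchor_endpoint(+90))
speed = np.linalg.norm(np.diff(path, axis=0), axis=1) * 400.0  # m/s between samples
print(len(t), path[-1].round(4), speed.max().round(3))
```

The peak speed of this profile is 1.875·L/T, about 0.42 m/s for L = 0.2 m and T = 0.9 s, reached at mid-movement.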

On probe trials, one of five target sounds was presented that, under linear interpolation, would correspond to the locations −60, −30, 0, +30 and +60 degrees from straight ahead (Fig. 1b). Note that although for convenience we refer to the sounds by their mapping location, this location had no physical meaning, since these sounds were never presented as feedback for movements. A green colour cue indicated that subjects were expected to move. The target sound was presented for 1000 ms, after which the colour cue disappeared and participants could initiate their movement. Movement end was defined as the point at which velocity fell below 5% of peak velocity for 50 ms. The robot then returned the subject’s hand to the starting point. Subjects received no auditory or other feedback for their probe movements. At no point were the subjects able to see their arm.
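The movement-end criterion can be implemented as a scan of the speed profile. The sketch below is one plausible reading of it, with these assumptions: speed is estimated by central differences of the 400 Hz position samples, the search starts at peak speed, and the end is taken as the first sample of the first 50 ms (20-sample) run below 5% of peak speed. The function name is hypothetical and the trace is synthetic.

```python
import numpy as np


def movement_end_index(pos, rate_hz=400.0, frac=0.05, hold_s=0.05):
    """Index of movement end: first sample after peak speed that starts a run
    of hold_s seconds with speed below frac * peak speed (assumed convention).

    pos: array of shape (n_samples, 2) with handle positions in metres.
    Returns None if no such run exists.
    """
    pos = np.asarray(pos, dtype=float)
    vel = np.gradient(pos, 1.0 / rate_hz, axis=0)   # central differences
    speed = np.linalg.norm(vel, axis=1)
    peak = int(np.argmax(speed))
    threshold = frac * speed[peak]
    hold = int(round(hold_s * rate_hz))              # 20 samples at 400 Hz
    run = 0
    for i in range(peak, len(speed)):
        run = run + 1 if speed[i] < threshold else 0
        if run == hold:
            return i - hold + 1
    return None


# Synthetic trace: 0.9 s minimum-jerk reach of 0.2 m, followed by 0.3 s at rest.
tau = np.linspace(0.0, 1.0, 361)
x = 0.2 * (10 * tau**3 - 15 * tau**4 + 6 * tau**5)
x = np.concatenate([x, np.full(120, 0.2)])
trace = np.column_stack([x, np.zeros_like(x)])
end_idx = movement_end_index(trace)
print(end_idx, end_idx / 400.0)  # index and time (s) of detected movement end
```

For a minimum-jerk reach the detected end falls slightly before nominal completion, because speed drops below 5% of its peak at roughly 94% of the movement duration.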

Anchor and probe trials were presented in cycles of 7 trials. First, the two anchors were presented in random order. Then, the five probe trials were presented in quasi-random order (with the only restriction that the first probe could not be one of the two edge sounds corresponding to +60 and −60 degrees from straight ahead). The participants performed 3 blocks with 12 cycles each, for a total of 252 trials.
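The trial order within a session can be generated as sketched below. This is an illustration rather than the authors’ code: probes are labelled by their linearly interpolated location, and the first-probe restriction is enforced by reshuffling until it is satisfied (rejection sampling), which is one of several valid ways to implement a quasi-random order.

```python
import random

ANCHORS = (-90, 90)                 # anchor locations, degrees from straight ahead
PROBES = (-60, -30, 0, 30, 60)      # probe sounds, labelled by interpolated location
EDGE_PROBES = {-60, 60}             # may not be the first probe of a cycle


def make_cycle(rng):
    """One 7-trial cycle: both anchors in random order, then the five probes."""
    anchors = list(ANCHORS)
    rng.shuffle(anchors)
    probes = list(PROBES)
    # Rejection sampling: reshuffle until the first probe is not an edge probe,
    # which draws uniformly from the permutations that satisfy the constraint.
    while True:
        rng.shuffle(probes)
        if probes[0] not in EDGE_PROBES:
            break
    return [("anchor", a) for a in anchors] + [("probe", p) for p in probes]


def make_session(n_blocks=3, cycles_per_block=12, seed=None):
    """List of blocks, each a flat list of (trial_type, location) tuples."""
    rng = random.Random(seed)
    return [
        [trial for _ in range(cycles_per_block) for trial in make_cycle(rng)]
        for _ in range(n_blocks)
    ]


session = make_session(seed=0)
print(sum(len(block) for block in session))  # 3 * 12 * 7 = 252
```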

A subset of participants (n=15) also performed probe-only blocks before and after the main testing described above. In these blocks, 4 cycles of the 5 probes were presented in random order without feedback for a total of 20 trials. This was done to assess participants’ baseline performance as well as performance for the same target locations following the audiomotor testing.

Movement and auditory testing

Movement-copy and auditory psychophysical testing was performed before and after audiomotor testing to measure baseline motor accuracy in reaching towards particular directions and to assess whether auditory functioning was stable [4].

Movement-copy

The participants’ hand was moved out from the starting position to a target position on the target circle in a smooth movement of 900 ms, held there for 500 ms, and then moved back. A visual icon signalled participants to reproduce the movement they had just experienced. Eleven target directions, equally spaced on the half circle and including the endpoints, were presented in random order. Participants were not able to see their arm and no visual information of any kind was available.

Auditory testing

On every trial, a train of four sounds of 200 ms duration each was presented with a 75 ms pause between sounds. Three of the sounds were identical and one (either the second or third) was different. Participants’ task was to respond by pressing a button to indicate whether the mismatched sound was the second or third in the sequence. The three identical sounds were those that were mapped to the 90-degree angle in our auditory-motor mapping and the mismatched sound corresponded in the mapping to the position angles of 90.27 to 106.20 degrees in ten logarithmically spaced steps. Participants completed a total of 200 trials. No feedback was given about the accuracy of the participants’ response.
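The ten comparison angles can be reproduced by logarithmic spacing. The sketch below assumes, since the section does not say so explicitly, that the spacing is logarithmic in the offset from the 90-degree standard (0.27 to 16.20 degrees); the helper that builds a four-sound train is hypothetical and only illustrates the trial structure.

```python
import numpy as np

STANDARD_DEG = 90.0
# Assumption: ten steps log-spaced in the offset from the 90-degree standard.
offsets = np.geomspace(0.27, 16.20, num=10)
comparison_deg = STANDARD_DEG + offsets


def make_trial(comparison, rng):
    """Four-sound train: three standards and one comparison at position 2 or 3 (1-based)."""
    odd_pos = int(rng.integers(2, 4))  # upper bound exclusive, so 2 or 3
    train = [STANDARD_DEG] * 4
    train[odd_pos - 1] = comparison
    return train, odd_pos


rng = np.random.default_rng(0)
print(np.round(comparison_deg, 2))
train, answer = make_trial(comparison_deg[4], rng)
```

Each step multiplies the offset by the same factor, 60^(1/9) ≈ 1.58, so small differences near threshold are sampled densely.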

Data analysis

The main variable of interest is the movement angle on a given trial, since this provides an estimate of the participant’s prediction of the position of the target sound. To assess whether participants followed linear interpolation, movement angles for the different auditory targets were compared with the linearly interpolated locations of the target sounds, and the absolute deviation between them was computed. Unless otherwise specified, repeated-measures ANOVAs were computed; sphericity was checked using Mauchly’s test and, where it was violated, the Greenhouse-Geisser corrected p-value is reported. In figures, error bars and shaded areas indicate the standard error of the mean.
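A minimal sketch of the deviation measure, assuming each probe is labelled by its linearly interpolated location in degrees and that the first-versus-last comparison uses a subject’s first and last n probe trials. The function names are hypothetical and the numbers are toy values, not study data.

```python
import numpy as np


def mean_abs_deviation(probe_deg, reach_deg):
    """Mean absolute deviation (degrees) of reach angles from the linearly
    interpolated probe locations. Probes are labelled by that location."""
    probe_deg = np.asarray(probe_deg, dtype=float)
    reach_deg = np.asarray(reach_deg, dtype=float)
    return float(np.mean(np.abs(reach_deg - probe_deg)))


def early_vs_late(probe_deg, reach_deg, n=50):
    """Deviation over the first n and the last n probe trials of one subject."""
    return (mean_abs_deviation(probe_deg[:n], reach_deg[:n]),
            mean_abs_deviation(probe_deg[-n:], reach_deg[-n:]))


# Toy values for illustration only (not study data).
probes = [-60, 0, 60, -30, 30]
reaches = [-80, 10, 75, -70, 60]
print(mean_abs_deviation(probes, reaches))  # 23.0
```

Per-subject early and late values could then be compared with a paired test such as `scipy.stats.ttest_rel`.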

Model fitting

Participants’ average movement direction may obscure patterns in the distribution of individual movements. To test hypotheses about which movements participants would make to novel targets (interpolation or lack thereof), several models of the distribution of movements to the different target sounds were formulated; these are laid out in the Results section. Models were fitted by maximum likelihood and compared using the Bayesian Information Criterion (BIC) and the Akaike Information Criterion (AIC) [15], which quantify how well a model fits the data (likelihood) while penalising the number of free parameters to guard against over-fitting. BIC is defined as k ln(n) − 2 ln(L), where n is the number of observations, k is the number of free parameters, and L is the maximised likelihood. AIC is defined as 2k − 2 ln(L). The absolute values of AIC and BIC are not meaningful on their own, but models fitted to the same data can be compared by their differences (ΔAIC, ΔBIC); the lower the AIC or BIC, the better the model accounts for the data.
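The two criteria are simple to compute once each model’s maximised log-likelihood is known. In the sketch below the log-likelihoods are hypothetical, and n assumes 27 participants × 36 cycles × 5 probes with no excluded trials.

```python
import math


def aic(log_lik: float, k: int) -> float:
    """Akaike Information Criterion: 2k - 2 ln(L)."""
    return 2 * k - 2 * log_lik


def bic(log_lik: float, k: int, n: int) -> float:
    """Bayesian Information Criterion: k ln(n) - 2 ln(L)."""
    return k * math.log(n) - 2 * log_lik


# Hypothetical maximised log-likelihoods for two models fitted to the same data.
n_obs = 27 * 36 * 5  # 4860 probe movements, assuming no trials were excluded
models = {"model_A": (-1000.0, 4), "model_B": (-990.0, 9)}
for name, (ll, k) in models.items():
    print(name, round(aic(ll, k), 1), round(bic(ll, k, n_obs), 1))
```

With these toy values, model_B has the lower AIC but model_A the lower BIC, because BIC’s per-parameter penalty (ln n ≈ 8.5) is much larger than AIC’s (2). For two models that differ by Δk parameters, ΔBIC − ΔAIC = Δk(ln n − 2) regardless of the likelihoods. For bimodal-free versus bimodal-fixed (Δk = 5) and n = 4860 this is about 32.4, consistent with the difference between the reported ΔBIC = 123 and ΔAIC = 90.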

Results

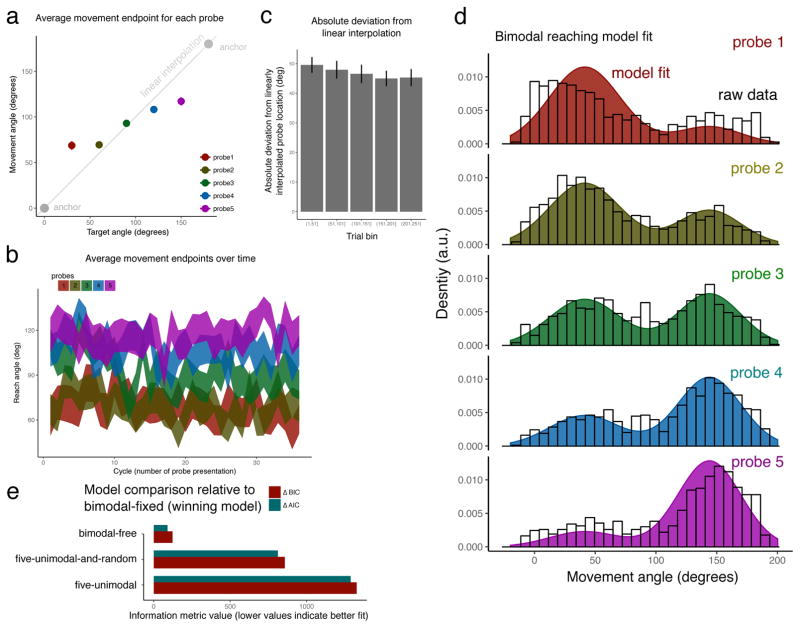

Participants made reaching movements to auditory targets after being presented with the movement-to-sound correspondence only at the workspace edges (on anchor trials; Fig. 1). On probe trials, participants on average moved to different locations for the five probes (F[4,104]=35.93, p<.0001), and these locations on average followed the order of the probe sounds (Fig 2a). There was some indication that these locations became more distinct over time (Fig 2b), but overall there was little evidence for a change in performance over the course of testing. The deviation of subjects’ reaching pattern from linear interpolation tended to decrease: comparing the first 50 with the last 50 trials revealed a trend towards a decrease in deviation (t[26]=1.76, p=.08), but the magnitude of this change was small (Fig 2c; see Discussion).

Figure 2. Probe trial reaching averages across subjects suggest, upon first inspection, that participants interpolate to obtain probe locations.

a, Average reaching direction for each of the five probes. Error bars indicate the standard error of the mean but in some cases are hidden by the dots. The grey line indicates the reaching position corresponding to linear interpolation between the anchor locations (grey dots). On average, subjects move to different locations for the five probe sounds, and the spatial ordering of these locations aligns with the auditory continuum of the probe sounds. b, The average reaching pattern over the course of the experiment indicates a tendency for the average movement endpoints to become more distinct for the five probes. c, The deviation from linear interpolation decreases, indicating a small trend for reaching to resemble linear interpolation more closely over time. d, The distribution of individual reaches suggests that subjects use a bimodal reaching strategy in which they reach to one of only two locations, with relative probabilities that depend on the target sound. Each panel depicts the distribution of reaching endpoints for one probe; the raw data are shown as density histograms in black, with the winning model, bimodal-fixed (see text), overlaid. e, Comparison of candidate models (see text for details). The model that best accounts for the data is bimodal-fixed.

Modelling the movement distribution

Upon first inspection it may be tempting to conclude that subjects interpolated locations for the probe sounds based on their exposure in the anchor trials. However, individual reaching movements followed a bimodal pattern, as indicated by Hartigan’s dip test [16], which showed that the reaching distributions were significantly non-unimodal for probes 1 and 3 (p<.01) and marginally so for probe 2 (p=.076). This indicates that the average reaching direction for a given probe is not representative of the reaching strategy. A modelling approach was therefore adopted in which several models were formulated to characterise the distribution of all reaching movements, each representing a different hypothetical interpolation strategy (or lack thereof).

The five-unimodal model embodied the idea that participants would produce unimodal reaching to a different location for each of the five probes. This model contains 10 parameters: a mean and a standard deviation for each probe. In the bimodal-free model, participants reached to one of only two locations, each with its own mean and standard deviation, and the probability of reaching to one or the other was a separate parameter for each probe, giving a total of 9 estimated parameters (4 for the two modes plus 5 probe-specific probabilities). However, the bimodal-free model left the weighting of the two response modes completely free, ignoring the fact that the probe sounds were related to the anchor sounds along an acoustic continuum. To accommodate this, a bimodal-fixed model was formulated that was like the bimodal-free model except that the weighting of the two response modes was assumed to reflect the acoustic similarity of the given probe to each of the two anchors. This acoustic similarity was encoded in a function “sim”, a metric in sound space that subjects were hypothesised to have at their disposal. Specifically, each mode was yoked to an anchor, a0 or a1, so that given a probe ri the probability of reaching to the mode associated with anchor aj was p = sim(ri, aj)/(sim(ri, a0) + sim(ri, a1)). Since the sounds varied in pitch and were controlled for loudness (see Methods), similarity was derived from the summed pitch differences in mel space between the corresponding oscillators of two sounds. The bimodal-fixed model had 4 free parameters (the mean and standard deviation of each of the two modes). As a control, a unimodal reaching model, five-unimodal-and-random, was tested; it was similar to five-unimodal in assuming that subjects would reach unimodally, but allowed that on some proportion of trials they would reach to a random location. This model used a total of 11 parameters (the 10 parameters of the five-unimodal model plus one parameter estimating the proportion of random reaching trials).
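The bimodal-fixed model is a two-component Gaussian mixture whose weights are fixed by each probe’s acoustic position. The following SciPy sketch fits it by maximum likelihood; it illustrates the model and is not the original analysis code. Assumptions: reach angles and sounds share the [0, 180] degree axis; because F1 and F2 vary linearly in mel space with angle, the summed mel distance between two sounds is proportional to their angular distance; and, so that the nearer anchor receives more weight, similarity to one anchor is taken as the distance to the other anchor. The data are simulated from known parameters to check that they are recovered.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.stats import norm

ANCHORS = (0.0, 180.0)  # anchor positions on the [0, 180] degree sound axis


def mode_weights(probe_pos):
    """Mixture weights (w0, w1) for the modes yoked to anchors a0 and a1.

    Assumption: similarity to anchor j is the distance to the *other* anchor,
    so the nearer anchor gets the larger weight. With a mapping that is linear
    in mel space, summed mel distances are proportional to angular distances.
    """
    probe_pos = np.asarray(probe_pos, dtype=float)
    d0 = np.abs(probe_pos - ANCHORS[0])
    d1 = np.abs(probe_pos - ANCHORS[1])
    w0 = d1 / (d0 + d1)
    return w0, 1.0 - w0


def neg_log_lik(params, probe_pos, reach_deg):
    """Negative log-likelihood of the bimodal-fixed model (4 free parameters)."""
    mu0, log_sd0, mu1, log_sd1 = params       # log-SDs keep SDs positive
    w0, w1 = mode_weights(probe_pos)
    lp0 = np.log(w0) + norm.logpdf(reach_deg, mu0, np.exp(log_sd0))
    lp1 = np.log(w1) + norm.logpdf(reach_deg, mu1, np.exp(log_sd1))
    return -np.sum(np.logaddexp(lp0, lp1))     # log(w0*N0 + w1*N1), computed stably


def fit_bimodal_fixed(probe_pos, reach_deg):
    start = np.array([30.0, np.log(20.0), 150.0, np.log(20.0)])
    return minimize(neg_log_lik, start, args=(probe_pos, reach_deg),
                    method="Nelder-Mead",
                    options={"maxiter": 5000, "xatol": 1e-6, "fatol": 1e-6})


# Synthetic check: simulate from known parameters, then recover them.
rng = np.random.default_rng(0)
probes = np.repeat([30.0, 60.0, 90.0, 120.0, 150.0], 200)
near_a0 = rng.random(probes.size) < mode_weights(probes)[0]
reaches = np.where(near_a0, rng.normal(25.0, 10.0, probes.size),
                   rng.normal(155.0, 10.0, probes.size))
res = fit_bimodal_fixed(probes, reaches)
mu0, sd0, mu1, sd1 = res.x[0], np.exp(res.x[1]), res.x[2], np.exp(res.x[3])
aic_value = 2 * 4 + 2 * res.fun               # AIC = 2k - 2 ln(L), with k = 4
print(round(mu0, 1), round(sd0, 1), round(mu1, 1), round(sd1, 1))
```

Replacing `mode_weights` by five free per-probe probabilities would give the 9-parameter bimodal-free model, and `res.fun` (the minimised negative log-likelihood) plugs directly into the AIC and BIC definitions from the Methods.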

The model that best accounted for the data was the bimodal-fixed model, according to both AIC and BIC. The runner-up was the bimodal-free model, which performed worse (ΔBIC=123, ΔAIC=90; Fig. 2e), followed by the unimodal reaching models, which did substantially worse (ΔBIC, ΔAIC>800). When this analysis was re-run with the subset of subjects who had no prior musical training whatsoever, the bimodal models again outperformed the unimodal models; however, bimodal-free outperformed bimodal-fixed by a small margin.

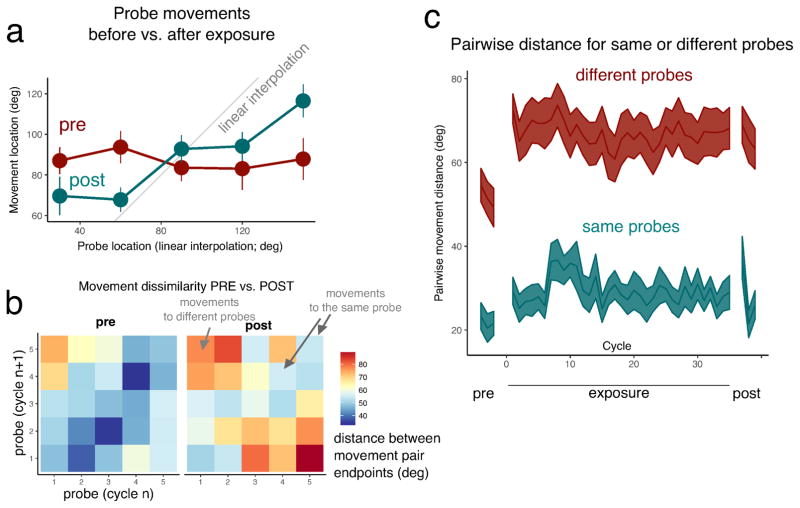

No-feedback performance before and after exposure

A subgroup of participants performed probe trials before being exposed to anchors. On these trials, there was no difference in average reaching patterns between the probes (F[1,14]=.08, p=.79), indicating that participants had no knowledge of the audiomotor map prior to learning (Fig. 3a). A Bayes factor analysis was performed on the no-feedback trials before exposure, with reach angle as the dependent variable and probe (a factor with five levels) as the independent variable. The Bayes factor for the probe factor was 0.018 (±1%), a value considered very strong evidence in favour of the null hypothesis, which in this case is that reaching patterns do not differ between probes. After exposure, the Bayes factor in the same analysis was 3327.02 (±.69%), indicating very strong evidence in favour of the alternative hypothesis that reaching patterns differ across probes. In no-feedback trials after exposure, movements differed by probe (F[1,14]=12.93, p=.003), indicating that subjects had acquired information about the mapping. Comparing pairs of movements to either the same or different probes revealed that prior to exposure, although movements to different probes could not be differentiated across subjects, within subjects there was a tendency towards such differentiation that was strengthened over time (Fig. 3b). Immediately after presentation of the first two anchors, subjects’ reaching patterns changed so that movements to different probes became more distinct, but there was no further change for the remainder of the experiment (Fig. 3c), in spite of repeated exposure to anchor trials.

Figure 3. No-feedback trials before audiomotor testing show that subjects on average do not differentiate between targets, but nevertheless they show some consistency in their reaching pattern. This consistency is strengthened upon presentation of the first anchor trial and then remains constant.

A subset of individuals performed probe-only trials (without feedback) before and after audiomotor testing. a, Movement endpoints for the various probes. The horizontal axis indicates what the probe position would be given linear interpolation, and the grey line indicates the identity line, where subjects would reach if they linearly interpolated the probes. Prior to learning, subjects on average move to the same location for all probes, but after audiomotor testing they distinguish the probes in their movements. b, Cycle-by-cycle distance between pairs of movements. Each panel represents the comparison of movements on two cycles. The horizontal axis represents the probes of a given cycle n and the vertical axis represents the probes of cycle n+1. The colour of each cell codes the absolute distance between the pair of reaching movements to those probes. Diagonal elements indicate movements to the same probe, off-diagonal elements movements to different probes. Even before audiomotor testing (pre), there is some structure to participants’ reaching, reflecting an internal consistency in the way sounds are allocated in space, but this is strengthened after testing (post). c, Tracking over time the distances between pairs of movements to the same or different probes shows that, immediately upon exposure to the first anchors, the distance between pairs of movements to different probes increases and then remains constant for the rest of the experiment, in spite of repeated exposure to the anchors.
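The cycle-by-cycle comparison in panel b can be sketched as a matrix of absolute differences between reaches on consecutive cycles. Assumptions: reaches are ordered by probe, rows index cycle n+1 and columns cycle n, and the same/different summaries in panel c are taken as diagonal and off-diagonal means. The function names are hypothetical and the reach angles are toy values.

```python
import numpy as np


def cycle_distance_matrix(reach_cycle_n, reach_cycle_n1):
    """|reach to probe i on cycle n+1 - reach to probe j on cycle n|.

    Inputs are reach angles (degrees) ordered by probe; rows index cycle n+1
    probes (vertical axis) and columns index cycle n probes (horizontal axis).
    """
    a = np.asarray(reach_cycle_n, dtype=float)
    b = np.asarray(reach_cycle_n1, dtype=float)
    return np.abs(b[:, None] - a[None, :])


def same_vs_different(dist):
    """Mean distance for same-probe (diagonal) and different-probe pairs."""
    off_diag = ~np.eye(dist.shape[0], dtype=bool)
    return float(np.mean(np.diag(dist))), float(np.mean(dist[off_diag]))


# Toy reach angles for probes 1-5 on two consecutive cycles (illustrative only).
d = cycle_distance_matrix([0, 40, 90, 130, 180], [10, 40, 80, 140, 170])
print(same_vs_different(d))  # (8.0, 87.0)
```

A consistent allocation of sounds to space shows up as small diagonal and large off-diagonal values, even when the allocation does not follow linear interpolation.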

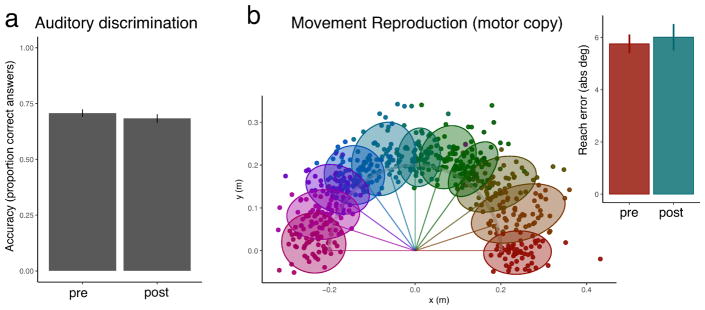

Auditory-only and movement-only testing

Before and after exposure, participants were tested for auditory discrimination; their performance on this test did not improve after exposure but instead showed a small decline (t[26]=2.49, p=.02) (Fig 4a). Participants also performed a movement-copy task in which they had to reproduce passively experienced movements. Their reproduction of movements was reliable (Fig 4b) and did not change after exposure (t[26]=-.54, p=.6). This suggests that any changes observed in audiomotor trials, whether due to learning or otherwise, did not reflect changes in motor or auditory functioning alone.

Figure 4. Auditory-only and movement-only tests reveal no improvement after audiomotor testing relative to before.

a, Auditory discrimination thresholds obtained using the sounds of the audiomotor task reveal no improvement. b, Motor copy tests reveal that participants can accurately reproduce movements. The dots indicate the endpoint locations for all subjects and are colour-coded according to the target. The targets are indicated here as straight lines from the center using the same colour scheme. The shaded ellipses represent the 2D error variance for each target. Motor copy performance does not change from before to after exposure (inlay).

Discussion

A prerequisite for sensorimotor learning is knowledge of the relation between movements and sensory effects: a sensorimotor map. There is increasing evidence that these maps are not innate but have to be learned, as for example in the case of visually guided movements [17, 18, 19] or learning to talk [20, 21, 22]. Studying the acquisition of such maps is challenging because in typical (adaptation) motor learning settings the adults tested already have pre-existing sensorimotor maps. Several studies approached this challenge by investigating the acquisition of novel sensorimotor maps in which wrist rotations were mapped to cursor movements on a screen [23, 24], but to our knowledge these studies have not directly investigated how newly learned mappings generalise to enable movements to untrained locations. Other studies investigated participants learning to play the piano [25, 26], which presumably requires acquisition of a sensorimotor map, but since keys correspond to discrete pitches there is little need for interpolation. Here, participants were exposed to a novel mapping between movements and sounds, which is challenging but feasible and allows the study of how mappings are learned from scratch [4]. To investigate the process by which sensorimotor maps generalise, we explored how subjects fill in regions of the sensorimotor workspace where they had no prior experience.

The principal observation in the present dataset is that although participants appear to reach to intermediate (interpolated) targets on average, the distribution of individual movements indicates that they achieve such averages by moving to one of only two locations with differing probabilities. That is, rather than generating a unimodal movement distribution for each probe target, participants appear to move according to a weighted combination of two unimodal movement distributions in the vicinity of the anchor locations, where they had prior exposure to the movement-to-sound mapping. Note that participants are not simply reproducing the anchors: the two locations of the bimodal reaching do not correspond exactly to the anchor locations, and the variability of movements to each of the two locations is larger than the movement-copy error.

One basic conclusion from this observation is that participants are at the very least able to judge the similarity between pairs of sounds, that is, compute a distance metric in auditory space. If instead participants perceived each sound categorically (as is often observed with speech sounds [27]) they could presumably not have produced sets of movements that align with the continuum of the probe sounds as was observed here.

In light of the apparent availability of auditory distance information, the finding that participants reach to only two locations seems surprising. If participants know where a probe falls on the continuum between two anchor sounds, one would expect them to be able to produce the corresponding weighted average of the two anchor movements. Indeed, the motor system has been shown to be able to maintain multiple movements to multiple targets in memory simultaneously and to recombine them when task demands or the context change [28, 29]. Averaging such movements would seem a relatively straightforward step for the motor system. It would therefore follow that the bimodal reaching strategy adopted by the subjects does not reflect a memory limitation but originates elsewhere.

One possibility is that the motor system is reluctant to move to locations for which it has no prior experience, preferring instead to produce movements for which it can reliably predict the sensory result: in the present case, movements close to the edges of the workspace. Indeed, there is in principle an infinite number of possible sensorimotor mappings that are consistent with the sound-movement coupling the subject experienced at the workspace edges [30, 31], so it could be risky to move far away from these edges. Given this uncertainty, the weighted bimodal reaching that subjects exhibit here might actually be the optimal strategy in a trade-off between two constraints: (1) producing movements that most closely match the target, i.e. reducing error, and (2) producing movements that are closest to the known locations in the sensorimotor workspace, i.e. reducing risk. This account is in line with evidence suggesting that the motor system continually weighs the risk of novel and potentially more rewarding movements against the payoff of known movements [32, 33], and is reminiscent of an exploration-exploitation trade-off [34, 35]. Within the context of the present study, exploration may have been discouraged because participants were aware that no auditory feedback would be presented for probe movements. Moving to novel locations therefore would not yield new information about the sensorimotor workspace in the way it would in more natural settings.

Another possible explanation for the bimodal reaching pattern is that the sounds at the edges of the workspace are more distinct and therefore easier to memorise: within the auditory continuum, the sounds at the edges are the most different from all other sounds. However, this explanation is contradicted by the observation that movements to the sounds at the extremities are biased towards the workspace center (Fig 2a) instead of being biased outward towards the more stereotypical anchor locations, as might be expected if reaching were based on memorisation accuracy.

The pattern of findings reported in this study can lead to hypotheses about the neural mechanisms underlying learning of sensorimotor maps. The process of filling in the unvisited locations of the sensorimotor workspace likely requires retrieval of auditory and motor information associated with previous visits to nearby regions of the sensorimotor workspace. This information is then recombined in auditory space, to compute a weighted average of the nearby auditory information, presumably involving auditory working memory areas of the brain. However, the present data suggests that information is not combined in the motor space, as participants in this case only select one of two movements and thus do not interpolate in motor space. Therefore one could predict that motor or somatosensory working memory areas are not involved in moving to novel targets.

Participants in the present study exhibited little learning in spite of being exposed to more and more anchor trials over the course of the experiment. This stands in contrast to the continued learning observed previously when subjects made reaching movements with feedback throughout the workspace [4]. This difference in learning would be predicted if learning sensorimotor maps consists of forming a look-up table connecting movements with sounds. In such a case, once the anchor trials have been entered in the look-up table, repeated exposure to these anchors yields little additional information and thus little or no improvement in performance. Note that a small (and not statistically significant) amount of learning was seen here, which may challenge this account.

Learning audiomotor maps has also been studied in songbirds, and recent work has investigated generalisation. When songbirds are exposed to altered auditory feedback, they adapt their vocal output and generalise this change to new sequential contexts [36]. This generalisation can furthermore be made context-specific, allowing birds to respond flexibly to differing task demands in different contexts [37]. Although these studies address generalisation, they focus on a different phenomenon from the one studied here: the birds do not make novel movements in unexplored parts of the sensorimotor workspace but instead make known movements in novel sequential contexts.

In summary, the present data suggest that when sensorimotor maps are learned from scratch, interpolation is limited and movements instead remain close to the parts of the workspace to which the learner has been exposed. This is consistent with previous work on movement generalisation showing little change in movements beyond those visited during training [7, 8]. Rather than generating novel movements, the brain appears to prefer familiar movements, selecting among them so as to minimise target error.

Acknowledgments

This work was supported by National Institute of Child Health and Human Development R01 HD075740 and Fonds de recherche du Québec - Nature et technologies (FRQNT) and a Banting Fellowship BPF-NSERC-01098.

We are indebted to Bilal Alchalabi for assistance in data collection and the members of the Motor Control Lab at McGill for valuable discussions.

Footnotes

Conflict of Interest: The authors declare that they have no competing interests.

References

- 1. Huberdeau DM, Krakauer JW, Haith AM. Dual-process decomposition in human sensorimotor adaptation. Current Opinion in Neurobiology. 2015;33:71–77. doi: 10.1016/j.conb.2015.03.003.

- 2. Krakauer JW. Motor learning and consolidation: the case of visuomotor rotation. Advances in Experimental Medicine and Biology. 2009;629:405–421. doi: 10.1007/978-0-387-77064-2_21.

- 3. Houde JF, Jordan MI. Sensorimotor Adaptation in Speech Production. Science. 1998;279:1213–1216. doi: 10.1126/science.279.5354.1213.

- 4. van Vugt FT, Ostry DJ. Structure and acquisition of novel sensorimotor maps. Journal of Cognitive Neuroscience. 2017. doi: 10.1162/jocn_a_01204.

- 5. Goodbody SJ, Wolpert DM. Temporal and Amplitude Generalization in Motor Learning. Journal of Neurophysiology. 1998;79:1825–1838. doi: 10.1152/jn.1998.79.4.1825.

- 6. Krakauer JW, Pine ZM, Ghilardi MF, Ghez C. Learning of Visuomotor Transformations for Vectorial Planning of Reaching Trajectories. Journal of Neuroscience. 2000;20:8916–8924. doi: 10.1523/JNEUROSCI.20-23-08916.2000.

- 7. Mattar AAG, Ostry DJ. Modifiability of generalization in dynamics learning. Journal of Neurophysiology. 2007;98:3321–3329. doi: 10.1152/jn.00576.2007.

- 8. Mattar AAG, Ostry DJ. Generalization of dynamics learning across changes in movement amplitude. Journal of Neurophysiology. 2010;104:426–438. doi: 10.1152/jn.00886.2009.

- 9. Gandolfo F, Mussa-Ivaldi FA, Bizzi E. Motor learning by field approximation. Proceedings of the National Academy of Sciences. 1996;93:3843–3846. doi: 10.1073/pnas.93.9.3843.

- 10. Malfait N, Shiller DM, Ostry DJ. Transfer of motor learning across arm configurations. Journal of Neuroscience. 2002;22:9656–9660. doi: 10.1523/JNEUROSCI.22-22-09656.2002.

- 11. Krakauer JW, Mazzoni P, Ghazizadeh A, Ravindran R, Shadmehr R. Generalization of Motor Learning Depends on the History of Prior Action. PLOS Biology. 2006;4:e316. doi: 10.1371/journal.pbio.0040316.

- 12. Cothros N, Wong J, Gribble PL. Visual cues signaling object grasp reduce interference in motor learning. Journal of Neurophysiology. 2009;102:2112–2120. doi: 10.1152/jn.00493.2009.

- 13. Stevens SS. A Scale for the Measurement of the Psychological Magnitude Pitch. The Journal of the Acoustical Society of America. 1937;8:185.

- 14. Robinson DW, Dadson RS. A re-determination of the equal-loudness relations for pure tones. British Journal of Applied Physics. 1956;7:166.

- 15. Burnham KP, Anderson DR. Multimodel Inference: Understanding AIC and BIC in Model Selection. Sociological Methods & Research. 2004;33:261–304.

- 16. Hartigan JA, Hartigan PM. The Dip Test of Unimodality. The Annals of Statistics. 1985;13:70–84.

- 17. Corbetta D, Thurman SL, Wiener R, Guan Y, Williams JL. Mapping the feel of the arm with the sight of the object: on the embodied origins of infant reaching. Frontiers in Psychology. 2014;5:576. doi: 10.3389/fpsyg.2014.00576.

- 18. Bushnell EW. The decline of visually guided reaching during infancy. Infant Behavior and Development. 1985;8:139–155.

- 19. Clifton RK, Muir DW, Ashmead DH, Clarkson MG. Is visually guided reaching in early infancy a myth? Child Development. 1993;64:1099–1110.

- 20. Kuhl PK. Learning and representation in speech and language. Current Opinion in Neurobiology. 1994;4:812–822. doi: 10.1016/0959-4388(94)90128-7.

- 21. Oller DK. The Emergence of the Speech Capacity. Taylor & Francis; 2000.

- 22. Moulin-Frier C, Nguyen SM, Oudeyer PY. Self-organization of early vocal development in infants and machines: the role of intrinsic motivation. Frontiers in Psychology. 2014;4:1006. doi: 10.3389/fpsyg.2013.01006.

- 23. Mosier KM, Scheidt RA, Acosta S, Mussa-Ivaldi FA. Remapping Hand Movements in a Novel Geometrical Environment. Journal of Neurophysiology. 2005;94:4362–4372. doi: 10.1152/jn.00380.2005.

- 24. Liu X, Mosier KM, Mussa-Ivaldi FA, Casadio M, Scheidt RA. Reorganization of Finger Coordination Patterns During Adaptation to Rotation and Scaling of a Newly Learned Sensorimotor Transformation. Journal of Neurophysiology. 2011;105:454–473. doi: 10.1152/jn.00247.2010.

- 25. Bangert M, Altenmüller EO. Mapping perception to action in piano practice: a longitudinal DC-EEG study. BMC Neuroscience. 2003;4:26. doi: 10.1186/1471-2202-4-26.

- 26. Lahav A, Boulanger A, Schlaug G, Saltzman E. The power of listening: auditory-motor interactions in musical training. Annals of the New York Academy of Sciences. 2005;1060:189–194. doi: 10.1196/annals.1360.042.

- 27. Niziolek CA, Guenther FH. Vowel Category Boundaries Enhance Cortical and Behavioral Responses to Speech Feedback Alterations. The Journal of Neuroscience. 2013;33:12090–12098. doi: 10.1523/JNEUROSCI.1008-13.2013.

- 28. Gallivan JP, Bowman NAR, Chapman CS, Wolpert DM, Flanagan JR. The sequential encoding of competing action goals involves dynamic restructuring of motor plans in working memory. Journal of Neurophysiology. 2016;115:3113–3122. doi: 10.1152/jn.00951.2015.

- 29. Gallivan JP, Logan L, Wolpert DM, Flanagan JR. Parallel specification of competing sensorimotor control policies for alternative action options. Nature Neuroscience. 2016;19:320–326. doi: 10.1038/nn.4214.

- 30. Jordan MI, Rumelhart DE. Forward Models: Supervised Learning with a Distal Teacher. Cognitive Science. 1992;16:307–354.

- 31. Jordan MI, Wolpert DM. Computational motor control. MIT Press; 1999.

- 32. Dhawale AK, Smith MA, Ölveczky BP. The Role of Variability in Motor Learning. Annual Review of Neuroscience. 2017;40:479–498. doi: 10.1146/annurev-neuro-072116-031548.

- 33. Pekny SE, Izawa J, Shadmehr R. Reward-dependent modulation of movement variability. Journal of Neuroscience. 2015;35:4015–4024. doi: 10.1523/JNEUROSCI.3244-14.2015.

- 34. Sutton RS, Barto AG. Reinforcement learning: an introduction. Adaptive computation and machine learning. Cambridge, MA: MIT Press; 1998.

- 35. Peters J, Schaal S. Reinforcement learning of motor skills with policy gradients. Neural Networks. 2008;21:682–697. doi: 10.1016/j.neunet.2008.02.003.

- 36. Hoffmann LA, Sober SJ. Vocal Generalization Depends on Gesture Identity and Sequence. Journal of Neuroscience. 2014;34:5564–5574. doi: 10.1523/JNEUROSCI.5169-13.2014.

- 37. Tian LY, Brainard MS. Discrete Circuits Support Generalized versus Context-Specific Vocal Learning in the Songbird. Neuron. 2017;96:1168–1177.e5. doi: 10.1016/j.neuron.2017.10.019.