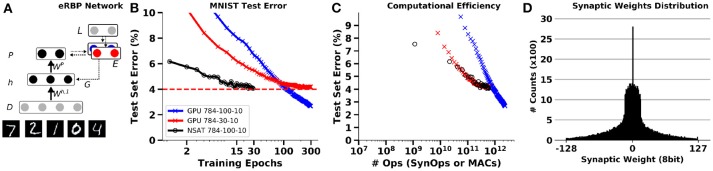

Figure 8.

Training an MNIST network with event-driven Random Back-propagation compared to GPU simulations. (A) Network architecture. (B) MNIST classification error on the test set using a fully connected 784-100-10 network on NSAT (8-bit fixed-point weights, 16-bit state components) and on GPU (TensorFlow, 32-bit floating point). (C) Energy efficiency of learning in the NSAT (lower left is best). For classification errors at or above 4%, the spiking neural network (NSAT-SynOps) needs no more operations than the artificial neural network (GPU-MACs) to reach a given accuracy. (D) Histogram of synaptic weights of the NSAT network after training. One epoch equals a full presentation of the training set.
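
To give a sense of scale for the GPU-MACs axis in panel (C), the sketch below counts the multiply-accumulate operations for one forward pass through a fully connected 784-100-10 network. It is a rough illustration only: the function name is hypothetical, biases and the backward pass are ignored, and the per-epoch figure assumes the standard 60,000-image MNIST training set. The accounting used to produce the figure may differ.

```python
# Layer sizes taken from the caption: fully connected 784-100-10 network.
LAYER_SIZES = (784, 100, 10)

# Assumption: the standard MNIST training set of 60,000 images.
N_TRAIN = 60_000


def dense_macs_per_sample(layer_sizes):
    """Count multiply-accumulates for one forward pass (biases ignored)."""
    # Each dense layer needs one MAC per (input unit, output unit) pair.
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


forward_macs = dense_macs_per_sample(LAYER_SIZES)
macs_per_epoch = forward_macs * N_TRAIN

print(forward_macs)    # 79400
print(macs_per_epoch)  # 4764000000
```

The count is dominated by the first layer (784 x 100 = 78,400 of the 79,400 MACs). A dense network performs all of these on every sample, whereas in an event-driven network a synaptic operation is only performed when a spike occurs.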